VisionGuard: Runtime Detection of Adversarial Inputs to Perception Systems

Feb 23, 2020

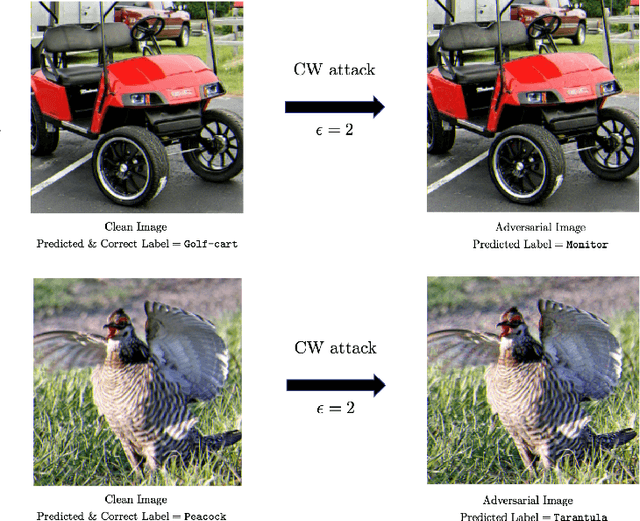

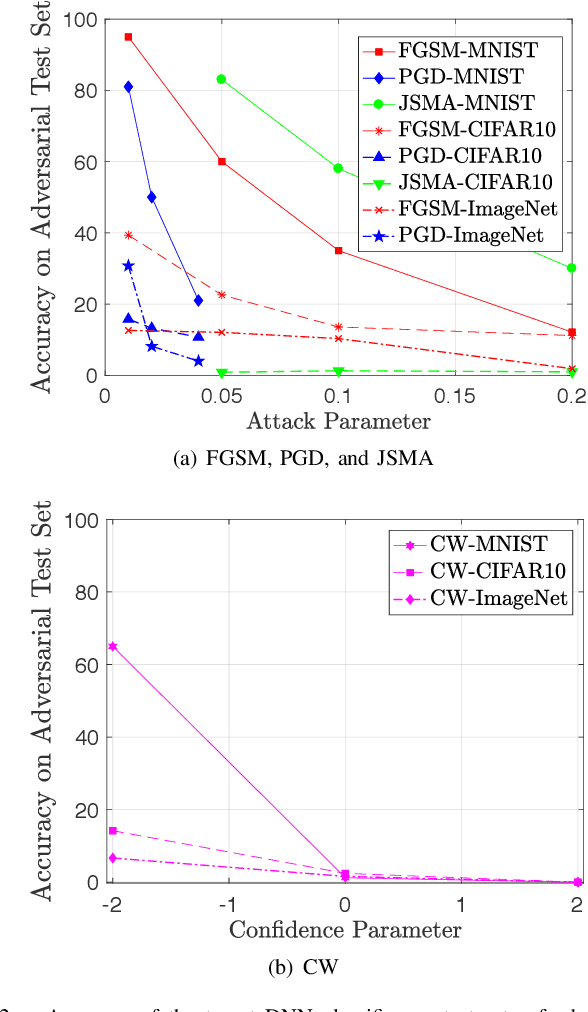

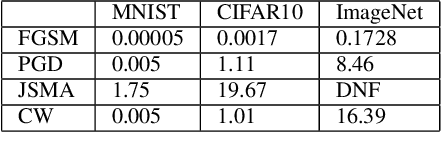

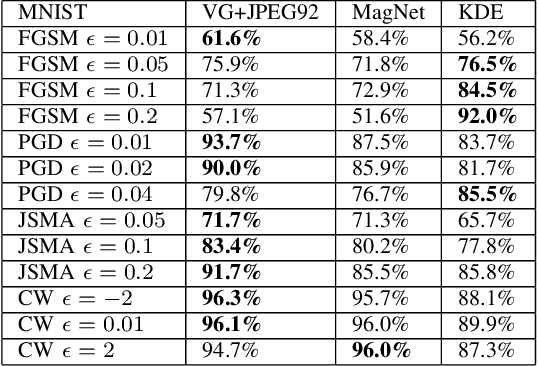

Deep neural network (DNN) models have proven to be vulnerable to adversarial attacks. In this paper, we propose VisionGuard, a novel attack- and dataset-agnostic, computationally light defense mechanism against adversarial inputs to DNN-based perception systems. VisionGuard relies on the observation that adversarial images are sensitive to lossy compression transformations. Specifically, to determine whether an image is adversarial, VisionGuard checks whether the output of the target classifier changes significantly when the classifier is fed a transformed version of the image under investigation. Moreover, we show that VisionGuard is computationally light at both runtime and design time, which makes it suitable for real-time applications that may also involve large-scale image domains. To highlight this, we demonstrate the efficiency of VisionGuard on ImageNet, a task that is computationally challenging for the majority of relevant defenses. Finally, we include extensive comparative experiments on the MNIST, CIFAR10, and ImageNet datasets showing that VisionGuard outperforms existing defenses in terms of scalability and detection performance.
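The detection idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract only says that the classifier output should "change significantly" under a lossy transformation, so the KL divergence used as the change measure, the `threshold` value, the pixel `quantize` function (a toy stand-in for a real lossy codec such as JPEG), and the `toy_classifier` are all assumptions introduced here for illustration.

```python
import math
from typing import Callable, List, Sequence

Image = Sequence[float]  # flattened pixel intensities in [0, 1]
Probs = Sequence[float]  # class probabilities summing to 1


def kl_divergence(p: Probs, q: Probs, eps: float = 1e-12) -> float:
    """KL(p || q); q is clamped at eps to avoid division by zero."""
    return sum(
        pi * math.log(pi / max(qi, eps))
        for pi, qi in zip(p, q)
        if pi > 0.0
    )


def quantize(image: Image, levels: int = 8) -> List[float]:
    """Toy lossy transform (assumption): snap each pixel to one of
    `levels` evenly spaced values. A real system would use a lossy
    codec here instead."""
    step = levels - 1
    return [round(x * step) / step for x in image]


def is_adversarial(
    image: Image,
    classifier: Callable[[Image], Probs],
    transform: Callable[[Image], Image] = quantize,
    threshold: float = 0.1,  # hypothetical value, would be tuned on data
) -> bool:
    """Flag the image if the classifier output shifts by more than
    `threshold` (in KL divergence) after the lossy transform."""
    original_output = classifier(image)
    transformed_output = classifier(transform(image))
    return kl_divergence(original_output, transformed_output) > threshold


def toy_classifier(image: Image) -> List[float]:
    """Hypothetical two-class model with a sharp decision boundary at
    mean intensity 0.5; it only exists to exercise the detector."""
    z = 200.0 * (sum(image) / len(image) - 0.5)
    p1 = 1.0 / (1.0 + math.exp(-z))
    return [1.0 - p1, p1]


if __name__ == "__main__":
    far_from_boundary = [1.0] * 16    # output is stable under quantization
    near_boundary = [0.51] * 16       # output shifts strongly under quantization
    print(is_adversarial(far_from_boundary, toy_classifier))
    print(is_adversarial(near_boundary, toy_classifier))
```

The detector needs only two forward passes of the existing classifier and one cheap image transformation per input, with no additional model to train, which is consistent with the abstract's claim that the approach is light at both runtime and design time.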
