Confidence Composition for Monitors of Verification Assumptions

Paper and Code

Nov 03, 2021

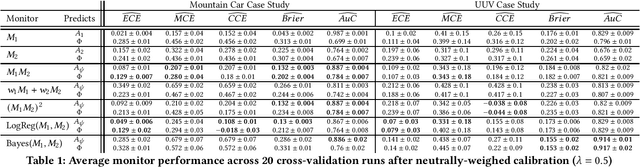

Closed-loop verification of cyber-physical systems with neural network controllers offers strong safety guarantees under certain assumptions. It is, however, difficult to determine whether these guarantees apply at run time because verification assumptions may be violated. To predict safety violations in a verified system, we propose a three-step framework for monitoring the confidence in verification assumptions. First, we represent the sufficient condition for verified safety with a propositional logical formula over assumptions. Second, we build calibrated confidence monitors that evaluate the probability that each assumption holds. Third, we obtain the confidence in the verification guarantees by composing the assumption monitors using a composition function suitable for the logical formula. Our framework provides theoretical bounds on the calibration and conservatism of compositional monitors. In two case studies, we demonstrate that the composed monitors improve over their constituents and successfully predict safety violations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge