ModelGuard: Runtime Validation of Lipschitz-continuous Models

Paper and Code

Apr 30, 2021

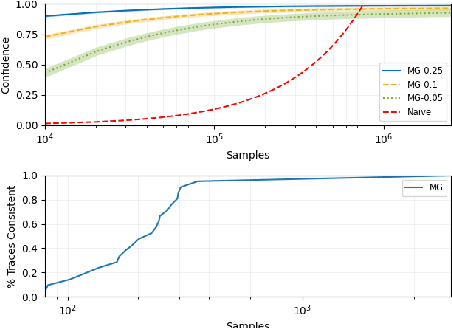

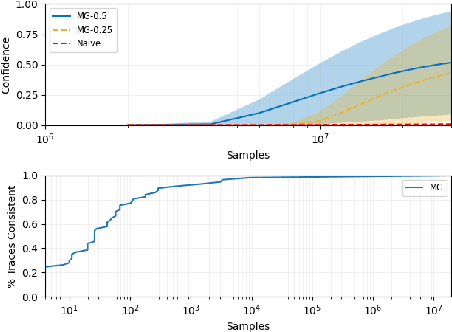

This paper presents ModelGuard, a sampling-based approach to runtime model validation for Lipschitz-continuous models. Although techniques exist for the validation of many classes of models the majority of these methods cannot be applied to the whole of Lipschitz-continuous models, which includes neural network models. Additionally, existing techniques generally consider only white-box models. By taking a sampling-based approach, we can address black-box models, represented only by an input-output relationship and a Lipschitz constant. We show that by randomly sampling from a parameter space and evaluating the model, it is possible to guarantee the correctness of traces labeled consistent and provide a confidence on the correctness of traces labeled inconsistent. We evaluate the applicability and scalability of ModelGuard in three case studies, including a physical platform.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge