Rachid Alami

LAAS-CNRS, Université de Toulouse

Latent Perspective-Taking via a Schrödinger Bridge in Influence-Augmented Local Models

Feb 02, 2026

Abstract:Operating in environments alongside humans requires robots to make decisions under uncertainty. In addition to exogenous dynamics, they must reason over others' hidden mental-models and mental-states. While Interactive POMDPs and Bayesian Theory of Mind formulations are principled, exact nested-belief inference is intractable, and hand-specified models are brittle in open-world settings. We address both by learning structured mental-models and an estimator of others' mental-states. Building on the Influence-Based Abstraction, we instantiate an Influence-Augmented Local Model to decompose socially-aware robot tasks into local dynamics, social influences, and exogenous factors. We propose (a) a neuro-symbolic world model instantiating a factored, discrete Dynamic Bayesian Network, and (b) a perspective-shift operator modeled as an amortized Schrödinger Bridge over the learned local dynamics that transports factored egocentric beliefs into other-centric beliefs. We show that this architecture enables agents to synthesize socially-aware policies in model-based reinforcement learning, via decision-time mental-state planning (a Schrödinger Bridge in belief space), with preliminary results in a MiniGrid social navigation task.

How Human Motion Prediction Quality Shapes Social Robot Navigation Performance in Constrained Spaces

Jan 14, 2026

Abstract:Motivated by the vision of integrating mobile robots closer to humans in warehouses, hospitals, manufacturing plants, and the home, we focus on robot navigation in dynamic and spatially constrained environments. Ensuring human safety, comfort, and efficiency in such settings requires that robots are endowed with a model of how humans move around them. Human motion prediction around robots is especially challenging due to the stochasticity of human behavior, differences in user preferences, and data scarcity. In this work, we perform a methodical investigation of the effects of human motion prediction quality on robot navigation performance, as well as human productivity and impressions. We design a scenario involving robot navigation among two human subjects in a constrained workspace and instantiate it in a user study ($N=80$) involving two different robot platforms, conducted across two sites from different world regions. Key findings include evidence that: 1) the widely adopted average displacement error is not a reliable predictor of robot navigation performance and human impressions; 2) the common assumption of human cooperation breaks down in constrained environments, with users often not reciprocating robot cooperation, and causing performance degradations; 3) more efficient robot navigation often comes at the expense of human efficiency and comfort.
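
For readers unfamiliar with the average displacement error mentioned in the abstract above, the following is a minimal sketch of how the metric is commonly computed: the mean Euclidean distance between predicted and observed positions at matching timesteps. The function name and the toy trajectories are illustrative assumptions and are not taken from the paper.

```python
import math


def average_displacement_error(predicted, actual):
    """Mean Euclidean distance between matching waypoints of two trajectories.

    Both arguments are sequences of (x, y) positions sampled at the same
    timesteps. This is the common textbook definition, not code from the paper.
    """
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(math.dist(p, a) for p, a in zip(predicted, actual))
    return total / len(predicted)


# Hypothetical toy data: the prediction walks along the x-axis,
# while the observed human drifts diagonally away from it.
predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
actual = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]

ade = average_displacement_error(predicted, actual)
print(ade)  # per-step errors are 0, 1 and 2, so the mean is 1.0
```

Because the metric averages pointwise errors, two predictors with the same score can err in very different places along a trajectory, which may be one reason a single number like this does not track downstream navigation performance.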

Perspective-Shifted Neuro-Symbolic World Models: A Framework for Socially-Aware Robot Navigation

Mar 26, 2025

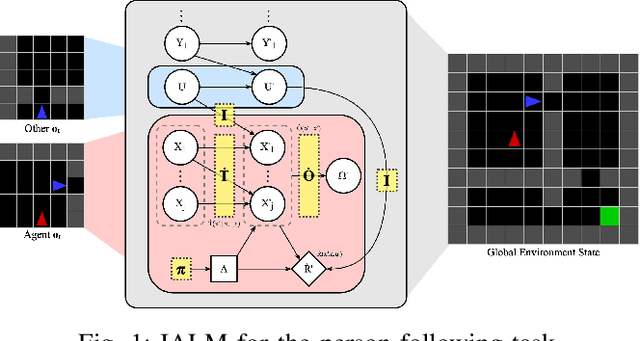

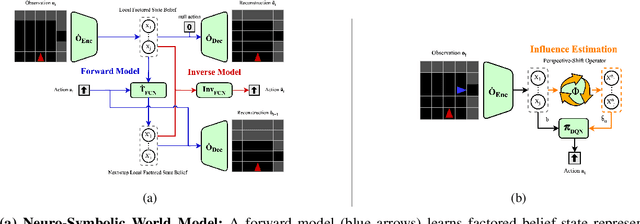

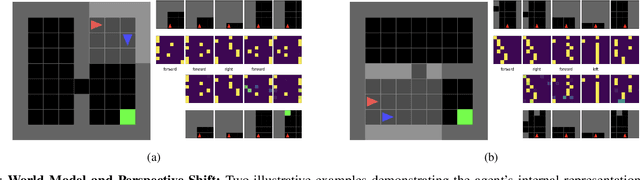

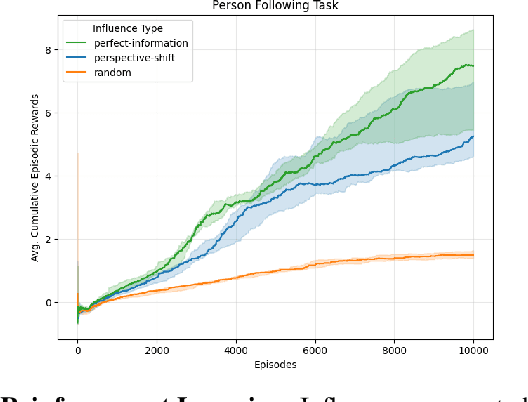

Abstract:Navigating in environments alongside humans requires agents to reason under uncertainty and account for the beliefs and intentions of those around them. Under a sequential decision-making framework, egocentric navigation can naturally be represented as a Markov Decision Process (MDP). However, social navigation additionally requires reasoning about the hidden beliefs of others, inherently leading to a Partially Observable Markov Decision Process (POMDP), where agents lack direct access to others' mental states. Inspired by Theory of Mind and Epistemic Planning, we propose (1) a neuro-symbolic model-based reinforcement learning architecture for social navigation, addressing the challenge of belief tracking in partially observable environments; and (2) a perspective-shift operator for belief estimation, leveraging recent work on Influence-based Abstractions (IBA) in structured multi-agent settings.

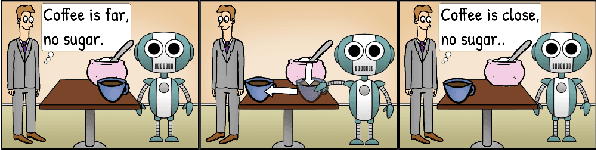

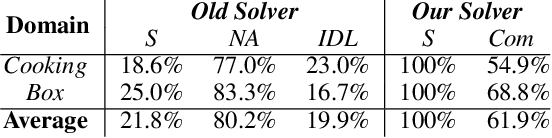

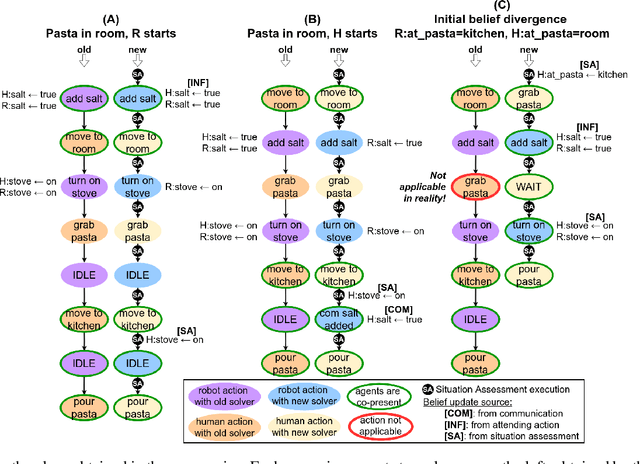

An Epistemic Human-Aware Task Planner which Anticipates Human Beliefs and Decisions

Sep 27, 2024

Abstract:We present a substantial extension of our Human-Aware Task Planning framework, tailored for scenarios with intermittent shared execution experiences and significant belief divergence between humans and robots, particularly due to the uncontrollable nature of humans. Our objective is to build a robot policy that accounts for uncontrollable human behaviors, thus enabling the anticipation of possible advancements achieved by the robot when the execution is not shared, e.g. when humans are briefly absent from the shared environment to complete a subtask. However, this anticipation is considered from the perspective of humans who have access to an estimated model for the robot. To this end, we propose a novel planning framework and build a solver based on AND-OR search, which integrates knowledge reasoning, including situation assessment by perspective taking. Our approach dynamically models and manages the expansion and contraction of potential advances while precisely keeping track of when (and when not) agents share the task execution experience. The planner systematically assesses the situation and ignores worlds that it has reason to think are impossible for humans. Overall, our new solver can estimate the distinct beliefs of the human and the robot along potential courses of action, enabling the synthesis of plans where the robot selects the right moment for communication, i.e. informing, or replying to an inquiry, or defers ontic actions until the execution experiences can be shared. Preliminary experiments in two domains, one novel and one adapted, demonstrate the effectiveness of the framework.

A Survey on Socially Aware Robot Navigation: Taxonomy and Future Challenges

Nov 18, 2023

Abstract:Socially aware robot navigation is gaining popularity with the increase in delivery and assistive robots. The research is further fueled by a need for socially aware navigation skills in autonomous vehicles to move safely and appropriately in spaces shared with humans. Although most of these are ground robots, drones are also entering the field. In this paper, we present a literature survey of the works on socially aware robot navigation in the past 10 years. We propose four different faceted taxonomies to navigate the literature and examine the field from four different perspectives. Through the taxonomic review, we discuss the current research directions and the extending scope of applications in various domains. Further, we put forward a list of current research opportunities and present a discussion on possible future challenges that are likely to emerge in the field.

Principles and Guidelines for Evaluating Social Robot Navigation Algorithms

Jun 29, 2023

Abstract:A major challenge to deploying robots widely is navigation in human-populated environments, commonly referred to as social robot navigation. While the field of social navigation has advanced tremendously in recent years, the fair evaluation of algorithms that tackle social navigation remains hard because it involves not just robotic agents moving in static environments but also dynamic human agents and their perceptions of the appropriateness of robot behavior. In contrast, clear, repeatable, and accessible benchmarks have accelerated progress in fields like computer vision, natural language processing and traditional robot navigation by enabling researchers to fairly compare algorithms, revealing limitations of existing solutions and illuminating promising new directions. We believe the same approach can benefit social navigation. In this paper, we pave the road towards common, widely accessible, and repeatable benchmarking criteria to evaluate social robot navigation. Our contributions include (a) a definition of a socially navigating robot as one that respects the principles of safety, comfort, legibility, politeness, social competency, agent understanding, proactivity, and responsiveness to context, (b) guidelines for the use of metrics, development of scenarios, benchmarks, datasets, and simulators to evaluate social navigation, and (c) a design of a social navigation metrics framework to make it easier to compare results from different simulators, robots and datasets.

Robust Robot Planning for Human-Robot Collaboration

Feb 27, 2023

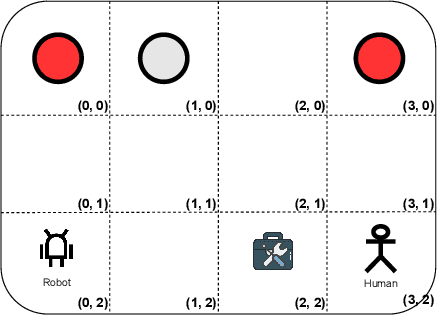

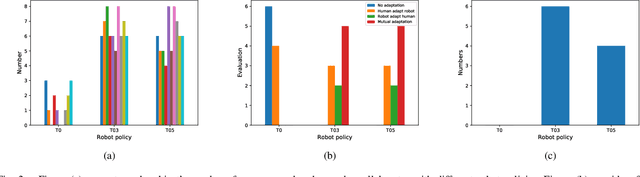

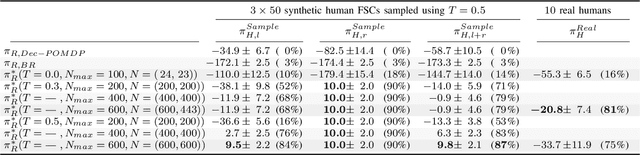

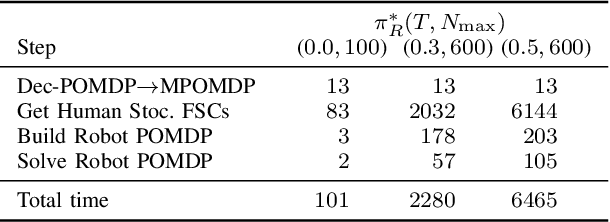

Abstract:In human-robot collaboration, the objectives of the human are often unknown to the robot. Moreover, even assuming a known objective, the human behavior is also uncertain. In order to plan a robust robot behavior, a key preliminary question is then: How to derive realistic human behaviors given a known objective? A major issue is that such a human behavior should itself account for the robot behavior, otherwise collaboration cannot happen. In this paper, we rely on Markov decision models, representing the uncertainty over the human objective as a probability distribution over a finite set of objective functions (inducing a distribution over human behaviors). Based on this, we propose two contributions: 1) an approach to automatically generate an uncertain human behavior (a policy) for each given objective function while accounting for possible robot behaviors; and 2) a robot planning algorithm that is robust to the above-mentioned uncertainties and relies on solving a partially observable Markov decision process (POMDP) obtained by reasoning on a distribution over human behaviors. A co-working scenario allows conducting experiments and presenting qualitative and quantitative results to evaluate our approach.
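
The abstract above represents uncertainty over the human objective as a probability distribution over a finite set of objective functions. As a rough illustration only, the sketch below shows a standard Bayesian update of such a distribution after one observed human action. It is not the paper's planning algorithm; the objective names, states, actions, and policy probabilities are all hypothetical.

```python
def update_objective_belief(belief, policies, state, action):
    """Bayes update of a belief over a finite set of human objectives.

    belief:   dict mapping objective -> prior probability
    policies: dict mapping objective -> {(state, action): probability},
              i.e. one stochastic human policy per objective (assumed given)
    Returns the posterior after seeing the human take `action` in `state`.
    """
    unnormalized = {
        obj: prior * policies[obj].get((state, action), 0.0)
        for obj, prior in belief.items()
    }
    norm = sum(unnormalized.values())
    if norm == 0.0:
        # The observation is impossible under every model: keep the prior.
        return dict(belief)
    return {obj: p / norm for obj, p in unnormalized.items()}


# Hypothetical co-working example with two candidate objectives.
belief = {"fetch_part": 0.5, "assemble": 0.5}
policies = {
    "fetch_part": {("at_bench", "walk_to_shelf"): 0.8, ("at_bench", "pick_tool"): 0.2},
    "assemble": {("at_bench", "walk_to_shelf"): 0.2, ("at_bench", "pick_tool"): 0.8},
}

posterior = update_objective_belief(belief, policies, "at_bench", "walk_to_shelf")
print(posterior)
```

In a POMDP formulation such as the one the abstract describes, a belief of this kind would be part of what the robot plans over; here it is updated in isolation only to make the idea of a distribution over objectives concrete.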

Watch out! There may be a Human. Addressing Invisible Humans in Social Navigation

Nov 22, 2022

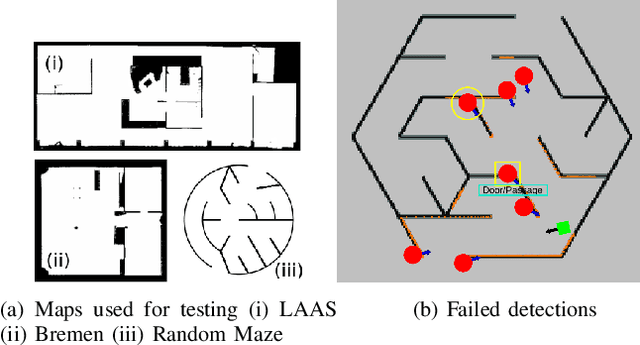

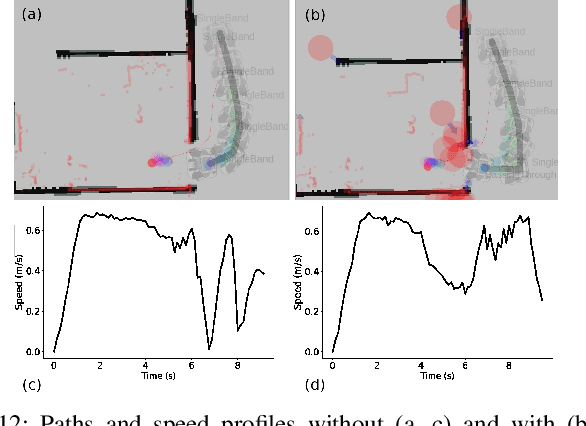

Abstract:Current approaches in human-aware or social robot navigation address the humans that are visible to the robot. However, it is also important to address the possible emergence of humans, to avoid shocking or surprising them and to avoid erratic behavior of the robot planner. In this paper, we propose a novel approach to detect and address these emerging humans, which we call 'invisible humans'. We determine the places from which a human, currently not visible to the robot, can appear suddenly and then adapt the path and speed of the robot in anticipation of potential collisions. This is done while still considering and adapting to humans present in the robot's field of view. We also show how this detection can be exploited to identify and address doorways or narrow passages. Finally, the effectiveness of the proposed methodology is shown through several simulated and real-world experiments.

Robust Planning for Human-Robot Joint Tasks with Explicit Reasoning on Human Mental State

Oct 17, 2022

Abstract:We consider the human-aware task planning problem where a human-robot team is given a shared task with a known objective to achieve. Recent approaches tackle it by modeling it as a team of independent, rational agents, where the robot plans for both agents' (shared) tasks. However, the robot knows that humans cannot be administered like artificial agents, so it emulates and predicts the human's decisions, actions, and reactions. Based on earlier approaches, we describe a novel approach to solve such problems, which models and uses execution-time observability conventions. Abstractly, this modeling is based on situation assessment, which helps our approach capture the evolution of individual agents' beliefs and anticipate belief divergences that arise in practice. It decides if and when belief alignment is needed and achieves it with communication. These changes improve the solver's performance: (a) communication is used effectively, and (b) the solver is robust on more realistic and challenging problems.

Logic-Based Ethical Planning

Jun 02, 2022

Abstract:In this paper we propose a framework for ethical decision making in the context of planning, with intended application to robotics. We put forward a compact but highly expressive language for ethical planning that combines linear temporal logic with lexicographic preference modelling. This original combination allows us to assess plans both with respect to an agent's values and their desires, introducing the novel concept of the morality level of an agent and moving towards multigoal, multivalue planning. We initiate the study of computational complexity of planning tasks in our setting, and we discuss potential applications to robotics.
