Anthony Favier

LAAS-RIS

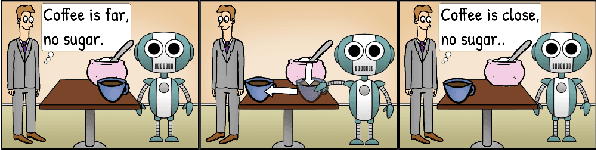

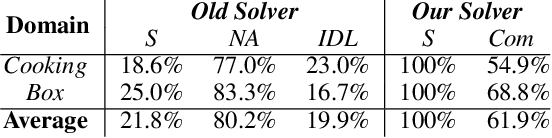

An Epistemic Human-Aware Task Planner which Anticipates Human Beliefs and Decisions

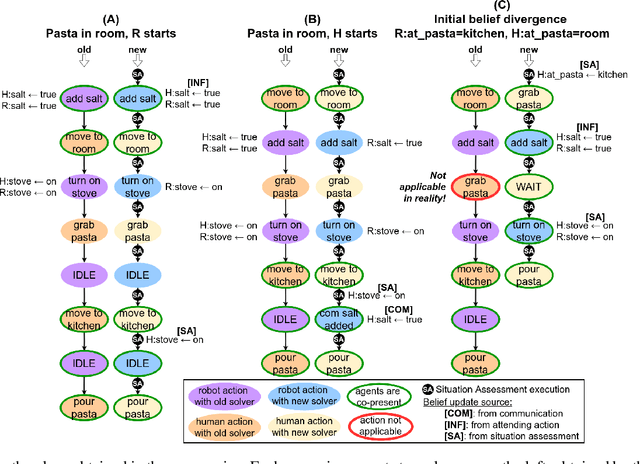

Sep 27, 2024

Abstract: We present a substantial extension of our Human-Aware Task Planning framework, tailored for scenarios with intermittent shared execution experiences and significant belief divergence between humans and robots, particularly due to the uncontrollable nature of humans. Our objective is to build a robot policy that accounts for uncontrollable human behaviors, thus enabling the anticipation of possible advancements achieved by the robot when the execution is not shared, e.g. when humans are briefly absent from the shared environment to complete a subtask. But, this anticipation is considered from the perspective of humans who have access to an estimated model for the robot. To this end, we propose a novel planning framework and build a solver based on AND-OR search, which integrates knowledge reasoning, including situation assessment by perspective taking. Our approach dynamically models and manages the expansion and contraction of potential advances while precisely keeping track of when (and when not) agents share the task execution experience. The planner systematically assesses the situation and ignores worlds that it has reason to think are impossible for humans. Overall, our new solver can estimate the distinct beliefs of the human and the robot along potential courses of action, enabling the synthesis of plans where the robot selects the right moment for communication, i.e. informing, or replying to an inquiry, or defers ontic actions until the execution experiences can be shared. Preliminary experiments in two domains, one novel and one adapted, demonstrate the effectiveness of the framework.

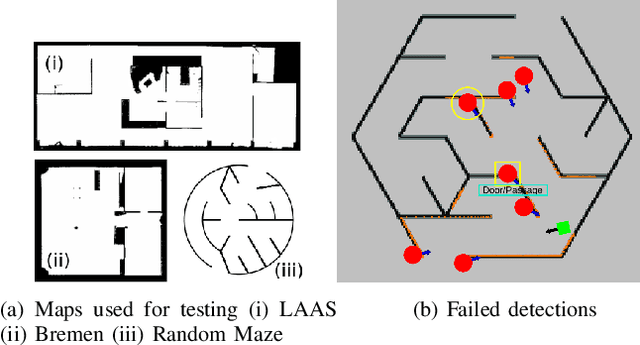

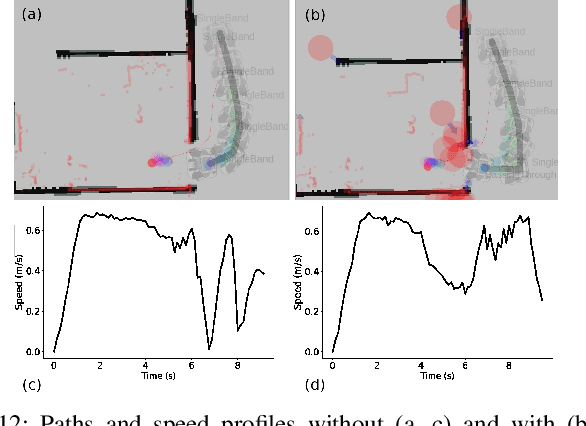

Watch out! There may be a Human. Addressing Invisible Humans in Social Navigation

Nov 22, 2022

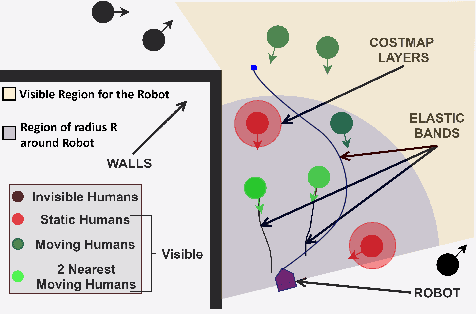

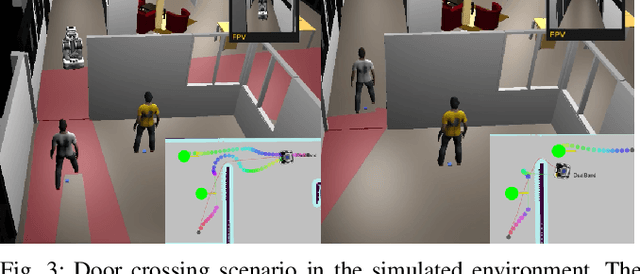

Abstract: Current approaches in human-aware or social robot navigation address the humans that are visible to the robot. However, it is also important to address the possible emergences of humans to avoid shocks or surprises to humans and erratic behavior of the robot planner. In this paper, we propose a novel approach to detect and address these human emergences called 'invisible humans'. We determine the places from which a human, currently not visible to the robot, can appear suddenly and then adapt the path and speed of the robot with the anticipation of potential collisions. This is done while still considering and adapting to humans present in the robot's field of view. We also show how this detection can be exploited to identify and address the doorways or narrow passages. Finally, the effectiveness of the proposed methodology is shown through several simulated and real-world experiments.

Robust Planning for Human-Robot Joint Tasks with Explicit Reasoning on Human Mental State

Oct 17, 2022

Abstract: We consider the human-aware task planning problem where a human-robot team is given a shared task with a known objective to achieve. Recent approaches tackle it by modeling it as a team of independent, rational agents, where the robot plans for both agents' (shared) tasks. However, the robot knows that humans cannot be administered like artificial agents, so it emulates and predicts the human's decisions, actions, and reactions. Based on earlier approaches, we describe a novel approach to solve such problems, which models and uses execution-time observability conventions. Abstractly, this modeling is based on situation assessment, which helps our approach capture the evolution of individual agents' beliefs and anticipate belief divergences that arise in practice. It decides if and when belief alignment is needed and achieves it with communication. These changes improve the solver's performance: (a) communication is effectively used, and (b) the solver is robust on more realistic and challenging problems.

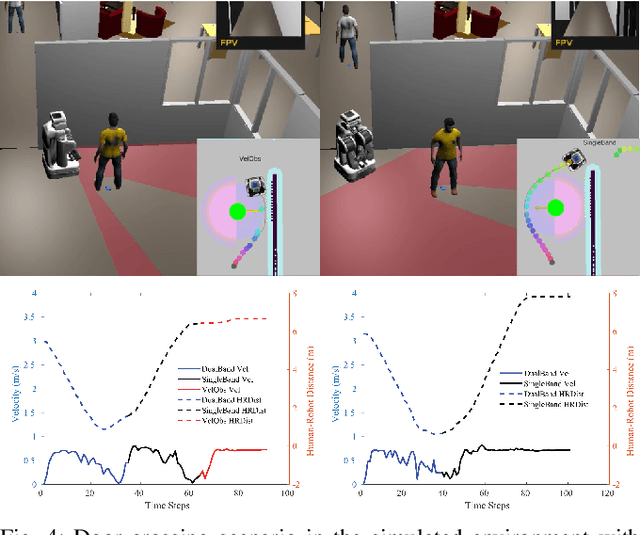

Human-Aware Navigation Planner for Diverse Human-Robot Contexts

Jun 18, 2021

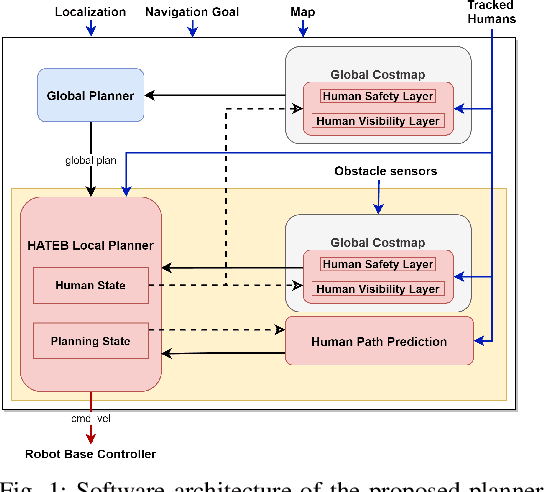

Abstract: As more robots are being deployed into human environments, a human-aware navigation planner needs to handle multiple contexts that occur in indoor and outdoor environments. In this paper, we propose a tunable human-aware robot navigation planner that can handle a variety of human-robot contexts. We present the architecture of the planner and discuss the features and some implementation details. Then we present a detailed analysis of various simulated human-robot contexts using the proposed planner along with some quantitative results. Finally, we show the results in a real-world scenario after deploying our system on a real robot.
