Emiliano Lorini

IRIT-CNRS, Toulouse University, France

A Computationally Grounded Framework for Cognitive Attitudes (extended version)

Dec 18, 2024

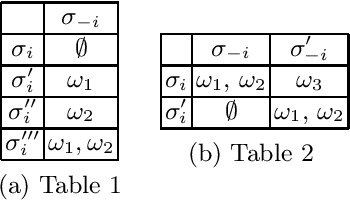

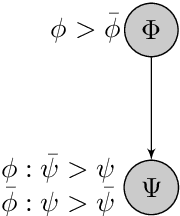

Abstract: We introduce a novel language for reasoning about agents' cognitive attitudes of both epistemic and motivational type. We interpret it by means of a computationally grounded semantics using belief bases. Our language includes five types of modal operators for implicit belief, complete attraction, complete repulsion, realistic attraction and realistic repulsion. We give an axiomatization and show that our operators are not mutually expressible and that they can be combined to represent a large variety of psychological concepts including ambivalence, indifference, being motivated, being demotivated and preference. We present a dynamic extension of the language that supports reasoning about the effects of belief change operations. Finally, we provide a succinct formulation of model checking for our languages and a PSPACE model checking algorithm relying on a reduction to TQBF. We also report experimental results on the computation time of the implemented algorithm in a concrete example.
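To make the belief-base idea concrete, here is a minimal single-agent sketch, assuming a purely propositional setting: an agent implicitly believes a formula when it holds in every valuation compatible with the agent's explicit belief base. The encoding (formulas as Python predicates, the helpers `valuations` and `implicitly_believes`) is hypothetical and leaves out the multi-agent states, the attraction/repulsion operators and the TQBF reduction described above.

```python
from itertools import product
from typing import Callable, Dict, Iterator, List

# Hypothetical encoding: a valuation maps atom names to truth values,
# and a formula is any predicate over valuations.
Valuation = Dict[str, bool]
Formula = Callable[[Valuation], bool]


def valuations(atoms: List[str]) -> Iterator[Valuation]:
    """Enumerate every truth assignment over the given atoms."""
    for bits in product([False, True], repeat=len(atoms)):
        yield dict(zip(atoms, bits))


def implicitly_believes(base: List[Formula], phi: Formula, atoms: List[str]) -> bool:
    """phi is implicitly believed iff it holds in every valuation that
    satisfies all explicit beliefs in the base (single-agent simplification)."""
    return all(phi(v) for v in valuations(atoms) if all(b(v) for b in base))


# Explicit base {p, p -> q}: q is implicitly believed, r is not.
base = [lambda v: v["p"], lambda v: (not v["p"]) or v["q"]]
print(implicitly_believes(base, lambda v: v["q"], ["p", "q", "r"]))  # True
print(implicitly_believes(base, lambda v: v["r"], ["p", "q", "r"]))  # False
```

Enumerating valuations is exponential in the number of atoms; it only serves to show that implicit beliefs are the consequences of the explicit base rather than a separately stored set.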

Responsibility in a Multi-Value Strategic Setting

Oct 22, 2024

Abstract: Responsibility is a key notion in multi-agent systems and in creating safe, reliable and ethical AI. However, most previous work on responsibility has only considered responsibility for single outcomes. In this paper we present a model for responsibility attribution in a multi-agent, multi-value setting. We also extend our model to cover responsibility anticipation, demonstrating how considerations of responsibility can help an agent to select strategies that are in line with its values. In particular, we show that non-dominated regret-minimising strategies reliably minimise an agent's expected degree of responsibility.
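As a rough illustration of the strategy class named in the last sentence, the sketch below selects the non-dominated strategies with the smallest maximal regret from a single numeric payoff table. It assumes one aggregated score per (strategy, choice of the other agents) pair; the paper's multi-value model and its degrees of responsibility are not reproduced, and all names are hypothetical.

```python
from typing import Dict, List

# Hypothetical payoff table: payoff[strategy][w] scores how well the agent's
# values are served by that strategy when the other agents make choice w.
Payoffs = Dict[str, List[float]]


def dominated(s: str, payoff: Payoffs) -> bool:
    """True if another strategy is never worse than s and strictly better once."""
    mine = payoff[s]
    for t, other in payoff.items():
        if t == s:
            continue
        pairs = list(zip(other, mine))
        if all(o >= m for o, m in pairs) and any(o > m for o, m in pairs):
            return True
    return False


def max_regret(s: str, payoff: Payoffs) -> float:
    """Largest gap, over the others' choices, between s and the best strategy."""
    n = len(payoff[s])
    best = [max(p[w] for p in payoff.values()) for w in range(n)]
    return max(best[w] - payoff[s][w] for w in range(n))


def nondominated_minimax_regret(payoff: Payoffs) -> List[str]:
    """Non-dominated strategies whose maximal regret is smallest."""
    candidates = [s for s in payoff if not dominated(s, payoff)]
    lowest = min(max_regret(s, payoff) for s in candidates)
    return [s for s in candidates if max_regret(s, payoff) == lowest]


table = {"a": [3, 0], "b": [2, 2], "c": [1, 1]}
print(nondominated_minimax_regret(table))  # ['b']
```

Here `c` is dominated by `b`, and `b` has maximal regret 1 against 2 for `a`, so `b` is selected.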

Computational Grounding of Responsibility Attribution and Anticipation in LTLf

Oct 18, 2024

Abstract: Responsibility is one of the key notions in machine ethics and in the area of autonomous systems. It is a multi-faceted notion involving counterfactual reasoning about actions and strategies. In this paper, we study different variants of responsibility in a strategic setting based on LTLf. We show a connection with notions in reactive synthesis, including synthesis of winning, dominant, and best-effort strategies. This connection provides the building blocks for a computational grounding of responsibility including complexity characterizations and sound, complete, and optimal algorithms for attributing and anticipating responsibility.
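LTLf is linear temporal logic interpreted over finite traces. A minimal evaluator shows the finite-trace reading of the operators, in particular that strong next fails at the last position. The nested-tuple formula encoding and the function name `holds` are assumptions for this sketch, and traces are assumed to be non-empty lists of sets of true atoms.

```python
from typing import List, Set

Trace = List[Set[str]]  # position i holds the set of atoms true at step i


def holds(f: tuple, trace: Trace, i: int = 0) -> bool:
    """Evaluate an LTLf formula at position i of a non-empty finite trace."""
    op = f[0]
    if op == "atom":
        return f[1] in trace[i]
    if op == "not":
        return not holds(f[1], trace, i)
    if op == "and":
        return holds(f[1], trace, i) and holds(f[2], trace, i)
    if op == "or":
        return holds(f[1], trace, i) or holds(f[2], trace, i)
    if op == "next":  # strong next: a successor position must exist
        return i + 1 < len(trace) and holds(f[1], trace, i + 1)
    if op == "until":  # f[2] at some j >= i, f[1] at every k in [i, j)
        return any(
            holds(f[2], trace, j) and all(holds(f[1], trace, k) for k in range(i, j))
            for j in range(i, len(trace))
        )
    if op == "eventually":
        return any(holds(f[1], trace, j) for j in range(i, len(trace)))
    if op == "always":
        return all(holds(f[1], trace, j) for j in range(i, len(trace)))
    raise ValueError(f"unknown operator {op!r}")


trace = [{"req"}, set(), {"grant"}]
print(holds(("eventually", ("atom", "grant")), trace))  # True
print(holds(("always", ("atom", "req")), trace))        # False
```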

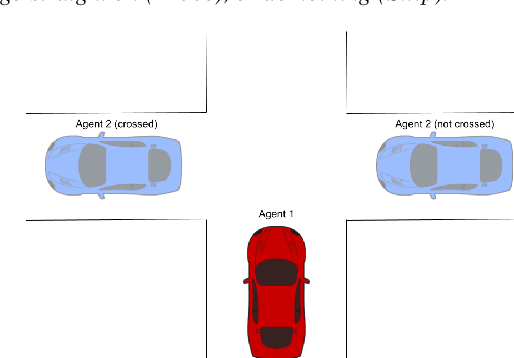

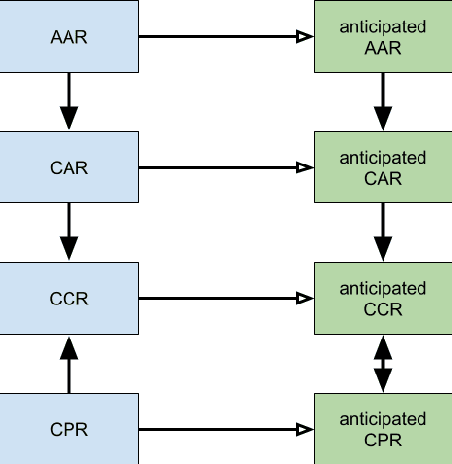

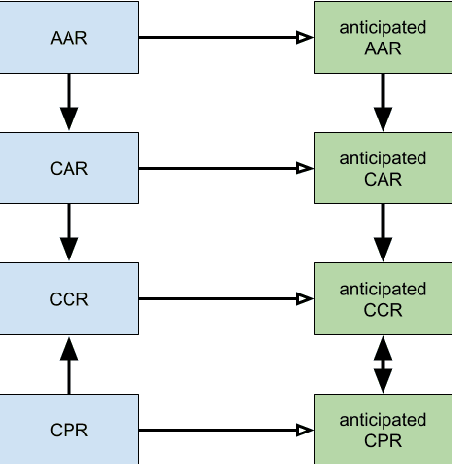

Anticipating Responsibility in Multiagent Planning

Jul 31, 2023

Abstract: Responsibility anticipation is the process of determining if the actions of an individual agent may cause it to be responsible for a particular outcome. This can be used in a multi-agent planning setting to allow agents to anticipate responsibility in the plans they consider. The planning setting in this paper includes partial information regarding the initial state and considers formulas in linear temporal logic as positive or negative outcomes to be attained or avoided. We first define attribution for notions of active, passive and contributive responsibility, and consider their agentive variants. We then use these to define the notion of responsibility anticipation. We prove that our notions of anticipated responsibility can be used to coordinate agents in a planning setting and give complexity results for our model, discussing equivalence with classical planning. We also outline how some of our attribution and anticipation problems can be solved using PDDL solvers.

Base-based Model Checking for Multi-Agent Only Believing

Jul 27, 2023

Abstract: We present a novel semantics for the language of multi-agent only believing exploiting belief bases, and show how to use it for automatically checking formulas of this language and of its dynamic extension with private belief expansion operators. We provide a PSPACE algorithm for model checking relying on a reduction to QBF and an alternative dedicated algorithm relying on the exploration of the state space. We present an implementation of the QBF-based algorithm and report experimental results on its computation time in a concrete example.
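The PSPACE bound rests on the fact that the truth of a quantified Boolean formula can be decided with polynomial memory. A naive recursive evaluator illustrates why; it is not the paper's encoding or solver, and the prefix/matrix representation and the name `eval_qbf` are assumptions made for the sketch.

```python
from typing import Callable, Dict, List, Optional, Tuple

Prefix = List[Tuple[str, str]]  # ("forall" | "exists", variable name)
Matrix = Callable[[Dict[str, bool]], bool]


def eval_qbf(prefix: Prefix, matrix: Matrix,
             assignment: Optional[Dict[str, bool]] = None) -> bool:
    """Evaluate a closed prenex QBF by recursion on the quantifier prefix.

    Recursion depth equals the number of quantifiers and each level keeps one
    partial assignment, so memory stays polynomial in the formula size even
    though the running time can be exponential."""
    assignment = assignment or {}
    if not prefix:
        return matrix(assignment)
    (q, x), rest = prefix[0], prefix[1:]
    if q not in ("forall", "exists"):
        raise ValueError(f"unknown quantifier {q!r}")
    branches = (eval_qbf(rest, matrix, {**assignment, x: b}) for b in (False, True))
    return all(branches) if q == "forall" else any(branches)


differ = lambda a: a["x"] != a["y"]
print(eval_qbf([("forall", "x"), ("exists", "y")], differ))  # True
print(eval_qbf([("exists", "y"), ("forall", "x")], differ))  # False
```

Swapping the quantifier order turns a true formula (for every x there is a y with x ≠ y) into a false one (some y differs from every x).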

Modelling and Explaining Legal Case-based Reasoners through Classifiers

Oct 20, 2022

Abstract: This paper brings together two lines of research: factor-based models of case-based reasoning (CBR) and the logical specification of classifiers. Logical approaches to classifiers capture the connection between features and outcomes in classifier systems. Factor-based reasoning is a popular approach to reasoning by precedent in AI & Law. Horty (2011) has developed the factor-based models of precedent into a theory of precedential constraint. In this paper we combine the modal logic approach to classifiers and their explanations given by Liu & Lorini (2021), binary-input classifier logic (BCL), with Horty's account of factor-based CBR, since both a classifier and CBR map sets of features to decisions or classifications. We reformulate Horty's case bases in the language of BCL and give several representation results. Furthermore, we show how notions of CBR, e.g. reasons and preference between reasons, can be analyzed in terms of notions of classifier systems.
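For readers unfamiliar with factor-based precedent, the sketch below implements the simple a fortiori constraint often called the result model: a precedent decided for side s forces s on a new case that is at least as strong for s and no stronger for the other side. It does not use BCL, and the side labels and helper names are hypothetical.

```python
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

Side = str  # hypothetical labels: "pi" (plaintiff) or "delta" (defendant)
Case = Tuple[FrozenSet[str], Side]  # (facts of the precedent, outcome)


def opposite(s: Side) -> Side:
    return "delta" if s == "pi" else "pi"


def favouring(facts, side: Side, polarity: Dict[str, Side]) -> Set[str]:
    """Factors among `facts` that favour `side`."""
    return {f for f in facts if polarity[f] == side}


def forced_outcome(cases: List[Case], facts, polarity: Dict[str, Side]) -> Optional[Side]:
    """Return the outcome some precedent forces a fortiori on `facts`, if any.

    If the case base is inconsistent, the first forcing precedent wins."""
    for prec_facts, s in cases:
        o = opposite(s)
        at_least_as_strong = favouring(prec_facts, s, polarity) <= favouring(facts, s, polarity)
        no_stronger_for_o = favouring(facts, o, polarity) <= favouring(prec_facts, o, polarity)
        if at_least_as_strong and no_stronger_for_o:
            return s
    return None


polarity = {"p1": "pi", "p2": "pi", "d1": "delta", "d2": "delta"}
cases = [(frozenset({"p1", "d1"}), "pi")]
print(forced_outcome(cases, {"p1", "p2", "d1"}, polarity))  # pi
print(forced_outcome(cases, {"p1", "d1", "d2"}, polarity))  # None
```

In the second query the new case adds a defendant factor absent from the precedent, so the precedent no longer constrains the decision.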

Logic-Based Ethical Planning

Jun 02, 2022

Abstract: In this paper we propose a framework for ethical decision making in the context of planning, with intended application to robotics. We put forward a compact but highly expressive language for ethical planning that combines linear temporal logic with lexicographic preference modelling. This original combination allows us to assess plans both with respect to an agent's values and their desires, introducing the novel concept of the morality level of an agent and moving towards multigoal, multivalue planning. We initiate the study of computational complexity of planning tasks in our setting, and we discuss potential applications to robotics.
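The combination of temporal goals with lexicographic preferences can be pictured as ranking plans by a vector of satisfied goals, most important first. The sketch assumes each value or desire is a Boolean test on a plan's finite trace and that values simply outrank desires; it does not model the paper's language or its morality levels, and all names are illustrative.

```python
from typing import Callable, Dict, List, Sequence, Set, Tuple

Trace = Sequence[Set[str]]
Goal = Callable[[Trace], bool]  # a value or desire evaluated on a finite trace


def score(trace: Trace, ranked: List[Goal]) -> Tuple[int, ...]:
    """0/1 flag per goal, highest priority first."""
    return tuple(int(g(trace)) for g in ranked)


def best_plans(plans: Dict[str, Trace], ranked: List[Goal]) -> List[str]:
    """Plans with maximal score; Python compares tuples lexicographically."""
    scores = {name: score(tr, ranked) for name, tr in plans.items()}
    top = max(scores.values())
    return sorted(name for name, s in scores.items() if s == top)


def never_harm(tr: Trace) -> bool:  # a (hypothetical) moral value
    return all("harm" not in s for s in tr)


def deliver(tr: Trace) -> bool:  # a (hypothetical) desire
    return any("delivered" in s for s in tr)


ranked = [never_harm, deliver]
plans = {
    "fast": [{"move"}, {"harm"}, {"delivered"}],
    "safe": [{"move"}, {"delivered"}],
    "idle": [set()],
}
print(best_plans(plans, ranked))  # ['safe']
```

If only `fast` and `idle` were available, `idle` would win: satisfying the higher-ranked value outweighs any number of lower-ranked desires.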

A logic for binary classifiers and their explanation

May 30, 2021

Abstract: Recent years have witnessed a renewed interest in Boolean functions for explaining binary classifiers in the field of explainable AI (XAI). The standard approach to Boolean functions is propositional logic. We present a modal language of a ceteris paribus nature which supports reasoning about binary classifiers and their properties. We study families of decision models for binary classifiers, axiomatize them and show completeness of our axiomatics. Moreover, we prove that the satisfiability problem for the variant of our modal language with finitely many propositional atoms, interpreted over these models, is NP-complete. We leverage the language to formalize counterfactual conditionals as well as a variety of notions of explanation, such as abductive, contrastive and counterfactual explanations, and biases. Finally, we present two extensions of our language: a dynamic extension by the notion of assignment enabling classifier change, and an epistemic extension in which the classifier's uncertainty about the actual input can be represented.
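An abductive explanation of a classifier's decision is commonly read as a subset-minimal set of the instance's feature values that suffices to fix the output whatever the remaining features are. The brute-force sketch below assumes Boolean features and a classifier given as an arbitrary Python predicate; it is exponential in the number of features and is not the paper's modal formalization. Function names are hypothetical.

```python
from itertools import combinations, product
from typing import Callable, Dict, List, Sequence, Tuple

Instance = Dict[str, bool]
Classifier = Callable[[Instance], bool]


def is_sufficient(f: Classifier, x: Instance, subset: Sequence[str],
                  features: List[str]) -> bool:
    """Fixing x's values on `subset` guarantees f's output on x."""
    free = [a for a in features if a not in subset]
    target = f(x)
    for bits in product([False, True], repeat=len(free)):
        y = dict(x)
        y.update(zip(free, bits))
        if f(y) != target:
            return False
    return True


def abductive_explanations(f: Classifier, x: Instance) -> List[Tuple[str, ...]]:
    """All subset-minimal sufficient feature sets, smallest first."""
    features = sorted(x)
    found: List[Tuple[str, ...]] = []
    for k in range(len(features) + 1):
        for subset in combinations(features, k):
            # Supersets of an explanation already found are not minimal.
            if any(set(s) <= set(subset) for s in found):
                continue
            if is_sufficient(f, x, subset, features):
                found.append(subset)
    return found


f = lambda v: v["a"] and (v["b"] or v["c"])
x = {"a": True, "b": True, "c": True}
print(abductive_explanations(f, x))  # [('a', 'b'), ('a', 'c')]
```

Because subsets are visited by increasing size and supersets of earlier hits are skipped, every returned set is sufficient and has no sufficient proper subset.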

A Qualitative Theory of Cognitive Attitudes and their Change

Feb 16, 2021

Abstract: We present a general logical framework for reasoning about agents' cognitive attitudes of both epistemic type and motivational type. We show that it allows us to express a variety of relevant concepts for qualitative decision theory including the concepts of knowledge, belief, strong belief, conditional belief, desire, conditional desire, strong desire and preference. We also present two extensions of the logic, one by the notion of choice and the other by dynamic operators for belief change and desire change, and we apply the former to the analysis of single-stage games under incomplete information. We provide sound and complete axiomatizations for the basic logic and for its two extensions. The paper is under consideration in Theory and Practice of Logic Programming (TPLP).
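Belief, conditional belief and strong belief are often given a qualitative semantics through a plausibility ranking of worlds. The sketch below uses standard definitions from the belief-revision literature (lower rank means more plausible); it is an assumption for illustration and need not coincide with the paper's own semantics. The world names and helper names are hypothetical.

```python
from typing import Callable, Dict, Hashable

World = Hashable
Prop = Callable[[World], bool]


def believes_given(rank: Dict[World, int], phi: Prop, psi: Prop) -> bool:
    """phi holds in all most plausible psi-worlds (vacuously true if none)."""
    psi_worlds = [w for w in rank if psi(w)]
    if not psi_worlds:
        return True
    best = min(rank[w] for w in psi_worlds)
    return all(phi(w) for w in psi_worlds if rank[w] == best)


def believes(rank: Dict[World, int], phi: Prop) -> bool:
    """Plain belief is belief conditional on a tautology."""
    return believes_given(rank, phi, lambda w: True)


def strongly_believes(rank: Dict[World, int], phi: Prop) -> bool:
    """Some phi-world exists and every phi-world beats every non-phi-world."""
    yes = [rank[w] for w in rank if phi(w)]
    no = [rank[w] for w in rank if not phi(w)]
    return bool(yes) and all(a < b for a in yes for b in no)


rank = {"rain": 0, "cloudy": 1, "sun": 2}
print(believes(rank, lambda w: w == "rain"))                                 # True
print(believes_given(rank, lambda w: w == "cloudy", lambda w: w != "rain"))  # True
print(strongly_believes(rank, lambda w: w == "cloudy"))                      # False
```

Strong belief is more robust than belief: "not sun" is strongly believed because both remaining worlds are ranked above "sun", whereas "cloudy" is not even believed.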

Modeling Contrary-to-Duty with CP-nets

Mar 23, 2020

Abstract: In a ceteris-paribus semantics for deontic logic, a state of affairs where a larger set of prescriptions is respected is preferable to a state of affairs where some of them are violated. Conditional preference nets (CP-nets) are a compact formalism to express and analyse ceteris paribus preferences, with nice computational properties. This paper shows how deontic concepts can be captured through conditional preference models. A restricted deontic logic will be defined and mapped into conditional preference nets. We shall also show how to model contrary-to-duty obligations in CP-nets and how to capture in this formalism the distinction between strong and weak permission.
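The ceteris paribus reading behind CP-nets can be shown with the standard improving-flip relation: two outcomes that differ in exactly one variable are compared using that variable's conditional preference table, given the values of its parents. The sketch encodes a Chisholm-style contrary-to-duty example (one ought to help; if one helps, one ought to tell; if one does not help, one ought not to tell). The class, method and variable names are hypothetical, and this is not the paper's mapping from deontic logic.

```python
from typing import Dict, List, Tuple

Outcome = Dict[str, bool]


class CPNet:
    """Binary CP-net: each variable has parents and a conditional preference table."""

    def __init__(self) -> None:
        self.parents: Dict[str, List[str]] = {}
        self.cpt: Dict[str, Dict[Tuple[bool, ...], bool]] = {}

    def add(self, var: str, parents: List[str], cpt: Dict[Tuple[bool, ...], bool]) -> None:
        self.parents[var] = parents
        self.cpt[var] = cpt

    def preferred_value(self, var: str, outcome: Outcome) -> bool:
        key = tuple(outcome[p] for p in self.parents[var])
        return self.cpt[var][key]

    def improving_flip(self, worse: Outcome, better: Outcome) -> bool:
        """`better` differs from `worse` in one variable, set to its preferred value
        given the (unchanged) parents: all else being equal, it is an improvement."""
        diff = [v for v in self.parents if worse[v] != better[v]]
        if len(diff) != 1:
            return False
        (v,) = diff
        return better[v] == self.preferred_value(v, worse)


net = CPNet()
net.add("help", [], {(): True})                                   # ought to help
net.add("tell", ["help"], {(True,): True, (False,): False})       # tell iff helping
# Having failed to help, not telling is the better (contrary-to-duty) option.
print(net.improving_flip({"help": False, "tell": True}, {"help": False, "tell": False}))  # True
```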
