Francesca Rossi

University of Padova

Sheaf Neural Networks and biomedical applications

Jan 29, 2026Abstract:The purpose of this paper is to elucidate the theory and mathematical modelling behind the sheaf neural network (SNN) algorithm and then show how SNN can effectively answer to biomedical questions in a concrete case study and outperform the most popular graph neural networks (GNNs) as graph convolutional networks (GCNs), graph attention networks (GAT) and GraphSage.

Language Models Coupled with Metacognition Can Outperform Reasoning Models

Aug 25, 2025Abstract:Large language models (LLMs) excel in speed and adaptability across various reasoning tasks, but they often struggle when strict logic or constraint enforcement is required. In contrast, Large Reasoning Models (LRMs) are specifically designed for complex, step-by-step reasoning, although they come with significant computational costs and slower inference times. To address these trade-offs, we employ and generalize the SOFAI (Slow and Fast AI) cognitive architecture into SOFAI-LM, which coordinates a fast LLM with a slower but more powerful LRM through metacognition. The metacognitive module actively monitors the LLM's performance and provides targeted, iterative feedback with relevant examples. This enables the LLM to progressively refine its solutions without requiring the need for additional model fine-tuning. Extensive experiments on graph coloring and code debugging problems demonstrate that our feedback-driven approach significantly enhances the problem-solving capabilities of the LLM. In many instances, it achieves performance levels that match or even exceed those of standalone LRMs while requiring considerably less time. Additionally, when the LLM and feedback mechanism alone are insufficient, we engage the LRM by providing appropriate information collected during the LLM's feedback loop, tailored to the specific characteristics of the problem domain and leads to improved overall performance. Evaluations on two contrasting domains: graph coloring, requiring globally consistent solutions, and code debugging, demanding localized fixes, demonstrate that SOFAI-LM enables LLMs to match or outperform standalone LRMs in accuracy while maintaining significantly lower inference time.

Neo-FREE: Policy Composition Through Thousand Brains And Free Energy Optimization

Dec 10, 2024

Abstract:We consider the problem of optimally composing a set of primitives to tackle control tasks. To address this problem, we introduce Neo-FREE: a control architecture inspired by the Thousand Brains Theory and Free Energy Principle from cognitive sciences. In accordance with the neocortical (Neo) processes postulated by the Thousand Brains Theory, Neo-FREE consists of functional units returning control primitives. These are linearly combined by a gating mechanism that minimizes the variational free energy (FREE). The problem of finding the optimal primitives' weights is then recast as a finite-horizon optimal control problem, which is convex even when the cost is not and the environment is nonlinear, stochastic, non-stationary. The results yield an algorithm for primitives composition and the effectiveness of Neo-FREE is illustrated via in-silico and hardware experiments on an application involving robot navigation in an environment with obstacles.

A Neurosymbolic Fast and Slow Architecture for Graph Coloring

Dec 02, 2024

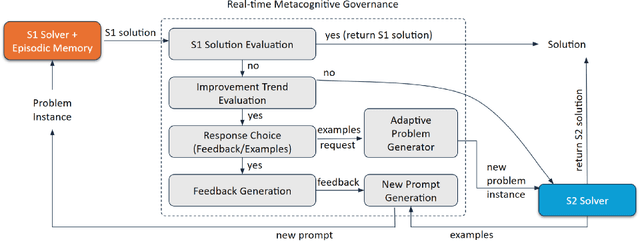

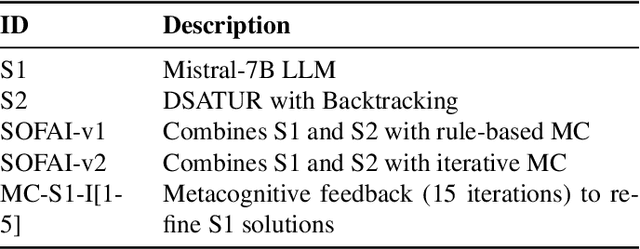

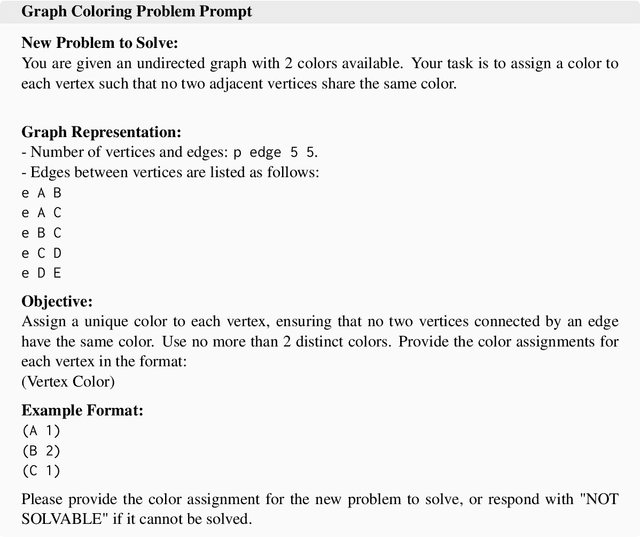

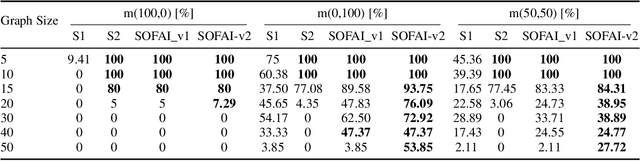

Abstract:Constraint Satisfaction Problems (CSPs) present significant challenges to artificial intelligence due to their intricate constraints and the necessity for precise solutions. Existing symbolic solvers are often slow, and prior research has shown that Large Language Models (LLMs) alone struggle with CSPs because of their complexity. To bridge this gap, we build upon the existing SOFAI architecture (or SOFAI-v1), which adapts Daniel Kahneman's ''Thinking, Fast and Slow'' cognitive model to AI. Our enhanced architecture, SOFAI-v2, integrates refined metacognitive governance mechanisms to improve adaptability across complex domains, specifically tailored for solving CSPs like graph coloring. SOFAI-v2 combines a fast System 1 (S1) based on LLMs with a deliberative System 2 (S2) governed by a metacognition module. S1's initial solutions, often limited by non-adherence to constraints, are enhanced through metacognitive governance, which provides targeted feedback and examples to adapt S1 to CSP requirements. If S1 fails to solve the problem, metacognition strategically invokes S2, ensuring accurate and reliable solutions. With empirical results, we show that SOFAI-v2 for graph coloring problems achieves a 16.98% increased success rate and is 32.42% faster than symbolic solvers.

On the Prospects of Incorporating Large Language Models (LLMs) in Automated Planning and Scheduling (APS)

Jan 04, 2024

Abstract:Automated Planning and Scheduling is among the growing areas in Artificial Intelligence (AI) where mention of LLMs has gained popularity. Based on a comprehensive review of 126 papers, this paper investigates eight categories based on the unique applications of LLMs in addressing various aspects of planning problems: language translation, plan generation, model construction, multi-agent planning, interactive planning, heuristics optimization, tool integration, and brain-inspired planning. For each category, we articulate the issues considered and existing gaps. A critical insight resulting from our review is that the true potential of LLMs unfolds when they are integrated with traditional symbolic planners, pointing towards a promising neuro-symbolic approach. This approach effectively combines the generative aspects of LLMs with the precision of classical planning methods. By synthesizing insights from existing literature, we underline the potential of this integration to address complex planning challenges. Our goal is to encourage the ICAPS community to recognize the complementary strengths of LLMs and symbolic planners, advocating for a direction in automated planning that leverages these synergistic capabilities to develop more advanced and intelligent planning systems.

Value-based Fast and Slow AI Nudging

Jul 14, 2023

Abstract:Nudging is a behavioral strategy aimed at influencing people's thoughts and actions. Nudging techniques can be found in many situations in our daily lives, and these nudging techniques can targeted at human fast and unconscious thinking, e.g., by using images to generate fear or the more careful and effortful slow thinking, e.g., by releasing information that makes us reflect on our choices. In this paper, we propose and discuss a value-based AI-human collaborative framework where AI systems nudge humans by proposing decision recommendations. Three different nudging modalities, based on when recommendations are presented to the human, are intended to stimulate human fast thinking, slow thinking, or meta-cognition. Values that are relevant to a specific decision scenario are used to decide when and how to use each of these nudging modalities. Examples of values are decision quality, speed, human upskilling and learning, human agency, and privacy. Several values can be present at the same time, and their priorities can vary over time. The framework treats values as parameters to be instantiated in a specific decision environment.

Understanding the Capabilities of Large Language Models for Automated Planning

May 25, 2023

Abstract:Automated planning is concerned with developing efficient algorithms to generate plans or sequences of actions to achieve a specific goal in a given environment. Emerging Large Language Models (LLMs) can answer questions, write high-quality programming code, and predict protein folding, showcasing their versatility in solving various tasks beyond language-based problems. In this paper, we aim to explore how LLMs can also be used for automated planning. To do so, we seek to answer four key questions. Firstly, we want to understand the extent to which LLMs can be used for plan generation. Secondly, we aim to identify which pre-training data is most effective in facilitating plan generation. Thirdly, we investigate whether fine-tuning or prompting is a more effective approach for plan generation. Finally, we explore whether LLMs are capable of plan generalization. By answering these questions, the study seeks to shed light on the capabilities of LLMs in solving complex planning problems and provide insights into the most effective approaches for using LLMs in this context.

Fast and Slow Planning

Mar 07, 2023Abstract:The concept of Artificial Intelligence has gained a lot of attention over the last decade. In particular, AI-based tools have been employed in several scenarios and are, by now, pervading our everyday life. Nonetheless, most of these systems lack many capabilities that we would naturally consider to be included in a notion of "intelligence". In this work, we present an architecture that, inspired by the cognitive theory known as Thinking Fast and Slow by D. Kahneman, is tasked with solving planning problems in different settings, specifically: classical and multi-agent epistemic. The system proposed is an instance of a more general AI paradigm, referred to as SOFAI (for Slow and Fast AI). SOFAI exploits multiple solving approaches, with different capabilities that characterize them as either fast or slow, and a metacognitive module to regulate them. This combination of components, which roughly reflects the human reasoning process according to D. Kahneman, allowed us to enhance the reasoning process that, in this case, is concerned with planning in two different settings. The behavior of this system is then compared to state-of-the-art solvers, showing that the newly introduced system presents better results in terms of generality, solving a wider set of problems with an acceptable trade-off between solving times and solution accuracy.

Plansformer: Generating Symbolic Plans using Transformers

Dec 16, 2022Abstract:Large Language Models (LLMs) have been the subject of active research, significantly advancing the field of Natural Language Processing (NLP). From BERT to BLOOM, LLMs have surpassed state-of-the-art results in various natural language tasks such as question answering, summarization, and text generation. Many ongoing efforts focus on understanding LLMs' capabilities, including their knowledge of the world, syntax, and semantics. However, extending the textual prowess of LLMs to symbolic reasoning has been slow and predominantly focused on tackling problems related to the mathematical field. In this paper, we explore the use of LLMs for automated planning - a branch of AI concerned with the realization of action sequences (plans) to achieve a goal, typically executed by intelligent agents, autonomous robots, and unmanned vehicles. We introduce Plansformer; an LLM fine-tuned on planning problems and capable of generating plans with favorable behavior in terms of correctness and length with reduced knowledge-engineering efforts. We also demonstrate the adaptability of Plansformer in solving different planning domains with varying complexities, owing to the transfer learning abilities of LLMs. For one configuration of Plansformer, we achieve ~97% valid plans, out of which ~95% are optimal for Towers of Hanoi - a puzzle-solving domain.

Learning Behavioral Soft Constraints from Demonstrations

Feb 21, 2022

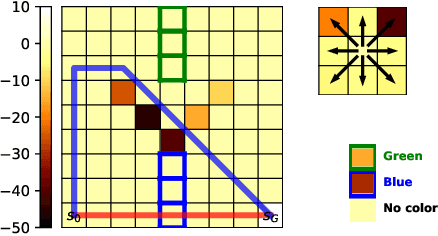

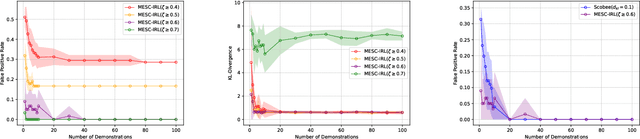

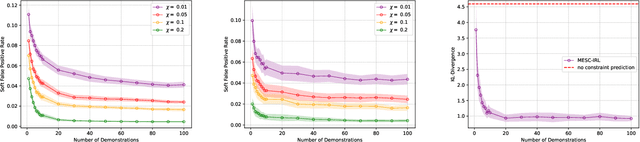

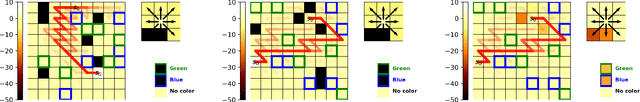

Abstract:Many real-life scenarios require humans to make difficult trade-offs: do we always follow all the traffic rules or do we violate the speed limit in an emergency? These scenarios force us to evaluate the trade-off between collective rules and norms with our own personal objectives and desires. To create effective AI-human teams, we must equip AI agents with a model of how humans make these trade-offs in complex environments when there are implicit and explicit rules and constraints. Agent equipped with these models will be able to mirror human behavior and/or to draw human attention to situations where decision making could be improved. To this end, we propose a novel inverse reinforcement learning (IRL) method: Max Entropy Inverse Soft Constraint IRL (MESC-IRL), for learning implicit hard and soft constraints over states, actions, and state features from demonstrations in deterministic and non-deterministic environments modeled as Markov Decision Processes (MDPs). Our method enables agents implicitly learn human constraints and desires without the need for explicit modeling by the agent designer and to transfer these constraints between environments. Our novel method generalizes prior work which only considered deterministic hard constraints and achieves state of the art performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge