Vishal Pallagani

FABLE: A Novel Data-Flow Analysis Benchmark on Procedural Text for Large Language Model Evaluation

May 30, 2025

Abstract: Understanding how data moves, transforms, and persists, known as data flow, is fundamental to reasoning in procedural tasks. Although large language models (LLMs) are fluent in natural and programming languages and are increasingly applied to decisions involving procedural tasks, they have not been systematically evaluated for their ability to perform data-flow reasoning. We introduce FABLE, an extensible benchmark designed to assess LLMs' understanding of data flow using structured, procedural text. FABLE adapts eight classical data-flow analyses from software engineering: reaching definitions, very busy expressions, available expressions, live variable analysis, interval analysis, type-state analysis, taint analysis, and concurrency analysis. These analyses are instantiated across three real-world domains: cooking recipes, travel routes, and automated plans. The benchmark includes 2,400 question-answer pairs, with 100 examples for each domain-analysis combination. We evaluate three types of LLMs: a reasoning-focused model (DeepSeek-R1 8B), a general-purpose model (LLaMA 3.1 8B), and a code-specific model (Granite Code 8B). Each model is tested using majority voting over five sampled completions per prompt. Results show that the reasoning model achieves higher accuracy, but its inference is over 20 times slower than that of the other models. In contrast, the general-purpose and code-specific models perform close to random chance. FABLE provides the first diagnostic benchmark to systematically evaluate data-flow reasoning and offers insights for developing models with stronger procedural understanding.
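To make one of the listed analyses concrete, here is a minimal sketch of reaching definitions applied to a recipe-like procedure. It is not taken from FABLE: the `(defined_item, items_used)` step encoding, the function name `reaching_definitions`, and the toy recipe are all assumptions made for illustration, and the sketch handles only straight-line steps with no branching.

```python
from typing import List, Set, Tuple

# Hypothetical encoding: each step is (item it defines, items it uses).
Step = Tuple[str, List[str]]
Definition = Tuple[str, int]  # (item, index of the step that defined it)


def reaching_definitions(steps: List[Step]) -> List[Set[Definition]]:
    """Return, for each step, the definitions that reach its entry.

    Assumes straight-line steps, so a new definition of an item
    simply replaces (kills) every earlier definition of that item.
    """
    reaching: Set[Definition] = set()
    result: List[Set[Definition]] = []
    for index, (defined, _used) in enumerate(steps):
        result.append(set(reaching))  # snapshot at step entry
        # Kill older definitions of the same item, then add the new one.
        reaching = {(item, at) for (item, at) in reaching if item != defined}
        reaching.add((defined, index))
    return result


recipe: List[Step] = [
    ("batter", ["flour", "eggs"]),    # step 0: mix flour and eggs
    ("oven", []),                     # step 1: preheat the oven
    ("batter", ["batter", "sugar"]),  # step 2: fold in sugar (redefines batter)
    ("cake", ["batter", "oven"]),     # step 3: bake
]

rd = reaching_definitions(recipe)
for index, defs in enumerate(rd):
    print(index, sorted(defs))
```

A benchmark-style question over this toy recipe would be "which version of the batter is baked in step 3?"; in the sketch, only the step-2 definition of `batter` reaches step 3.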

A Neurosymbolic Fast and Slow Architecture for Graph Coloring

Dec 02, 2024

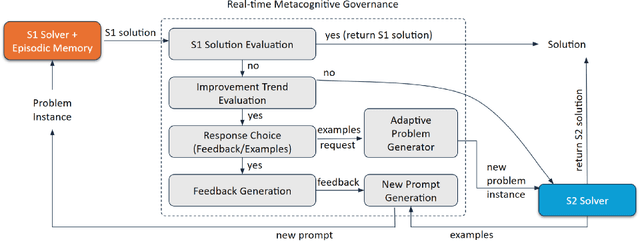

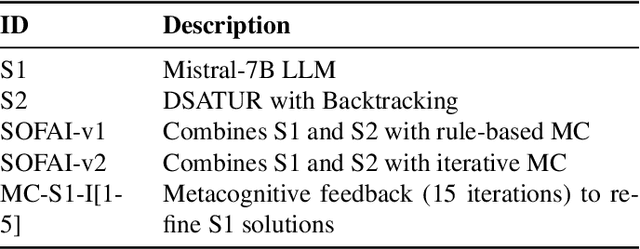

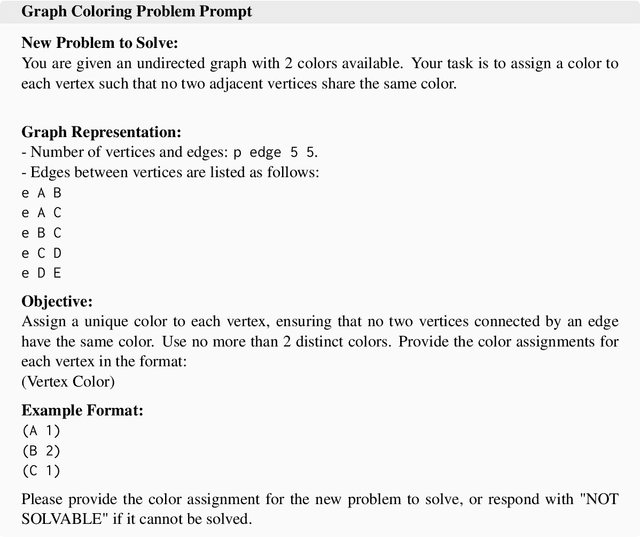

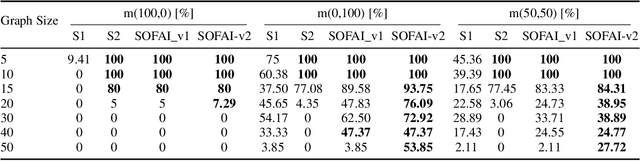

Abstract: Constraint Satisfaction Problems (CSPs) present significant challenges to artificial intelligence due to their intricate constraints and the necessity for precise solutions. Existing symbolic solvers are often slow, and prior research has shown that Large Language Models (LLMs) alone struggle with CSPs because of their complexity. To bridge this gap, we build upon the existing SOFAI architecture (or SOFAI-v1), which adapts Daniel Kahneman's "Thinking, Fast and Slow" cognitive model to AI. Our enhanced architecture, SOFAI-v2, integrates refined metacognitive governance mechanisms to improve adaptability across complex domains, specifically tailored for solving CSPs like graph coloring. SOFAI-v2 combines a fast System 1 (S1) based on LLMs with a deliberative System 2 (S2) governed by a metacognition module. S1's initial solutions, often limited by non-adherence to constraints, are enhanced through metacognitive governance, which provides targeted feedback and examples to adapt S1 to CSP requirements. If S1 fails to solve the problem, metacognition strategically invokes S2, ensuring accurate and reliable solutions. Empirically, we show that SOFAI-v2 achieves a 16.98% higher success rate on graph coloring problems and is 32.42% faster than symbolic solvers.
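The control flow described above (a fast proposer, a checker that feeds back constraint violations, and a fallback to a deliberate solver) can be sketched as follows. This is not the SOFAI-v2 implementation: the fast system here is a random proposer standing in for the LLM, the slow system is plain backtracking, and every name (`s1_propose`, `s2_solve`, `violations`, `solve`, `max_s1_attempts`) is an assumption made for illustration.

```python
import random
from typing import Dict, List, Optional, Tuple

Graph = Dict[int, List[int]]  # symmetric adjacency lists
Coloring = Dict[int, int]
Edge = Tuple[int, int]


def violations(graph: Graph, coloring: Coloring) -> List[Edge]:
    """Metacognitive check: list edges whose endpoints share a color."""
    return [(u, v) for u in graph for v in graph[u]
            if u < v and coloring[u] == coloring[v]]


def s1_propose(graph: Graph, k: int, rng: random.Random,
               previous: Optional[Coloring], conflicts: List[Edge]) -> Coloring:
    """Fast system (random stand-in for an LLM); recolors only flagged nodes."""
    if previous is None:
        return {u: rng.randrange(k) for u in graph}
    revised = dict(previous)
    for u in sorted({n for edge in conflicts for n in edge}):
        revised[u] = rng.randrange(k)
    return revised


def s2_solve(graph: Graph, k: int) -> Optional[Coloring]:
    """Slow system: exhaustive backtracking search."""
    nodes = sorted(graph)
    coloring: Coloring = {}

    def backtrack(i: int) -> bool:
        if i == len(nodes):
            return True
        u = nodes[i]
        for color in range(k):
            if all(coloring.get(v) != color for v in graph[u]):
                coloring[u] = color
                if backtrack(i + 1):
                    return True
                del coloring[u]
        return False

    return dict(coloring) if backtrack(0) else None


def solve(graph: Graph, k: int, max_s1_attempts: int = 3,
          seed: int = 0) -> Tuple[Optional[Coloring], str]:
    """Try the fast system with feedback, then fall back to the slow one."""
    rng = random.Random(seed)
    coloring: Optional[Coloring] = None
    conflicts: List[Edge] = []
    for _ in range(max_s1_attempts):
        coloring = s1_propose(graph, k, rng, coloring, conflicts)
        conflicts = violations(graph, coloring)
        if not conflicts:
            return coloring, "S1"
    return s2_solve(graph, k), "S2"


triangle: Graph = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
result, system = solve(triangle, k=3)
print(system, result)
```

Capping the number of fast attempts is one simple way to bound the cost of retries before the slow solver takes over; the abstract does not say how SOFAI-v2 makes that decision.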

Neurosymbolic AI for Enhancing Instructability in Generative AI

Jul 26, 2024

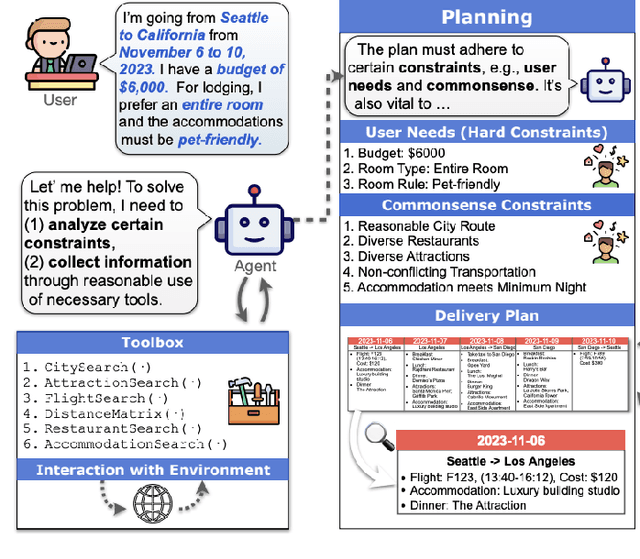

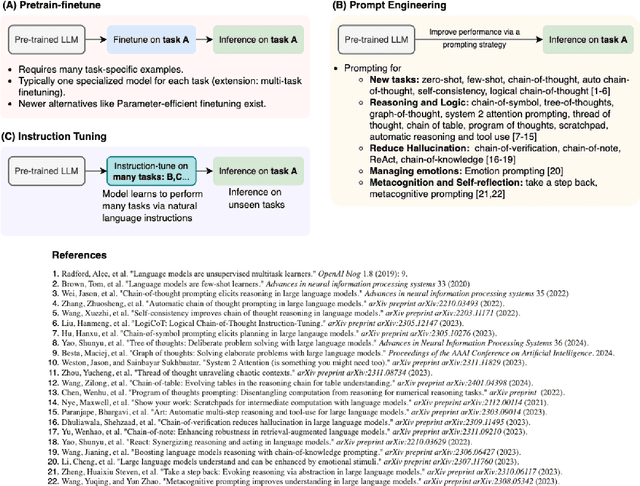

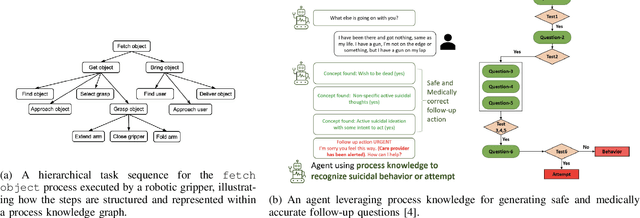

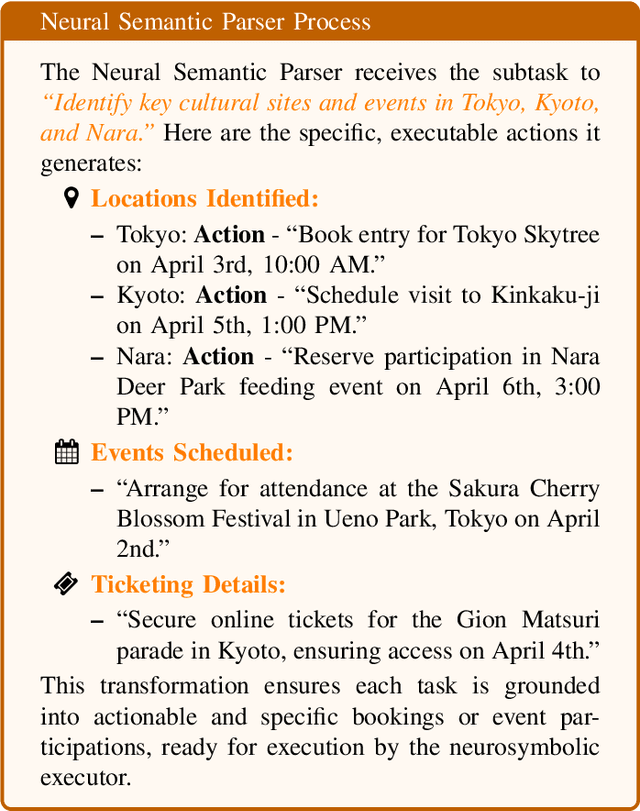

Abstract: Generative AI, especially via Large Language Models (LLMs), has transformed content creation across text, images, and music, showcasing capabilities in following instructions through prompting, largely facilitated by instruction tuning. Instruction tuning is a supervised fine-tuning method where LLMs are trained on datasets formatted with specific tasks and corresponding instructions. This method systematically enhances the model's ability to comprehend and execute the provided directives. Despite these advancements, LLMs still face challenges in consistently interpreting complex, multi-step instructions and generalizing them to novel tasks, which are essential for broader applicability in real-world scenarios. This article explores why neurosymbolic AI offers a better path to enhance the instructability of LLMs. We explore the use of a symbolic task planner to decompose high-level instructions into structured tasks, a neural semantic parser to ground these tasks into executable actions, and a neuro-symbolic executor to implement these actions while dynamically maintaining an explicit representation of state. We also seek to show that a neurosymbolic approach enhances the reliability and context-awareness of task execution, enabling LLMs to dynamically interpret and respond to a wider range of instructional contexts with greater precision and flexibility.

PLANTS: A Novel Problem and Dataset for Summarization of Planning-Like (PL) Tasks

Jul 18, 2024

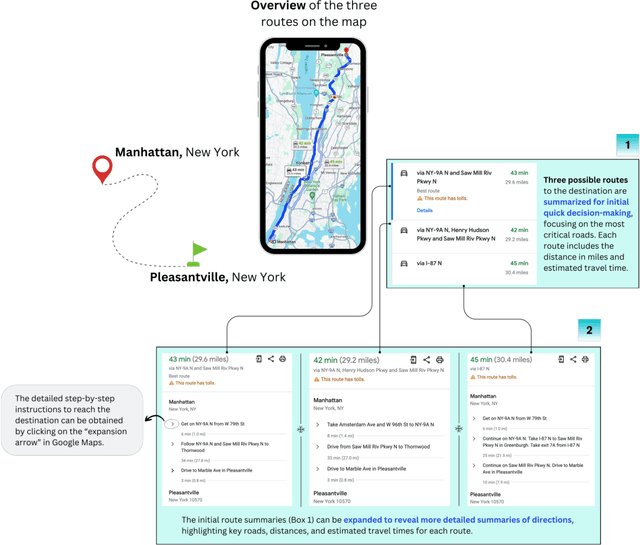

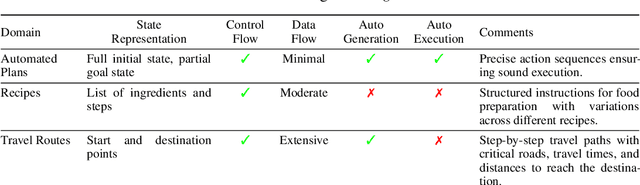

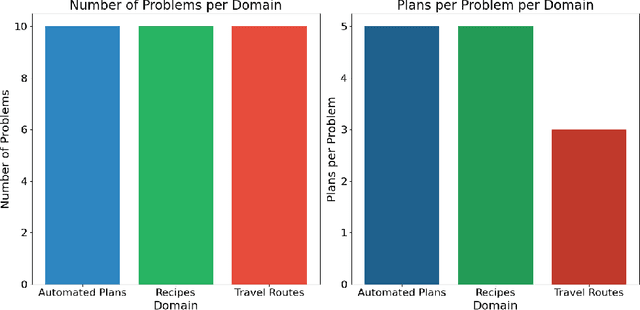

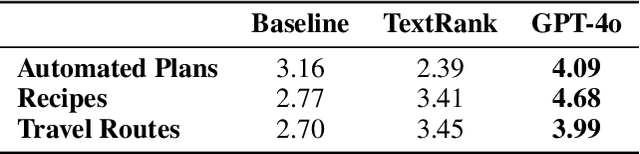

Abstract: Text summarization is a well-studied problem that deals with deriving insights from unstructured text consumed by humans, and it has found extensive business applications. However, many real-life tasks involve generating a series of actions to achieve specific goals, such as workflows, recipes, dialogs, and travel plans. We refer to them as planning-like (PL) tasks, noting that the main commonality they share is control flow information, which may be partially specified. Their structure presents an opportunity to create more practical summaries to help users make quick decisions. We investigate this observation by introducing a novel plan summarization problem, presenting a dataset, and providing a baseline method for generating PL summaries. Using quantitative metrics and qualitative user studies to establish baselines, we evaluate the plan summaries from our method and large language models. We believe the novel problem and dataset can reinvigorate research in summarization, which some consider a solved problem.

The Case for Developing a Foundation Model for Planning-like Tasks from Scratch

Apr 06, 2024

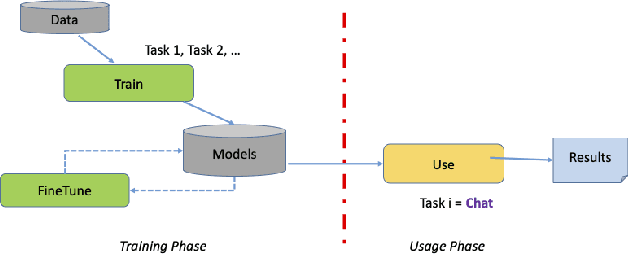

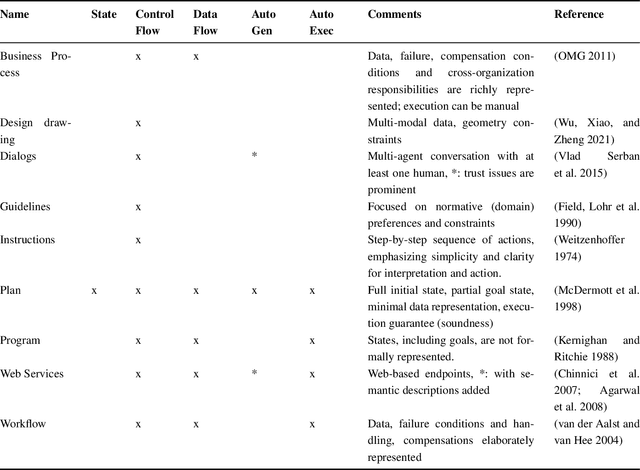

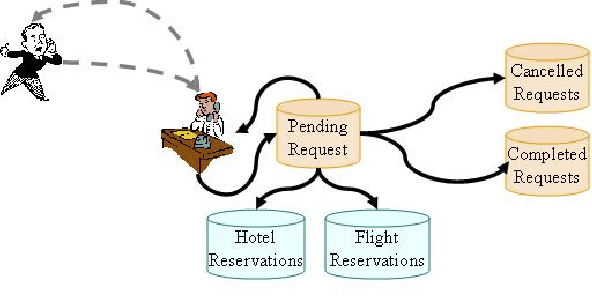

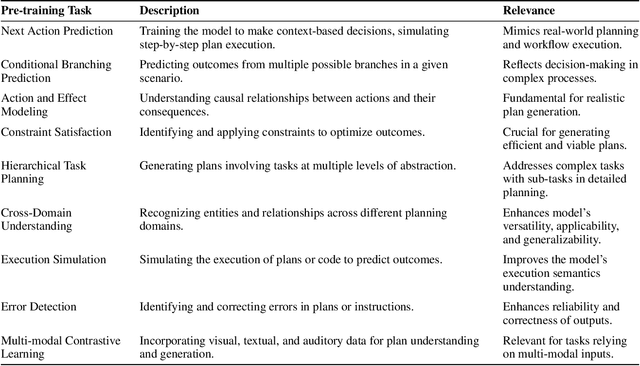

Abstract: Foundation Models (FMs) have revolutionized many areas of computing, including Automated Planning and Scheduling (APS). For example, a recent study found them useful for planning problems: plan generation, language translation, model construction, multi-agent planning, interactive planning, heuristics optimization, tool integration, and brain-inspired planning. Besides APS, there are many seemingly related tasks that involve generating a series of actions, with varying guarantees of executability, to achieve intended goals. We collectively call these planning-like (PL) tasks; examples include business processes, programs, workflows, and guidelines, where researchers have considered using FMs. However, previous works have primarily focused on pre-trained, off-the-shelf FMs and optionally fine-tuned them. This paper discusses the need to build a comprehensive FM for PL tasks from scratch and explores its design considerations. We argue that such an FM will open new and efficient avenues for PL problem-solving, just as LLMs are doing for APS.

On the Prospects of Incorporating Large Language Models (LLMs) in Automated Planning and Scheduling (APS)

Jan 04, 2024

Abstract: Automated Planning and Scheduling is among the growing areas in Artificial Intelligence (AI) where mention of LLMs has gained popularity. Based on a comprehensive review of 126 papers, this paper investigates eight categories based on the unique applications of LLMs in addressing various aspects of planning problems: language translation, plan generation, model construction, multi-agent planning, interactive planning, heuristics optimization, tool integration, and brain-inspired planning. For each category, we articulate the issues considered and existing gaps. A critical insight resulting from our review is that the true potential of LLMs unfolds when they are integrated with traditional symbolic planners, pointing towards a promising neuro-symbolic approach. This approach effectively combines the generative aspects of LLMs with the precision of classical planning methods. By synthesizing insights from existing literature, we underline the potential of this integration to address complex planning challenges. Our goal is to encourage the ICAPS community to recognize the complementary strengths of LLMs and symbolic planners, advocating for a direction in automated planning that leverages these synergistic capabilities to develop more advanced and intelligent planning systems.

A Planning Ontology to Represent and Exploit Planning Knowledge for Performance Efficiency

Jul 25, 2023

Abstract: Ontologies are known for their ability to organize rich metadata, support the identification of novel insights via semantic queries, and promote reuse. In this paper, we consider the problem of automated planning, where the objective is to find a sequence of actions that will move an agent from an initial state of the world to a desired goal state. We hypothesize that the large number of available planners and diverse planning domains carry essential information that can be leveraged to identify suitable planners and improve their performance for a domain. We use data on planning domains and planners from the International Planning Competition (IPC) to construct a planning ontology and demonstrate via experiments in two use cases that the ontology can lead to the selection of promising planners and improve their performance using macros, a form of action ordering constraints extracted from the planning ontology. We also make the planning ontology and associated resources available to the community to promote further research.

On Solving the Rubik's Cube with Domain-Independent Planners Using Standard Representations

Jul 25, 2023

Abstract: Rubik's Cube (RC) is a well-known and computationally challenging puzzle that has motivated AI researchers to explore efficient alternative representations and problem-solving methods. The ideal situation for planning here is that a problem, represented in a standard notation, be solved optimally and efficiently using a general-purpose solver and heuristics. The fastest solver today for RC is DeepCubeA with a custom representation, and another approach uses the Scorpion planner with the State-Action-Space+ (SAS+) representation. In this paper, we present the first RC representation in the popular PDDL language so that the domain becomes more accessible to PDDL planners, competitions, and knowledge engineering tools, and is more human-readable. We then bridge across existing approaches and compare performance. We find that in one comparable experiment, DeepCubeA solves all problems with varying complexities, albeit only 18% are optimal plans. For the same problem set, Scorpion with SAS+ representation and pattern database heuristics solves 61.50% of the problems, while FastDownward with PDDL representation and FF heuristic solves 56.50% of the problems, out of which all the generated plans were optimal. Our study provides valuable insights into the trade-offs between representational choice and plan optimality that can help researchers design future strategies for challenging domains combining general-purpose solving methods (planning, reinforcement learning), heuristics, and representations (standard or custom).

Value-based Fast and Slow AI Nudging

Jul 14, 2023

Abstract: Nudging is a behavioral strategy aimed at influencing people's thoughts and actions. Nudging techniques can be found in many situations in our daily lives, and they can be targeted at human fast and unconscious thinking, e.g., by using images to generate fear, or at the more careful and effortful slow thinking, e.g., by releasing information that makes us reflect on our choices. In this paper, we propose and discuss a value-based AI-human collaborative framework where AI systems nudge humans by proposing decision recommendations. Three different nudging modalities, based on when recommendations are presented to the human, are intended to stimulate human fast thinking, slow thinking, or meta-cognition. Values that are relevant to a specific decision scenario are used to decide when and how to use each of these nudging modalities. Examples of values are decision quality, speed, human upskilling and learning, human agency, and privacy. Several values can be present at the same time, and their priorities can vary over time. The framework treats values as parameters to be instantiated in a specific decision environment.

Can LLMs be Good Financial Advisors?: An Initial Study in Personal Decision Making for Optimized Outcomes

Jul 08, 2023

Abstract: Increasingly powerful Large Language Model (LLM) based chatbots, like ChatGPT and Bard, are becoming available to users and have the potential to revolutionize the quality of decision-making achieved by the public. In this context, we set out to investigate how such systems perform in the personal finance domain, where financial inclusion has been an overarching stated aim of banks for decades. We asked 13 questions representing banking products in personal finance (bank accounts, credit cards, and certificates of deposit) and their inter-product interactions, as well as decisions related to high-value purchases, payment of bank dues, and investment advice, in different dialects and languages (English, African American Vernacular English, and Telugu). We find that although the outputs of the chatbots are fluent and plausible, there are still critical gaps in providing accurate and reliable financial information using LLM-based chatbots.
