Raghava Mutharaju

ApplE: An Applied Ethics Ontology with Event Context

Feb 07, 2025

Abstract: Applied ethics is ubiquitous in most domains, requiring much deliberation due to its philosophical nature. Varying views often lead to conflicting courses of action where ethical dilemmas become challenging to resolve. Although many factors contribute to such a decision, the major driving forces can be discretized and thus simplified to provide an indicative answer. Knowledge representation and reasoning offer a way to explicitly translate abstract ethical concepts into applicable principles within the context of an event. To achieve this, we propose ApplE, an Applied Ethics ontology that captures philosophical theory and event context to holistically describe the morality of an action. The development process adheres to a modified version of the Simplified Agile Methodology for Ontology Development (SAMOD) and utilizes standard design and publication practices. Using ApplE, we model a use case from the bioethics domain that demonstrates our ontology's social and scientific value. Apart from the ontological reasoning and quality checks, ApplE is also evaluated using the three-fold testing process of SAMOD. ApplE follows FAIR principles and aims to be a viable resource for applied ethicists and ontology engineers.

RConE: Rough Cone Embedding for Multi-Hop Logical Query Answering on Multi-Modal Knowledge Graphs

Aug 21, 2024

Abstract: Multi-hop query answering over a Knowledge Graph (KG) involves traversing one or more hops from the start node to answer a query. Path-based and logic-based methods are state-of-the-art for multi-hop question answering. The former is used in link prediction tasks. The latter is for answering complex logical queries. The logical multi-hop querying technique embeds the KG and queries in the same embedding space. The existing work incorporates First Order Logic (FOL) operators, such as conjunction ($\wedge$), disjunction ($\vee$), and negation ($\neg$), in queries. Though current models have most of the building blocks to execute the FOL queries, they cannot use the dense information of multi-modal entities in the case of Multi-Modal Knowledge Graphs (MMKGs). We propose RConE, an embedding method to capture the multi-modal information needed to answer a query. The model first shortlists candidate (multi-modal) entities containing the answer. It then finds the solution (sub-entities) within those entities. Several existing works tackle path-based question-answering in MMKGs. However, to our knowledge, we are the first to introduce logical constructs in querying MMKGs and to answer queries that involve sub-entities of multi-modal entities as the answer. Extensive evaluation on four publicly available MMKGs indicates that RConE outperforms the current state-of-the-art.

Knowledge-Driven Cross-Document Relation Extraction

May 22, 2024

Abstract: Relation extraction (RE) is a well-known NLP application often treated as a sentence- or document-level task. However, a handful of recent efforts explore it across documents or in the cross-document setting (CrossDocRE). This is distinct from the single document case because different documents often focus on disparate themes, while text within a document tends to have a single goal. Linking findings from disparate documents to identify new relationships is at the core of the popular literature-based knowledge discovery paradigm in biomedicine and other domains. Current CrossDocRE efforts do not consider domain knowledge, which is often assumed to be known to the reader when documents are authored. Here, we propose a novel approach, KXDocRE, that embeds domain knowledge of entities with input text for cross-document RE. Our proposed framework has three main benefits over baselines: 1) it incorporates domain knowledge of entities along with documents' text; 2) it offers interpretability by producing explanatory text for predicted relations between entities; and 3) it improves performance over the prior methods.

Revisiting Document-Level Relation Extraction with Context-Guided Link Prediction

Jan 22, 2024

Abstract: Document-level relation extraction (DocRE) poses the challenge of identifying relationships between entities within a document as opposed to the traditional RE setting where a single sentence is input. Existing approaches rely on logical reasoning or contextual cues from entities. This paper reframes document-level RE as link prediction over a knowledge graph with distinct benefits: 1) Our approach combines entity context with document-derived logical reasoning, enhancing link prediction quality. 2) Predicted links between entities offer interpretability, elucidating the reasoning employed. We evaluate our approach on three benchmark datasets: DocRED, ReDocRED, and DWIE. The results indicate that our proposed method outperforms the state-of-the-art models and suggest that incorporating context-based link prediction techniques can enhance the performance of document-level relation extraction models.

ReOnto: A Neuro-Symbolic Approach for Biomedical Relation Extraction

Sep 04, 2023

Abstract: Relation Extraction (RE) is the task of extracting semantic relationships between entities in a sentence and aligning them to relations defined in a vocabulary, which is generally in the form of a Knowledge Graph (KG) or an ontology. Various approaches have been proposed so far to address this task. However, applying these techniques to biomedical text often yields unsatisfactory results because it is hard to infer relations directly from sentences due to the nature of the biomedical relations. To address these issues, we present a novel technique called ReOnto that makes use of neuro-symbolic knowledge for the RE task. ReOnto employs a graph neural network to acquire the sentence representation and leverages publicly accessible ontologies as prior knowledge to identify the sentential relation between two entities. The approach involves extracting the relation path between the two entities from the ontology. We evaluate the effect of using symbolic knowledge from ontologies with graph neural networks. Experimental results on two public biomedical datasets, BioRel and ADE, show that our method outperforms all the baselines (by approximately 3%).

Neuro-Symbolic RDF and Description Logic Reasoners: The State-Of-The-Art and Challenges

Aug 09, 2023

Abstract: Ontologies are used in various domains, with RDF and OWL being prominent standards for ontology development. RDF is favored for its simplicity and flexibility, while OWL enables detailed domain knowledge representation. However, as ontologies grow larger and more expressive, reasoning complexity increases, and traditional reasoners struggle to perform efficiently. Despite optimization efforts, scalability remains an issue. Additionally, advancements in automated knowledge base construction have created large and expressive ontologies that are often noisy and inconsistent, posing further challenges for conventional reasoners. To address these challenges, researchers have explored neuro-symbolic approaches that combine neural networks' learning capabilities with symbolic systems' reasoning abilities. In this chapter, we provide an overview of the existing literature in the field of neuro-symbolic deductive reasoning supported by RDF(S), the description logics EL and ALC, and OWL 2 RL, discussing the techniques employed, the tasks they address, and other relevant efforts in this area.

A Planning Ontology to Represent and Exploit Planning Knowledge for Performance Efficiency

Jul 25, 2023

Abstract: Ontologies are known for their ability to organize rich metadata, support the identification of novel insights via semantic queries, and promote reuse. In this paper, we consider the problem of automated planning, where the objective is to find a sequence of actions that will move an agent from an initial state of the world to a desired goal state. We hypothesize that the large number of available planners and diverse planning domains carry essential information that can be leveraged to identify suitable planners and improve their performance for a domain. We use data on planning domains and planners from the International Planning Competition (IPC) to construct a planning ontology and demonstrate via experiments in two use cases that the ontology can lead to the selection of promising planners and improve their performance using macros, a form of action ordering constraints extracted from the planning ontology. We also make the planning ontology and associated resources available to the community to promote further research.

OntoSeer -- A Recommendation System to Improve the Quality of Ontologies

Feb 04, 2022

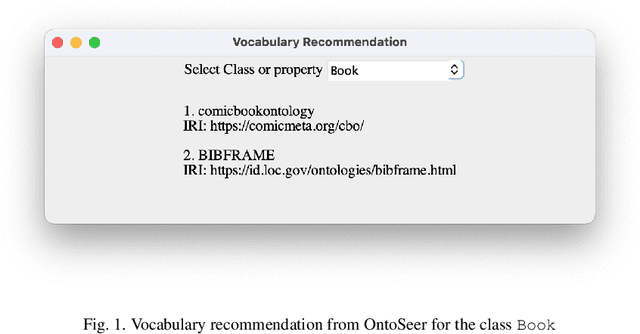

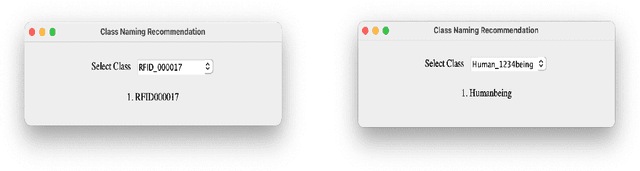

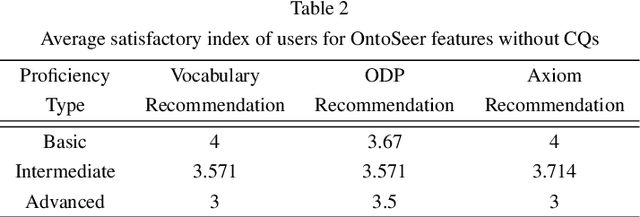

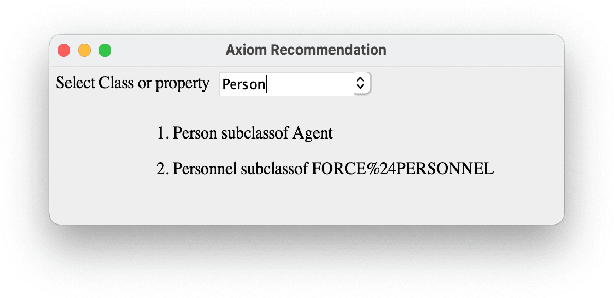

Abstract: Building an ontology is not only a time-consuming process, but it is also confusing, especially for beginners and the inexperienced. Although ontology developers can take the help of domain experts in building an ontology, they are not readily available in several cases for a variety of reasons. Ontology developers have to grapple with several questions related to the choice of classes, properties, and the axioms that should be included. Apart from this, there are aspects such as modularity and reusability that should be taken care of. From among the thousands of publicly available ontologies and vocabularies in repositories such as Linked Open Vocabularies (LOV) and BioPortal, it is hard to know the terms (classes and properties) that can be reused in the development of an ontology. A similar problem exists in implementing the right set of ontology design patterns (ODPs) from among the several available. Generally, ontology developers make use of their experience in handling these issues, and the inexperienced ones have a hard time. In order to bridge this gap, we propose a tool named OntoSeer that monitors the ontology development process and provides suggestions in real-time to improve the quality of the ontology under development. It can provide suggestions on the naming conventions to follow, vocabulary to reuse, ODPs to implement, and axioms to be added to the ontology. OntoSeer has been implemented as a Protégé plug-in.

SERC: Syntactic and Semantic Sequence based Event Relation Classification

Nov 09, 2021

Abstract: Temporal and causal relations play an important role in determining the dependencies between events. Classifying the temporal and causal relations between events has many applications, such as generating event timelines, event summarization, textual entailment and question answering. Temporal and causal relations are closely related and influence each other. We therefore propose a joint model that incorporates both temporal and causal features to perform causal relation classification. We use the syntactic structure of the text for identifying temporal and causal relations between two events from the text. We extract the part-of-speech tag sequence, dependency tag sequence and word sequence from the text. We propose an LSTM-based model for temporal and causal relation classification that captures the interrelations between the three encoded features. Evaluation of our model on four popular datasets yields promising results for temporal and causal relation classification.

Why Settle for Just One? Extending EL++ Ontology Embeddings with Many-to-Many Relationships

Oct 20, 2021

Abstract: Knowledge Graph (KG) embeddings provide a low-dimensional representation of entities and relations of a Knowledge Graph and are used successfully for various applications such as question answering and search, reasoning, inference, and missing link prediction. However, most of the existing KG embeddings only consider the network structure of the graph and ignore the semantics and the characteristics of the underlying ontology that provides crucial information about relationships between entities in the KG. Recent efforts in this direction involve learning embeddings for a Description Logic (logical underpinning for ontologies) named EL++. However, such methods consider all the relations defined in the ontology to be one-to-one, which severely limits their performance and applications. We provide a simple and effective solution to overcome this shortcoming that allows such methods to consider many-to-many relationships while learning embedding representations. Experiments conducted using three different EL++ ontologies show substantial performance improvement over five baselines. Our proposed solution also paves the way for learning embedding representations for even more expressive description logics such as SROIQ.
