Pedro U. Lima

Latent Perspective-Taking via a Schrödinger Bridge in Influence-Augmented Local Models

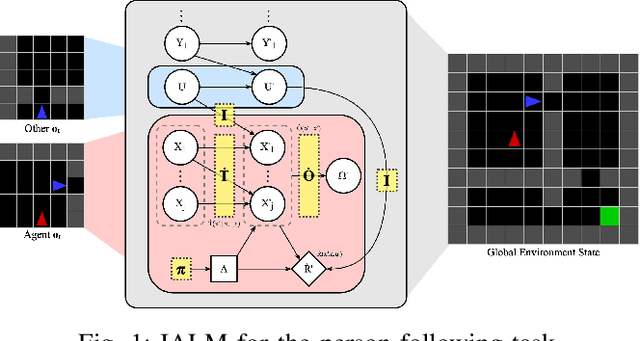

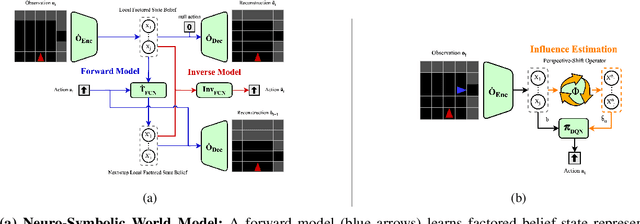

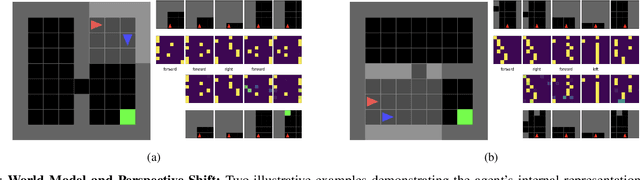

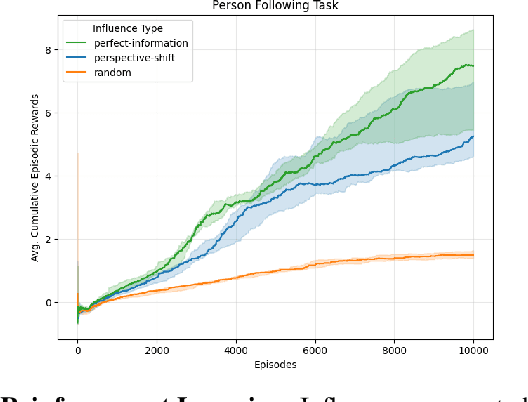

Feb 02, 2026Abstract:Operating in environments alongside humans requires robots to make decisions under uncertainty. In addition to exogenous dynamics, they must reason over others' hidden mental-models and mental-states. While Interactive POMDPs and Bayesian Theory of Mind formulations are principled, exact nested-belief inference is intractable, and hand-specified models are brittle in open-world settings. We address both by learning structured mental-models and an estimator of others' mental-states. Building on the Influence-Based Abstraction, we instantiate an Influence-Augmented Local Model to decompose socially-aware robot tasks into local dynamics, social influences, and exogenous factors. We propose (a) a neuro-symbolic world model instantiating a factored, discrete Dynamic Bayesian Network, and (b) a perspective-shift operator modeled as an amortized Schrödinger Bridge over the learned local dynamics that transports factored egocentric beliefs into other-centric beliefs. We show that this architecture enables agents to synthesize socially-aware policies in model-based reinforcement learning, via decision-time mental-state planning (a Schrödinger Bridge in belief space), with preliminary results in a MiniGrid social navigation task.

Perspective-Shifted Neuro-Symbolic World Models: A Framework for Socially-Aware Robot Navigation

Mar 26, 2025

Abstract:Navigating in environments alongside humans requires agents to reason under uncertainty and account for the beliefs and intentions of those around them. Under a sequential decision-making framework, egocentric navigation can naturally be represented as a Markov Decision Process (MDP). However, social navigation additionally requires reasoning about the hidden beliefs of others, inherently leading to a Partially Observable Markov Decision Process (POMDP), where agents lack direct access to others' mental states. Inspired by Theory of Mind and Epistemic Planning, we propose (1) a neuro-symbolic model-based reinforcement learning architecture for social navigation, addressing the challenge of belief tracking in partially observable environments; and (2) a perspective-shift operator for belief estimation, leveraging recent work on Influence-based Abstractions (IBA) in structured multi-agent settings.

Frontier Shepherding: A Bio-Mimetic Multi-robot Framework for Large-Scale Exploration

Sep 17, 2024

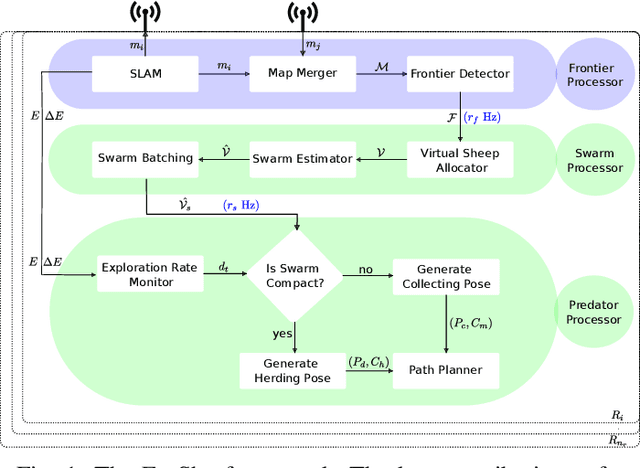

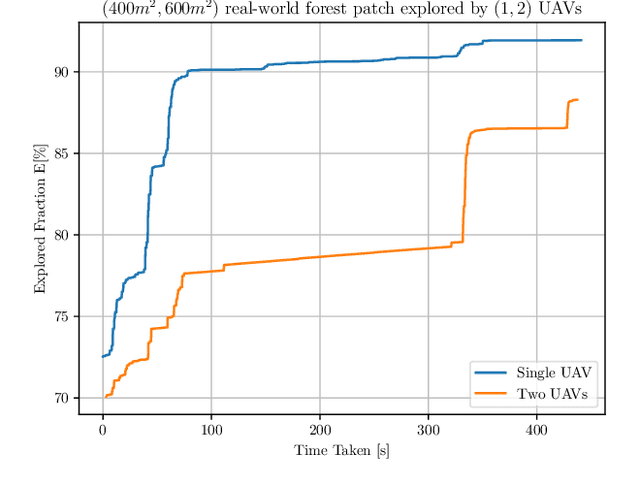

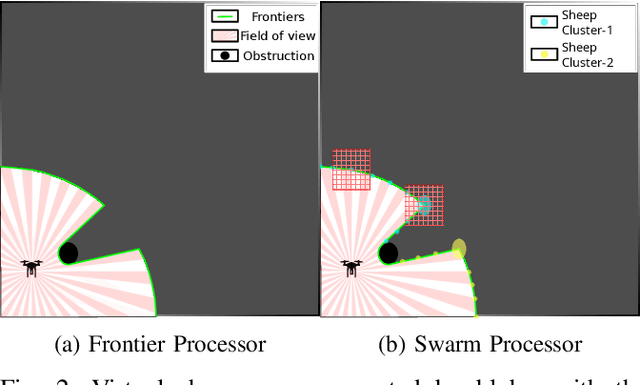

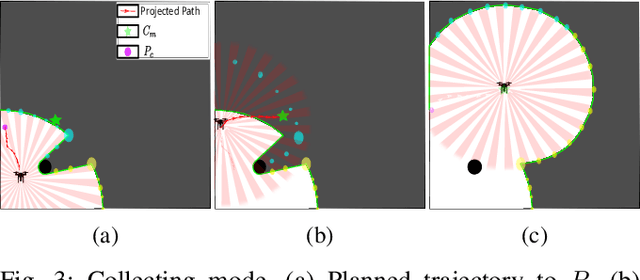

Abstract:Efficient exploration of large-scale environments remains a critical challenge in robotics, with applications ranging from environmental monitoring to search and rescue operations. This article proposes a bio-mimetic multi-robot framework, \textit{Frontier Shepherding (FroShe)}, for large-scale exploration. The presented bio-inspired framework heuristically models frontier exploration similar to the shepherding behavior of herding dogs. This is achieved by modeling frontiers as a sheep swarm reacting to robots modeled as shepherding dogs. The framework is robust across varying environment sizes and obstacle densities and can be easily deployed across multiple agents. Simulation results showcase that the proposed method consistently performed irrespective of the simulated environment's varying sizes and obstacle densities. With the increase in the number of agents, the proposed method outperforms other state-of-the-art exploration methods, with an average improvement of $20\%$ with the next-best approach(for $3$ UAVs). The proposed technique was implemented and tested in a single and dual drone scenario in a real-world forest-like environment.

DeepThought: An Architecture for Autonomous Self-motivated Systems

Nov 14, 2023Abstract:The ability of large language models (LLMs) to engage in credible dialogues with humans, taking into account the training data and the context of the conversation, has raised discussions about their ability to exhibit intrinsic motivations, agency, or even some degree of consciousness. We argue that the internal architecture of LLMs and their finite and volatile state cannot support any of these properties. By combining insights from complementary learning systems, global neuronal workspace, and attention schema theories, we propose to integrate LLMs and other deep learning systems into an architecture for cognitive language agents able to exhibit properties akin to agency, self-motivation, even some features of meta-cognition.

An observer cascade for velocity and multiple line estimation

Mar 03, 2022

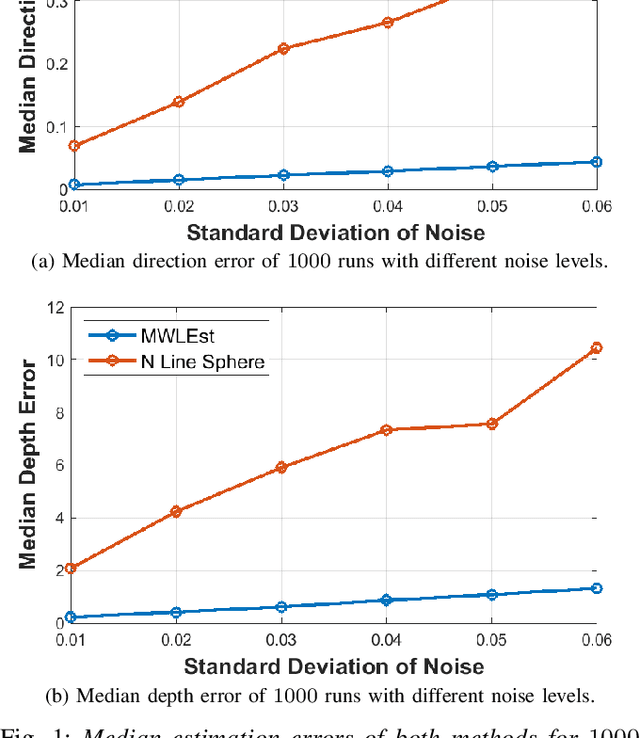

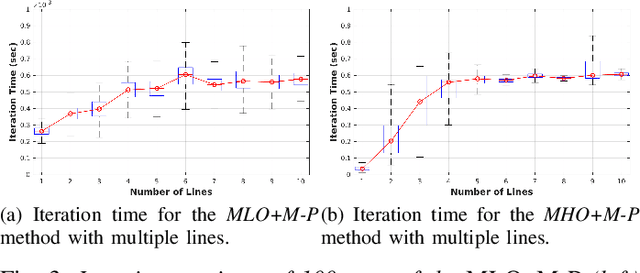

Abstract:Previous incremental estimation methods consider estimating a single line, requiring as many observers as the number of lines to be mapped. This leads to the need for having at least $4N$ state variables, with $N$ being the number of lines. This paper presents the first approach for multi-line incremental estimation. Since lines are common in structured environments, we aim to exploit that structure to reduce the state space. The modeling of structured environments proposed in this paper reduces the state space to $3N + 3$ and is also less susceptible to singular configurations. An assumption the previous methods make is that the camera velocity is available at all times. However, the velocity is usually retrieved from odometry, which is noisy. With this in mind, we propose coupling the camera with an Inertial Measurement Unit (IMU) and an observer cascade. A first observer retrieves the scale of the linear velocity and a second observer for the lines mapping. The stability of the entire system is analyzed. The cascade is shown to be asymptotically stable and shown to converge in experiments with simulated data.

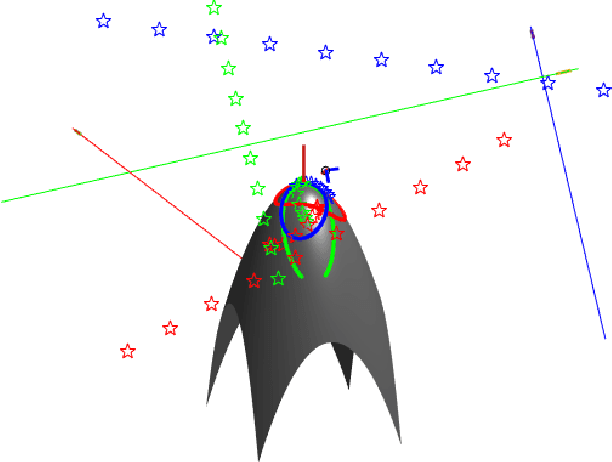

On Incremental Structure-from-Motion using Lines

May 24, 2021

Abstract:Humans tend to build environments with structure, which consists of mainly planar surfaces. From the intersection of planar surfaces arise straight lines. Lines have more degrees-of-freedom than points. Thus, line-based Structure-from-Motion (SfM) provides more information about the environment. In this paper, we present solutions for SfM using lines, namely, incremental SfM. These approaches consist of designing state observers for a camera's dynamical visual system looking at a 3D line. We start by presenting a model that uses spherical coordinates for representing the line's moment vector. We show that this parameterization has singularities, and therefore we introduce a more suitable model that considers the line's moment and shortest viewing ray. Concerning the observers, we present two different methodologies. The first uses a memory-less state-of-the-art framework for dynamic visual systems. Since the previous states of the robotic agent are accessible -- while performing the 3D mapping of the environment -- the second approach aims at exploiting the use of memory to improve the estimation accuracy and convergence speed. The two models and the two observers are evaluated in simulation and real data, where mobile and manipulator robots are used.

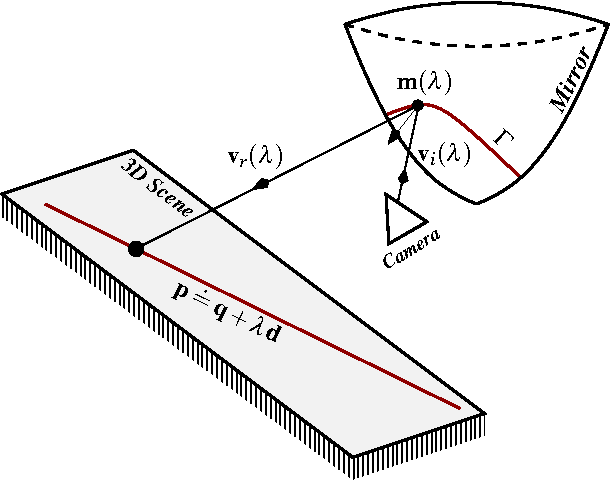

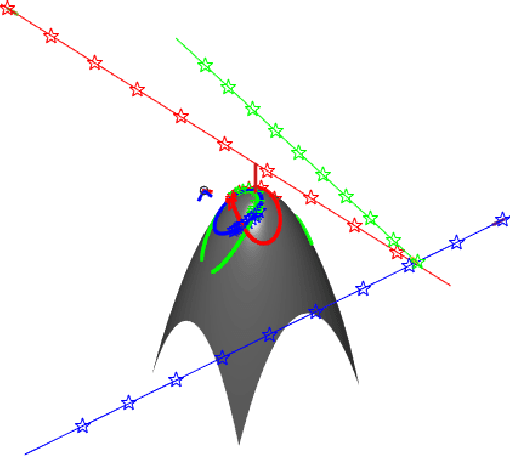

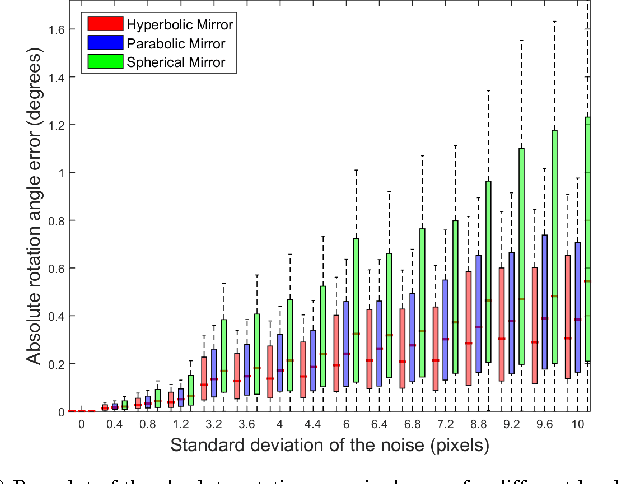

Non-Central Catadioptric Cameras Pose Estimation using 3D Lines

Jul 08, 2016

Abstract:In this article we purpose a novel method for planar pose estimation of mobile robots. This method is based on an analytic solution (which we derived) for the projection of 3D straight lines, onto the mirror of Non-Central Catadioptric Cameras (NCCS). The resulting solution is rewritten as a function of the rotation and translation parameters, which is then used as an error function for a set of mirror points. Those should be the result of the projection of a set of points incident with the respective 3D lines. The camera's pose is given by minimizing the error function, with the associated constraints. The method is validated by experiments both with synthetic and real data. The latter was collected from a mobile robot equipped with a NCCS.

Artificial Intelligence and Systems Theory: Applied to Cooperative Robots

Nov 08, 2004

Abstract:This paper describes an approach to the design of a population of cooperative robots based on concepts borrowed from Systems Theory and Artificial Intelligence. The research has been developed under the SocRob project, carried out by the Intelligent Systems Laboratory at the Institute for Systems and Robotics - Instituto Superior Tecnico (ISR/IST) in Lisbon. The acronym of the project stands both for "Society of Robots" and "Soccer Robots", the case study where we are testing our population of robots. Designing soccer robots is a very challenging problem, where the robots must act not only to shoot a ball towards the goal, but also to detect and avoid static (walls, stopped robots) and dynamic (moving robots) obstacles. Furthermore, they must cooperate to defeat an opposing team. Our past and current research in soccer robotics includes cooperative sensor fusion for world modeling, object recognition and tracking, robot navigation, multi-robot distributed task planning and coordination, including cooperative reinforcement learning in cooperative and adversarial environments, and behavior-based architectures for real time task execution of cooperating robot teams.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge