Andre Mateus

Efficient and Robust Pedestrian Detection using Deep Learning for Human-Aware Navigation

Dec 13, 2018

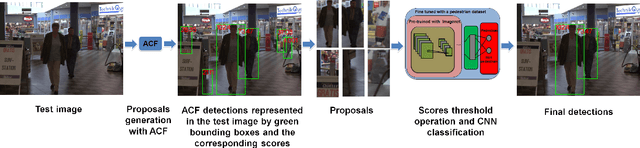

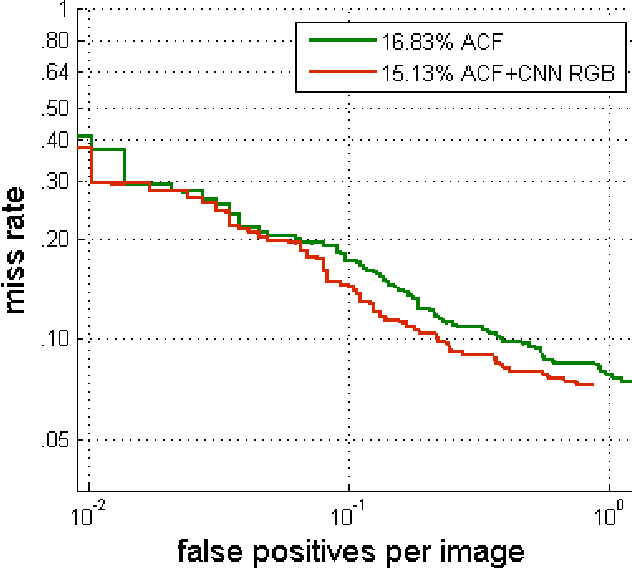

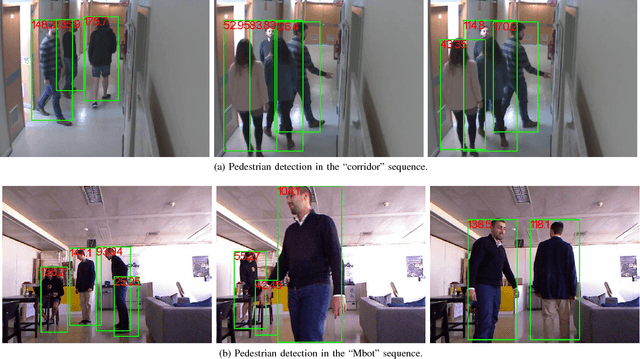

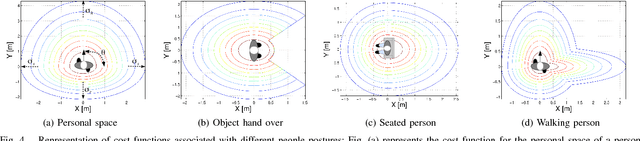

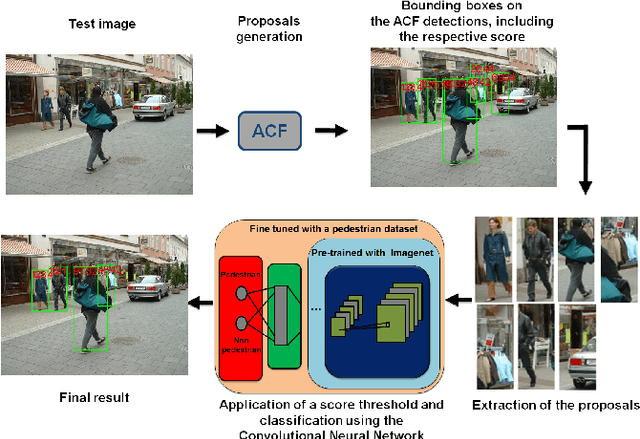

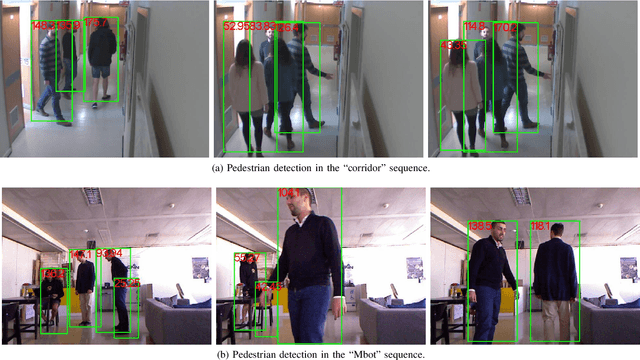

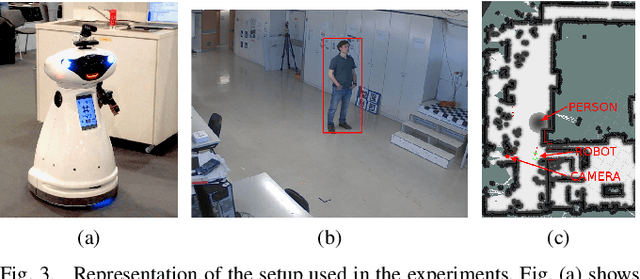

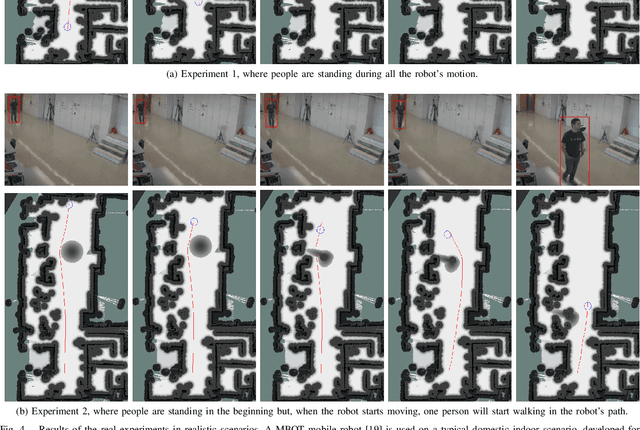

Abstract: This paper addresses the problem of Human-Aware Navigation (HAN), using multi-camera sensors to implement a vision-based person tracking system. The main contributions of this paper are a novel and efficient Deep Learning-based person detector and a standardization of human-aware constraints. In the first stage of the approach, we propose to cascade the Aggregate Channel Features (ACF) detector with a deep Convolutional Neural Network (CNN) to achieve fast and accurate Pedestrian Detection (PD). Regarding human awareness (which can be defined as constraints associated with the robot's motion), we use a mixture of asymmetric Gaussian functions to define the cost function associated with each constraint. Both proposed methods are evaluated individually to measure the impact of each component. The complete solution (including both the proposed pedestrian detector and the human-aware constraints) is tested in a typical domestic indoor scenario, in four distinct experiments. The results show that the robot is able to respect human-aware constraints defined according to common proxemics and social rules.
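To illustrate what an asymmetric Gaussian cost around a person looks like, the sketch below implements a formulation commonly used in social-navigation planners; it is not necessarily the exact parameterization used in this paper. A person at `(xc, yc)` facing `theta` gets a wider spread in front (`sigma_h`) than behind (`sigma_r`), with `sigma_s` controlling the sides. The function names, the default sigma values, and the rule of combining several people by taking the maximum are all assumptions made for illustration; the abstract only states that a mixture of asymmetric Gaussians is used.

```python
import math
from typing import Iterable, Tuple


def asymmetric_gaussian(x: float, y: float, xc: float, yc: float, theta: float,
                        sigma_h: float, sigma_s: float, sigma_r: float) -> float:
    """Cost in (0, 1] of point (x, y) for a person at (xc, yc) facing theta (rad)."""
    dx, dy = x - xc, y - yc
    # Bearing of the point relative to the heading, shifted by +pi/2 and wrapped
    # to (-pi, pi], so that the front half-plane maps to alpha in (0, pi).
    alpha = math.atan2(dy, dx) - theta + math.pi / 2
    alpha = math.atan2(math.sin(alpha), math.cos(alpha))
    # Wider spread in front of the person, narrower behind.
    sigma = sigma_h if alpha > 0 else sigma_r
    # Quadratic form of a 2D Gaussian rotated by theta: variance sigma^2 along
    # the heading and sigma_s^2 across it.
    a = math.cos(theta) ** 2 / (2 * sigma ** 2) + math.sin(theta) ** 2 / (2 * sigma_s ** 2)
    b = math.sin(2 * theta) / (4 * sigma ** 2) - math.sin(2 * theta) / (4 * sigma_s ** 2)
    c = math.sin(theta) ** 2 / (2 * sigma ** 2) + math.cos(theta) ** 2 / (2 * sigma_s ** 2)
    return math.exp(-(a * dx ** 2 + 2 * b * dx * dy + c * dy ** 2))


def social_cost(x: float, y: float, people: Iterable[Tuple[float, float, float]],
                sigma_h: float = 2.0, sigma_s: float = 4 / 3,
                sigma_r: float = 1.0) -> float:
    """Combined cost of (x, y) for people given as (xc, yc, theta).

    Taking the maximum over people is an assumed mixing rule (hypothetical).
    """
    return max((asymmetric_gaussian(x, y, xc, yc, th, sigma_h, sigma_s, sigma_r)
                for xc, yc, th in people), default=0.0)
```

For example, with one person at the origin facing +x, `social_cost(0.5, 0.0, [(0.0, 0.0, 0.0)])` is larger than `social_cost(-0.5, 0.0, [(0.0, 0.0, 0.0)])`. Because `sigma_h` is wider than `sigma_r`, a planner using this cost keeps more distance when passing in front of a person than when passing behind.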

A Real-Time Deep Learning Pedestrian Detector for Robot Navigation

Sep 19, 2017

Abstract: A real-time, Deep Learning-based method for Pedestrian Detection (PD) is applied to the Human-Aware robot navigation problem. The pedestrian detector combines the Aggregate Channel Features (ACF) detector with a deep Convolutional Neural Network (CNN) to obtain fast and accurate performance. Our solution is first evaluated on a set of real images taken from onboard and offboard cameras, and then validated in a typical robot navigation environment with pedestrians, in two distinct experiments. The results of both tests show that our pedestrian detector is robust and fast enough to be used in robot navigation applications.
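The abstract does not detail how the two stages interact. A common way to cascade a fast detector with a CNN is sketched below, assuming the ACF stage proposes scored windows and the CNN re-scores only those windows. `proposal_fn` and `classifier_fn` are hypothetical placeholders for an ACF detector and a CNN classifier. The thresholds are illustrative, and letting the CNN score simply replace the ACF score is an assumption that may not match the paper's fusion rule.

```python
from typing import Any, Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]   # (x1, y1, x2, y2) in pixels
Detection = Tuple[Box, float]             # (box, score)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets: Sequence[Detection], iou_thresh: float = 0.5) -> List[Detection]:
    """Greedy non-maximum suppression: keep the best box in each overlapping cluster."""
    kept: List[Detection] = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(box, k) < iou_thresh for k, _ in kept):
            kept.append((box, score))
    return kept


def cascade_detect(image: Any,
                   proposal_fn: Callable[[Any], Sequence[Detection]],
                   classifier_fn: Callable[[Any, Box], float],
                   proposal_thresh: float = -1.0,
                   cnn_thresh: float = 0.5,
                   iou_thresh: float = 0.5) -> List[Detection]:
    """Two-stage detection: cheap proposals, then expensive verification."""
    # Stage 1 (the ACF role): a permissive threshold keeps recall high and
    # leaves precision to the second stage.
    candidates = [(box, s) for box, s in proposal_fn(image) if s >= proposal_thresh]
    # Stage 2 (the CNN role): the costly classifier only sees surviving windows.
    verified: List[Detection] = []
    for box, _ in candidates:
        p = classifier_fn(image, box)
        if p >= cnn_thresh:
            verified.append((box, p))
    # Merge overlapping detections of the same pedestrian.
    return nms(verified, iou_thresh)
```

The point of the cascade is cost. The CNN runs only on the windows that survive the cheap first stage, rather than on every position and scale of the image. This is what allows the combination to approach real-time operation.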

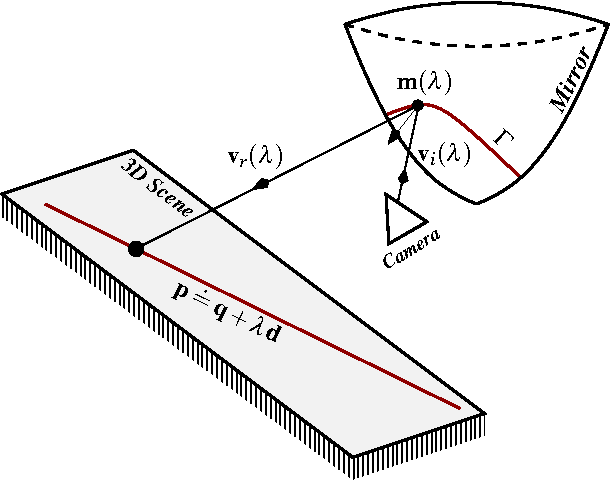

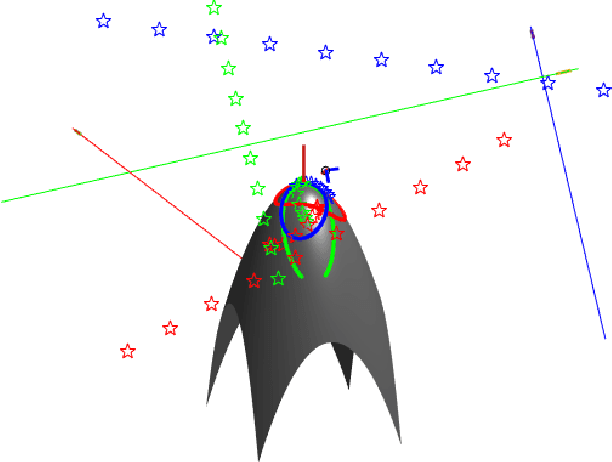

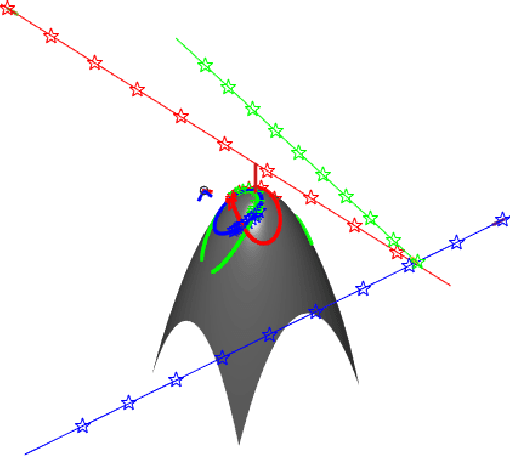

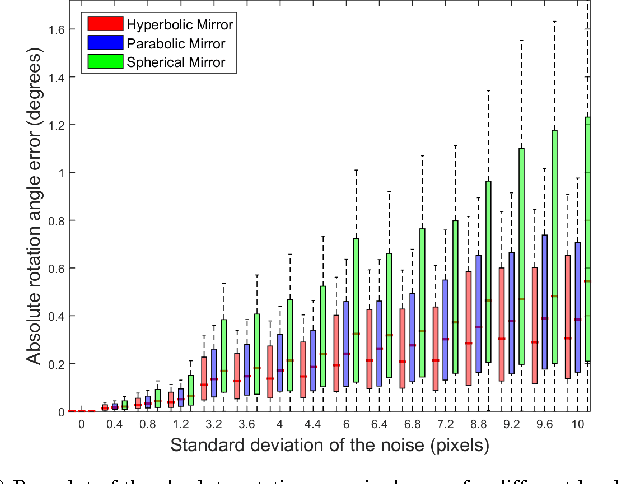

Non-Central Catadioptric Cameras Pose Estimation using 3D Lines

Jul 08, 2016

Abstract: In this article we propose a novel method for planar pose estimation of mobile robots. The method is based on an analytic solution, which we derived, for the projection of 3D straight lines onto the mirror of Non-Central Catadioptric Cameras (NCCS). This solution is rewritten as a function of the rotation and translation parameters and is then used as an error function for a set of mirror points. These mirror points should be the projections of a set of points incident with the respective 3D lines. The camera's pose is obtained by minimizing the error function subject to the associated constraints. The method is validated by experiments with both synthetic and real data; the real data were collected from a mobile robot equipped with an NCCS.
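The general idea of recovering a planar pose by minimizing an incidence error can be sketched as follows. This is a simplified surrogate, not the paper's method. The analytic NCCS line-projection model is not reproduced. Instead, the sketch assumes hypothetical 3D points already expressed in the robot frame, each associated with a known world line, and uses their perpendicular distance to that line as the error. The planar constraint is enforced by parameterizing the pose as `(theta, tx, ty)`, a rotation about the vertical axis plus a floor translation. It is solved with Gauss-Newton and a numerical Jacobian. All names are illustrative.

```python
import numpy as np
from typing import Sequence, Tuple

Line = Tuple[np.ndarray, np.ndarray]   # (point on line, direction), world frame
Obs = Tuple[int, np.ndarray]           # (line index, 3D point in robot frame)


def rot_z(theta: float) -> np.ndarray:
    """Rotation about the vertical axis, the only rotation a planar pose allows."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def residuals(params: np.ndarray, lines: Sequence[Line], obs: Sequence[Obs]) -> np.ndarray:
    """Perpendicular offsets of the transformed points from their associated lines."""
    theta, tx, ty = params
    # Planar motion: no translation along z (camera height folded into the points).
    R, t = rot_z(theta), np.array([tx, ty, 0.0])
    res = []
    for i, q in obs:
        p, d = lines[i]
        d = d / np.linalg.norm(d)
        v = R @ q + t - p                  # from the line's anchor to the point
        res.append(v - np.dot(v, d) * d)   # drop the component along the line
    return np.concatenate(res)


def estimate_planar_pose(lines: Sequence[Line], obs: Sequence[Obs],
                         x0: Sequence[float] = (0.0, 0.0, 0.0),
                         iters: int = 20, eps: float = 1e-6) -> np.ndarray:
    """Gauss-Newton on (theta, tx, ty) with a forward-difference Jacobian."""
    x = np.asarray(x0, dtype=float)
    for _ in range(iters):
        r = residuals(x, lines, obs)
        J = np.empty((r.size, 3))
        for k in range(3):
            step_k = np.zeros(3)
            step_k[k] = eps
            J[:, k] = (residuals(x + step_k, lines, obs) - r) / eps
        # Least-squares step of the linearized problem J @ delta = -r.
        delta, *_ = np.linalg.lstsq(J, -r, rcond=None)
        x = x + delta
        if np.linalg.norm(delta) < 1e-12:
            break
    return x
```

Restricting the unknowns to three parameters, rather than a full 6-DoF pose, is one way to express the planar constraint. Each line contributes only the error components perpendicular to it, so several non-parallel lines are needed for the pose to be fully determined.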
