A. Pedro Aguiar

Investigation of Feature Selection and Pooling Methods for Environmental Sound Classification

Nov 12, 2025

Abstract: This paper explores the impact of dimensionality reduction and pooling methods on Environmental Sound Classification (ESC) using lightweight CNNs. We evaluate Sparse Salient Region Pooling (SSRP) and its variants, SSRP-Basic (SSRP-B) and SSRP-Top-K (SSRP-T), under various hyperparameter settings and compare them with Principal Component Analysis (PCA). Experiments on the ESC-50 dataset demonstrate that SSRP-T achieves up to 80.69% accuracy, significantly outperforming both the baseline CNN (66.75%) and the PCA-reduced model (37.60%). Our findings confirm that a well-tuned sparse pooling strategy provides a robust, efficient, and high-performing solution for ESC tasks, particularly in resource-constrained scenarios where balancing accuracy and computational cost is crucial.
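The abstract does not spell out how SSRP-T pools features. As a rough sketch of one possible reading (an assumption, not the authors' implementation): slide a window over the time axis of each channel, average within each window, and then average the K largest window means. Under this reading, K = 1 reduces to a max over window means, in the spirit of SSRP-B. The function name `ssrp_topk` and its parameters are hypothetical.

```python
def ssrp_topk(channels, window, k):
    """Pool each channel (a list of floats over time) to one value.

    Assumed reading of sparse salient region pooling: mean over each
    sliding window, then the mean of the k largest window means.
    """
    if window < 1 or k < 1:
        raise ValueError("window and k must be positive")
    pooled = []
    for series in channels:
        if len(series) < window:
            raise ValueError("window longer than the time axis")
        # Mean activation of every contiguous window of length `window`.
        means = [
            sum(series[i:i + window]) / window
            for i in range(len(series) - window + 1)
        ]
        # Keep only the k most salient windows (fewer if not enough exist).
        top = sorted(means, reverse=True)[:k]
        pooled.append(sum(top) / len(top))
    return pooled


features = [[0.0, 0.0, 4.0, 4.0, 0.0, 0.0]]
print(ssrp_topk(features, window=2, k=1))  # max over window means
print(ssrp_topk(features, window=2, k=2))  # mean of the two best windows
```

Because each channel collapses to a single number, the classifier head sees a vector whose length equals the channel count, regardless of clip duration.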

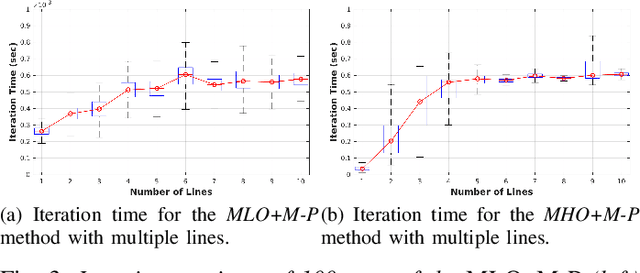

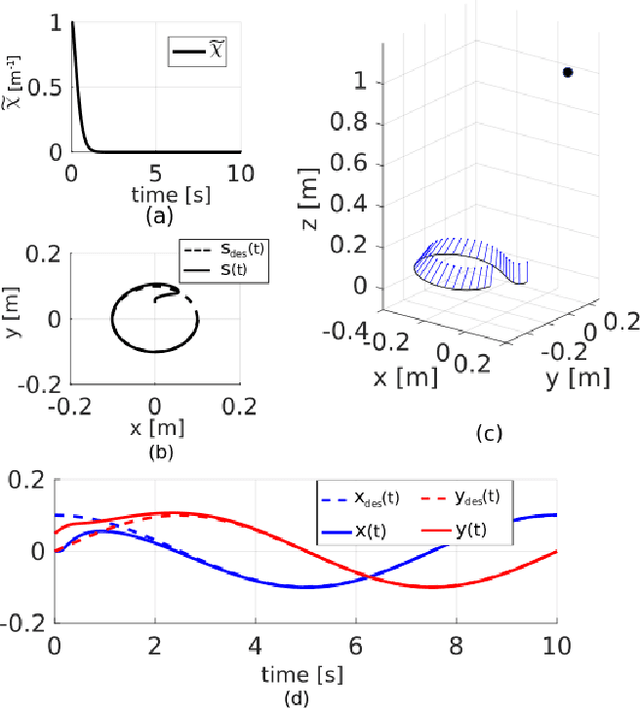

On Incremental Structure-from-Motion using Lines

May 24, 2021

Abstract: Humans tend to build structured environments that consist mainly of planar surfaces. From the intersection of planar surfaces arise straight lines. Lines have more degrees of freedom than points. Thus, line-based Structure-from-Motion (SfM) provides more information about the environment. In this paper, we present solutions for SfM using lines, namely, incremental SfM. These approaches consist of designing state observers for a camera's dynamical visual system looking at a 3D line. We start by presenting a model that uses spherical coordinates for representing the line's moment vector. We show that this parameterization has singularities, and therefore we introduce a more suitable model that considers the line's moment and shortest viewing ray. Concerning the observers, we present two different methodologies. The first uses a memory-less state-of-the-art framework for dynamic visual systems. Since the previous states of the robotic agent are accessible while performing the 3D mapping of the environment, the second approach exploits memory to improve the estimation accuracy and convergence speed. The two models and the two observers are evaluated in simulation and on real data, using mobile and manipulator robots.

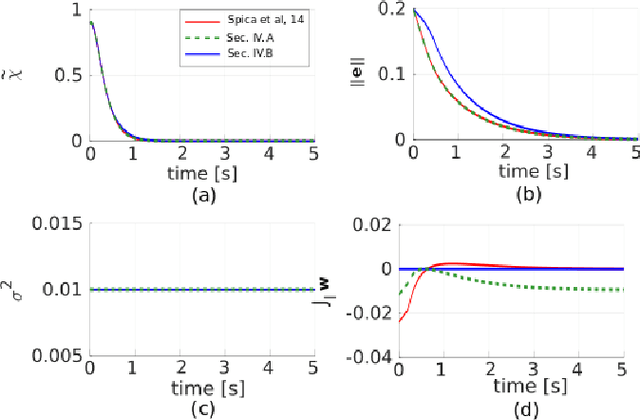

Active Depth Estimation: Stability Analysis and its Applications

Mar 16, 2020

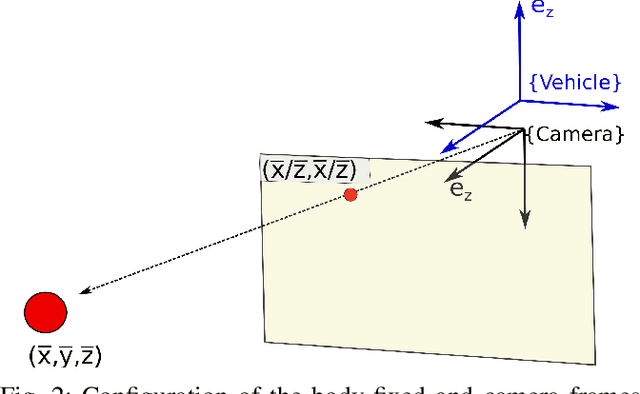

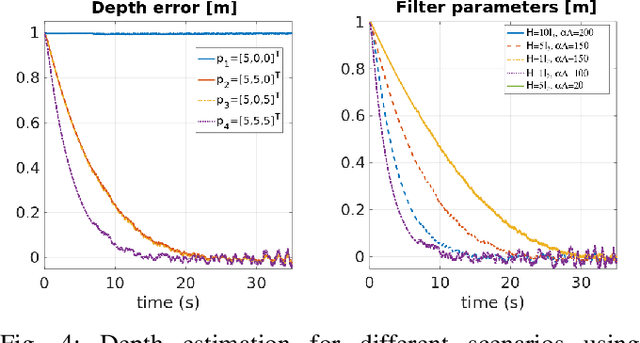

Abstract: Recovering the 3D structure of the surrounding environment is an essential task in any vision-controlled Structure-from-Motion (SfM) scheme. This paper focuses on the theoretical properties of an SfM scheme known as incremental active depth estimation. The term incremental stands for estimating the 3D structure of the scene over a chronological sequence of image frames. Active means that the camera actuation is such that it improves estimation performance. Starting from a known depth estimation filter, this paper presents the stability analysis of the filter in terms of the control inputs of the camera. By analyzing the convergence of the estimator using Lyapunov theory, we relax the constraints on the projection of the 3D point in the image plane when compared to previous results. Nonetheless, our method is capable of dealing with the camera's limited field-of-view constraints. The main results are validated through experiments with simulated data.
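For orientation, the abstract does not state the filter's underlying model. A standard perspective-projection model for a static 3D point, assumed here purely for illustration, writes the normalized image coordinates $(x, y)$ and the inverse depth $\chi = 1/Z$ in terms of the camera's linear velocity $v = (v_x, v_y, v_z)$ and angular velocity $\omega = (\omega_x, \omega_y, \omega_z)$:

$$
\begin{aligned}
\dot{x} &= -\chi v_x + x \chi v_z + x y\,\omega_x - (1 + x^2)\,\omega_y + y\,\omega_z \\
\dot{y} &= -\chi v_y + y \chi v_z + (1 + y^2)\,\omega_x - x y\,\omega_y - x\,\omega_z \\
\dot{\chi} &= \chi^2 v_z + \chi\,(y\,\omega_x - x\,\omega_y)
\end{aligned}
$$

In this model the unknown $\chi$ enters the image dynamics only through terms multiplied by the linear velocity, which is why the choice of camera actuation affects how well depth can be estimated.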

* 7 pages, 3 figures, conference

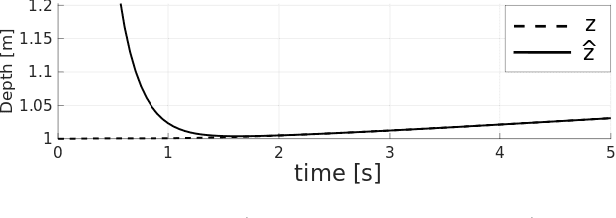

A Framework for Depth Estimation and Relative Localization of Ground Robots using Computer Vision

Aug 01, 2019

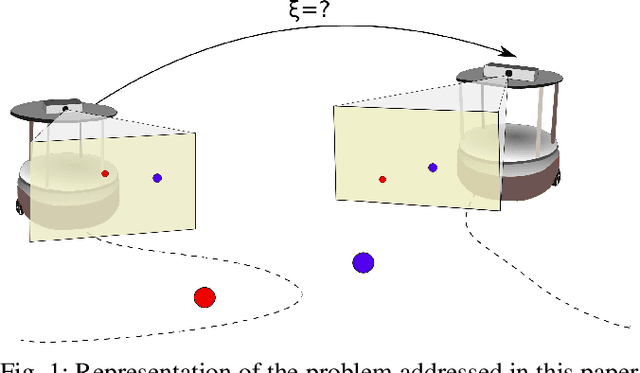

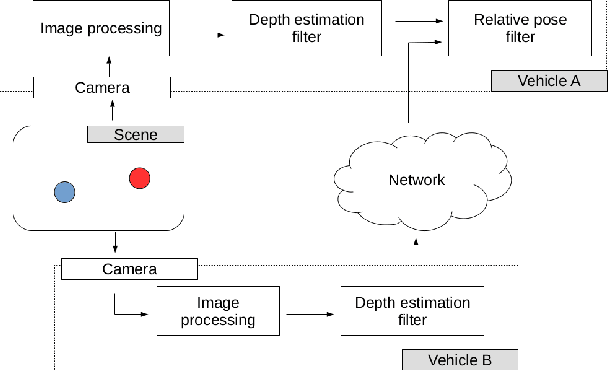

Abstract: 3D depth estimation and relative pose estimation within a decentralized architecture is a challenging problem that arises in missions that require coordination among multiple vision-controlled robots. The depth estimation problem aims at recovering the 3D information of the environment. The relative localization problem consists of estimating the relative pose between two robots, by sensing each other's pose or sharing information about the perceived environment. Most solutions for these problems use a set of discrete data without taking into account the chronological order of the events. This paper builds on recent results on continuous estimation to propose a framework that estimates the depth and relative pose between two non-holonomic vehicles. The basic idea consists of estimating the depth of the points by explicitly considering the dynamics of the camera mounted on a ground robot, and feeding the estimates of 3D points observed by both cameras into a filter that computes the relative pose between the robots. We evaluate the convergence for a set of simulated scenarios and show experimental results validating the proposed framework.

* 6 pages, 7 figures, conference

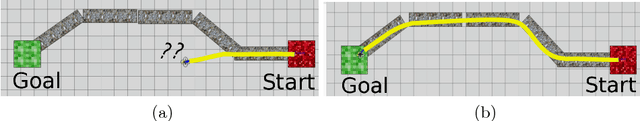

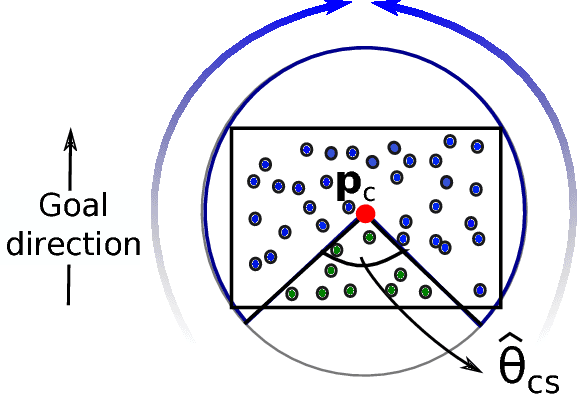

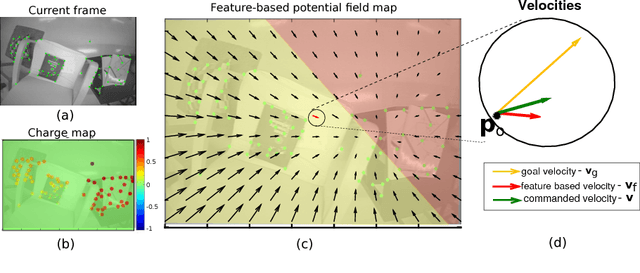

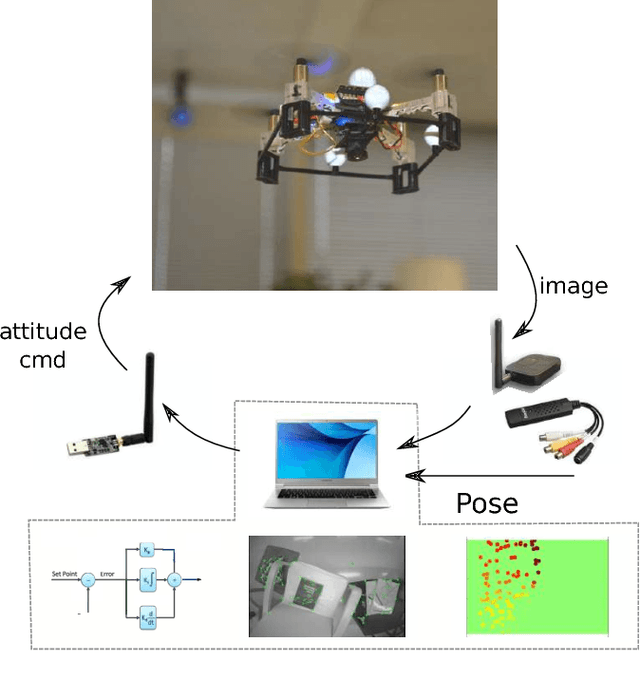

Low-level Active Visual Navigation: Increasing robustness of vision-based localization using potential fields

Mar 23, 2018

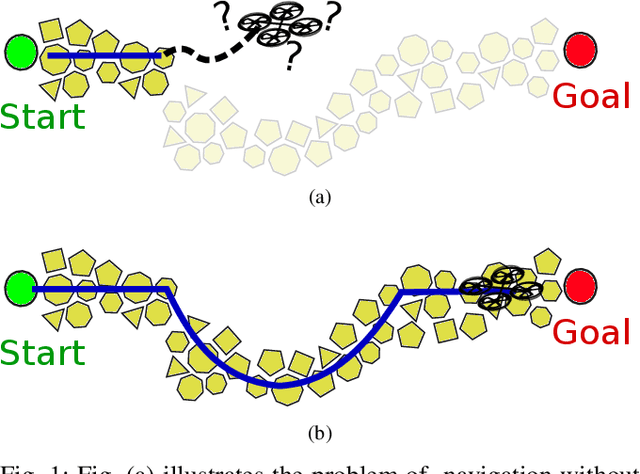

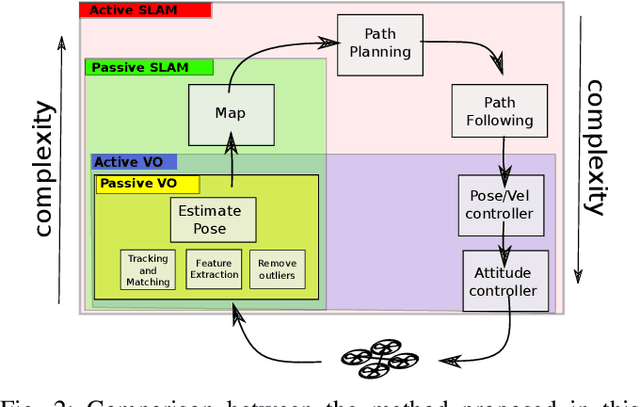

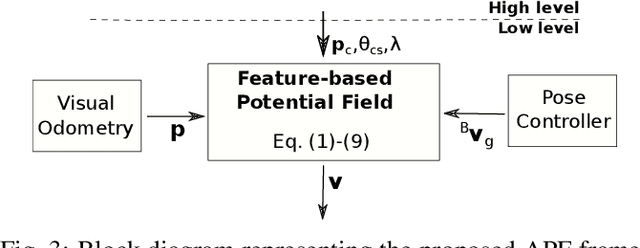

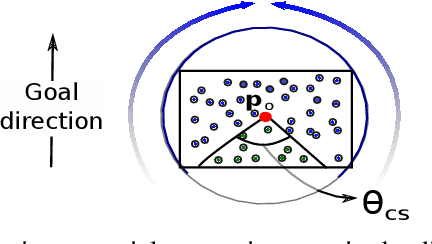

Abstract: This paper proposes a low-level visual navigation algorithm to improve visual localization of a mobile robot. The algorithm, based on artificial potential fields, associates each feature in the current image frame with an attractive or neutral potential energy, with the objective of generating a control action that drives the vehicle towards the goal, while still favoring feature-rich areas within a local scope, thus improving the localization performance. One key property of the proposed method is that it does not rely on mapping, and therefore it is a lightweight solution that can be deployed on miniaturized aerial robots, in which memory and computational power are major constraints. Simulations and real experimental results using a mini quadrotor equipped with a downward-looking camera demonstrate that the proposed method can effectively drive the vehicle to a designated goal through a path that prevents localization failure.
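The idea of combining a goal attraction with attractive or neutral features can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's controller: the planar setting, the linear (quadratic-potential) attraction, the gains `k_goal` and `k_feat`, the `radius` that defines the local scope, and the function name are all hypothetical.

```python
import math


def potential_field_velocity(position, goal, features,
                             k_goal=1.0, k_feat=0.5, radius=2.0):
    """Return a 2D velocity command from goal and feature attraction."""
    px, py = position
    # Attractive term pulling towards the goal (gradient of a quadratic potential).
    vx = k_goal * (goal[0] - px)
    vy = k_goal * (goal[1] - py)
    for fx, fy in features:
        dx, dy = fx - px, fy - py
        dist = math.hypot(dx, dy)
        # Features outside the local scope (or exactly at the robot) are neutral.
        if dist == 0.0 or dist > radius:
            continue
        # Nearby features add a pull that biases the path towards them.
        vx += k_feat * dx
        vy += k_feat * dy
    return vx, vy


# Goal straight ahead, one nearby feature to the side, one distant feature.
print(potential_field_velocity((0.0, 0.0), (1.0, 0.0), [(0.0, 1.0), (0.0, 5.0)]))
```

Only the current position, the goal, and the features visible in the current frame are needed, so no map is stored, consistent with the mapping-free property described in the abstract.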

Feature Based Potential Field for Low-level Active Visual Navigation

Sep 14, 2017

Abstract: This paper proposes a novel solution for improving visual localization in an active fashion. The solution, based on an artificial potential field, associates each feature in the current image frame with an attractive or neutral potential energy. The resultant action drives the vehicle towards the goal, while still favoring feature-rich areas. Experimental results with a mini quadrotor equipped with a downward-looking camera assess the performance of the proposed method.
