Qiurui He

STT: Stateful Tracking with Transformers for Autonomous Driving

Apr 30, 2024

Abstract:Tracking objects in three-dimensional space is critical for autonomous driving. To ensure safety while driving, the tracker must be able to reliably track objects across frames and accurately estimate their states such as velocity and acceleration in the present. Existing works frequently focus on the association task while either neglecting the model performance on state estimation or deploying complex heuristics to predict the states. In this paper, we propose STT, a Stateful Tracking model built with Transformers, that can consistently track objects in the scenes while also predicting their states accurately. STT consumes rich appearance, geometry, and motion signals through long term history of detections and is jointly optimized for both data association and state estimation tasks. Since the standard tracking metrics like MOTA and MOTP do not capture the combined performance of the two tasks in the wider spectrum of object states, we extend them with new metrics called S-MOTA and MOTPS that address this limitation. STT achieves competitive real-time performance on the Waymo Open Dataset.
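For readers unfamiliar with the baseline metrics named in the abstract, the following is a minimal sketch of the standard CLEAR MOT definitions of MOTA and MOTP. It does not implement the paper's S-MOTA or MOTPS extensions, whose definitions are not given here. The function names and the use of a per-match error list are assumptions made for illustration.

```python
def mota(false_negatives, false_positives, id_switches, num_ground_truth):
    """Standard MOTA: 1 - (FN + FP + IDSW) / GT, accumulated over all frames.

    The argument names are illustrative, not taken from the paper.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_ground_truth


def motp(match_errors):
    """Standard MOTP: mean localization error over all matched pairs.

    `match_errors` holds one error value (e.g. a distance) per matched
    ground-truth/prediction pair; this input format is an assumption.
    """
    if not match_errors:
        raise ValueError("at least one matched pair is required")
    return sum(match_errors) / len(match_errors)
```

Neither quantity looks at predicted velocity or acceleration, which is the gap the abstract says the extended metrics are meant to address.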

MyStyle: A Personalized Generative Prior

Mar 31, 2022

Abstract:We introduce MyStyle, a personalized deep generative prior trained with a few shots of an individual. MyStyle allows for the reconstruction, enhancement, and editing of images of a specific person, such that the output is faithful to the person's key facial characteristics. Given a small reference set of portrait images of a person (~100), we tune the weights of a pretrained StyleGAN face generator to form a local, low-dimensional, personalized manifold in the latent space. We show that this manifold constitutes a personalized region that spans latent codes associated with diverse portrait images of the individual. Moreover, we demonstrate that we obtain a personalized generative prior, and propose a unified approach to apply it to various ill-posed image enhancement problems, such as inpainting and super-resolution, as well as semantic editing. Using the personalized generative prior we obtain outputs that exhibit high fidelity to the input images and are also faithful to the key facial characteristics of the individual in the reference set. We demonstrate our method with fair-use images of numerous widely recognizable individuals for whom we have the prior knowledge for a qualitative evaluation of the expected outcome. We evaluate our approach against few-shot baselines and show that our personalized prior, quantitatively and qualitatively, outperforms state-of-the-art alternatives.

Single-Image Lens Flare Removal

Nov 26, 2020

Abstract:Lens flare is a common artifact in photographs occurring when the camera is pointed at a strong light source. It is caused by either multiple reflections within the lens or scattering due to scratches or dust on the lens, and may appear in a wide variety of patterns: halos, streaks, color bleeding, haze, etc. The diversity in its appearance makes flare removal extremely challenging. Existing software methods make strong assumptions about the artifacts' geometry or brightness, and thus only handle a small subset of flares. We take a principled approach to explicitly model the optical causes of flare, which leads to a novel semi-synthetic pipeline for generating flare-corrupted images from both empirical and wave-optics-simulated lens flares. Using the semi-synthetic data generated by this pipeline, we build a neural network to remove lens flare. Experiments show that our model generalizes well to real lens flares captured by different devices, and outperforms state-of-the-art methods by 3dB in PSNR.
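To put the reported 3dB PSNR gain in context, here is a small sketch of the standard PSNR definition and the mean-squared-error ratio that a given gain implies. The function names and the default peak value of 1.0 are assumptions for illustration, not details from the paper.

```python
import math


def psnr(mse, max_value=1.0):
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    if mse <= 0:
        raise ValueError("mse must be positive")
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks when PSNR rises by `gain_db` decibels."""
    return 10.0 ** (gain_db / 10.0)
```

Under this definition, `mse_ratio_for_gain(3.0)` is roughly 2, so a 3dB improvement corresponds to about half the mean squared error.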

Learning to Autofocus

May 02, 2020

Abstract:Autofocus is an important task for digital cameras, yet current approaches often exhibit poor performance. We propose a learning-based approach to this problem, and provide a realistic dataset of sufficient size for effective learning. Our dataset is labeled with per-pixel depths obtained from multi-view stereo, following "Learning single camera depth estimation using dual-pixels". Using this dataset, we apply modern deep classification models and an ordinal regression loss to obtain an efficient learning-based autofocus technique. We demonstrate that our approach provides a significant improvement compared with previous learned and non-learned methods: our model reduces the mean absolute error by a factor of 3.6 over the best comparable baseline algorithm. Our dataset and code are publicly available.

Handheld Mobile Photography in Very Low Light

Oct 24, 2019

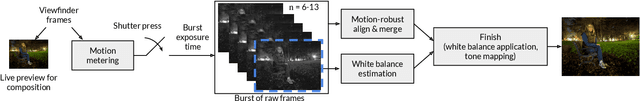

Abstract:Taking photographs in low light using a mobile phone is challenging and rarely produces pleasing results. Aside from the physical limits imposed by read noise and photon shot noise, these cameras are typically handheld, have small apertures and sensors, use mass-produced analog electronics that cannot easily be cooled, and are commonly used to photograph subjects that move, like children and pets. In this paper we describe a system for capturing clean, sharp, colorful photographs in light as low as 0.3 lux, where human vision becomes monochromatic and indistinct. To permit handheld photography without flash illumination, we capture, align, and combine multiple frames. Our system employs "motion metering", which uses an estimate of motion magnitudes (whether due to handshake or moving objects) to identify the number of frames and the per-frame exposure times that together minimize both noise and motion blur in a captured burst. We combine these frames using robust alignment and merging techniques that are specialized for high-noise imagery. To ensure accurate colors in such low light, we employ a learning-based auto white balancing algorithm. To prevent the photographs from looking like they were shot in daylight, we use tone mapping techniques inspired by illusionistic painting: increasing contrast, crushing shadows to black, and surrounding the scene with darkness. All of these processes are performed using the limited computational resources of a mobile device. Our system can be used by novice photographers to produce shareable pictures in a few seconds based on a single shutter press, even in environments so dim that humans cannot see clearly.

* 22 pages, 27 figures
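The abstract above describes combining multiple frames to cope with noise. As a rough illustration of why that helps, the sketch below simulates plain averaging of perfectly aligned frames with independent Gaussian noise, where the noise standard deviation falls by about the square root of the frame count. This is a simplification and not the paper's robust merging method; the function name, noise model, and parameters are all assumptions.

```python
import random
import statistics


def simulate_noise_reduction(num_frames, noise_std=1.0, num_pixels=20000, seed=0):
    """Return the ratio of single-frame noise to averaged-burst noise.

    Assumes zero-mean, independent Gaussian noise per frame and perfect
    alignment, which a real handheld burst does not provide.
    """
    rng = random.Random(seed)
    # Noise in one frame, one sample per pixel.
    single = [rng.gauss(0.0, noise_std) for _ in range(num_pixels)]
    # Noise left after averaging `num_frames` independent frames per pixel.
    merged = [
        sum(rng.gauss(0.0, noise_std) for _ in range(num_frames)) / num_frames
        for _ in range(num_pixels)
    ]
    return statistics.pstdev(single) / statistics.pstdev(merged)
```

For example, `simulate_noise_reduction(16)` should come out close to 4, the square root of 16. Motion between frames breaks the alignment assumption, which is why the abstract pairs merging with motion metering and robust alignment.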

MoSculp: Interactive Visualization of Shape and Time

Sep 14, 2018

Abstract:We present a system that allows users to visualize complex human motion via 3D motion sculptures, a representation that conveys the 3D structure swept by a human body as it moves through space. Given an input video, our system computes the motion sculptures and provides a user interface for rendering them in different styles, including the options to insert the sculpture back into the original video, render it in a synthetic scene or physically print it. To provide this end-to-end workflow, we introduce an algorithm that estimates the human's 3D geometry over time from a set of 2D images and develop a 3D-aware image-based rendering approach that embeds the sculpture back into the scene. By automating the process, our system takes motion sculpture creation out of the realm of professional artists, and makes it applicable to a wide range of existing video material. By providing viewers with 3D information, motion sculptures reveal space-time motion information that is difficult to perceive with the naked eye, and allow viewers to interpret how different parts of the object interact over time. We validate the effectiveness of this approach with user studies, finding that our motion sculpture visualizations are significantly more informative about motion than existing stroboscopic and space-time visualization methods.
