Phong Nguyen

Semise: Semi-supervised learning for severity representation in medical image

Jan 07, 2025

Abstract:This paper introduces SEMISE, a novel method for representation learning in medical imaging that combines self-supervised and supervised learning. By leveraging both labeled and augmented data, SEMISE addresses the challenge of data scarcity and enhances the encoder's ability to extract meaningful features. This integrated approach leads to more informative representations, improving performance on downstream tasks. As a result, our approach achieved a 12% improvement in classification and a 3% improvement in segmentation, outperforming existing methods. These results demonstrate the potential of SEMISE to advance medical image analysis and offer more accurate solutions for healthcare applications, particularly in contexts where labeled data is limited.

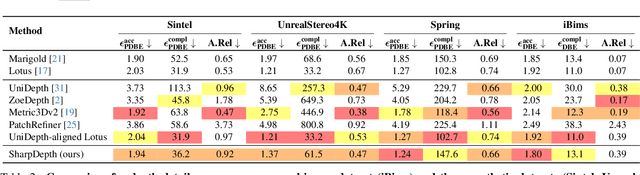

SharpDepth: Sharpening Metric Depth Predictions Using Diffusion Distillation

Nov 27, 2024

Abstract:We propose SharpDepth, a novel approach to monocular metric depth estimation that combines the metric accuracy of discriminative depth estimation methods (e.g., Metric3D, UniDepth) with the fine-grained boundary sharpness typically achieved by generative methods (e.g., Marigold, Lotus). Traditional discriminative models trained on real-world data with sparse ground-truth depth can accurately predict metric depth but often produce over-smoothed or low-detail depth maps. Generative models, in contrast, are trained on synthetic data with dense ground truth, generating depth maps with sharp boundaries yet only providing relative depth with low accuracy. Our approach bridges these limitations by integrating metric accuracy with detailed boundary preservation, resulting in depth predictions that are both metrically precise and visually sharp. Our extensive zero-shot evaluations on standard depth estimation benchmarks confirm SharpDepth's effectiveness, showing its ability to achieve both high depth accuracy and detailed representation, making it well-suited for applications requiring high-quality depth perception across diverse, real-world environments.

Design and Implementation of Smart Infrastructures and Connected Vehicles in A Mini-city Platform

Aug 08, 2024

Abstract:This paper presents a 1/10th scale mini-city platform used as a testbed for evaluating autonomous and connected vehicles. Using the mini-city platform, we can evaluate different driving scenarios, including human-driven and autonomous driving. We provide a unique, visual feature-rich environment for evaluating computer vision methods. The conducted experiments use onboard sensors mounted on a robotic platform we built, allowing it to navigate in a controlled real-world urban environment. The designed city is occupied by cars, stop signs, a variety of residential and business buildings, and complex intersections mimicking an urban area. Furthermore, we have designed an intelligent infrastructure at one of the intersections in the city, which supports safer and more efficient navigation in the presence of multiple cars and pedestrians. We have used the mini-city platform for the analysis of three different applications: city mapping, depth estimation in challenging occluded environments, and smart infrastructure for connected vehicles. Our smart infrastructure is among the first to develop and evaluate Vehicle-to-Infrastructure (V2I) communication at intersections. The intersection-related result shows how inaccuracy in perception, including mapping and localization, can affect safety. The proposed mini-city platform can be considered a baseline environment for developing research and education in intelligent transportation systems.

Nemotron-4 340B Technical Report

Jun 17, 2024

Abstract:We release the Nemotron-4 340B model family, including Nemotron-4-340B-Base, Nemotron-4-340B-Instruct, and Nemotron-4-340B-Reward. Our models are open access under the NVIDIA Open Model License Agreement, a permissive model license that allows distribution, modification, and use of the models and their outputs. These models perform competitively with open access models on a wide range of evaluation benchmarks, and were sized to fit on a single DGX H100 with 8 GPUs when deployed in FP8 precision. We believe that the community can benefit from these models in various research studies and commercial applications, especially for generating synthetic data to train smaller language models. Notably, over 98% of data used in our model alignment process is synthetically generated, showcasing the effectiveness of these models in generating synthetic data. To further support open research and facilitate model development, we are also open-sourcing the synthetic data generation pipeline used in our model alignment process.
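The single-node sizing claim can be sanity-checked with back-of-the-envelope arithmetic. The sketch below counts weight memory only and assumes 80 GB of memory per GPU and a nominal 340 billion parameters. Neither figure is stated in the abstract, and KV cache, activations, and runtime overhead are ignored, so treat it as a rough plausibility check rather than a deployment calculation. All names are illustrative.

```python
# Rough weight-memory check: does a 340B-parameter model fit on one 8-GPU node?
# Assumptions (not from the abstract): 80 GB per GPU, weights only,
# no KV cache, activations, or framework overhead.

NUM_PARAMS = 340e9          # nominal parameter count
GPUS_PER_NODE = 8           # one DGX H100, as stated in the abstract
GB_PER_GPU = 80             # assumed per-GPU memory capacity
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_node(num_params: float, precision: str) -> bool:
    """True if the weights alone fit in the node's total GPU memory."""
    return weight_memory_gb(num_params, precision) <= GPUS_PER_NODE * GB_PER_GPU


for precision in BYTES_PER_PARAM:
    need = weight_memory_gb(NUM_PARAMS, precision)
    print(f"{precision}: {need:.0f} GB needed, fits={fits_on_node(NUM_PARAMS, precision)}")
```

Under these assumptions the FP8 weights take about 340 GB of the node's 640 GB, while 16-bit weights would need about 680 GB and would not fit.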

DiverseDream: Diverse Text-to-3D Synthesis with Augmented Text Embedding

Dec 02, 2023

Abstract:Text-to-3D synthesis has recently emerged as a new approach to sampling 3D models by adopting pretrained text-to-image models as guiding visual priors. An intriguing but underexplored problem with existing text-to-3D methods is that 3D models obtained from the sampling-by-optimization procedure tend to have mode collapses, and hence poor diversity in their results. In this paper, we provide an analysis and identify potential causes of such limited diversity, and then devise a new method that considers the joint generation of different 3D models from the same text prompt, where we propose to use augmented text prompts via textual inversion of reference images to diversify the joint generation. We show that our method leads to improved diversity in text-to-3D synthesis qualitatively and quantitatively.

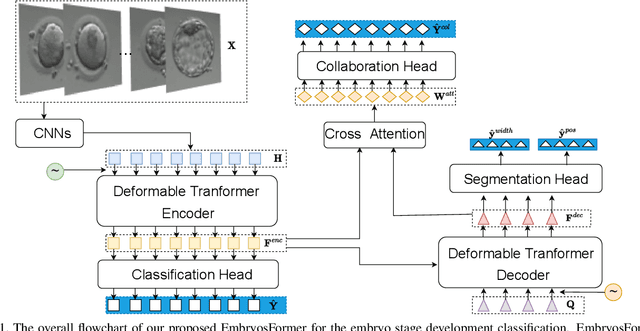

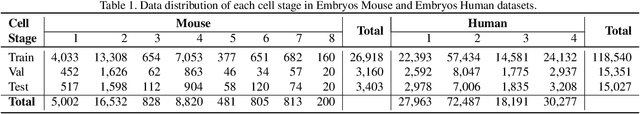

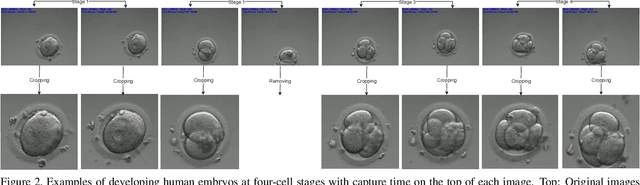

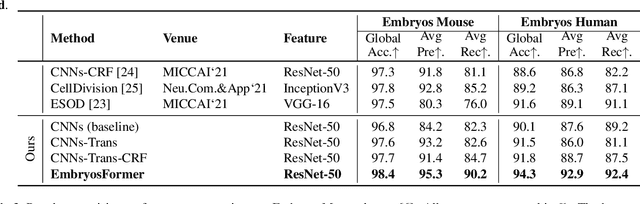

EmbryosFormer: Deformable Transformer and Collaborative Encoding-Decoding for Embryos Stage Development Classification

Oct 07, 2022

Abstract:The timing of cell divisions in early embryos during the In-Vitro Fertilization (IVF) process is a key predictor of embryo viability. However, observing cell divisions in Time-Lapse Monitoring (TLM) is a time-consuming process and highly depends on experts. In this paper, we propose EmbryosFormer, a computational model to automatically detect and classify cell divisions from original time-lapse images. Our proposed network is designed as an encoder-decoder deformable transformer with collaborative heads. The transformer contracting path predicts per-image labels and is optimized by a classification head. The transformer expanding path models the temporal coherency between embryo images to enforce a monotonic non-decreasing constraint and is optimized by a segmentation head. Both contracting and expanding paths are synergetically learned by a collaboration head. We have benchmarked our proposed EmbryosFormer on two datasets: a public dataset with mouse embryos with 8-cell stage and an in-house dataset with human embryos with 4-cell stage. Source code: https://github.com/UARK-AICV/Embryos.
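To make the monotonic non-decreasing constraint concrete: the development stage assigned to a later frame should never be lower than the stage assigned to an earlier one. The sketch below is not the EmbryosFormer mechanism, which learns temporal coherency inside the transformer. It is a simple running-maximum post-process over hypothetical per-frame labels, shown only to illustrate what the constraint means. The function name and sample data are assumptions.

```python
from itertools import accumulate


def enforce_non_decreasing(stages: list[int]) -> list[int]:
    """Replace each label with the running maximum so stages never go backwards."""
    return list(accumulate(stages, max))


# Hypothetical per-frame predictions (cell counts) containing two regressions.
raw = [1, 1, 2, 1, 2, 4, 3, 4]
print(enforce_non_decreasing(raw))  # [1, 1, 2, 2, 2, 4, 4, 4]
```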

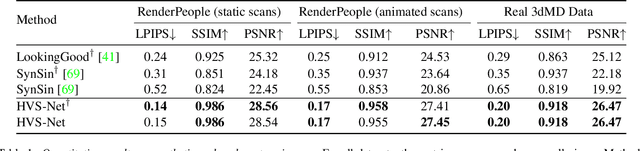

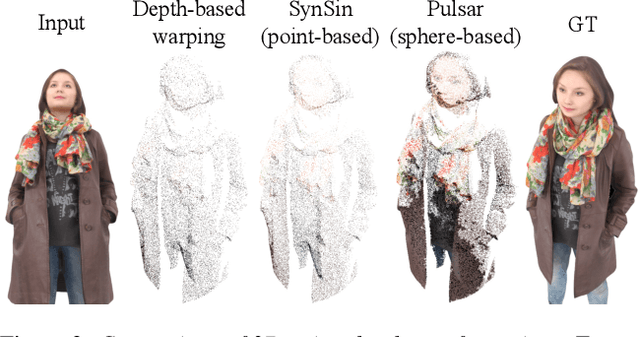

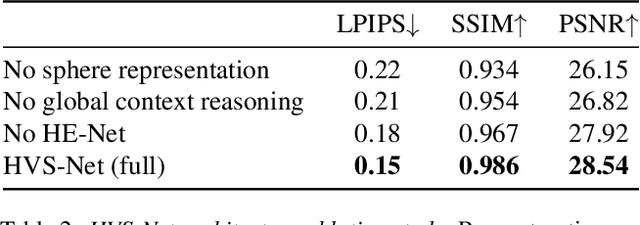

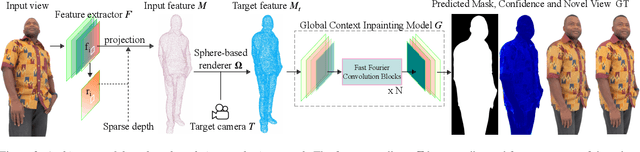

Human View Synthesis using a Single Sparse RGB-D Input

Dec 30, 2021

Abstract:Novel view synthesis for humans in motion is a challenging computer vision problem that enables applications such as free-viewpoint video. Existing methods typically use complex setups with multiple input views, 3D supervision, or pre-trained models that do not generalize well to new identities. Aiming to address these limitations, we present a novel view synthesis framework to generate realistic renders from unseen views of any human captured from a single-view sensor with sparse RGB-D, similar to a low-cost depth camera, and without actor-specific models. We propose an architecture to learn dense features in novel views obtained by sphere-based neural rendering, and create complete renders using a global context inpainting model. Additionally, an enhancer network improves the overall fidelity, even in areas occluded in the original view, producing crisp renders with fine details. We show our method generates high-quality novel views of synthetic and real human actors given a single sparse RGB-D input. It generalizes to unseen identities and new poses, and faithfully reconstructs facial expressions. Our approach outperforms prior human view synthesis methods and is robust to different levels of input sparsity.

SP-GPT2: Semantics Improvement in Vietnamese Poetry Generation

Oct 10, 2021

Abstract:Automatic text generation has garnered growing attention in recent years as an essential step towards computer creativity. Generative Pretraining Transformer 2 (GPT2) is one of the state-of-the-art approaches and has achieved excellent results. In this paper, we take the first step in investigating the power of GPT2 for traditional Vietnamese poetry generation. In our earlier experiments, the base GPT2 was quite good at generating poems in the proper template. Though it can learn the patterns, including rhyme and tone rules, from the training data, the generated poems still suffer from topic drift and semantic inconsistency, as with almost all other text generation approaches. To improve the cohesion within the poems, we propose a new model, SP-GPT2 (semantic poem GPT2), which is built on top of the GPT2 model with an additional loss to constrain context throughout the entire poem. For better evaluation, we examined the methods with both automatic quantitative evaluation and human evaluation. Both demonstrated that our approach can generate poems that have better cohesion without losing quality because of the additional loss. We are also pioneers on this topic. We released the first computational scoring module for poems generated in the template, containing the style rule dictionary. Additionally, we are the first to publish a Luc-Bat dataset, comprising 87609 Luc Bat poems (equivalent to about 2.6 million sentences); about 83579 poems in other styles were also published for further exploration. The code is available at https://github.com/fsoft-ailab/Poem-Generator

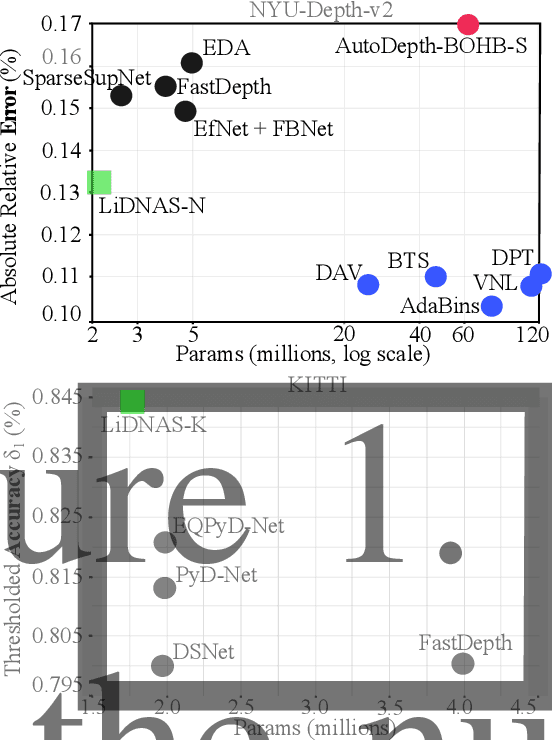

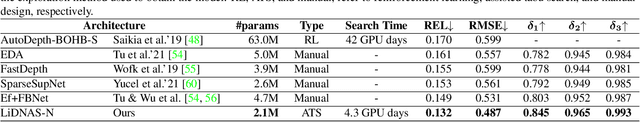

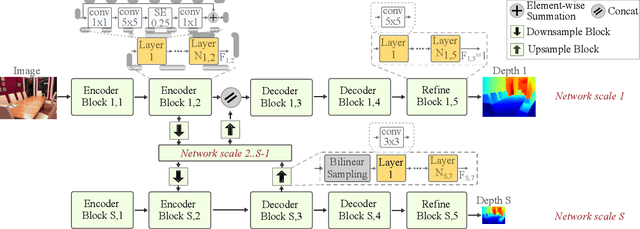

Lightweight Monocular Depth with a Novel Neural Architecture Search Method

Aug 25, 2021

Abstract:This paper presents a novel neural architecture search method, called LiDNAS, for generating lightweight monocular depth estimation models. Unlike previous neural architecture search (NAS) approaches, where finding optimized networks is computationally highly demanding, the introduced novel Assisted Tabu Search leads to efficient architecture exploration. Moreover, we construct the search space on a pre-defined backbone network to balance layer diversity and search space size. The LiDNAS method outperforms the state-of-the-art NAS approach, proposed for disparity and depth estimation, in terms of search efficiency and output model performance. The LiDNAS optimized models achieve results superior to compact depth estimation state-of-the-art on NYU-Depth-v2, KITTI, and ScanNet, while being 7%-500% more compact in size, i.e., the number of model parameters.
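For readers unfamiliar with tabu search, the following is a minimal sketch of the generic textbook technique on a toy problem. It is not the Assisted Tabu Search introduced in the paper, whose details the abstract does not give. The search space (tuples of per-layer widths), the cost function, and all names are hypothetical stand-ins chosen only to make the example runnable.

```python
from collections import deque


def tabu_search(start, cost, neighbors, iterations=50, tabu_size=5):
    """Minimize `cost` with a plain tabu search.

    At each step, move to the best neighbor that is not on the tabu list,
    even if it is worse than the current point, and remember the best seen.
    """
    current = best = start
    tabu = deque([start], maxlen=tabu_size)  # short-term memory of visited points
    for _ in range(iterations):
        candidates = [n for n in neighbors(current) if n not in tabu]
        if not candidates:
            break  # every neighbor is tabu; stop early
        current = min(candidates, key=cost)
        tabu.append(current)
        if cost(current) < cost(best):
            best = current
    return best


# Toy search space (hypothetical): an architecture is a tuple of per-layer
# widths, and the cost is its distance from an arbitrary target.
WIDTHS = (16, 32, 64)
TARGET = (32, 64, 16)


def cost(arch):
    return sum(abs(a - t) for a, t in zip(arch, TARGET))


def neighbors(arch):
    """All architectures that differ from `arch` in exactly one layer."""
    return [
        arch[:i] + (w,) + arch[i + 1:]
        for i in range(len(arch))
        for w in WIDTHS
        if w != arch[i]
    ]


print(tabu_search((16, 16, 16), cost, neighbors))
```

The tabu list is what distinguishes this from greedy local search: recently visited points are excluded, so the search can step through worse candidates instead of cycling back to a local optimum.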

Monocular Depth Estimation Primed by Salient Point Detection and Normalized Hessian Loss

Aug 25, 2021

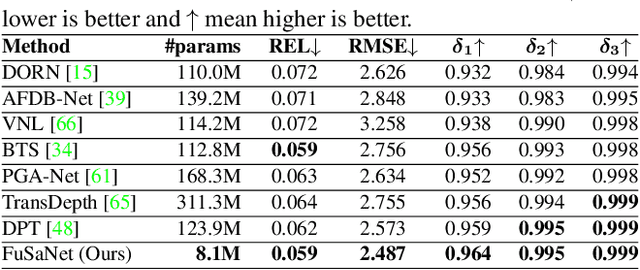

Abstract:Deep neural networks have recently thrived on single image depth estimation. That being said, current developments on this topic highlight an apparent compromise between accuracy and network size. This work proposes an accurate and lightweight framework for monocular depth estimation based on a self-attention mechanism stemming from salient point detection. Specifically, we utilize a sparse set of keypoints to train a FuSaNet model that consists of two major components: Fusion-Net and Saliency-Net. In addition, we introduce a normalized Hessian loss term invariant to scaling and shear along the depth direction, which is shown to substantially improve the accuracy. The proposed method achieves state-of-the-art results on NYU-Depth-v2 and KITTI while using a model that is 3.1-38.4 times smaller, in terms of the number of parameters, than baseline approaches. Experiments on SUN-RGBD further demonstrate the generalizability of the proposed method.
