Naoto Yokoya

Experience-Driven Multi-Agent Systems Are Training-free Context-aware Earth Observers

Jan 30, 2026

Abstract:Recent advances have enabled large language model (LLM) agents to solve complex tasks by orchestrating external tools. However, these agents often struggle in specialized, tool-intensive domains that demand long-horizon execution, tight coordination across modalities, and strict adherence to implicit tool constraints. Earth Observation (EO) tasks exemplify this challenge due to the multi-modal and multi-temporal data inputs, as well as the requirements of geo-knowledge constraints (spectrum library, spatial reasoning, etc.): many high-level plans can be derailed by subtle execution errors that propagate through a pipeline and invalidate final results. A core difficulty is that existing agents lack a mechanism to learn fine-grained, tool-level expertise from interaction. Without such expertise, they cannot reliably configure tool parameters or recover from mid-execution failures, limiting their effectiveness in complex EO workflows. To address this, we introduce GeoEvolver, a self-evolving multi-agent system (MAS) that enables LLM agents to acquire EO expertise through structured interaction without any parameter updates. GeoEvolver decomposes each query into independent sub-goals via a retrieval-augmented multi-agent orchestrator, then explores diverse tool-parameter configurations at the sub-goal level. Successful patterns and root-cause attribution from failures are then distilled in an evolving memory bank that provides in-context demonstrations for future queries. Experiments on three tool-integrated EO benchmarks show that GeoEvolver consistently improves end-to-end task success, with an average gain of 12% across multiple LLM backbones, demonstrating that EO expertise can emerge progressively from efficient, fine-grained interactions with the environment.

The Confidence Dichotomy: Analyzing and Mitigating Miscalibration in Tool-Use Agents

Jan 12, 2026

Abstract:Autonomous agents based on large language models (LLMs) are rapidly evolving to handle multi-turn tasks, but ensuring their trustworthiness remains a critical challenge. A fundamental pillar of this trustworthiness is calibration, which refers to an agent's ability to express confidence that reliably reflects its actual performance. While calibration is well-established for static models, its dynamics in tool-integrated agentic workflows remain underexplored. In this work, we systematically investigate verbalized calibration in tool-use agents, revealing a fundamental confidence dichotomy driven by tool type. Specifically, our pilot study identifies that evidence tools (e.g., web search) systematically induce severe overconfidence due to inherent noise in retrieved information, while verification tools (e.g., code interpreters) can ground reasoning through deterministic feedback and mitigate miscalibration. To robustly improve calibration across tool types, we propose a reinforcement learning (RL) fine-tuning framework that jointly optimizes task accuracy and calibration, supported by a holistic benchmark of reward designs. We demonstrate that our trained agents not only achieve superior calibration but also exhibit robust generalization from local training environments to noisy web settings and to distinct domains such as mathematical reasoning. Our results highlight the necessity of domain-specific calibration strategies for tool-use agents. More broadly, this work establishes a foundation for building self-aware agents that can reliably communicate uncertainty in high-stakes, real-world deployments.
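The notion of calibration described above (stated confidence matching actual accuracy) is often summarized with a binned gap between confidence and accuracy. The following is a minimal sketch of one common such measure, expected calibration error. The abstract does not say which metric the authors use, so the function name `expected_calibration_error`, the ten equal-width bins, and the sample values are all assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Weighted average of |mean confidence - accuracy| over equal-width bins.

    Hypothetical helper: confidences are verbalized probabilities in [0, 1],
    and correct[i] says whether answer i was right.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        return 0.0

    # Assign each prediction to a bin; confidence 1.0 falls into the last bin.
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / n) * abs(avg_conf - accuracy)
    return ece


# Illustrative (made-up) data: an agent that says 0.9 but is right 3 times in 4.
overconfident = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, True, False])
```

A value near zero means stated confidence tracks accuracy; the overconfident pattern the abstract attributes to evidence tools would show up as mean confidence well above accuracy in the high-confidence bins.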

Geo3DVQA: Evaluating Vision-Language Models for 3D Geospatial Reasoning from Aerial Imagery

Dec 21, 2025

Abstract:Three-dimensional geospatial analysis is critical for applications in urban planning, climate adaptation, and environmental assessment. However, current methodologies depend on costly, specialized sensors, such as LiDAR and multispectral sensors, which restrict global accessibility. Additionally, existing sensor-based and rule-driven methods struggle with tasks requiring the integration of multiple 3D cues, handling diverse queries, and providing interpretable reasoning. We present Geo3DVQA, a comprehensive benchmark that evaluates vision-language models (VLMs) in height-aware 3D geospatial reasoning from RGB imagery alone. Unlike conventional sensor-based frameworks, Geo3DVQA emphasizes realistic scenarios integrating elevation, sky view factors, and land cover patterns. The benchmark comprises 110k curated question-answer pairs across 16 task categories, including single-feature inference, multi-feature reasoning, and application-level analysis. Through a systematic evaluation of ten state-of-the-art VLMs, we reveal fundamental limitations in RGB-to-3D spatial reasoning. Our results further show that domain-specific instruction tuning consistently enhances model performance across all task categories, including height-aware and open-ended, application-oriented reasoning. Geo3DVQA provides a unified, interpretable framework for evaluating RGB-based 3D geospatial reasoning and identifies key challenges and opportunities for scalable 3D spatial analysis. The code and data are available at https://github.com/mm1129/Geo3DVQA.

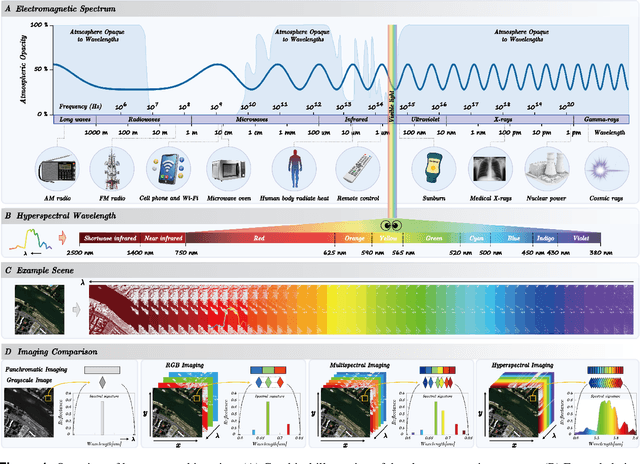

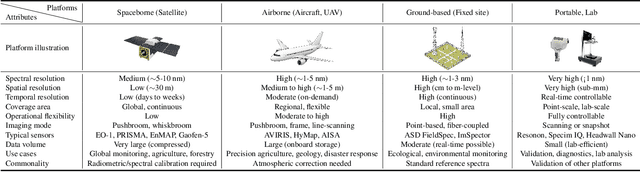

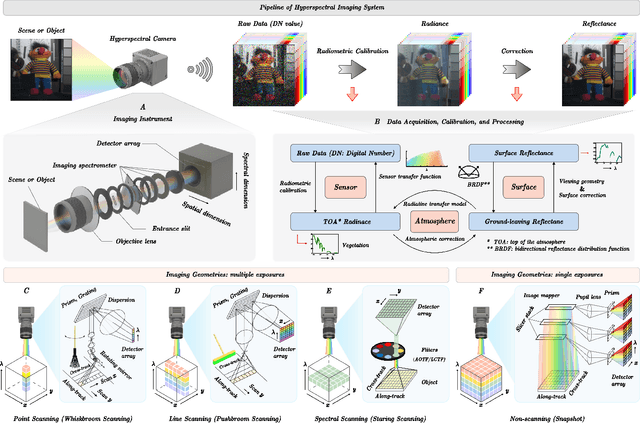

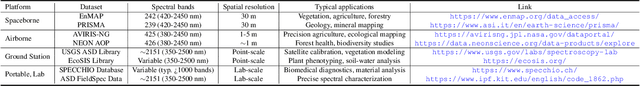

Hyperspectral Imaging

Aug 11, 2025

Abstract:Hyperspectral imaging (HSI) is an advanced sensing modality that simultaneously captures spatial and spectral information, enabling non-invasive, label-free analysis of material, chemical, and biological properties. This Primer presents a comprehensive overview of HSI, from the underlying physical principles and sensor architectures to key steps in data acquisition, calibration, and correction. We summarize common data structures and highlight classical and modern analysis methods, including dimensionality reduction, classification, spectral unmixing, and AI-driven techniques such as deep learning. Representative applications across Earth observation, precision agriculture, biomedicine, industrial inspection, cultural heritage, and security are also discussed, emphasizing HSI's ability to uncover sub-visual features for advanced monitoring, diagnostics, and decision-making. Persistent challenges, such as hardware trade-offs, acquisition variability, and the complexity of high-dimensional data, are examined alongside emerging solutions, including computational imaging, physics-informed modeling, cross-modal fusion, and self-supervised learning. Best practices for dataset sharing, reproducibility, and metadata documentation are further highlighted to support transparency and reuse. Looking ahead, we explore future directions toward scalable, real-time, and embedded HSI systems, driven by sensor miniaturization, self-supervised learning, and foundation models. As HSI evolves into a general-purpose, cross-disciplinary platform, it holds promise for transformative applications in science, technology, and society.

DynamicVL: Benchmarking Multimodal Large Language Models for Dynamic City Understanding

May 27, 2025

Abstract:Multimodal large language models have demonstrated remarkable capabilities in visual understanding, but their application to long-term Earth observation analysis remains limited, primarily focusing on single-temporal or bi-temporal imagery. To address this gap, we introduce DVL-Suite, a comprehensive framework for analyzing long-term urban dynamics through remote sensing imagery. Our suite comprises 15,063 high-resolution (1.0m) multi-temporal images spanning 42 megacities in the U.S. from 2005 to 2023, organized into two components: DVL-Bench and DVL-Instruct. The DVL-Bench includes seven urban understanding tasks, from fundamental change detection (pixel-level) to quantitative analyses (regional-level) and comprehensive urban narratives (scene-level), capturing diverse urban dynamics including expansion/transformation patterns, disaster assessment, and environmental challenges. We evaluate 17 state-of-the-art multimodal large language models and reveal their limitations in long-term temporal understanding and quantitative analysis. These challenges motivate the creation of DVL-Instruct, a specialized instruction-tuning dataset designed to enhance models' capabilities in multi-temporal Earth observation. Building upon this dataset, we develop DVLChat, a baseline model capable of both image-level question-answering and pixel-level segmentation, facilitating a comprehensive understanding of city dynamics through language interactions.

DisasterM3: A Remote Sensing Vision-Language Dataset for Disaster Damage Assessment and Response

May 27, 2025

Abstract:Large vision-language models (VLMs) have made great achievements in Earth vision. However, complex disaster scenes with diverse disaster types, geographic regions, and satellite sensors have posed new challenges for VLM applications. To fill this gap, we curate a remote sensing vision-language dataset (DisasterM3) for global-scale disaster assessment and response. DisasterM3 includes 26,988 bi-temporal satellite images and 123k instruction pairs across 5 continents, with three characteristics: 1) Multi-hazard: DisasterM3 involves 36 historical disaster events with significant impacts, which are categorized into 10 common natural and man-made disasters. 2) Multi-sensor: Extreme weather during disasters often hinders optical sensor imaging, making it necessary to combine Synthetic Aperture Radar (SAR) imagery for post-disaster scenes. 3) Multi-task: Based on real-world scenarios, DisasterM3 includes 9 disaster-related visual perception and reasoning tasks, harnessing the full potential of VLMs' reasoning ability by progressing from disaster-bearing body recognition to structural damage assessment and object relational reasoning, culminating in the generation of long-form disaster reports. We extensively evaluated 14 generic and remote sensing VLMs on our benchmark, revealing that state-of-the-art models struggle with the disaster tasks, largely due to the lack of a disaster-specific corpus, cross-sensor gap, and damage object counting insensitivity. Focusing on these issues, we fine-tune four VLMs using our dataset and achieve stable improvements across all tasks, with robust cross-sensor and cross-disaster generalization capabilities.

Seeing is Believing, but How Much? A Comprehensive Analysis of Verbalized Calibration in Vision-Language Models

May 26, 2025

Abstract:Uncertainty quantification is essential for assessing the reliability and trustworthiness of modern AI systems. Among existing approaches, verbalized uncertainty, where models express their confidence through natural language, has emerged as a lightweight and interpretable solution in large language models (LLMs). However, its effectiveness in vision-language models (VLMs) remains insufficiently studied. In this work, we conduct a comprehensive evaluation of verbalized confidence in VLMs, spanning three model categories, four task domains, and three evaluation scenarios. Our results show that current VLMs often display notable miscalibration across diverse tasks and settings. Notably, visual reasoning models (i.e., thinking with images) consistently exhibit better calibration, suggesting that modality-specific reasoning is critical for reliable uncertainty estimation. To further address calibration challenges, we introduce Visual Confidence-Aware Prompting, a two-stage prompting strategy that improves confidence alignment in multimodal settings. Overall, our study highlights the inherent miscalibration in VLMs across modalities. More broadly, our findings underscore the fundamental importance of modality alignment and model faithfulness in advancing reliable multimodal systems.

Enhancing Monocular Height Estimation via Sparse LiDAR-Guided Correction

May 11, 2025

Abstract:Monocular height estimation (MHE) from very-high-resolution (VHR) remote sensing imagery via deep learning is notoriously challenging due to the lack of sufficient structural information. Conventional digital elevation models (DEMs), typically derived from airborne LiDAR or multi-view stereo, remain costly and geographically limited. Recently, models trained on synthetic data and refined through domain adaptation have shown remarkable performance in MHE, yet it remains unclear how these models make predictions or how reliable they truly are. In this paper, we investigate a state-of-the-art MHE model trained purely on synthetic data to explore where the model looks when making height predictions. Through systematic analyses, we find that the model relies heavily on shadow cues, a factor that can lead to overestimation or underestimation of heights when shadows deviate from expected norms. Furthermore, the inherent difficulty of evaluating regression tasks with the human eye underscores additional limitations of purely synthetic training. To address these issues, we propose a novel correction pipeline that integrates sparse, imperfect global LiDAR measurements (ICESat-2) with deep-learning outputs to improve local accuracy and achieve spatially consistent corrections. Our method comprises two stages: pre-processing raw ICESat-2 data, followed by a random forest-based approach to densely refine height estimates. Experiments in three representative urban regions -- Saint-Omer, Tokyo, and Sao Paulo -- reveal substantial error reductions, with mean absolute error (MAE) decreased by 22.8%, 6.9%, and 4.9%, respectively. These findings highlight the critical role of shadow awareness in synthetic data-driven models and demonstrate how fusing imperfect real-world LiDAR data can bolster the robustness of MHE, paving the way for more reliable and scalable 3D mapping solutions.
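The error reductions quoted above are relative decreases in mean absolute error. As a rough sketch of how such a figure is computed, the snippet below evaluates MAE before and after a correction step and reports the percentage decrease. The helper names and all height values are hypothetical and chosen only for illustration; they are not the paper's data or its evaluation code.

```python
from typing import Sequence


def mean_absolute_error(predicted: Sequence[float], reference: Sequence[float]) -> float:
    """Average absolute difference between predicted and reference heights."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


def relative_reduction_percent(mae_before: float, mae_after: float) -> float:
    """Percentage by which the error dropped relative to the uncorrected MAE."""
    if mae_before <= 0:
        raise ValueError("mae_before must be positive")
    return 100.0 * (mae_before - mae_after) / mae_before


# Made-up heights in meters: reference values, raw model output, corrected output.
reference = [12.0, 18.0, 33.0]
raw = [10.0, 20.0, 30.0]
corrected = [11.0, 19.0, 32.0]

mae_raw = mean_absolute_error(raw, reference)
mae_corrected = mean_absolute_error(corrected, reference)
reduction = relative_reduction_percent(mae_raw, mae_corrected)
```

Because the reduction is relative to each region's own baseline error, the per-region percentages are not directly comparable as absolute error changes.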

Joint Super-Resolution and Segmentation for 1-m Impervious Surface Area Mapping in China's Yangtze River Economic Belt

May 08, 2025

Abstract:We propose a novel joint framework by integrating super-resolution and segmentation, called JointSeg, which enables the generation of 1-meter impervious surface area (ISA) maps directly from freely available Sentinel-2 imagery. JointSeg was trained on multimodal cross-resolution inputs, offering a scalable and affordable alternative to traditional approaches. This synergistic design enables gradual resolution enhancement from 10m to 1m while preserving fine-grained spatial textures, and ensures high classification fidelity through effective cross-scale feature fusion. This method has been successfully applied to the Yangtze River Economic Belt (YREB), a region characterized by complex urban-rural patterns and diverse topography. As a result, a comprehensive ISA mapping product for 2021, referred to as ISA-1, was generated, covering an area of over 2.2 million square kilometers. Quantitative comparisons against the 10m ESA WorldCover and other benchmark products reveal that ISA-1 achieves an F1-score of 85.71%, outperforming bilinear-interpolation-based segmentation by 9.5%, and surpassing other ISA datasets by 21.43%-61.07%. In densely urbanized areas (e.g., Suzhou, Nanjing), ISA-1 reduces ISA overestimation through improved discrimination of green spaces and water bodies. Conversely, in mountainous regions (e.g., Ganzi, Zhaotong), it identifies significantly more ISA due to its enhanced ability to detect fragmented anthropogenic features such as rural roads and sparse settlements, demonstrating its robustness across diverse landscapes. Moreover, we present biennial ISA maps from 2017 to 2023, capturing spatiotemporal urbanization dynamics across representative cities. The results highlight distinct regional growth patterns: rapid expansion in upstream cities, moderate growth in midstream regions, and saturation in downstream metropolitan areas.
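For readers less familiar with the F1-score quoted above, it is the harmonic mean of precision and recall for the positive class (here, impervious surface). The sketch below computes it from pixel counts. The function name `f1_from_counts` and the counts are assumptions for illustration; the abstract does not describe the authors' sampling or validation protocol.

```python
def f1_from_counts(true_positive: int, false_positive: int, false_negative: int) -> float:
    """F1-score for the positive class from confusion-matrix counts.

    Returns 0.0 when precision or recall is undefined or both are zero.
    """
    predicted_positive = true_positive + false_positive
    actual_positive = true_positive + false_negative
    if predicted_positive == 0 or actual_positive == 0:
        return 0.0
    precision = true_positive / predicted_positive
    recall = true_positive / actual_positive
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Made-up validation counts: 8 correctly mapped ISA pixels,
# 2 false alarms, and 2 missed ISA pixels.
example_f1 = f1_from_counts(true_positive=8, false_positive=2, false_negative=2)
```

Over-mapping ISA in dense cities raises false positives (lower precision), while missing rural roads raises false negatives (lower recall); F1 penalizes both.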

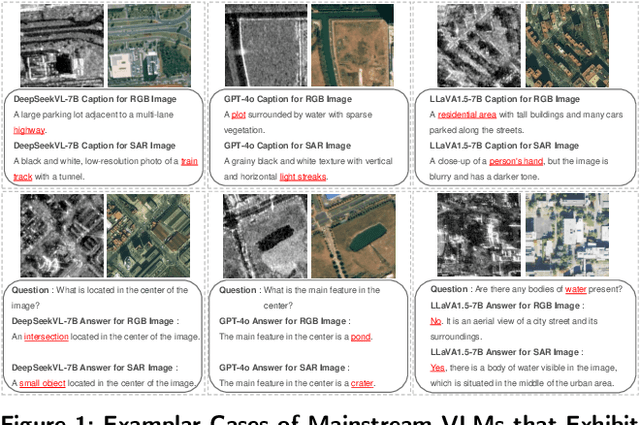

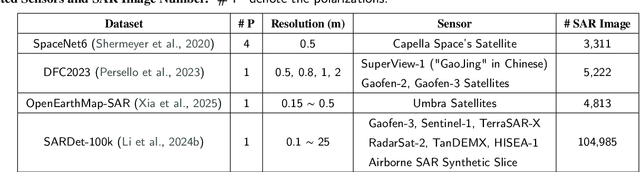

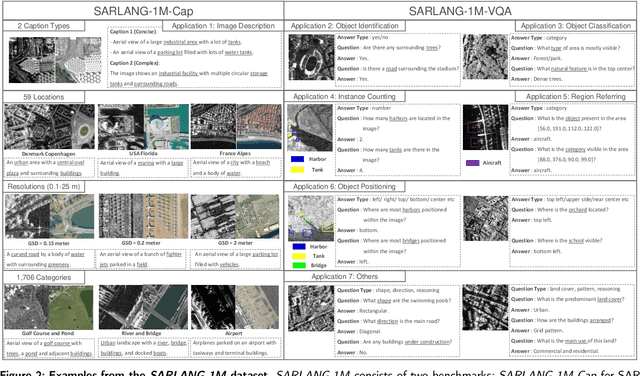

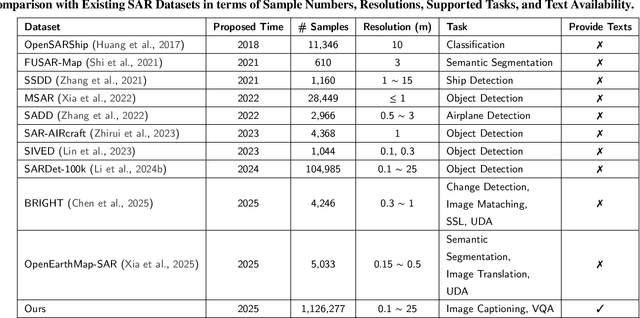

SARLANG-1M: A Benchmark for Vision-Language Modeling in SAR Image Understanding

Apr 04, 2025

Abstract:Synthetic Aperture Radar (SAR) is a crucial remote sensing technology, enabling all-weather, day-and-night observation with strong surface penetration for precise and continuous environmental monitoring and analysis. However, SAR image interpretation remains challenging due to its complex physical imaging mechanisms and significant visual disparities from human perception. Recently, Vision-Language Models (VLMs) have demonstrated remarkable success in RGB image understanding, offering powerful open-vocabulary interpretation and flexible language interaction. However, their application to SAR images is severely constrained by the absence of SAR-specific knowledge in their training distributions, leading to suboptimal performance. To address this limitation, we introduce SARLANG-1M, a large-scale benchmark tailored for multimodal SAR image understanding, with a primary focus on integrating SAR with textual modality. SARLANG-1M comprises more than 1 million high-quality SAR image-text pairs collected from over 59 cities worldwide. It features hierarchical resolutions (ranging from 0.1 to 25 meters), fine-grained semantic descriptions (including both concise and detailed captions), diverse remote sensing categories (1,696 object types and 16 land cover classes), and multi-task question-answering pairs spanning seven applications and 1,012 question types. Extensive experiments on mainstream VLMs demonstrate that fine-tuning with SARLANG-1M significantly enhances their performance in SAR image interpretation, reaching performance comparable to human experts. The dataset and code will be made publicly available at https://github.com/Jimmyxichen/SARLANG-1M.
