Luojun Lin

Distilling Vision-Language Foundation Models: A Data-Free Approach via Prompt Diversification

Jul 21, 2024

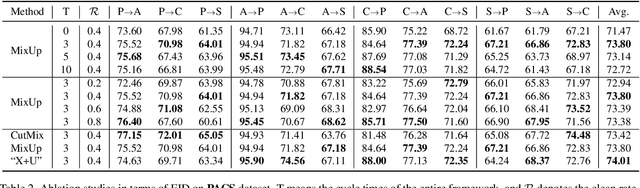

Abstract: Data-Free Knowledge Distillation (DFKD) has shown great potential in creating a compact student model while alleviating the dependency on real training data by synthesizing surrogate data. However, prior work has seldom been studied under distribution shifts, which may leave it vulnerable in real-world applications. Recent Vision-Language Foundation Models, e.g., CLIP, have demonstrated remarkable performance in zero-shot out-of-distribution generalization, yet they consume heavy computation resources. In this paper, we discuss the extension of DFKD to Vision-Language Foundation Models without access to the billion-level image-text datasets. The objective is to customize a student model for distribution-agnostic downstream tasks with given category concepts, inheriting the out-of-distribution generalization capability from the pre-trained foundation models. To avoid generalization degradation, the primary challenge of this task lies in synthesizing diverse surrogate images driven by text prompts. Since text prompts encode not only category concepts but also style information, we propose three novel Prompt Diversification methods to encourage image synthesis with diverse styles, namely Mix-Prompt, Random-Prompt, and Contrastive-Prompt. Experiments on out-of-distribution generalization datasets demonstrate the effectiveness of the proposed methods, with Contrastive-Prompt performing the best.

MEAT: Median-Ensemble Adversarial Training for Improving Robustness and Generalization

Jun 20, 2024

Abstract: Self-ensemble adversarial training methods improve model robustness by ensembling models at different training epochs, such as model weight averaging (WA). However, previous research has shown that self-ensemble defense methods in adversarial training (AT) still suffer from robust overfitting, which severely affects the generalization performance. Empirically, in the late phases of training, AT overfits to the extent that the individual models used for weight averaging also overfit and produce anomalous weight values. Because averaging fails to remove these weight anomalies, the self-ensemble model continues to undergo robust overfitting. To solve this problem, we tackle the influence of outliers in the weight space and propose an easy-to-operate and effective Median-Ensemble Adversarial Training (MEAT) method, which addresses the robust overfitting in self-ensemble defense at its source by searching for the median of the historical model weights. Experimental results show that MEAT achieves the best robustness against the powerful AutoAttack and can effectively alleviate robust overfitting. We further demonstrate that most defense methods can improve robust generalization and robustness by combining with MEAT.
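To make the contrast between weight averaging and a median ensemble concrete, here is a minimal sketch in plain Python. It assumes a coordinate-wise median over stored checkpoints, which is only one possible reading of "searching for the median of the historical model weights"; the helper `aggregate_weights`, the checkpoint layout, and the numbers are hypothetical and not taken from the paper.

```python
from statistics import mean, median


def aggregate_weights(checkpoints, reducer):
    """Combine checkpoints coordinate-wise with the given reducer.

    Each checkpoint is a dict mapping a parameter name to a flat list of floats
    (a hypothetical layout chosen for illustration).
    """
    names = checkpoints[0].keys()
    return {
        name: [reducer(values) for values in zip(*(ckpt[name] for ckpt in checkpoints))]
        for name in names
    }


# Three historical checkpoints; the last one carries an anomalous weight value.
history = [
    {"w": [0.10, -0.20]},
    {"w": [0.12, -0.18]},
    {"w": [0.11, 9.00]},
]

averaged = aggregate_weights(history, mean)           # pulled toward the outlier
median_ensemble = aggregate_weights(history, median)  # ignores the outlier
```

In this toy case the averaged second coordinate is dragged far from the typical values by the single anomaly, while the median stays at a value that actually occurred in a well-behaved checkpoint.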

MetaFBP: Learning to Learn High-Order Predictor for Personalized Facial Beauty Prediction

Nov 23, 2023

Abstract: Predicting individual aesthetic preferences holds significant practical applications and academic implications for human society. However, existing studies mainly focus on learning and predicting the commonality of facial attractiveness, with little attention given to Personalized Facial Beauty Prediction (PFBP). PFBP aims to develop a machine that can adapt to individual aesthetic preferences with only a few images rated by each user. In this paper, we formulate this task from a meta-learning perspective in which each user corresponds to a meta-task. To address the PFBP task, we draw inspiration from the human aesthetic mechanism that visual aesthetics in society follows a Gaussian distribution, which motivates us to disentangle user preferences into a commonality part and an individuality part. To this end, we propose a novel MetaFBP framework, in which we devise a universal feature extractor to capture the aesthetic commonality and then adapt to the aesthetic individuality by shifting the decision boundary of the predictor via a meta-learning mechanism. Unlike conventional meta-learning methods that may struggle with slow adaptation or overfitting to tiny support sets, we propose a novel approach that optimizes a high-order predictor for fast adaptation. To validate the performance of the proposed method, we build several PFBP benchmarks from existing facial beauty prediction datasets rated by numerous users. Extensive experiments on these benchmarks demonstrate the effectiveness of the proposed MetaFBP method.

Periodically Exchange Teacher-Student for Source-Free Object Detection

Nov 23, 2023

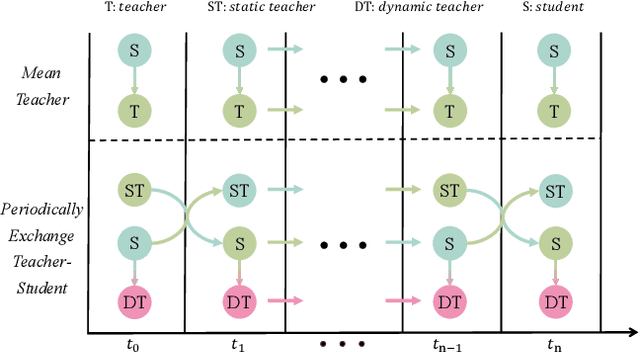

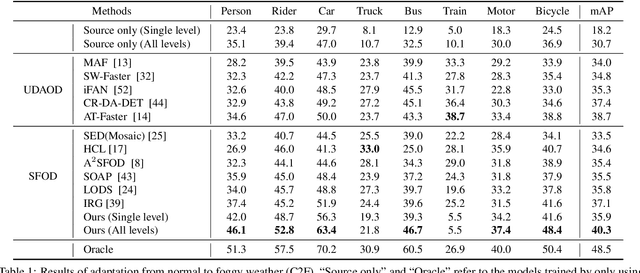

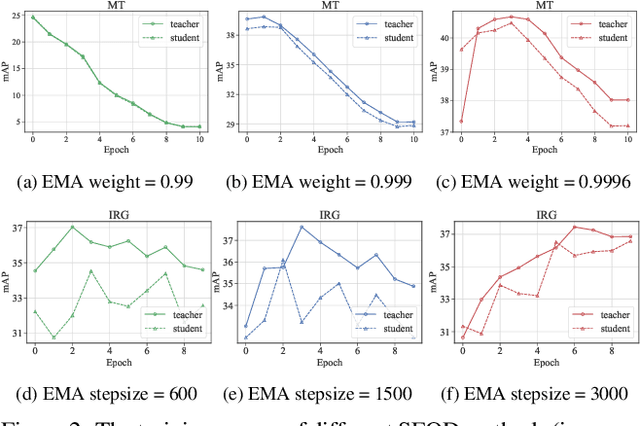

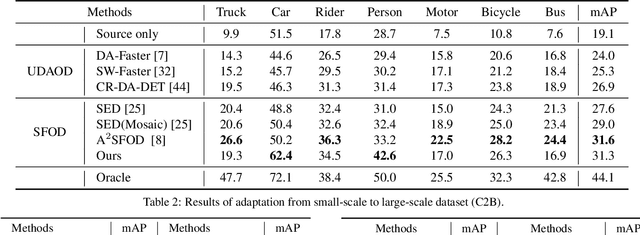

Abstract: Source-free object detection (SFOD) aims to adapt the source detector to unlabeled target domain data in the absence of source domain data. Most SFOD methods follow the same self-training paradigm using the mean-teacher (MT) framework, where the student model is guided by only a single teacher model. However, such a paradigm is prone to training instability: when the teacher model collapses uncontrollably due to the domain shift, the student model also suffers drastic performance degradation. To address this issue, we propose the Periodically Exchange Teacher-Student (PETS) method, a simple yet novel approach that introduces a multiple-teacher framework consisting of a static teacher, a dynamic teacher, and a student model. During the training phase, we periodically exchange the weights between the static teacher and the student model. Then, we update the dynamic teacher using the moving average of the student model that has already been exchanged with the static teacher. In this way, the dynamic teacher can integrate knowledge from past periods, effectively reducing error accumulation and enabling a more stable training process within the MT-based framework. Further, we develop a consensus mechanism to merge the predictions of the two teacher models to provide higher-quality pseudo labels for the student model. Extensive experiments on multiple SFOD benchmarks show that the proposed method achieves state-of-the-art performance compared with other related methods, demonstrating the effectiveness and superiority of our method on the SFOD task.
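The exchange-then-average schedule described in the PETS abstract can be sketched as a small training loop. This is an illustrative reading only: weights are flat lists of floats, and the exchange period, the momentum value, the point at which the moving average is applied, and the helpers `ema_update`, `train_with_exchange`, and `toy_epoch` are all assumptions rather than details from the paper. The consensus mechanism for merging teacher predictions is not modeled.

```python
def ema_update(teacher, student, momentum):
    """Move each teacher weight toward the matching student weight."""
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher, student)]


def train_with_exchange(student, static_teacher, dynamic_teacher,
                        train_one_epoch, num_epochs, period, momentum):
    """Run training, swapping student and static teacher every `period` epochs."""
    for epoch in range(1, num_epochs + 1):
        # Hypothetical training step; a real one would learn from teacher pseudo labels.
        student = train_one_epoch(student, static_teacher, dynamic_teacher)
        if epoch % period == 0:
            # Exchange the weights between the static teacher and the student.
            student, static_teacher = static_teacher, student
            # Then fold the already exchanged student into the dynamic teacher.
            dynamic_teacher = ema_update(dynamic_teacher, student, momentum)
    return student, static_teacher, dynamic_teacher


def toy_epoch(student, static_teacher, dynamic_teacher):
    """Stand-in for one epoch of training: shifts every student weight by 1."""
    return [w + 1.0 for w in student]


student, static_teacher, dynamic_teacher = train_with_exchange(
    student=[0.0], static_teacher=[4.0], dynamic_teacher=[8.0],
    train_one_epoch=toy_epoch, num_epochs=2, period=2, momentum=0.5,
)
```

After the single exchange in this toy run, the student holds the former static-teacher weights, the static teacher holds the trained student weights, and the dynamic teacher sits halfway between its old value and the exchanged student.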

Parameter Exchange for Robust Dynamic Domain Generalization

Nov 23, 2023

Abstract: Agnostic domain shift is the main reason for model degradation on unknown target domains, which creates an urgent need to develop Domain Generalization (DG). Recent advances in DG use dynamic networks to achieve training-free adaptation on unknown target domains, termed Dynamic Domain Generalization (DDG), which compensates for the lack of self-adaptability in static models with fixed weights. The parameters of dynamic networks can be decoupled into a static and a dynamic component, which are designed to learn domain-invariant and domain-specific features, respectively. Building on existing work, we try to push the limits of DDG by disentangling the static and dynamic components more thoroughly from an optimization perspective. Our main consideration is that we can enable the static component to learn domain-invariant features more comprehensively by augmenting the domain-specific information. As a result, the more comprehensive domain-invariant features learned by the static component can then push the dynamic component to focus more on learning adaptive domain-specific features. To this end, we propose a simple yet effective Parameter Exchange (PE) method to perturb the combination between the static and dynamic components. We optimize the model jointly using the gradients from both the perturbed and non-perturbed feed-forward passes to implicitly achieve the aforementioned disentanglement. In this way, the two components can be optimized in a mutually beneficial manner, which helps resist agnostic domain shifts and improves self-adaptability on the unknown target domain. Extensive experiments show that PE can be easily plugged into existing dynamic networks to improve their generalization ability without bells and whistles.

Adapt Anything: Tailor Any Image Classifiers across Domains And Categories Using Text-to-Image Diffusion Models

Oct 25, 2023

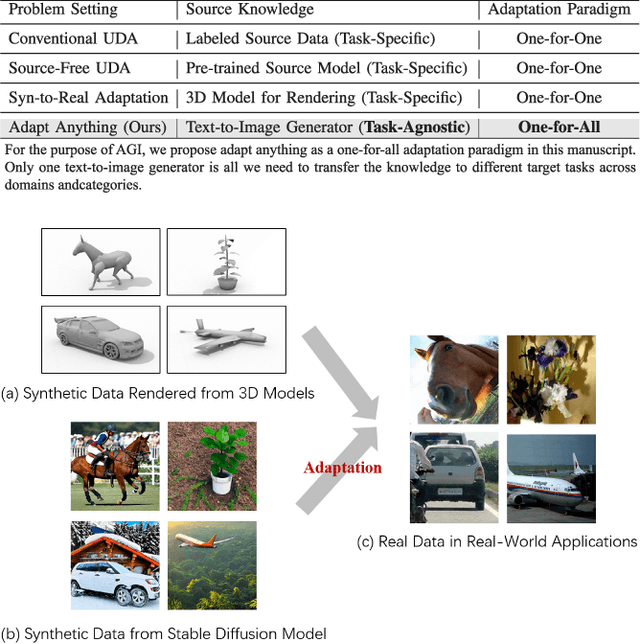

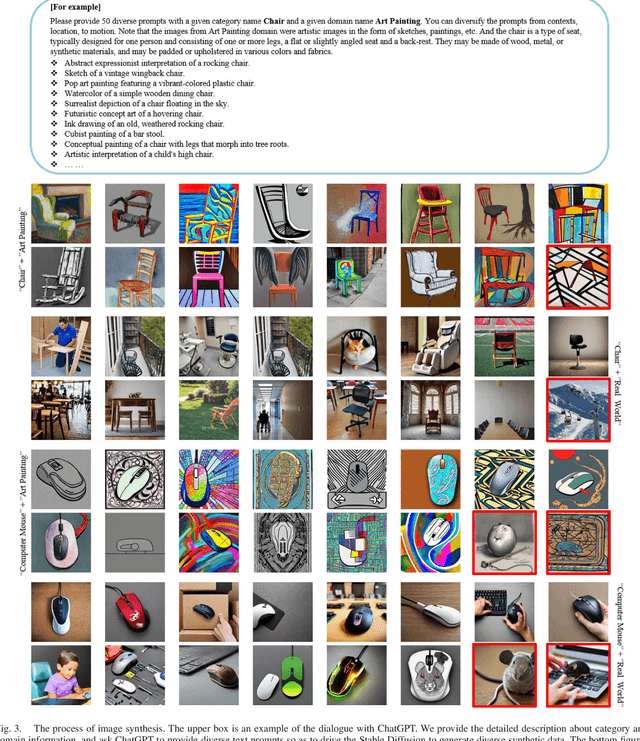

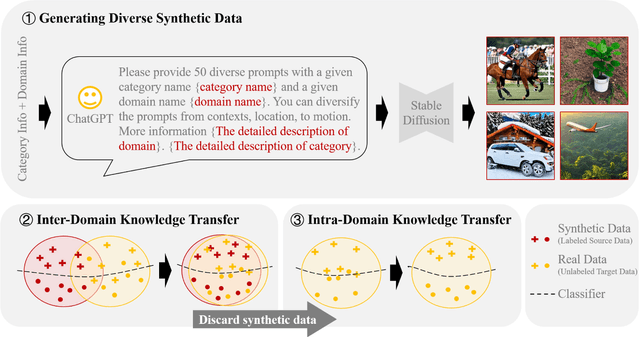

Abstract: We do not pursue a novel method in this paper, but aim to study whether a modern text-to-image diffusion model can tailor any task-adaptive image classifier across domains and categories. Existing domain adaptive image classification works exploit both source and target data for domain alignment so as to transfer the knowledge learned from the labeled source data to the unlabeled target data. However, with the development of text-to-image diffusion models, we wonder whether the high-fidelity synthetic data from a text-to-image generator can serve as a surrogate for source data collected in the real world. In this way, we do not need to collect and annotate the source data for each domain adaptation task in a one-for-one manner. Instead, we utilize only one off-the-shelf text-to-image model to synthesize images with category labels derived from the corresponding text prompts, and then leverage the surrogate data as a bridge to transfer the knowledge embedded in the task-agnostic text-to-image generator to the task-oriented image classifier via domain adaptation. Such a one-for-all adaptation paradigm allows us to adapt anything in the world using only one text-to-image generator as well as the corresponding unlabeled target data. Extensive experiments validate the feasibility of the proposed idea, which even surpasses state-of-the-art domain adaptation works that use source data collected and annotated in the real world.

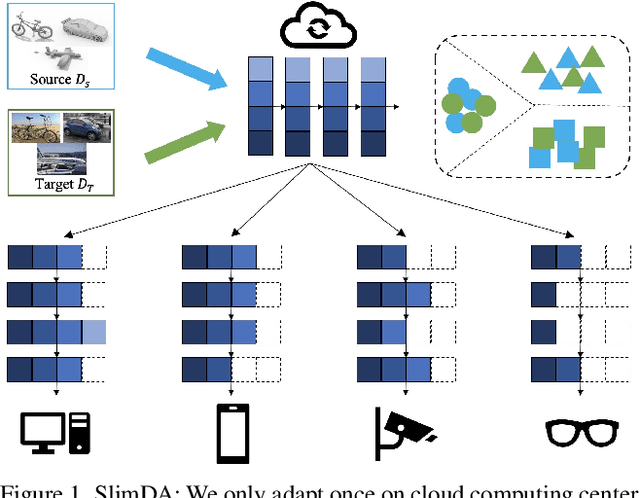

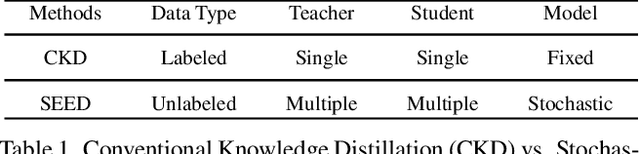

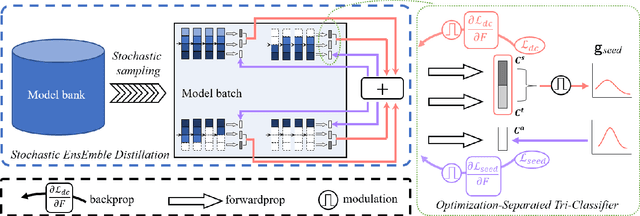

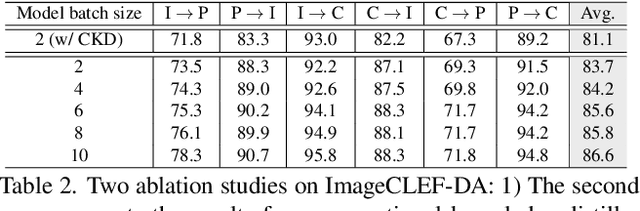

Slimmable Domain Adaptation

Jun 14, 2022

Abstract: Vanilla unsupervised domain adaptation methods tend to optimize the model with a fixed neural architecture, which is not very practical in real-world scenarios since the target data is usually processed by different resource-limited devices. It is therefore necessary to facilitate architecture adaptation across various devices. In this paper, we introduce a simple framework, Slimmable Domain Adaptation, to improve cross-domain generalization with a weight-sharing model bank, from which models of different capacities can be sampled to accommodate different accuracy-efficiency trade-offs. The main challenge in this framework lies in simultaneously boosting the adaptation performance of numerous models in the model bank. To tackle this problem, we develop a Stochastic EnsEmble Distillation method to fully exploit the complementary knowledge in the model bank for inter-model interaction. Nevertheless, considering the optimization conflict between inter-model interaction and intra-model adaptation, we augment the existing bi-classifier domain confusion architecture into an Optimization-Separated Tri-Classifier counterpart. After optimizing the model bank, architecture adaptation is performed via our proposed Unsupervised Performance Evaluation Metric. Under various resource constraints, our framework surpasses other competing approaches by a very large margin on multiple benchmarks. It is also worth emphasizing that our framework can preserve the performance improvement against the source-only model even when the computing complexity is reduced to $1/64$. Code will be available at https://github.com/hikvision-research/SlimDA.

* To appear in CVPR 2022. Code is coming soon: https://github.com/hikvision-research/SlimDA

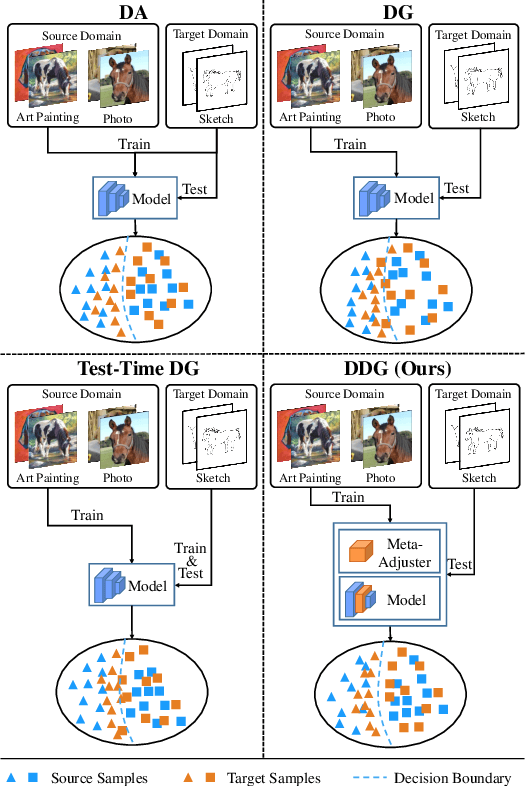

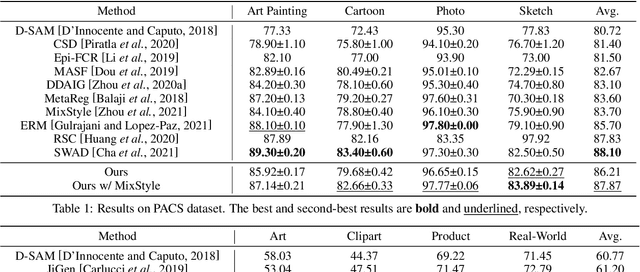

Dynamic Domain Generalization

May 27, 2022

Abstract: Domain generalization (DG) is a fundamental yet very challenging research topic in machine learning. Existing work mainly focuses on learning domain-invariant features with limited source domains in a static model. Unfortunately, there is no training-free mechanism to adjust the model when it is generalized to agnostic target domains. To tackle this problem, we develop a brand-new DG variant, namely Dynamic Domain Generalization (DDG), in which the model learns to twist the network parameters to adapt to the data from different domains. Specifically, we leverage a meta-adjuster to twist the network parameters based on the static model with respect to different data from different domains. In this way, the static model is optimized to learn domain-shared features, while the meta-adjuster is designed to learn domain-specific features. To enable this process, DomainMix is exploited to simulate data from diverse domains while teaching the meta-adjuster to adapt to the upcoming agnostic target domains. This learning mechanism urges the model to generalize to different agnostic target domains by adjusting the model without training. Extensive experiments demonstrate the effectiveness of our proposed method. Code is available at: https://github.com/MetaVisionLab/DDG

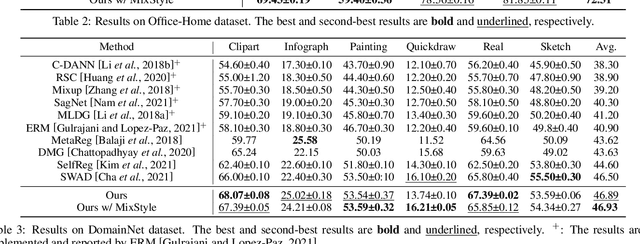

Semi-Supervised Domain Generalization in Real World: New Benchmark and Strong Baseline

Nov 19, 2021

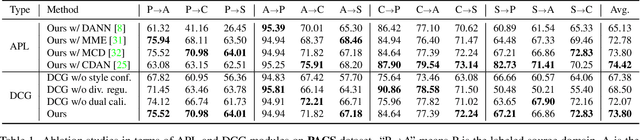

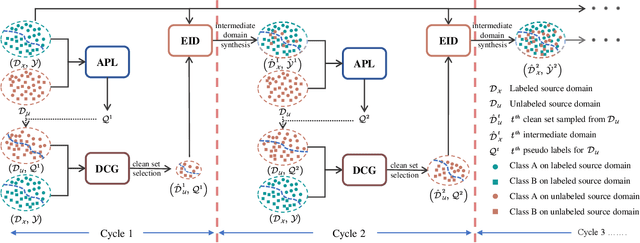

Abstract: Conventional domain generalization aims to learn domain-invariant representations from multiple domains, which requires accurate annotations. In realistic application scenarios, however, it is too cumbersome or even infeasible to collect and annotate a large mass of data. Yet, web data provides a free lunch: access to a huge amount of unlabeled data with rich style information that can be harnessed to augment domain generalization ability. In this paper, we introduce a novel task, termed semi-supervised domain generalization, to study how the labeled and unlabeled domains can interact, and establish two benchmarks including a web-crawled dataset, which poses a novel yet realistic challenge to push the limits of existing technologies. To tackle this task, a straightforward solution is to propagate the class information from the labeled to the unlabeled domains via pseudo labeling in conjunction with domain confusion training. Considering that narrowing the domain gap can improve the quality of pseudo labels and further advance domain-invariant feature learning for generalization, we propose a cycle learning framework to encourage positive feedback between label propagation and domain generalization, in favor of an evolving intermediate domain that bridges the labeled and unlabeled domains in a curriculum learning manner. Experiments are conducted to validate the effectiveness of our framework. It is worth highlighting that web-crawled data benefits domain generalization, as demonstrated in our results. Our code will be available later.

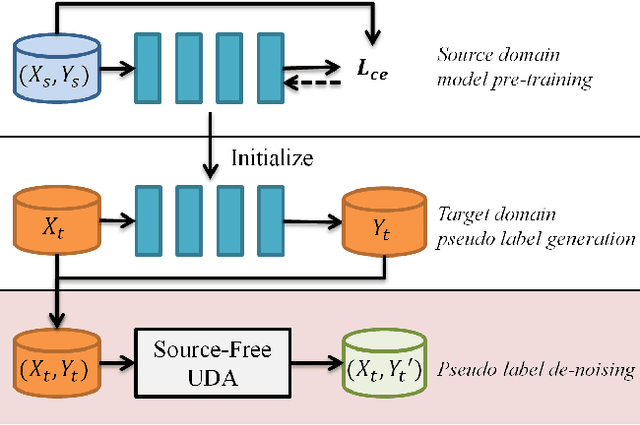

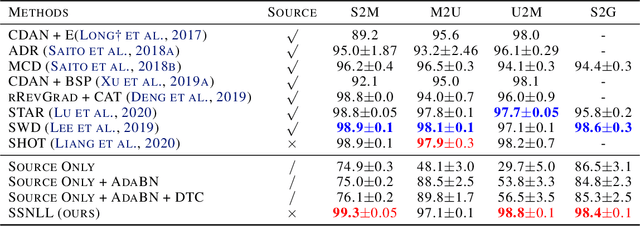

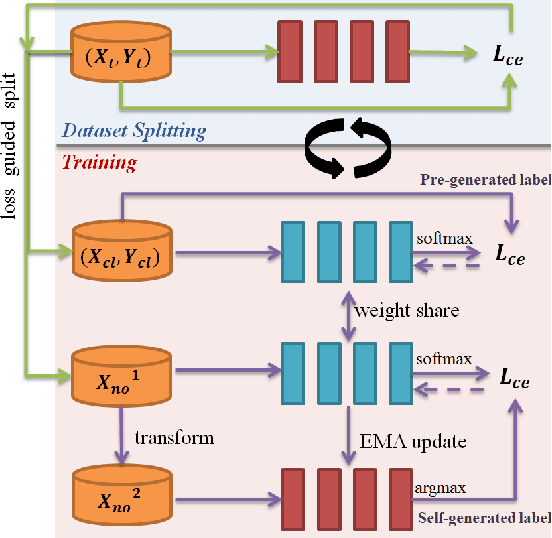

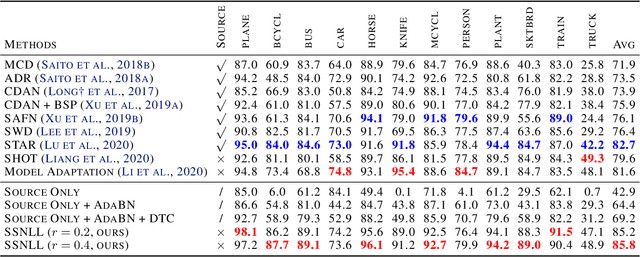

Self-Supervised Noisy Label Learning for Source-Free Unsupervised Domain Adaptation

Feb 23, 2021

Abstract: Many existing unsupervised domain adaptation approaches rely on the strong prerequisite of free access to source data. However, source data is unavailable in many practical scenarios due to the constraints of expensive data transmission and data privacy protection. Usually, the given model pre-trained on the source domain is expected to be optimized with only unlabeled target data, which is termed source-free unsupervised domain adaptation. In this paper, we solve this problem from the perspective of noisy label learning, since the given pre-trained model can pre-generate noisy labels for unlabeled target data via direct network inference. Under this problem modeling, incorporating self-supervised learning, we propose a novel Self-Supervised Noisy Label Learning method, which can effectively fine-tune the pre-trained model with pre-generated labels as well as self-generated labels on the fly. Extensive experiments have been conducted to validate its effectiveness. Our method can easily achieve state-of-the-art results and surpass other methods by a very large margin. Code will be released.
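As a small illustration of pre-generating noisy labels by network inference, the sketch below takes each target sample's label to be the class with the largest model output. Using the arg-max of the logits is an assumption about what inference means here, and the function `pregenerate_labels` and the example logits are hypothetical.

```python
def pregenerate_labels(logits_per_sample):
    """Return the index of the largest logit for each sample.

    Ties resolve to the first maximal index. The resulting labels are noisy
    because the source-trained model may be wrong on target-domain data.
    """
    return [
        max(range(len(logits)), key=logits.__getitem__)
        for logits in logits_per_sample
    ]


# Hypothetical outputs of a source-pretrained model on three unlabeled target samples.
target_logits = [
    [2.0, 0.5, -1.0],
    [0.1, 0.3, 0.2],
    [-0.4, -0.9, 1.7],
]
noisy_labels = pregenerate_labels(target_logits)
```

The second sample shows why such labels are treated as noisy: its logits are nearly tied, so the assigned class is a low-confidence guess.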
