Li Ding

NVIDIA Nemotron 3: Efficient and Open Intelligence

Dec 24, 2025

Abstract: We introduce the Nemotron 3 family of models: Nano, Super, and Ultra. These models deliver strong agentic, reasoning, and conversational capabilities. The Nemotron 3 family uses a Mixture-of-Experts hybrid Mamba-Transformer architecture to provide best-in-class throughput and context lengths of up to 1M tokens. Super and Ultra models are trained with NVFP4 and incorporate LatentMoE, a novel approach that improves model quality. The two larger models also include MTP layers for faster text generation. All Nemotron 3 models are post-trained using multi-environment reinforcement learning, which enables reasoning and multi-step tool use, and they support granular reasoning budget control. Nano, the smallest model, outperforms comparable models in accuracy while remaining extremely cost-efficient for inference. Super is optimized for collaborative agents and high-volume workloads such as IT ticket automation. Ultra, the largest model, provides state-of-the-art accuracy and reasoning performance. Nano is released together with its technical report and this white paper, while Super and Ultra will follow in the coming months. We will openly release the model weights, pre- and post-training software, recipes, and all data for which we hold redistribution rights.

Nemotron 3 Nano: Open, Efficient Mixture-of-Experts Hybrid Mamba-Transformer Model for Agentic Reasoning

Dec 23, 2025

Abstract: We present Nemotron 3 Nano 30B-A3B, a Mixture-of-Experts hybrid Mamba-Transformer language model. Nemotron 3 Nano was pretrained on 25 trillion text tokens, including more than 3 trillion new unique tokens over Nemotron 2, followed by supervised fine-tuning and large-scale RL on diverse environments. Nemotron 3 Nano achieves better accuracy than our previous generation Nemotron 2 Nano while activating less than half of the parameters per forward pass. It achieves up to 3.3x higher inference throughput than similarly sized open models like GPT-OSS-20B and Qwen3-30B-A3B-Thinking-2507, while also being more accurate on popular benchmarks. Nemotron 3 Nano demonstrates enhanced agentic, reasoning, and chat abilities and supports context lengths up to 1M tokens. We release both our pretrained Nemotron 3 Nano 30B-A3B Base and post-trained Nemotron 3 Nano 30B-A3B checkpoints on Hugging Face.

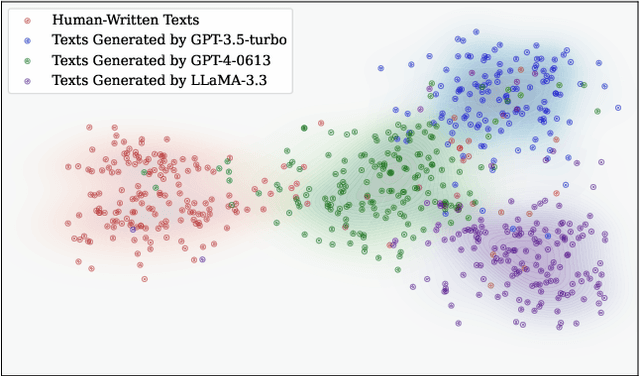

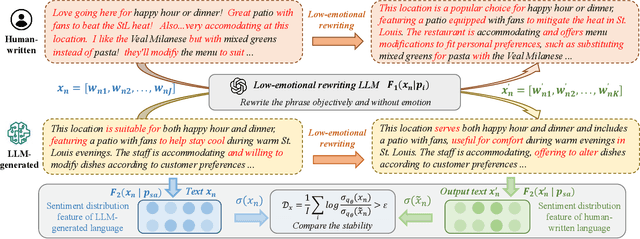

Model-Agnostic Sentiment Distribution Stability Analysis for Robust LLM-Generated Texts Detection

Aug 09, 2025

Abstract: The rapid advancement of large language models (LLMs) has resulted in increasingly sophisticated AI-generated content, posing significant challenges in distinguishing LLM-generated text from human-written language. Existing detection methods, primarily based on lexical heuristics or fine-tuned classifiers, often suffer from limited generalizability and are vulnerable to paraphrasing, adversarial perturbations, and cross-domain shifts. In this work, we propose SentiDetect, a model-agnostic framework for detecting LLM-generated text by analyzing the divergence in sentiment distribution stability. Our method is motivated by the empirical observation that LLM outputs tend to exhibit emotionally consistent patterns, whereas human-written texts display greater emotional variability. To capture this phenomenon, we define two complementary metrics: sentiment distribution consistency and sentiment distribution preservation, which quantify stability under sentiment-altering and semantic-preserving transformations. We evaluate SentiDetect on five diverse datasets and a range of advanced LLMs, including Gemini-1.5-Pro, Claude-3, GPT-4-0613, and LLaMa-3.3. Experimental results demonstrate its superiority over state-of-the-art baselines, with over 16% and 11% F1 score improvements on Gemini-1.5-Pro and GPT-4-0613, respectively. Moreover, SentiDetect shows greater robustness to paraphrasing, adversarial attacks, and text length variations, outperforming existing detectors in challenging scenarios.

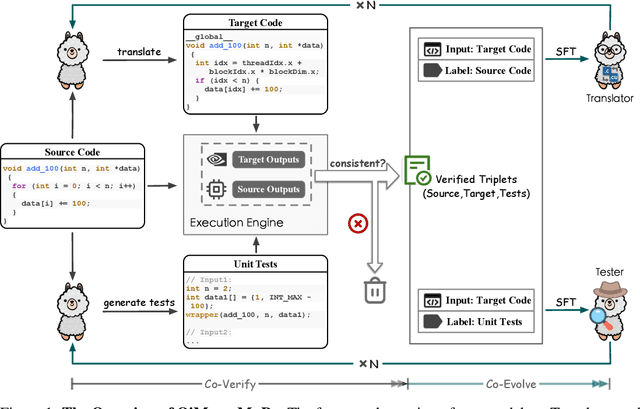

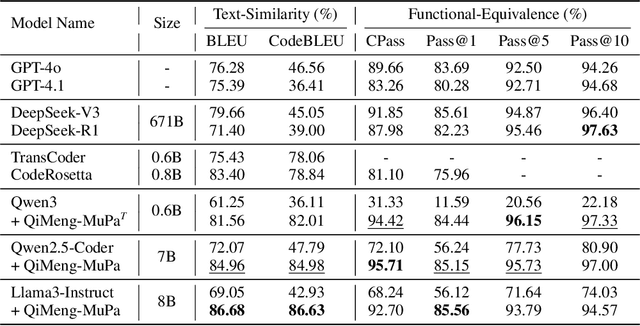

Mutual-Supervised Learning for Sequential-to-Parallel Code Translation

Jun 11, 2025

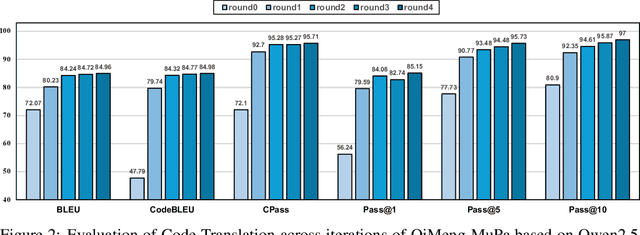

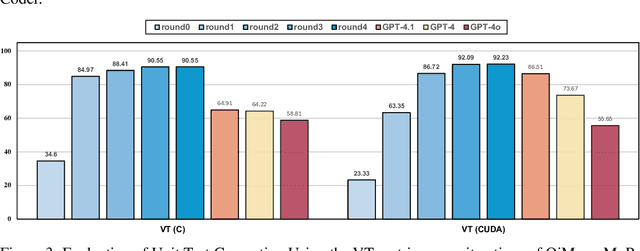

Abstract: The rise of GPU-based high-performance computing (HPC) has driven the widespread adoption of parallel programming models such as CUDA. Yet, the inherent complexity of parallel programming creates a demand for automated sequential-to-parallel approaches. However, data scarcity poses a significant challenge for machine learning-based sequential-to-parallel code translation. Although recent back-translation methods show promise, they still fail to ensure functional equivalence in the translated code. In this paper, we propose a novel Mutual-Supervised Learning (MuSL) framework for sequential-to-parallel code translation to address the functional equivalence issue. MuSL consists of two models, a Translator and a Tester. Through an iterative loop consisting of Co-verify and Co-evolve steps, the Translator and the Tester mutually generate data for each other and improve collectively. The Tester generates unit tests to verify and filter functionally equivalent translated code, thereby evolving the Translator, while the Translator generates translated code as augmented input to evolve the Tester. Experimental results demonstrate that MuSL significantly enhances the performance of the base model: when applied to Qwen2.5-Coder, it not only improves Pass@1 by up to 28.91% and boosts Tester performance by 68.90%, but also outperforms the previous state-of-the-art method CodeRosetta by 1.56 and 6.92 in BLEU and CodeBLEU scores, while achieving performance comparable to DeepSeek-R1 and GPT-4.1. Our code is available at https://github.com/kcxain/musl.

Fast-Powerformer: A Memory-Efficient Transformer for Accurate Mid-Term Wind Power Forecasting

Apr 15, 2025

Abstract:Wind power forecasting (WPF), as a significant research topic within renewable energy, plays a crucial role in enhancing the security, stability, and economic operation of power grids. However, due to the high stochasticity of meteorological factors (e.g., wind speed) and significant fluctuations in wind power output, mid-term wind power forecasting faces a dual challenge of maintaining high accuracy and computational efficiency. To address these issues, this paper proposes an efficient and lightweight mid-term wind power forecasting model, termed Fast-Powerformer. The proposed model is built upon the Reformer architecture, incorporating structural enhancements such as a lightweight Long Short-Term Memory (LSTM) embedding module, an input transposition mechanism, and a Frequency Enhanced Channel Attention Mechanism (FECAM). These improvements enable the model to strengthen temporal feature extraction, optimize dependency modeling across variables, significantly reduce computational complexity, and enhance sensitivity to periodic patterns and dominant frequency components. Experimental results conducted on multiple real-world wind farm datasets demonstrate that the proposed Fast-Powerformer achieves superior prediction accuracy and operational efficiency compared to mainstream forecasting approaches. Furthermore, the model exhibits fast inference speed and low memory consumption, highlighting its considerable practical value for real-world deployment scenarios.

Large Language Model Inference Acceleration: A Comprehensive Hardware Perspective

Oct 06, 2024

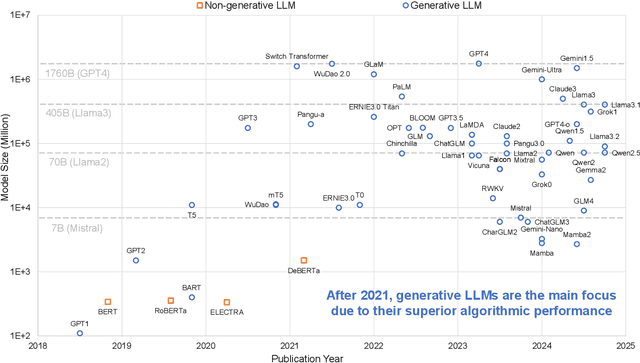

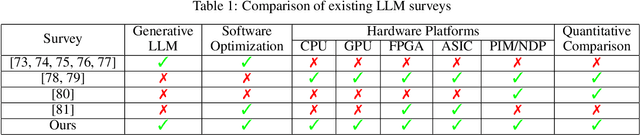

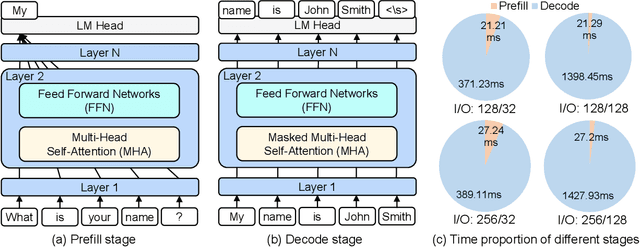

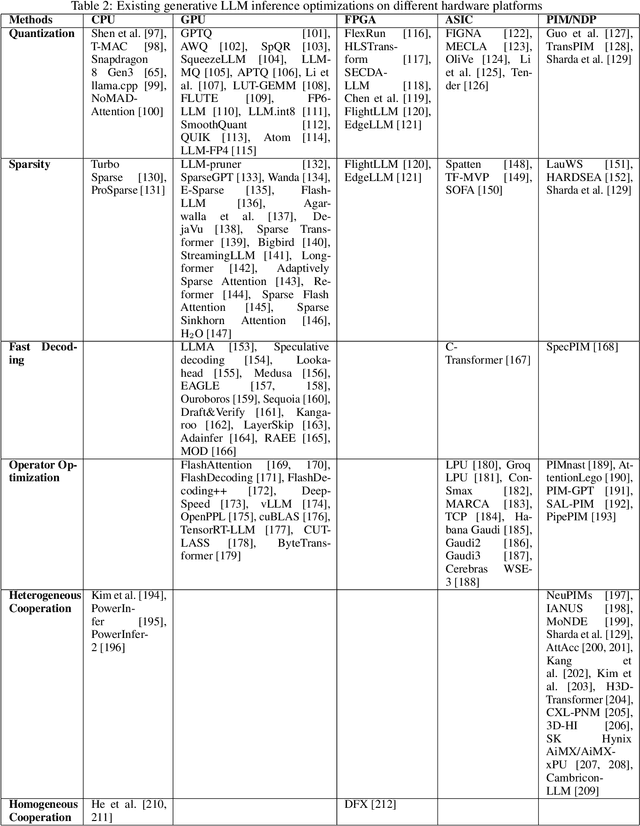

Abstract:Large Language Models (LLMs) have demonstrated remarkable capabilities across various fields, from natural language understanding to text generation. Compared to non-generative LLMs like BERT and DeBERTa, generative LLMs like GPT series and Llama series are currently the main focus due to their superior algorithmic performance. The advancements in generative LLMs are closely intertwined with the development of hardware capabilities. Various hardware platforms exhibit distinct hardware characteristics, which can help improve LLM inference performance. Therefore, this paper comprehensively surveys efficient generative LLM inference on different hardware platforms. First, we provide an overview of the algorithm architecture of mainstream generative LLMs and delve into the inference process. Then, we summarize different optimization methods for different platforms such as CPU, GPU, FPGA, ASIC, and PIM/NDP, and provide inference results for generative LLMs. Furthermore, we perform a qualitative and quantitative comparison of inference performance with batch sizes 1 and 8 on different hardware platforms by considering hardware power consumption, absolute inference speed (tokens/s), and energy efficiency (tokens/J). We compare the performance of the same optimization methods across different hardware platforms, the performance across different hardware platforms, and the performance of different methods on the same hardware platform. This provides a systematic and comprehensive summary of existing inference acceleration work by integrating software optimization methods and hardware platforms, which can point to the future trends and potential developments of generative LLMs and hardware technology for edge-side scenarios.
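The energy-efficiency metric named in the abstract above (tokens/J) follows directly from throughput and power, since one watt is one joule per second. The snippet below is a minimal sketch of that arithmetic; the platform names and numbers are hypothetical placeholders, not results from the survey.

```python
def energy_efficiency(tokens_per_second: float, power_watts: float) -> float:
    """Return tokens per joule: (tokens/s) divided by (J/s)."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return tokens_per_second / power_watts


# Hypothetical (throughput in tokens/s, power in W) pairs, for illustration only.
platforms = {
    "platform_a": (120.0, 300.0),
    "platform_b": (20.0, 10.0),
}

# Rank platforms from most to least energy-efficient.
ranking = sorted(
    platforms,
    key=lambda name: energy_efficiency(*platforms[name]),
    reverse=True,
)
```

In this made-up example the platform with lower absolute speed ranks first on tokens/J, which is why the abstract reports speed and energy efficiency as separate axes.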

MARCA: Mamba Accelerator with ReConfigurable Architecture

Sep 16, 2024

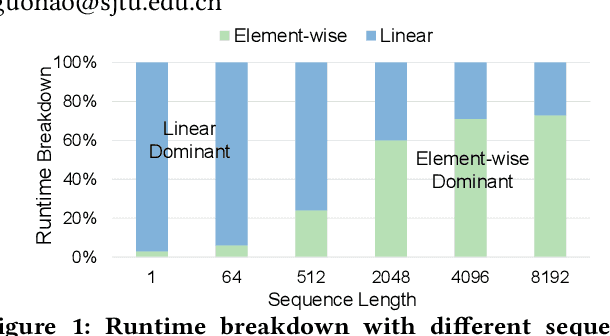

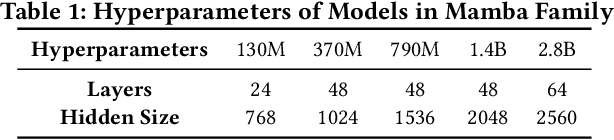

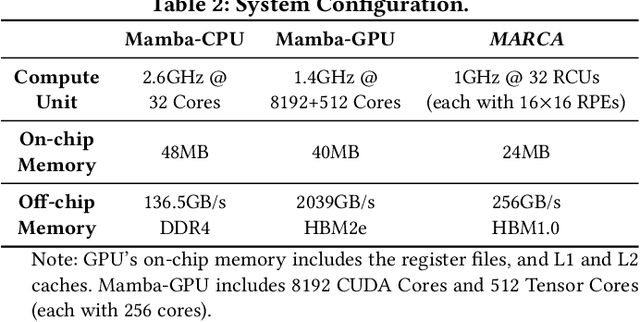

Abstract: We propose a Mamba accelerator with reconfigurable architecture, MARCA. We propose three novel approaches in this paper. (1) Reduction alternative PE array architecture for both linear and element-wise operations. For linear operations, the reduction tree connected to PE arrays is enabled and executes the reduction operation. For element-wise operations, the reduction tree is disabled and the output bypasses it. (2) Reusable nonlinear function unit based on the reconfigurable PE. We decompose the exponential function into element-wise operations and a shift operation by a fast biased exponential algorithm, and the activation function (SiLU) into a range detection and element-wise operations by a piecewise approximation algorithm. Thus, the reconfigurable PEs are reused to execute nonlinear functions with negligible accuracy loss. (3) Intra-operation and inter-operation buffer management strategy. We propose an intra-operation buffer management strategy to maximize input data sharing for linear operations within operations, and an inter-operation strategy for element-wise operations between operations. We conduct extensive experiments on Mamba model families with different sizes. MARCA achieves up to 463.22$\times$/11.66$\times$ speedup and up to 9761.42$\times$/242.52$\times$ energy efficiency compared to Intel Xeon 8358P CPU and NVIDIA Tesla A100 GPU implementations, respectively.
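To make approach (2) more concrete, the sketch below shows one generic way an exponential can be split into element-wise arithmetic plus a power-of-two shift, and how SiLU can be guarded by a range check. It is an illustration under stated assumptions, not MARCA's algorithm: the quadratic coefficients, the `SILU_RANGE` threshold, and the function names are all hypothetical choices made for this example.

```python
import math

LOG2_E = 1.4426950408889634  # log2(e)
SILU_RANGE = 8.0             # assumed cut-off for the range check below


def approx_exp(x: float) -> float:
    """Approximate e**x as 2**k * 2**f with integer k and f in [0, 1)."""
    t = x * LOG2_E                # element-wise multiply
    k = math.floor(t)             # integer part becomes a shift amount
    f = t - k                     # fractional part in [0, 1)
    # Illustrative quadratic fit to 2**f, exact at f = 0 and f = 1.
    mantissa = 1.0 + f * (0.6565 + 0.3435 * f)
    return math.ldexp(mantissa, k)  # scaling by 2**k plays the role of the shift


def approx_silu(x: float) -> float:
    """SiLU(x) = x * sigmoid(x), with a range check for the saturated regions."""
    if x <= -SILU_RANGE:
        return 0.0                # sigmoid(x) is close to 0 here
    if x >= SILU_RANGE:
        return x                  # sigmoid(x) is close to 1 here
    return x / (1.0 + approx_exp(-x))
```

The point of the decomposition is that only multiplies, adds, and a shift remain, which are the operations an element-wise processing element already supports.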

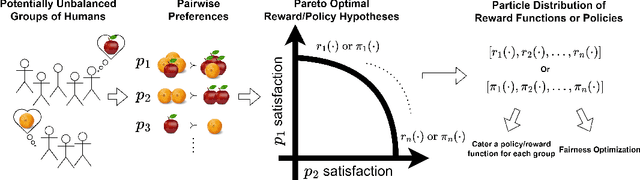

Pareto-Optimal Learning from Preferences with Hidden Context

Jun 21, 2024

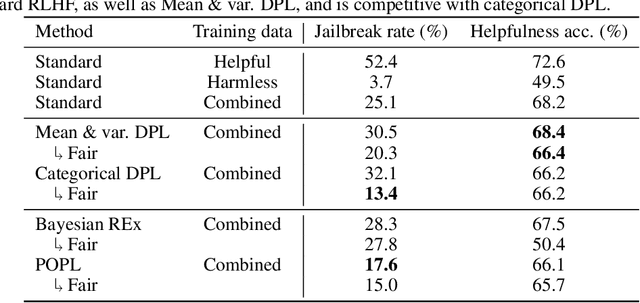

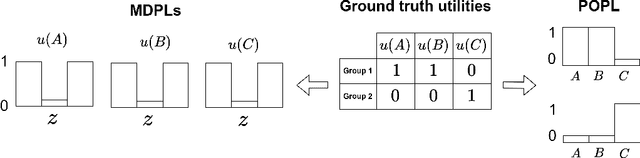

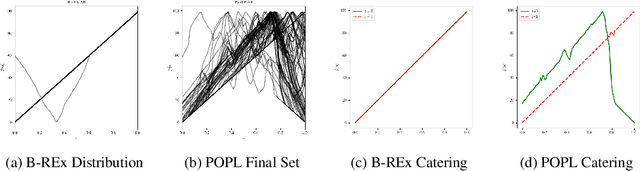

Abstract:Ensuring AI models align with human values is essential for their safety and functionality. Reinforcement learning from human feedback (RLHF) uses human preferences to achieve this alignment. However, preferences sourced from diverse populations can result in point estimates of human values that may be sub-optimal or unfair to specific groups. We propose Pareto Optimal Preference Learning (POPL), which frames discrepant group preferences as objectives with potential trade-offs, aiming for policies that are Pareto-optimal on the preference dataset. POPL utilizes Lexicase selection, an iterative process to select diverse and Pareto-optimal solutions. Our empirical evaluations demonstrate that POPL surpasses baseline methods in learning sets of reward functions, effectively catering to distinct groups without access to group numbers or membership labels. Furthermore, we illustrate that POPL can serve as a foundation for techniques optimizing specific notions of group fairness, ensuring inclusive and equitable AI model alignment.
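As a small illustration of what "Pareto-optimal on the preference dataset" means, the sketch below filters a set of candidates down to those not dominated on any objective. It is not the POPL procedure itself (which the abstract says relies on lexicase selection); the function name and the toy score matrix are assumptions for this example, with each column standing in for how well a candidate agrees with one hypothetical group's preferences.

```python
def pareto_front(scores):
    """Return indices of non-dominated rows.

    scores[i][j] is the score of candidate i on objective j (higher is better).
    Candidate a dominates b if a is at least as good on every objective
    and strictly better on at least one.
    """
    def dominates(a, b):
        return all(x >= y for x, y in zip(a, b)) and any(
            x > y for x, y in zip(a, b)
        )

    return [
        i
        for i, row in enumerate(scores)
        if not any(dominates(other, row) for j, other in enumerate(scores) if j != i)
    ]


# Toy example: two objectives, four candidates.
toy_scores = [
    [1.0, 0.0],  # specialist for objective 0
    [0.0, 1.0],  # specialist for objective 1
    [0.5, 0.5],  # balanced trade-off
    [0.4, 0.4],  # dominated by the balanced candidate
]
front = pareto_front(toy_scores)
```

The front keeps both specialists and the balanced candidate, which is the kind of diverse set a single point estimate would collapse.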

DALex: Lexicase-like Selection via Diverse Aggregation

Jan 23, 2024

Abstract:Lexicase selection has been shown to provide advantages over other selection algorithms in several areas of evolutionary computation and machine learning. In its standard form, lexicase selection filters a population or other collection based on randomly ordered training cases that are considered one at a time. This iterated filtering process can be time-consuming, particularly in settings with large numbers of training cases. In this paper, we propose a new method that is nearly equivalent to lexicase selection in terms of the individuals that it selects, but which does so significantly more quickly. The new method, called DALex (for Diversely Aggregated Lexicase), selects the best individual with respect to a weighted sum of training case errors, where the weights are randomly sampled. This allows us to formulate the core computation required for selection as matrix multiplication instead of recursive loops of comparisons, which in turn allows us to take advantage of optimized and parallel algorithms designed for matrix multiplication for speedup. Furthermore, we show that we can interpolate between the behavior of lexicase selection and its "relaxed" variants, such as epsilon or batch lexicase selection, by adjusting a single hyperparameter, named "particularity pressure," which represents the importance granted to each individual training case. Results on program synthesis, deep learning, symbolic regression, and learning classifier systems demonstrate that DALex achieves significant speedups over lexicase selection and its relaxed variants while maintaining almost identical problem-solving performance. Under a fixed computational budget, these savings free up resources that can be directed towards increasing population size or the number of generations, enabling the potential for solving more difficult problems.
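The selection step described above can be sketched in a few lines. This is a minimal reading of the abstract, not the authors' implementation: it assumes the random weights come from a softmax over normally distributed importance scores whose standard deviation is the particularity pressure, and the function name `dalex_select` is hypothetical. Plain Python lists are used to keep the example dependency-free; the weighted sum is exactly a matrix-vector product, which is what makes the method amenable to optimized matrix routines.

```python
import math
import random


def dalex_select(errors, particularity_pressure, rng=random):
    """Select one individual by a randomly weighted sum of per-case errors.

    errors[i][j] is the error of individual i on training case j.
    Returns the index of the individual with the lowest weighted error.
    """
    n_cases = len(errors[0])
    # One random importance score per case (assumed sampling scheme).
    scores = [rng.gauss(0.0, particularity_pressure) for _ in range(n_cases)]
    # Numerically stable softmax turns the scores into positive weights.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Equivalent to the matrix-vector product errors @ weights.
    aggregated = [sum(w * e for w, e in zip(weights, row)) for row in errors]
    return min(range(len(errors)), key=aggregated.__getitem__)
```

Under this scheme a pressure of zero gives uniform weights, so selection reduces to choosing the lowest mean error, while a large pressure concentrates almost all weight on one or a few cases, which moves the behavior toward lexicase-style filtering.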

Optimizing Neural Networks with Gradient Lexicase Selection

Dec 19, 2023

Abstract:One potential drawback of using aggregated performance measurement in machine learning is that models may learn to accept higher errors on some training cases as compromises for lower errors on others, with the lower errors actually being instances of overfitting. This can lead to both stagnation at local optima and poor generalization. Lexicase selection is an uncompromising method developed in evolutionary computation, which selects models on the basis of sequences of individual training case errors instead of using aggregated metrics such as loss and accuracy. In this paper, we investigate how lexicase selection, in its general form, can be integrated into the context of deep learning to enhance generalization. We propose Gradient Lexicase Selection, an optimization framework that combines gradient descent and lexicase selection in an evolutionary fashion. Our experimental results demonstrate that the proposed method improves the generalization performance of various widely-used deep neural network architectures across three image classification benchmarks. Additionally, qualitative analysis suggests that our method assists networks in learning more diverse representations. Our source code is available on GitHub: https://github.com/ld-ing/gradient-lexicase.

* ICLR 2022
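For reference, standard lexicase selection, which the abstract above builds on, can be sketched as follows. This shows only the basic selection step over a table of per-case errors; it does not include the gradient-descent component of Gradient Lexicase Selection, and the function name is a hypothetical choice for this example.

```python
import random


def lexicase_select(errors, rng=random):
    """Select one individual by filtering on randomly ordered training cases.

    errors[i][j] is the error of individual i on training case j.
    """
    candidates = list(range(len(errors)))
    cases = list(range(len(errors[0])))
    rng.shuffle(cases)  # a fresh random case order for every selection event
    for case in cases:
        best = min(errors[i][case] for i in candidates)
        # Keep only the candidates that are best on the current case.
        candidates = [i for i in candidates if errors[i][case] == best]
        if len(candidates) == 1:
            break
    # Any remaining ties are broken at random.
    return rng.choice(candidates)
```

Because each case is considered on its own, an individual with a middling error on every case is never selected when specialists beat it case by case, which is the "uncompromising" behavior the abstract contrasts with aggregated metrics.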
