Koustav Rudra

GRACE: GRaph-based Addiction Care prEdiction

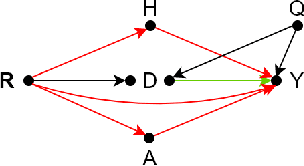

Oct 23, 2025

Abstract:Determining the appropriate locus of care for addiction patients is one of the most critical clinical decisions, affecting both patient treatment outcomes and the effective use of resources. Because specialized treatment resources such as inpatient beds and staff are scarce, there is an unmet need for an automated framework to support this decision. Current decision-making approaches suffer from severe class imbalances in addiction datasets. To address this limitation, we propose a novel graph neural network (GRACE) framework that formalizes locus of care prediction as a structured learning problem. Further, we perform extensive feature engineering and propose a new approach of obtaining an unbiased meta-graph to train a GNN to overcome the class imbalance problem. Experimental results on real-world data show an improvement of 11-35% in terms of the F1 score of the minority class over competitive baselines. The code and note embeddings are available at https://anonymous.4open.science/r/GRACE-F8E1/.

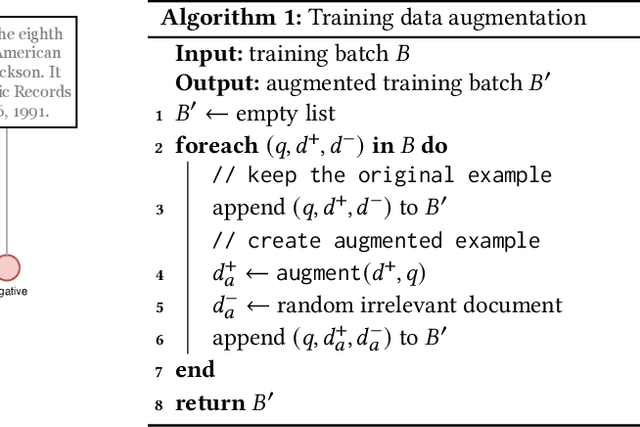

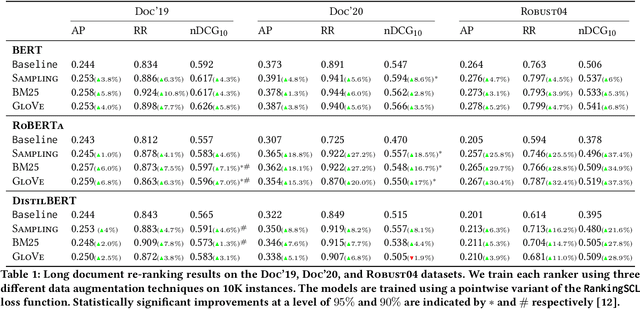

Data Augmentation for Sample Efficient and Robust Document Ranking

Nov 26, 2023

Abstract:Contextual ranking models have delivered impressive performance improvements over classical models in the document ranking task. However, these highly over-parameterized models tend to be data-hungry and require large amounts of data even for fine-tuning. In this paper, we propose data-augmentation methods for effective and robust ranking performance. One of the key benefits of using data augmentation is in achieving sample efficiency or learning effectively when we have only a small amount of training data. We propose supervised and unsupervised data augmentation schemes by creating training data using parts of the relevant documents in the query-document pairs. We then adapt a family of contrastive losses for the document ranking task that can exploit the augmented data to learn an effective ranking model. Our extensive experiments on subsets of the MS MARCO and TREC-DL test sets show that data augmentation, along with the ranking-adapted contrastive losses, results in performance improvements under most dataset sizes. Apart from sample efficiency, we conclusively show that data augmentation results in robust models when transferred to out-of-domain benchmarks. Our performance improvements in in-domain and more prominently in out-of-domain benchmarks show that augmentation regularizes the ranking model and improves its robustness and generalization capability.

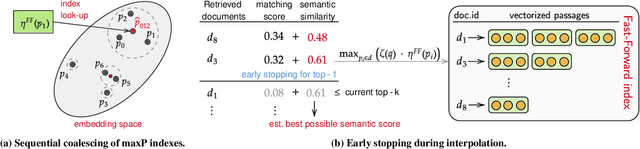

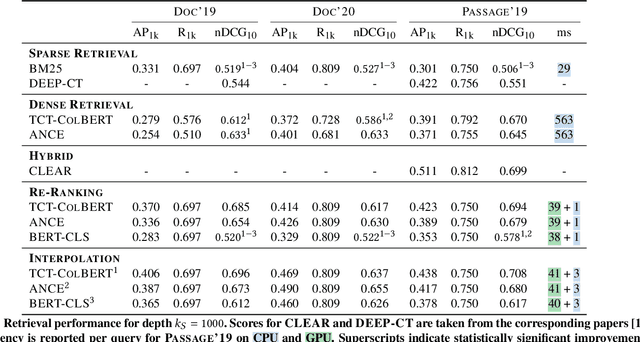

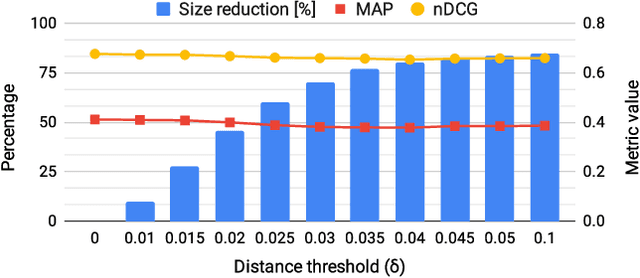

Efficient Neural Ranking using Forward Indexes and Lightweight Encoders

Nov 02, 2023

Abstract:Dual-encoder-based dense retrieval models have become the standard in IR. They employ large Transformer-based language models, which are notoriously inefficient in terms of resources and latency. We propose Fast-Forward indexes -- vector forward indexes which exploit the semantic matching capabilities of dual-encoder models for efficient and effective re-ranking. Our framework enables re-ranking at very high retrieval depths and combines the merits of both lexical and semantic matching via score interpolation. Furthermore, in order to mitigate the limitations of dual-encoders, we tackle two main challenges. First, we improve computational efficiency by either pre-computing representations, avoiding unnecessary computations altogether, or reducing the complexity of encoders. This allows us to considerably improve ranking efficiency and latency. Second, we optimize the memory footprint and maintenance cost of indexes: we propose two complementary techniques to reduce the index size and show that, by dynamically dropping irrelevant document tokens, the index maintenance efficiency can be improved substantially. Our evaluation shows the effectiveness and efficiency of Fast-Forward indexes -- our method has low latency and achieves competitive results without the need for hardware acceleration, such as GPUs.

Understanding Lexical Biases when Identifying Gang-related Social Media Communications

Apr 22, 2023

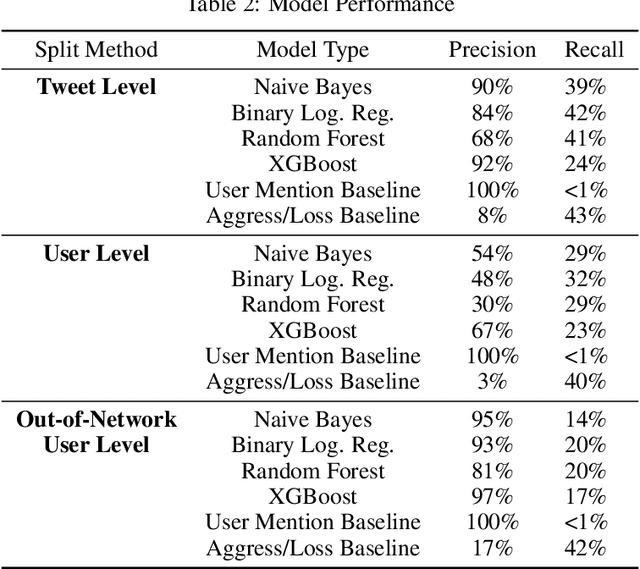

Abstract:Individuals involved in gang-related activity use mainstream social media including Facebook and Twitter to express taunts and threats as well as grief and memorializing. However, identifying the impact of gang-related activity in order to serve community member needs through social media sources has a unique set of challenges. These include the difficulty of ethically identifying training data of individuals impacted by gang activity and the need to account for a non-standard language style commonly used in the tweets from these individuals. Our study provides evidence of methods where natural language processing tools can be helpful in efficiently identifying individuals who may be in need of community care resources such as counselors, conflict mediators, or academic/professional training programs. We demonstrate that our binary logistic classifier outperforms baseline standards in identifying individuals impacted by gang-related violence using a sample of gang-related tweets associated with Chicago. We ultimately found that the language of a tweet is highly relevant and that uses of "big data" methods or machine learning models need to better understand how language impacts the model's performance and how it discriminates among populations.

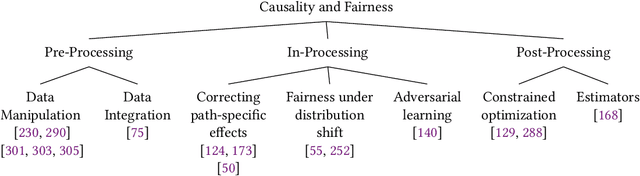

A Review of the Role of Causality in Developing Trustworthy AI Systems

Feb 14, 2023

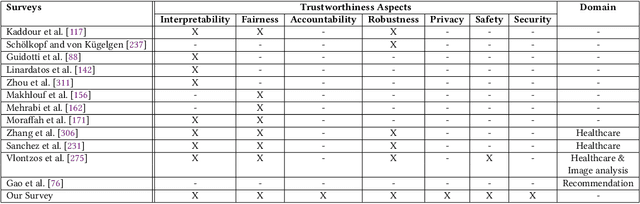

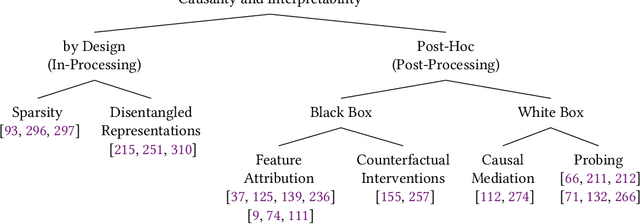

Abstract:State-of-the-art AI models largely lack an understanding of the cause-effect relationship that governs human understanding of the real world. Consequently, these models do not generalize to unseen data, often produce unfair results, and are difficult to interpret. This has led to efforts to improve the trustworthiness aspects of AI models. Recently, causal modeling and inference methods have emerged as powerful tools. This review aims to provide the reader with an overview of causal methods that have been developed to improve the trustworthiness of AI models. We hope that our contribution will motivate future research on causality-based solutions for trustworthy AI.

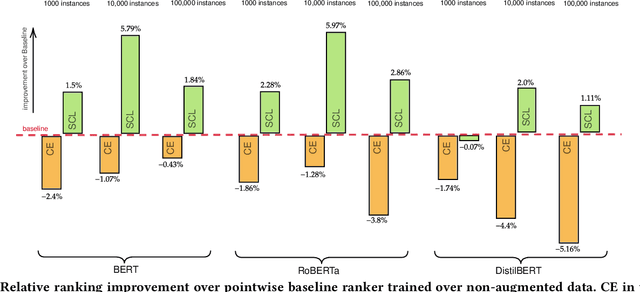

Supervised Contrastive Learning Approach for Contextual Ranking

Jul 07, 2022

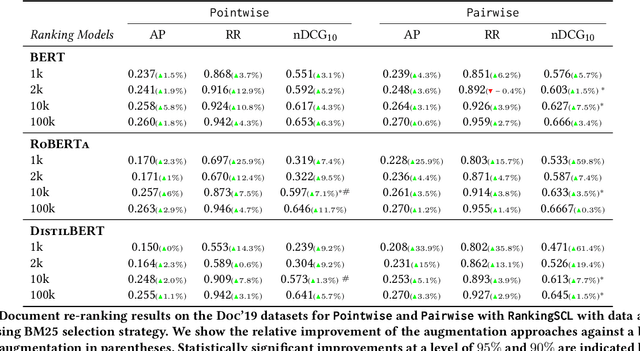

Abstract:Contextual ranking models have delivered impressive performance improvements over classical models in the document ranking task. However, these highly over-parameterized models tend to be data-hungry and require large amounts of data even for fine-tuning. This paper proposes a simple yet effective method to improve ranking performance on smaller datasets using supervised contrastive learning for the document ranking problem. We perform data augmentation by creating training data using parts of the relevant documents in the query-document pairs. We then use a supervised contrastive learning objective to learn an effective ranking model from the augmented dataset. Our experiments on subsets of the TREC-DL dataset show that, although data augmentation increases the training data size, it does not necessarily improve performance with existing pointwise or pairwise training objectives. However, our proposed supervised contrastive loss objective leads to performance improvements over the standard non-augmented setting, showcasing the utility of data augmentation using contrastive losses. Finally, we show the real benefit of using supervised contrastive learning objectives by showing marked improvements in smaller ranking datasets relating to news (Robust04), finance (FiQA), and scientific fact checking (SciFact).
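
To make the idea of a contrastive objective for ranking concrete, here is a minimal sketch of one common softmax-style form: each relevant (or augmented) passage is pulled above all other candidates for the same query. This is an illustration only, not the loss defined in the paper; the function name `contrastive_ranking_loss`, its parameters, and the temperature default are assumptions.

```python
import math
from typing import List


def contrastive_ranking_loss(
    pos_scores: List[float],
    neg_scores: List[float],
    temperature: float = 1.0,
) -> float:
    """Average negative log-probability of each positive among all candidates.

    Hypothetical helper for illustration. `pos_scores` are model scores for
    relevant passages of one query (original or augmented); `neg_scores` are
    scores for non-relevant passages of the same query.
    """
    if not pos_scores:
        raise ValueError("at least one positive score is required")
    scaled = [s / temperature for s in pos_scores + neg_scores]
    # Log-sum-exp with max subtraction for numerical stability.
    top = max(scaled)
    log_denominator = top + math.log(sum(math.exp(s - top) for s in scaled))
    # -log softmax(positive), averaged over all positives.
    losses = [log_denominator - p / temperature for p in pos_scores]
    return sum(losses) / len(losses)
```

With one positive and one negative that score equally, the loss equals log 2; it shrinks as the positive score moves above the negative one.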

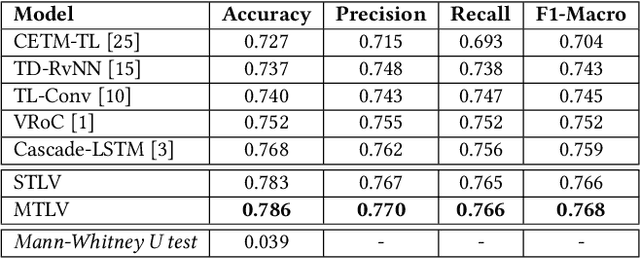

MTLTS: A Multi-Task Framework To Obtain Trustworthy Summaries From Crisis-Related Microblogs

Dec 10, 2021

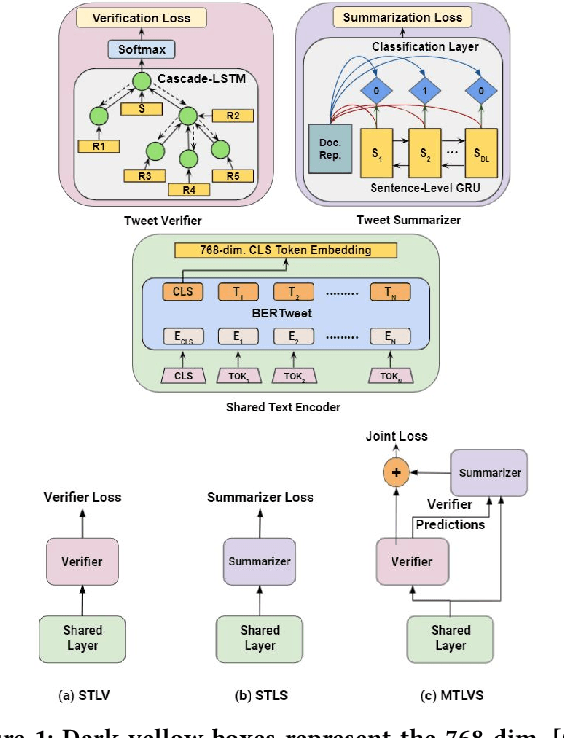

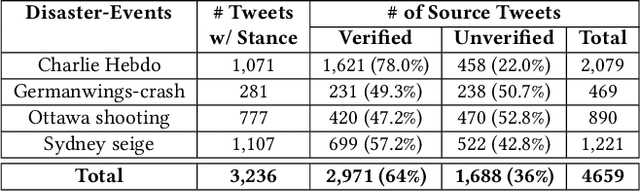

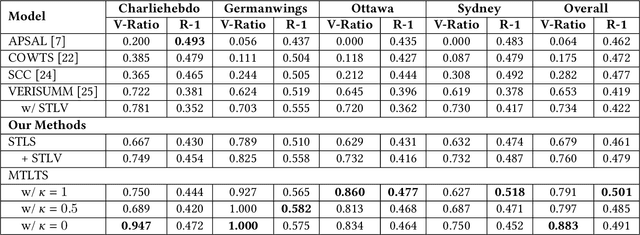

Abstract:Occurrences of catastrophes such as natural or man-made disasters trigger the spread of rumours over social media at a rapid pace. Presenting a trustworthy and summarized account of the unfolding event in near real-time to the consumers of such potentially unreliable information thus becomes an important task. In this work, we propose MTLTS, the first end-to-end solution for the task that jointly determines the credibility and summary-worthiness of tweets. Our credibility verifier is designed to recursively learn the structural properties of a Twitter conversation cascade, along with the stances of replies towards the source tweet. We then take a hierarchical multi-task learning approach, where the verifier is trained at a lower layer, and the summarizer is trained at a deeper layer where it utilizes the verifier predictions to determine the salience of a tweet. Different from existing disaster-specific summarizers, we model tweet summarization as a supervised task. Such an approach can automatically learn summary-worthy features, and can therefore generalize well across domains. When trained on the PHEME dataset [29], not only do we outperform the strongest baselines for the auxiliary task of verification/rumour detection, we also achieve 21-35% gains in the verified ratio of summary tweets, and 16-20% gains in ROUGE1-F1 scores over the existing state-of-the-art solutions for the primary task of trustworthy summarization.

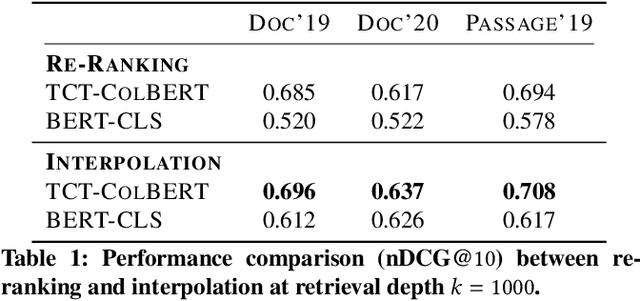

Fast Forward Indexes for Efficient Document Ranking

Oct 12, 2021

Abstract:Neural approaches, specifically transformer models, for ranking documents have delivered impressive gains in ranking performance. However, query processing using such over-parameterized models is both resource and time intensive. Consequently, to keep query processing costs manageable, trade-offs are made to reduce the number of documents to be re-ranked or consider leaner models with fewer parameters. In this paper, we propose the fast-forward index -- a simple vector forward index that facilitates ranking documents using interpolation-based ranking models. Fast-forward indexes pre-compute the dense transformer-based vector representations of documents and passages for fast CPU-based semantic similarity computation during query processing. We propose theoretically grounded index pruning and early stopping techniques to improve the query-processing throughput using fast-forward indexes. We conduct extensive large-scale experiments over the TREC-DL datasets and show up to 75% improvement in query-processing performance over hybrid indexes using only CPUs. Along with the efficiency benefits, we show that fast-forward indexes can deliver superior ranking performance due to the complementary benefits of interpolation between lexical and semantic similarities.
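
The interpolation-based re-ranking described above can be sketched roughly as follows: document vectors are pre-computed and looked up by ID, so only a dot product and a weighted sum are needed per candidate at query time. This is a simplified illustration, not the authors' implementation; the names `interpolate_rerank`, `forward_index`, and `alpha`, and the linear form `alpha * lexical + (1 - alpha) * semantic`, are assumptions, and the pruning and early stopping techniques are omitted.

```python
from typing import Dict, List, Sequence, Tuple


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def interpolate_rerank(
    lexical_scores: Dict[str, float],
    forward_index: Dict[str, List[float]],
    query_vector: Sequence[float],
    alpha: float = 0.5,
) -> List[Tuple[str, float]]:
    """Re-rank lexical candidates using pre-computed document vectors.

    Hypothetical helper: `lexical_scores` maps document IDs to first-stage
    scores (e.g. from a sparse retriever), and `forward_index` maps the same
    IDs to pre-computed dense vectors.
    """
    reranked = []
    for doc_id, lexical in lexical_scores.items():
        # Semantic score is a cheap CPU lookup plus a dot product.
        semantic = dot(query_vector, forward_index[doc_id])
        reranked.append((doc_id, alpha * lexical + (1.0 - alpha) * semantic))
    # Highest interpolated score first.
    reranked.sort(key=lambda pair: pair[1], reverse=True)
    return reranked
```

In this sketch, setting `alpha` to 1.0 reproduces the lexical ranking and 0.0 ranks purely by semantic similarity; values in between combine the two signals.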

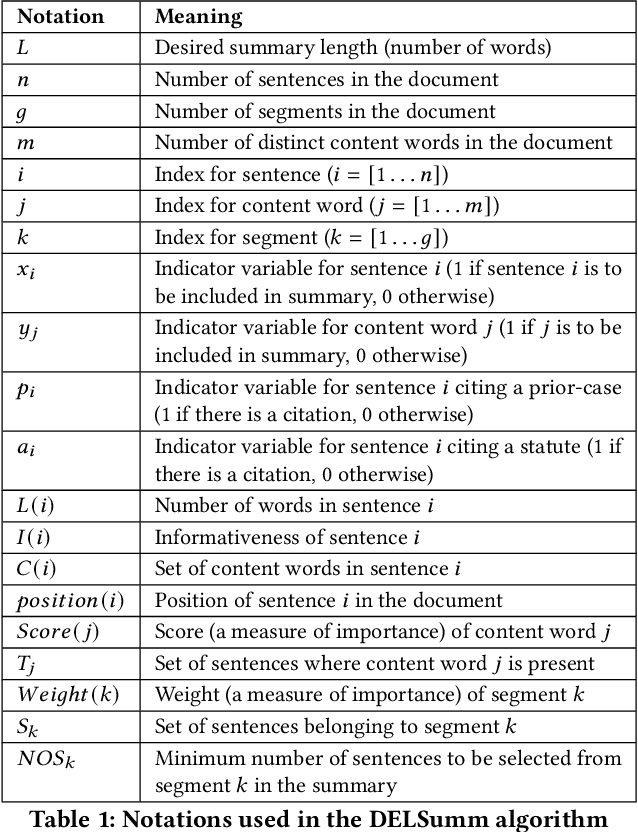

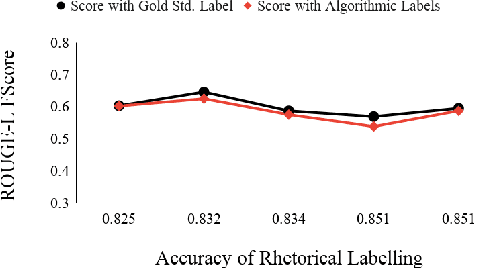

Incorporating Domain Knowledge for Extractive Summarization of Legal Case Documents

Jun 30, 2021

Abstract:Automatic summarization of legal case documents is an important and practical challenge. Apart from many domain-independent text summarization algorithms that can be used for this purpose, several algorithms have been developed specifically for summarizing legal case documents. However, most of the existing algorithms do not systematically incorporate domain knowledge that specifies what information should ideally be present in a legal case document summary. To address this gap, we propose an unsupervised summarization algorithm DELSumm which is designed to systematically incorporate guidelines from legal experts into an optimization setup. We conduct detailed experiments over case documents from the Indian Supreme Court. The experiments show that our proposed unsupervised method outperforms several strong baselines in terms of ROUGE scores, including both general summarization algorithms and legal-specific ones. In fact, though our proposed algorithm is unsupervised, it outperforms several supervised summarization models that are trained over thousands of document-summary pairs.

Learnt Sparsity for Effective and Interpretable Document Ranking

Jun 23, 2021

Abstract:Machine learning models for the ad-hoc retrieval of documents and passages have recently shown impressive improvements due to better language understanding using large pre-trained language models. However, these over-parameterized models are inherently non-interpretable and do not provide any information on the parts of the documents that were used to arrive at a certain prediction. In this paper we introduce the select and rank paradigm for document ranking, where interpretability is explicitly ensured when scoring longer documents. Specifically, we first select sentences in a document based on the input query and then predict the query-document score based only on the selected sentences, acting as an explanation. We treat sentence selection as a latent variable trained jointly with the ranker from the final output. We conduct extensive experiments to demonstrate that our inherently interpretable select-and-rank approach is competitive in comparison to other state-of-the-art methods and sometimes even outperforms them. This is due to our novel end-to-end training approach based on weighted reservoir sampling that manages to train the selector despite the stochastic sentence selection. We also show that our sentence selection approach can be used to provide explanations for models that operate on only parts of the document, such as BERT.
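
For readers unfamiliar with weighted reservoir sampling, the sketch below shows the classical Efraimidis-Spirakis scheme for drawing k items without replacement, with higher-weight items more likely to be chosen. It illustrates only the sampling primitive, not the paper's end-to-end training procedure; the function name and the use of sentence relevance weights as input are assumptions.

```python
import heapq
import random
from typing import List, Optional, Sequence


def weighted_reservoir_sample(
    items: Sequence[str],
    weights: Sequence[float],
    k: int,
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Sample up to k distinct items, favouring items with larger weights.

    Hypothetical helper: each item gets the key u ** (1 / w) with u drawn
    uniformly from [0, 1), and the k items with the largest keys are kept.
    """
    if len(items) != len(weights):
        raise ValueError("items and weights must have the same length")
    if any(w <= 0 for w in weights):
        raise ValueError("weights must be strictly positive")
    rng = rng or random.Random()
    keyed = [(rng.random() ** (1.0 / w), i) for i, w in enumerate(weights)]
    # nlargest returns fewer than k entries when there are fewer than k items.
    return [items[i] for _, i in heapq.nlargest(k, keyed)]
```

For example, calling it with sentences as `items` and query-dependent selector scores as `weights` would yield a stochastic selection of k sentences, which is the kind of selection step a ranker could then score.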
