Jurek Leonhardt

Understanding the User: An Intent-Based Ranking Dataset

Aug 30, 2024

Abstract:As information retrieval systems continue to evolve, accurate evaluation and benchmarking of these systems become pivotal. Web search datasets, such as MS MARCO, primarily provide short keyword queries without accompanying intent or descriptions, posing a challenge in comprehending the underlying information need. This paper proposes an approach to augmenting such datasets to annotate informative query descriptions, with a focus on two prominent benchmark datasets: TREC-DL-21 and TREC-DL-22. Our methodology involves utilizing state-of-the-art LLMs to analyze and comprehend the implicit intent within individual queries from benchmark datasets. By extracting key semantic elements, we construct detailed and contextually rich descriptions for these queries. To validate the generated query descriptions, we employ crowdsourcing as a reliable means of obtaining diverse human perspectives on the accuracy and informativeness of the descriptions. This information can be used as an evaluation set for tasks such as ranking, query rewriting, or others.

Data Augmentation for Sample Efficient and Robust Document Ranking

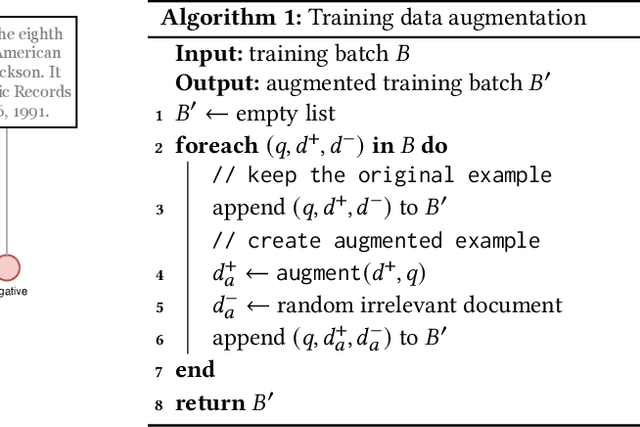

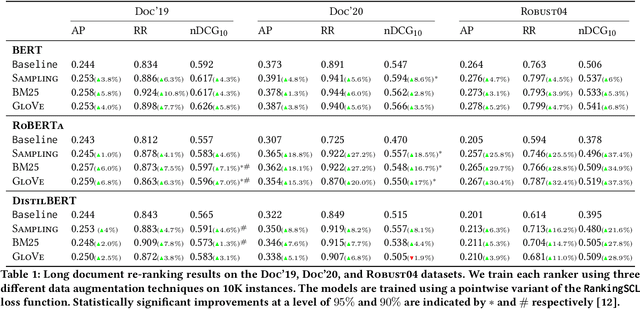

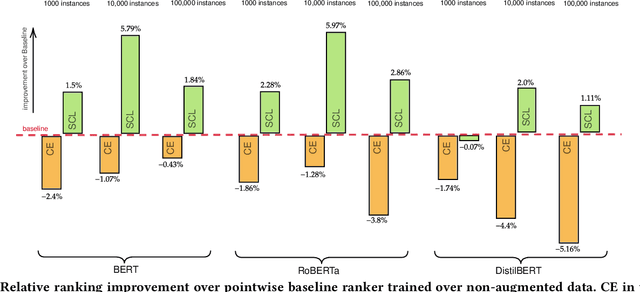

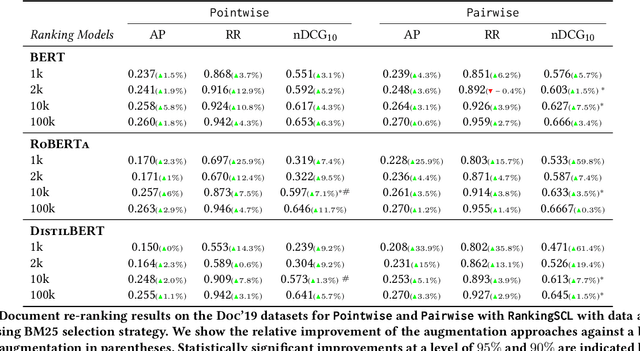

Nov 26, 2023

Abstract:Contextual ranking models have delivered impressive performance improvements over classical models in the document ranking task. However, these highly over-parameterized models tend to be data-hungry and require large amounts of data even for fine-tuning. In this paper, we propose data-augmentation methods for effective and robust ranking performance. One of the key benefits of using data augmentation is in achieving sample efficiency or learning effectively when we have only a small amount of training data. We propose supervised and unsupervised data augmentation schemes by creating training data using parts of the relevant documents in the query-document pairs. We then adapt a family of contrastive losses for the document ranking task that can exploit the augmented data to learn an effective ranking model. Our extensive experiments on subsets of the MS MARCO and TREC-DL test sets show that data augmentation, along with the ranking-adapted contrastive losses, results in performance improvements under most dataset sizes. Apart from sample efficiency, we conclusively show that data augmentation results in robust models when transferred to out-of-domain benchmarks. Our performance improvements in in-domain and more prominently in out-of-domain benchmarks show that augmentation regularizes the ranking model and improves its robustness and generalization capability.

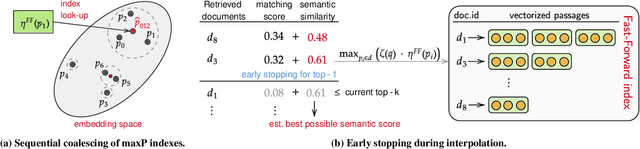

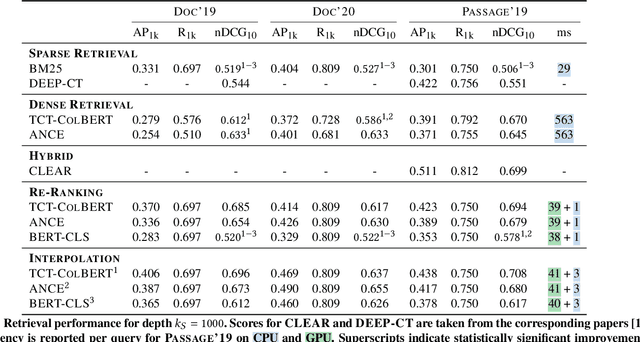

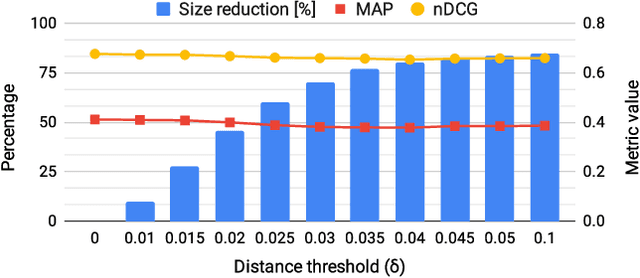

Efficient Neural Ranking using Forward Indexes and Lightweight Encoders

Nov 02, 2023

Abstract:Dual-encoder-based dense retrieval models have become the standard in IR. They employ large Transformer-based language models, which are notoriously inefficient in terms of resources and latency. We propose Fast-Forward indexes -- vector forward indexes which exploit the semantic matching capabilities of dual-encoder models for efficient and effective re-ranking. Our framework enables re-ranking at very high retrieval depths and combines the merits of both lexical and semantic matching via score interpolation. Furthermore, in order to mitigate the limitations of dual-encoders, we tackle two main challenges: Firstly, we improve computational efficiency by either pre-computing representations, avoiding unnecessary computations altogether, or reducing the complexity of encoders. This allows us to considerably improve ranking efficiency and latency. Secondly, we optimize the memory footprint and maintenance cost of indexes; we propose two complementary techniques to reduce the index size and show that, by dynamically dropping irrelevant document tokens, the index maintenance efficiency can be improved substantially. We perform evaluation to show the effectiveness and efficiency of Fast-Forward indexes -- our method has low latency and achieves competitive results without the need for hardware acceleration, such as GPUs.

Distribution-Aligned Fine-Tuning for Efficient Neural Retrieval

Nov 09, 2022

Abstract:Dual-encoder-based neural retrieval models achieve appreciable performance and complement traditional lexical retrievers well due to their semantic matching capabilities, which makes them a common choice for hybrid IR systems. However, these models exhibit a performance bottleneck in the online query encoding step, as the corresponding query encoders are usually large and complex Transformer models. In this paper we investigate heterogeneous dual-encoder models, where the two encoders are separate models that do not share parameters or initializations. We empirically show that heterogeneous dual-encoders are susceptible to collapsing representations, causing them to output constant trivial representations when they are fine-tuned using a standard contrastive loss due to a distribution mismatch. We propose DAFT, a simple two-stage fine-tuning approach that aligns the two encoders in order to prevent them from collapsing. We further demonstrate how DAFT can be used to train efficient heterogeneous dual-encoder models using lightweight query encoders.

Supervised Contrastive Learning Approach for Contextual Ranking

Jul 07, 2022

Abstract:Contextual ranking models have delivered impressive performance improvements over classical models in the document ranking task. However, these highly over-parameterized models tend to be data-hungry and require large amounts of data even for fine-tuning. This paper proposes a simple yet effective method to improve ranking performance on smaller datasets using supervised contrastive learning for the document ranking problem. We perform data augmentation by creating training data using parts of the relevant documents in the query-document pairs. We then use a supervised contrastive learning objective to learn an effective ranking model from the augmented dataset. Our experiments on subsets of the TREC-DL dataset show that, although data augmentation increases the training data size, it does not necessarily improve performance under existing pointwise or pairwise training objectives. However, our proposed supervised contrastive loss objective leads to performance improvements over the standard non-augmented setting, showcasing the utility of data augmentation using contrastive losses. Finally, we show the real benefit of using supervised contrastive learning objectives by showing marked improvements in smaller ranking datasets relating to news (Robust04), finance (FiQA), and scientific fact checking (SciFact).

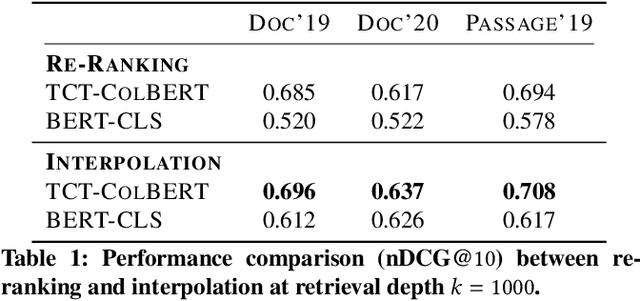

Fast Forward Indexes for Efficient Document Ranking

Oct 12, 2021

Abstract:Neural approaches, specifically transformer models, for ranking documents have delivered impressive gains in ranking performance. However, query processing using such over-parameterized models is both resource and time intensive. Consequently, to keep query processing costs manageable, trade-offs are made to reduce the number of documents to be re-ranked or consider leaner models with fewer parameters. In this paper, we propose the fast-forward index -- a simple vector forward index that facilitates ranking documents using interpolation-based ranking models. Fast-forward indexes pre-compute the dense transformer-based vector representations of documents and passages for fast CPU-based semantic similarity computation during query processing. We propose theoretically grounded index pruning and early stopping techniques to improve the query-processing throughput using fast-forward indexes. We conduct extensive large-scale experiments over the TREC-DL datasets and show up to 75% improvement in query-processing performance over hybrid indexes using only CPUs. Along with the efficiency benefits, we show that fast-forward indexes can deliver superior ranking performance due to the complementary benefits of interpolation between lexical and semantic similarities.
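The interpolation-based re-ranking described above can be illustrated with a minimal sketch. The abstract does not state the interpolation formula, so the convex combination with weight `alpha`, the dot-product similarity, and all names below (`interpolate_rerank`, `forward_index`, `lexical_scores`) are assumptions made for illustration, not the authors' implementation.

```python
from typing import Dict, List, Tuple


def dot(u: List[float], v: List[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def interpolate_rerank(
    lexical_scores: Dict[str, float],
    forward_index: Dict[str, List[float]],
    query_vec: List[float],
    alpha: float = 0.5,
) -> List[Tuple[str, float]]:
    """Re-rank lexically retrieved documents with interpolated scores.

    lexical_scores: first-stage scores (e.g. from a sparse retriever).
    forward_index:  hypothetical mapping doc_id -> pre-computed dense vector.
    alpha:          assumed interpolation weight on the lexical score.
    """
    reranked = []
    for doc_id, lexical in lexical_scores.items():
        # Look up the pre-computed document vector; no document encoding
        # happens at query time, only a cheap vector product.
        semantic = dot(query_vec, forward_index[doc_id])
        reranked.append((doc_id, alpha * lexical + (1.0 - alpha) * semantic))
    reranked.sort(key=lambda pair: pair[1], reverse=True)
    return reranked


ranking = interpolate_rerank(
    lexical_scores={"d1": 2.0, "d2": 1.0},
    forward_index={"d1": [0.0, 1.0], "d2": [1.0, 0.0]},
    query_vec=[4.0, 0.0],
    alpha=0.5,
)
print(ranking)
```

In this toy input, `d2` has the lower lexical score but the higher semantic similarity to the query, so it moves ahead of `d1` after interpolation.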

Learnt Sparsity for Effective and Interpretable Document Ranking

Jun 23, 2021

Abstract:Machine learning models for the ad-hoc retrieval of documents and passages have recently shown impressive improvements due to better language understanding using large pre-trained language models. However, these over-parameterized models are inherently non-interpretable and do not provide any information on the parts of the documents that were used to arrive at a certain prediction. In this paper we introduce the select and rank paradigm for document ranking, where interpretability is explicitly ensured when scoring longer documents. Specifically, we first select sentences in a document based on the input query and then predict the query-document score based only on the selected sentences, acting as an explanation. We treat sentence selection as a latent variable trained jointly with the ranker from the final output. We conduct extensive experiments to demonstrate that our inherently interpretable select-and-rank approach is competitive in comparison to other state-of-the-art methods and sometimes even outperforms them. This is due to our novel end-to-end training approach based on weighted reservoir sampling that manages to train the selector despite the stochastic sentence selection. We also show that our sentence selection approach can be used to provide explanations for models that operate on only parts of the document, such as BERT.
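The abstract names weighted reservoir sampling but not the variant used. As a rough sketch of the general idea, the following assumes the key-based Efraimidis-Spirakis scheme and hypothetical strictly positive per-sentence weights; the function name and inputs are illustrative and do not reproduce the authors' end-to-end training procedure.

```python
import heapq
import random
from typing import List, Sequence


def weighted_sample_without_replacement(
    weights: Sequence[float], k: int, rng: random.Random
) -> List[int]:
    """Sample up to k distinct indices, favouring larger weights.

    Each item i draws u ~ Uniform[0, 1) and receives the key u ** (1 / w_i);
    the k items with the largest keys are selected (Efraimidis-Spirakis).
    """
    if any(w <= 0 for w in weights):
        raise ValueError("weights must be strictly positive")
    keyed = [(rng.random() ** (1.0 / w), i) for i, w in enumerate(weights)]
    return [i for _, i in heapq.nlargest(k, keyed)]


# Hypothetical relevance weights for four sentences of one document.
sentence_weights = [0.1, 2.0, 0.5, 1.2]
selected = weighted_sample_without_replacement(
    sentence_weights, k=2, rng=random.Random(0)
)
print(selected)
```

Here the selected indices would stand for the sentences passed on to the ranker; how the weights are produced and how gradients reach the selector are outside the scope of this sketch.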

Exploiting Sentence-Level Representations for Passage Ranking

Jun 14, 2021

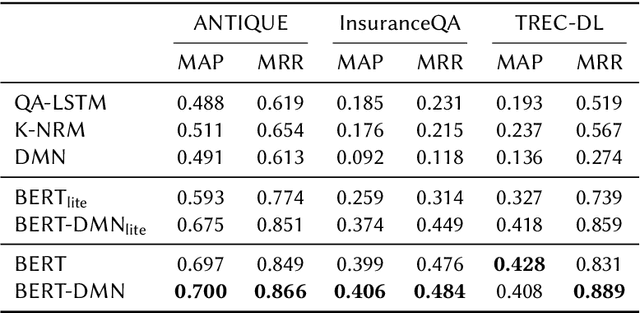

Abstract:Recently, pre-trained contextual models, such as BERT, have been shown to perform well in language-related tasks. We revisit the design decisions that govern the applicability of these models for the passage re-ranking task in open-domain question answering. We find that common approaches in the literature rely on fine-tuning a pre-trained BERT model and using a single, global representation of the input, discarding useful fine-grained relevance signals in token- or sentence-level representations. We argue that these discarded tokens hold useful information that can be leveraged. In this paper, we explicitly model the sentence-level representations by using Dynamic Memory Networks (DMNs) and conduct empirical evaluation to show improvements in passage re-ranking over fine-tuned vanilla BERT models by memory-enhanced explicit sentence modelling on a diverse set of open-domain QA datasets. We further show that freezing the BERT model and only training the DMN layer still comes close to the original performance, while improving training efficiency drastically. This indicates that the usual fine-tuning step mostly helps to aggregate the inherent information in a single output token, as opposed to adapting the whole model to the new task, and only achieves rather small gains.

Boilerplate Removal using a Neural Sequence Labeling Model

Apr 22, 2020

Abstract:The extraction of main content from web pages is an important task for numerous applications, ranging from usability aspects, like reader views for news articles in web browsers, to information retrieval or natural language processing. Existing approaches are lacking as they rely on large amounts of hand-crafted features for classification. This results in models that are tailored to a specific distribution of web pages, e.g. from a certain time frame, but lack in generalization power. We propose a neural sequence labeling model that does not rely on any hand-crafted features but takes only the HTML tags and words that appear in a web page as input. This allows us to present a browser extension which highlights the content of arbitrary web pages directly within the browser using our model. In addition, we create a new, more current dataset to show that our model is able to adapt to changes in the structure of web pages and outperform the state-of-the-art model.

Node Representation Learning for Directed Graphs

Oct 22, 2018

Abstract:We propose a novel approach for learning node representations in directed graphs, which maintains separate views or embedding spaces for the two distinct node roles induced by the directionality of the edges. In order to achieve this, we propose a novel alternating random walk strategy to generate training samples from the directed graph while preserving the role information. These samples are then trained using Skip-Gram with Negative Sampling (SGNS) with nodes retaining their source/target semantics. We conduct extensive experimental evaluation to showcase our effectiveness on several real-world datasets on link prediction, multi-label classification and graph reconstruction tasks. We show that the embeddings from our approach are indeed robust, generalizable and well performing across multiple kinds of tasks and networks. We show that we consistently outperform all random-walk based neural embedding methods for link prediction and graph reconstruction tasks. In addition to providing a theoretical interpretation of our method, we also show that we are considerably more robust than the other directed graph approaches.
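The abstract does not spell out the walk procedure. One plausible reading, sketched below under that assumption, alternates roles at every step: from a node in its source role the walk follows an outgoing edge to a target, and from a node in its target role it follows an incoming edge backwards to a source. The function names and the toy edge list are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Edge = Tuple[str, str]


def build_adjacency(edges: List[Edge]) -> Tuple[Dict[str, List[str]], Dict[str, List[str]]]:
    """Return (out-neighbours, in-neighbours) for a directed edge list."""
    out_nbrs: Dict[str, List[str]] = defaultdict(list)
    in_nbrs: Dict[str, List[str]] = defaultdict(list)
    for u, v in edges:
        out_nbrs[u].append(v)
        in_nbrs[v].append(u)
    return out_nbrs, in_nbrs


def alternating_walk(
    edges: List[Edge], start: str, length: int, rng: random.Random
) -> List[Tuple[str, str]]:
    """Walk that alternates source and target roles (assumed reading)."""
    out_nbrs, in_nbrs = build_adjacency(edges)
    node, role = start, "source"
    walk = [(node, role)]
    for _ in range(length):
        if role == "source":
            candidates, next_role = out_nbrs.get(node, []), "target"
        else:
            candidates, next_role = in_nbrs.get(node, []), "source"
        if not candidates:
            break  # dead end: no edge compatible with the current role
        node, role = rng.choice(candidates), next_role
        walk.append((node, role))
    return walk


toy_edges = [("a", "b"), ("c", "b"), ("c", "d")]
walk = alternating_walk(toy_edges, start="a", length=3, rng=random.Random(0))
print(walk)
```

Each (node, role) pair keeps the role label attached to the node, which is what would let a Skip-Gram style objective train separate source and target embeddings from such samples.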
