Jongho Lee

Department of Electrical and Computer Engineering, Seoul National University, Seoul, Republic of Korea

Deep learning water-unsuppressed MRSI at ultra-high field for simultaneous quantitative metabolic, susceptibility and myelin water imaging

Dec 16, 2025

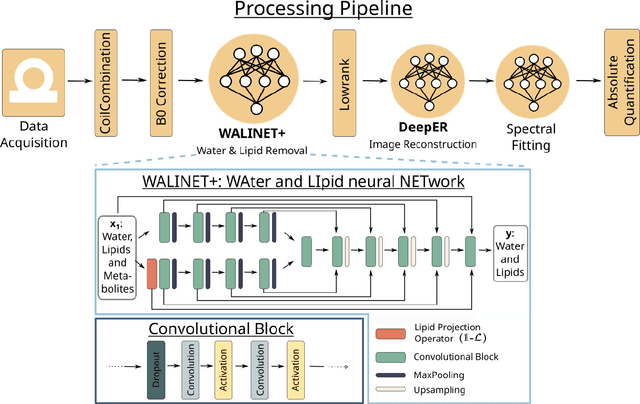

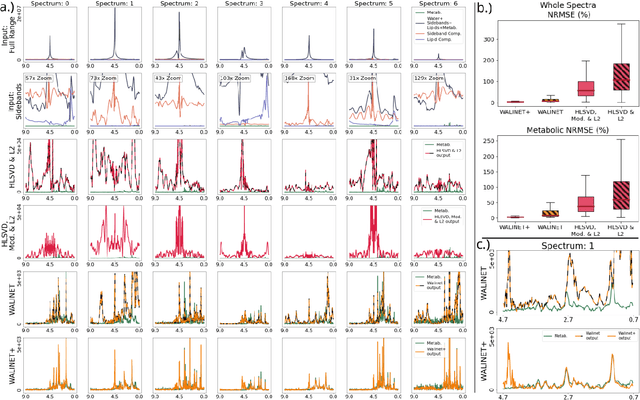

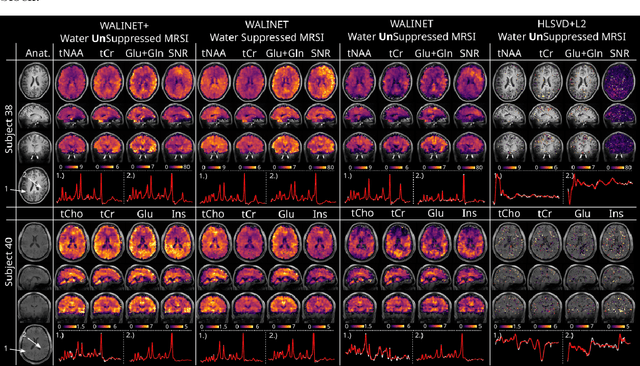

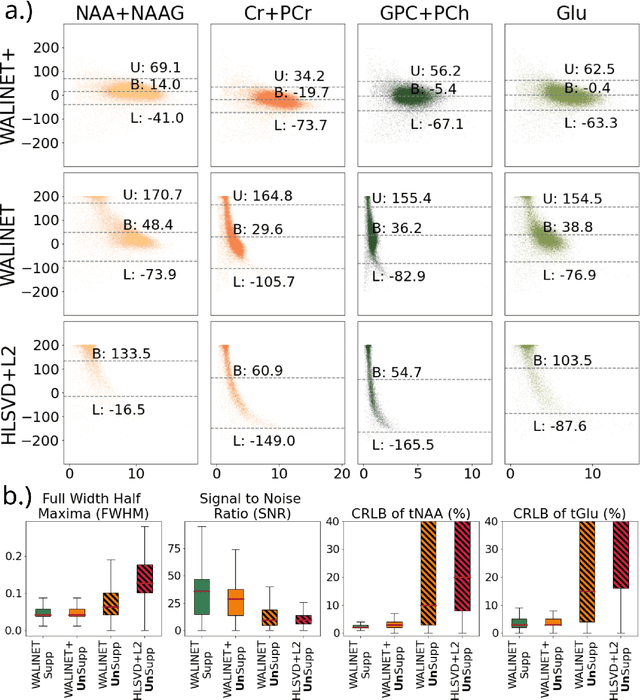

Abstract:Purpose: Magnetic Resonance Spectroscopic Imaging (MRSI) maps endogenous brain metabolism while suppressing the overwhelming water signal. Water-unsuppressed MRSI (wu-MRSI) allows simultaneous imaging of water and metabolites, but large water sidebands complicate metabolic fitting. We developed an end-to-end deep-learning pipeline to overcome these challenges at ultra-high field. Methods: Fast high-resolution wu-MRSI was acquired at 7T with non-Cartesian ECCENTRIC sampling and ultra-short echo time. A water and lipid removal network (WALINET+) was developed to remove lipids, water signal, and sidebands. MRSI reconstruction was performed by DeepER and a physics-informed network for metabolite fitting. Water signal was used for absolute metabolite quantification, quantitative susceptibility mapping (QSM), and myelin water fraction imaging (MWF). Results: WALINET+ provided the lowest NRMSE (< 2%) in simulations and, in vivo, the smallest bias (< 20%) and limits-of-agreement (±63%) between wu-MRSI and water-suppressed MRSI (ws-MRSI) scans. Several metabolites such as creatine and glutamate showed higher SNR in wu-MRSI. QSM and MWF obtained from wu-MRSI and GRE showed good agreement with 0 ppm/5.5% bias and ±0.05 ppm/±12.75% limits-of-agreement. Conclusion: High-quality metabolic, QSM, and MWF mapping of the human brain can be obtained simultaneously by ECCENTRIC wu-MRSI at 7T with 2 mm isotropic resolution in 12 min. WALINET+ robustly removes water sidebands while preserving metabolite signal, eliminating the need for water suppression and separate water acquisitions.
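
The bias and limits-of-agreement figures quoted above are the kind of numbers a Bland-Altman analysis produces. The sketch below shows one common way to compute them as percent differences between paired measurements. It is an illustration only, not the authors' analysis code: the function name `bland_altman_percent`, the 1.96 standard-deviation convention for the limits, and the sample values are all assumptions.

```python
from statistics import mean, stdev


def bland_altman_percent(a, b):
    """Return (bias, lower, upper) of percent differences between paired values.

    Assumes strictly positive measurements, so each pair mean is non-zero.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally sized samples with at least 2 pairs")
    # Percent difference of each pair, relative to the mean of that pair.
    diffs = [100.0 * (x - y) / ((x + y) / 2.0) for x, y in zip(a, b)]
    bias = mean(diffs)
    # Conventional 95% limits of agreement (an assumption of this sketch).
    half_width = 1.96 * stdev(diffs)
    return bias, bias - half_width, bias + half_width


# Hypothetical concentrations in arbitrary units, not data from the paper.
wu = [10.2, 8.1, 12.5, 9.7]
ws = [10.0, 8.4, 12.0, 9.9]
bias, lower, upper = bland_altman_percent(wu, ws)
print(f"bias={bias:.2f}%  limits=({lower:.2f}%, {upper:.2f}%)")
```

A small bias with wide limits means the two scans agree on average but can differ substantially for an individual measurement.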

MIMOSA: Multi-parametric Imaging using Multiple-echoes with Optimized Simultaneous Acquisition for highly-efficient quantitative MRI

Aug 13, 2025

Abstract:Purpose: To develop a new sequence, MIMOSA, for highly-efficient T1, T2, T2*, proton density (PD), and source separation quantitative susceptibility mapping (QSM). Methods: MIMOSA was developed based on 3D-quantification using an interleaved Look-Locker acquisition sequence with T2 preparation pulse (3D-QALAS) by combining 3D turbo Fast Low Angle Shot (FLASH) and multi-echo gradient echo acquisition modules with a spiral-like Cartesian trajectory to facilitate highly-efficient acquisition. Simulations were performed to optimize the sequence. A multi-contrast/-slice zero-shot self-supervised learning algorithm was employed for reconstruction. The accuracy of quantitative mapping was assessed by comparing MIMOSA with 3D-QALAS and reference techniques in both ISMRM/NIST phantom and in-vivo experiments. MIMOSA's acceleration capability was assessed at R = 3.3, 6.5, and 11.8 in in-vivo experiments, with repeatability assessed through scan-rescan studies. Beyond the 3T experiments, mesoscale quantitative mapping was performed at 750 µm isotropic resolution at 7T. Results: Simulations demonstrated that MIMOSA achieved improved parameter estimation accuracy compared to 3D-QALAS. Phantom experiments indicated that MIMOSA exhibited better agreement with the reference techniques than 3D-QALAS. In-vivo experiments demonstrated that an acceleration factor of up to R = 11.8 can be achieved while preserving parameter estimation accuracy, with intra-class correlation coefficients of 0.998 (T1), 0.973 (T2), 0.947 (T2*), 0.992 (QSM), 0.987 (paramagnetic susceptibility), and 0.977 (diamagnetic susceptibility) in scan-rescan studies. Whole-brain T1, T2, T2*, PD, and source separation QSM maps were obtained with 1 mm isotropic resolution in 3 min at 3T and 750 µm isotropic resolution in 13 min at 7T. Conclusion: MIMOSA demonstrated potential for highly-efficient multi-parametric mapping.

Efficient and robust 3D blind harmonization for large domain gaps

Apr 30, 2025

Abstract:Blind harmonization has emerged as a promising technique for MR image harmonization to achieve scale-invariant representations, requiring only target domain data (i.e., no source domain data necessary). However, existing methods face limitations such as inter-slice heterogeneity in 3D, moderate image quality, and limited performance for a large domain gap. To address these challenges, we introduce BlindHarmonyDiff, a novel blind 3D harmonization framework that leverages an edge-to-image model tailored specifically to harmonization. Our framework employs a 3D rectified flow trained on target domain images to reconstruct the original image from an edge map, and then yields a harmonized image from the edge map of a source domain image. We propose multi-stride patch training for efficient 3D training and a refinement module for robust inference by suppressing hallucination. Extensive experiments demonstrate that BlindHarmonyDiff outperforms prior art by harmonizing diverse source domain images to the target domain, achieving higher correspondence to the target domain characteristics. Downstream task-based quality assessments such as tissue segmentation and age prediction on diverse MR scanners further confirm the effectiveness of our approach and demonstrate the capability of our robust and generalizable blind harmonization.

Vessel segmentation for $\chi$-separation

Feb 03, 2025

Abstract:$\chi$-separation is an advanced quantitative susceptibility mapping (QSM) method that is designed to generate paramagnetic ($\chi_{para}$) and diamagnetic ($|\chi_{dia}|$) susceptibility maps, reflecting the distribution of iron and myelin in the brain. However, vessels produce artifacts that interfere with the accurate quantification of iron and myelin in applications. To address this challenge, a new vessel segmentation method for $\chi$-separation is developed. The method comprises three steps: 1) Seed generation from $\textit{R}_2^*$ and the product of $\chi_{para}$ and $|\chi_{dia}|$ maps; 2) Region growing, guided by vessel geometry, creating a vessel mask; 3) Refinement of the vessel mask by excluding non-vessel structures. The performance of the method was compared to conventional vessel segmentation methods both qualitatively and quantitatively. To demonstrate the utility of the method, it was tested in two applications: quantitative evaluation of a neural network-based $\chi$-separation reconstruction method ($\chi$-sepnet-$\textit{R}_2^*$) and population-averaged region of interest (ROI) analysis. The proposed method demonstrates superior performance to the conventional vessel segmentation methods, effectively excluding the non-vessel structures and achieving the highest Dice score coefficient. For the applications, applying vessel masks yields notable improvements for the quantitative evaluation of $\chi$-sepnet-$\textit{R}_2^*$ and statistically significant differences in population-averaged ROI analysis. These applications suggest that excluding vessels when analyzing the $\chi$-separation maps provides more accurate evaluations. The proposed method has the potential to facilitate various applications, offering reliable analysis through the generation of a high-quality vessel mask.
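
The Dice score used above to compare vessel masks has a simple definition: twice the overlap of two binary masks divided by the sum of their sizes. The following is a minimal sketch on flattened 0/1 masks. The function name `dice_coefficient`, the empty-mask convention, and the toy masks are assumptions made for illustration, not part of the paper.

```python
def dice_coefficient(pred, ref):
    """Dice overlap between two binary masks given as flat sequences of 0/1."""
    if len(pred) != len(ref):
        raise ValueError("masks must have the same number of voxels")
    # Voxels marked as vessel in both masks.
    overlap = sum(1 for p, r in zip(pred, ref) if p and r)
    # Total number of vessel voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for r in ref if r)
    # Two empty masks are treated as perfect agreement (an assumption).
    return 1.0 if total == 0 else 2.0 * overlap / total


# Toy masks: 3 vessel voxels each, 2 of them shared.
predicted = [0, 1, 1, 1, 0, 0]
reference = [0, 1, 1, 0, 1, 0]
print(dice_coefficient(predicted, reference))
```

A value of 1 means the masks coincide and 0 means they share no voxels, so a method that includes non-vessel structures is penalized through the larger denominator.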

Multi-LLM Collaborative Caption Generation in Scientific Documents

Jan 05, 2025

Abstract:Scientific figure captioning is a complex task that requires generating contextually appropriate descriptions of visual content. However, existing methods often fall short by utilizing incomplete information, treating the task solely as either an image-to-text or text summarization problem. This limitation hinders the generation of high-quality captions that fully capture the necessary details. Moreover, existing data sourced from arXiv papers contain low-quality captions, posing significant challenges for training large language models (LLMs). In this paper, we introduce a framework called Multi-LLM Collaborative Figure Caption Generation (MLBCAP) to address these challenges by leveraging specialized LLMs for distinct sub-tasks. Our approach unfolds in three key modules: (Quality Assessment) We utilize multimodal LLMs to assess the quality of training data, enabling the filtration of low-quality captions. (Diverse Caption Generation) We then employ a strategy of fine-tuning/prompting multiple LLMs on the captioning task to generate candidate captions. (Judgment) Lastly, we prompt a prominent LLM to select the highest quality caption from the candidates, followed by refining any remaining inaccuracies. Human evaluations demonstrate that informative captions produced by our approach rank better than human-written captions, highlighting its effectiveness. Our code is available at https://github.com/teamreboott/MLBCAP

$χ$-sepnet: Deep neural network for magnetic susceptibility source separation

Sep 24, 2024

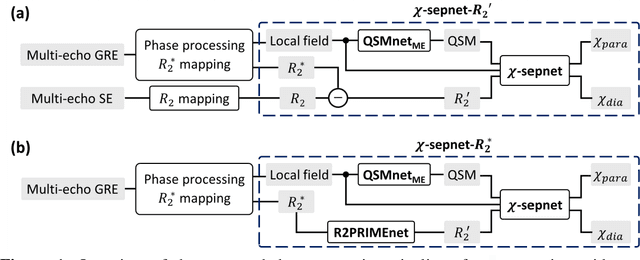

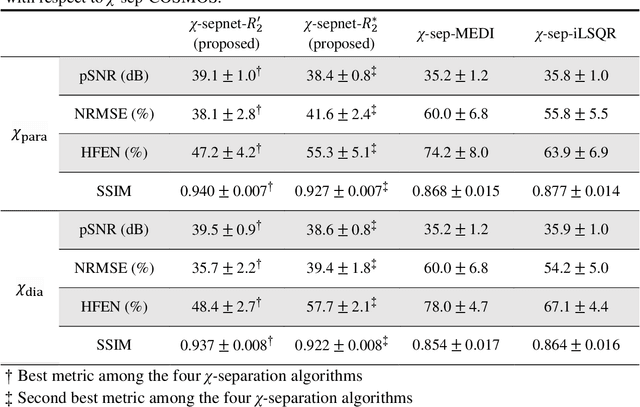

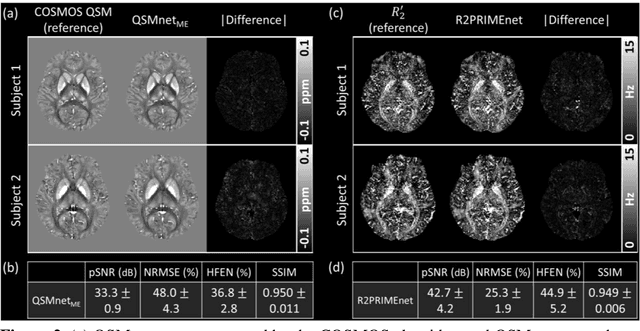

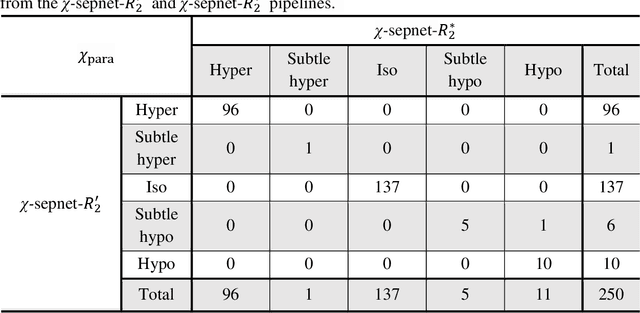

Abstract:Magnetic susceptibility source separation ($\chi$-separation), an advanced quantitative susceptibility mapping (QSM) method, enables the separate estimation of para- and diamagnetic susceptibility source distributions in the brain. The method utilizes reversible transverse relaxation (R2'=R2*-R2) to complement frequency shift information for estimating susceptibility source concentrations, requiring time-consuming data acquisition for R2 in addition to R2*. To address this challenge, we develop a new deep learning network, $\chi$-sepnet, and propose two deep learning-based susceptibility source separation pipelines, $\chi$-sepnet-R2' for inputs with multi-echo GRE and multi-echo spin-echo, and $\chi$-sepnet-R2* for input with multi-echo GRE only. $\chi$-sepnet is trained using multiple head orientation data that provide streaking artifact-free labels, generating high-quality $\chi$-separation maps. The evaluation of the pipelines encompasses both qualitative and quantitative assessments in healthy subjects, and visual inspection of lesion characteristics in multiple sclerosis patients. The susceptibility source-separated maps of the proposed pipelines delineate detailed brain structures with substantially reduced artifacts compared to those from conventional regularization-based reconstruction methods. In quantitative analysis, $\chi$-sepnet-R2' achieves the best outcomes followed by $\chi$-sepnet-R2*, outperforming the conventional methods. When the lesions of multiple sclerosis patients are assessed, both pipelines report identical lesion characteristics in most lesions ($\chi_{para}$: 99.6% and $\chi_{dia}$: 98.4% out of 250 lesions). The $\chi$-sepnet-R2* pipeline, which only requires multi-echo GRE data, has demonstrated its potential to offer broad clinical and scientific applications, although further evaluations for various diseases and pathological conditions are necessary.
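
The relation R2' = R2* - R2 stated above can be applied voxel by voxel once both relaxation-rate maps are available. The sketch below does this on flat lists of rates in Hz. The function name `r2_prime`, the clipping of negative differences to zero, and the sample values are assumptions of this illustration and are not taken from the paper.

```python
def r2_prime(r2_star, r2):
    """Voxel-wise R2' = R2* - R2 (in Hz), clipped at zero."""
    if len(r2_star) != len(r2):
        raise ValueError("R2* and R2 maps must have the same number of voxels")
    # Negative differences can arise from noise; clipping them to zero is an
    # assumption of this sketch, not a step confirmed by the source.
    return [max(rs - r, 0.0) for rs, r in zip(r2_star, r2)]


# Hypothetical relaxation rates in Hz for three voxels.
print(r2_prime([25.0, 18.0, 12.0], [15.0, 14.0, 13.0]))  # [10.0, 4.0, 0.0]
```

The need for an R2 map here is what makes the R2' pipeline depend on an additional spin-echo acquisition, which the R2*-only pipeline avoids.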

Adaptive Selection of Sampling-Reconstruction in Fourier Compressed Sensing

Sep 19, 2024

Abstract:Compressed sensing (CS) has emerged to overcome the inefficiency of Nyquist sampling. However, traditional optimization-based reconstruction is slow and cannot yield an exact image in practice. Deep learning-based reconstruction has been a promising alternative to optimization-based reconstruction, outperforming it in accuracy and computation speed. Finding an efficient sampling method with deep learning-based reconstruction, especially for Fourier CS, remains a challenge. Existing joint optimization of sampling-reconstruction works ($\mathcal{H}_1$) optimize the sampling mask but have low potential, as the mask is not adaptive to each data point. Adaptive sampling ($\mathcal{H}_2$) also has the disadvantages of difficult optimization and Pareto sub-optimality. Here, we propose a novel adaptive selection of sampling-reconstruction ($\mathcal{H}_{1.5}$) framework that selects the best sampling mask and reconstruction network for each input data. We provide theorems that our method has a higher potential than $\mathcal{H}_1$ and effectively solves the Pareto sub-optimality problem in sampling-reconstruction by using separate reconstruction networks for different sampling masks. To select the best sampling mask, we propose to quantify the high-frequency Bayesian uncertainty of the input, using a super-resolution space generation model. Our method outperforms joint optimization of sampling-reconstruction ($\mathcal{H}_1$) and adaptive sampling ($\mathcal{H}_2$) by achieving significant improvements on several Fourier CS problems.

MOST: MR reconstruction Optimization for multiple downStream Tasks via continual learning

Sep 16, 2024

Abstract:Deep learning-based Magnetic Resonance (MR) reconstruction methods have focused on generating high-quality images, but they often overlook the impact on downstream tasks (e.g., segmentation) that utilize the reconstructed images. Cascading a separately trained reconstruction network and a downstream task network has been shown to introduce performance degradation due to error propagation and domain gaps between training datasets. To mitigate this issue, downstream task-oriented reconstruction optimization has been proposed for a single downstream task. Expanding this optimization to multi-task scenarios is not straightforward. In this work, we extended this optimization to sequentially introduced multiple downstream tasks and demonstrated that a single MR reconstruction network can be optimized for multiple downstream tasks by deploying continual learning (MOST). MOST integrated techniques from replay-based continual learning and image-guided loss to overcome catastrophic forgetting. Comparative experiments demonstrated that MOST outperformed a reconstruction network without finetuning, a reconstruction network with naïve finetuning, and conventional continual learning methods. This advancement empowers the application of a single MR reconstruction network for multiple downstream tasks. The source code is available at: https://github.com/SNU-LIST/MOST

Volumetric B1+ field homogenization in 7 Tesla brain MRI using metasurface scattering

Sep 09, 2024

Abstract:Ultrahigh field magnetic resonance imaging (UHF MRI) has become an indispensable tool for human brain imaging, offering excellent diagnostic accuracy while avoiding the risks associated with invasive modalities. When the radiofrequency magnetic field of the UHF MRI encounters the multifaceted complexity of the brain, characterized by wavelength-scale, dissipative, and random heterogeneous materials, detrimental mesoscopic challenges such as B1+ field inhomogeneity and local heating arise. Here we develop a metasurface design inspired by scattering theory to achieve volumetric field homogeneity in UHF MRI. The method focuses on finding the scattering ansatz systematically and incorporates a pruning technique to achieve the minimum number of participating modes, which guarantees stable practical implementation. Using full-wave analysis of realistic human brain models under a 7 Tesla MRI, we demonstrate more than a twofold improvement in field homogeneity and suppressed local heating, achieving better performance than even the commercial 3 Tesla MRI. The result shows a noninvasive generalization of constant intensity waves in optics, offering a universal methodology applicable to higher Tesla MRI.

RTP-LX: Can LLMs Evaluate Toxicity in Multilingual Scenarios?

Apr 22, 2024

Abstract:Large language models (LLMs) and small language models (SLMs) are being adopted at remarkable speed, although their safety still remains a serious concern. With the advent of multilingual S/LLMs, the question now becomes a matter of scale: can we expand multilingual safety evaluations of these models with the same velocity at which they are deployed? To this end we introduce RTP-LX, a human-transcreated and human-annotated corpus of toxic prompts and outputs in 28 languages. RTP-LX follows participatory design practices, and a portion of the corpus is especially designed to detect culturally-specific toxic language. We evaluate seven S/LLMs on their ability to detect toxic content in a culturally-sensitive, multilingual scenario. We find that, although they typically score acceptably in terms of accuracy, they have low agreement with human judges when judging holistically the toxicity of a prompt, and have difficulty discerning harm in context-dependent scenarios, particularly with subtle-yet-harmful content (e.g. microaggressions, bias). We release this dataset to help further reduce harmful uses of these models and improve their safe deployment.
