Hayeon Lee

Efficient and robust 3D blind harmonization for large domain gaps

Apr 30, 2025

Abstract:Blind harmonization has emerged as a promising technique for MR image harmonization to achieve scale-invariant representations, requiring only target domain data (i.e., no source domain data necessary). However, existing methods face limitations such as inter-slice heterogeneity in 3D, moderate image quality, and limited performance for a large domain gap. To address these challenges, we introduce BlindHarmonyDiff, a novel blind 3D harmonization framework that leverages an edge-to-image model tailored specifically to harmonization. Our framework employs a 3D rectified flow trained on target domain images to reconstruct the original image from an edge map, and then yields a harmonized image from the edge map of a source domain image. We propose multi-stride patch training for efficient 3D training and a refinement module for robust inference by suppressing hallucination. Extensive experiments demonstrate that BlindHarmonyDiff outperforms prior art by harmonizing diverse source domain images to the target domain, achieving higher correspondence to the target domain characteristics. Downstream task-based quality assessments such as tissue segmentation and age prediction on diverse MR scanners further confirm the effectiveness of our approach and demonstrate the capability of our robust and generalizable blind harmonization.

Diffusion-based Neural Network Weights Generation

Feb 28, 2024

Abstract:Transfer learning is a topic of significant interest in recent deep learning research because it enables faster convergence and improved performance on new tasks. While the performance of transfer learning depends on the similarity of the source data to the target data, it is costly to train a model on a large number of datasets. Therefore, pretrained models are generally blindly selected with the hope that they will achieve good performance on the given task. To tackle such suboptimality of the pretrained models, we propose an efficient and adaptive transfer learning scheme through dataset-conditioned pretrained weights sampling. Specifically, we use a latent diffusion model with a variational autoencoder that can reconstruct the neural network weights, to learn the distribution of a set of pretrained weights conditioned on each dataset for transfer learning on unseen datasets. By learning the distribution of neural network weights over a variety of pretrained models, our approach enables adaptive sampling of weights for unseen datasets, achieving faster convergence and reaching competitive performance.

Co-training and Co-distillation for Quality Improvement and Compression of Language Models

Nov 07, 2023

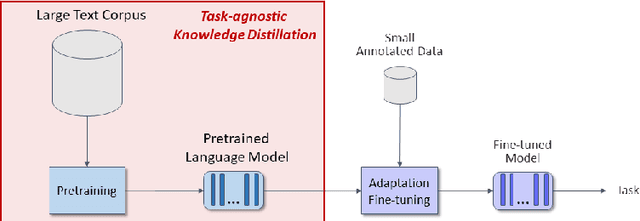

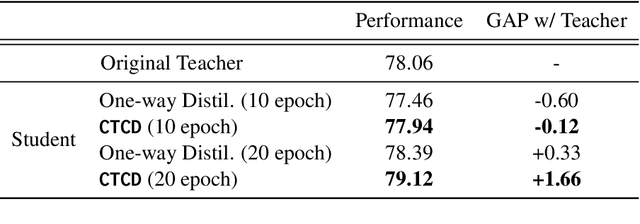

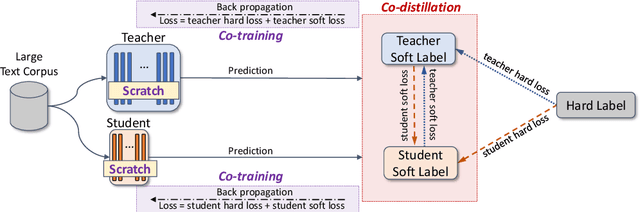

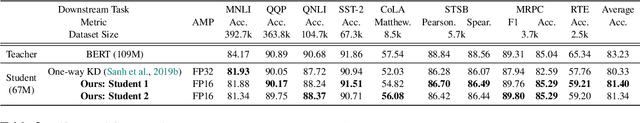

Abstract:Knowledge Distillation (KD) compresses computationally expensive pre-trained language models (PLMs) by transferring their knowledge to smaller models, allowing their use in resource-constrained or real-time settings. However, most smaller models fail to surpass the performance of the original larger model, resulting in sacrificing performance to improve inference speed. To address this issue, we propose Co-Training and Co-Distillation (CTCD), a novel framework that improves performance and inference speed together by co-training two models while mutually distilling knowledge. The CTCD framework successfully achieves this based on two significant findings: 1) Distilling knowledge from the smaller model to the larger model during co-training improves the performance of the larger model. 2) The enhanced performance of the larger model further boosts the performance of the smaller model. The CTCD framework shows promise as it can be combined with existing techniques like architecture design or data augmentation, replacing one-way KD methods, to achieve further performance improvement. Extensive ablation studies demonstrate the effectiveness of CTCD, and the small model distilled by CTCD outperforms the original larger model by a significant margin of 1.66 on the GLUE benchmark.
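The mutual distillation described in this abstract can be illustrated with a small sketch. It is not the CTCD implementation: the temperature, the weighting `alpha`, the choice of KL divergence as the distillation term, and all function names are assumptions made for illustration. Each model's loss combines a hard-label cross-entropy with a term pulling it toward the other model's softened prediction.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(target, predicted):
    """KL(target || predicted) for two discrete distributions."""
    return sum(t * math.log(t / p) for t, p in zip(target, predicted) if t > 0)


def cross_entropy(probs, label):
    """Negative log-likelihood of the true class."""
    return -math.log(probs[label])


def co_distillation_losses(large_logits, small_logits, label,
                           alpha=0.5, temperature=2.0):
    """Return (loss_large, loss_small) for one example.

    Assumption: each model treats the other's softened distribution as a
    fixed target, so knowledge flows in both directions.
    """
    p_large = softmax(large_logits, temperature)
    p_small = softmax(small_logits, temperature)
    loss_large = ((1 - alpha) * cross_entropy(softmax(large_logits), label)
                  + alpha * kl_divergence(p_small, p_large))
    loss_small = ((1 - alpha) * cross_entropy(softmax(small_logits), label)
                  + alpha * kl_divergence(p_large, p_small))
    return loss_large, loss_small


if __name__ == "__main__":
    loss_large, loss_small = co_distillation_losses(
        [3.0, 0.0, 0.0], [0.0, 3.0, 0.0], label=0)
    print(loss_large, loss_small)
```

In a real training loop both losses would be minimized together, each with respect to its own model's parameters; one-way KD corresponds to dropping the distillation term from `loss_large`.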

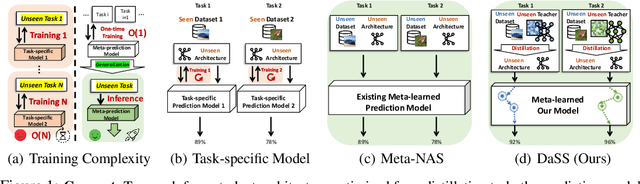

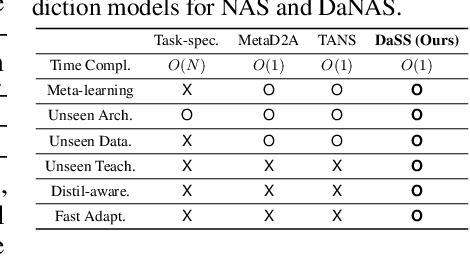

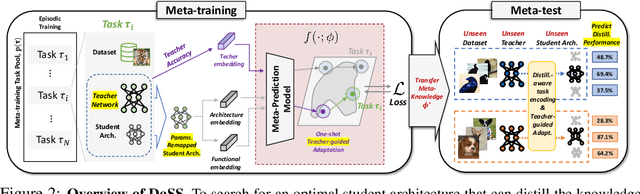

Meta-prediction Model for Distillation-Aware NAS on Unseen Datasets

May 26, 2023

Abstract:Distillation-aware Neural Architecture Search (DaNAS) aims to search for an optimal student architecture that obtains the best performance and/or efficiency when distilling the knowledge from a given teacher model. Previous DaNAS methods have mostly tackled the search for the neural architecture for fixed datasets and a fixed teacher, and so do not generalize well to a new task consisting of an unseen dataset and an unseen teacher; they thus need to perform a costly search for any new combination of datasets and teachers. For standard NAS tasks without KD, meta-learning-based computationally efficient NAS methods have been proposed, which learn the generalized search process over multiple tasks (datasets) and transfer the knowledge obtained over those tasks to a new task. However, since they assume learning from scratch without KD from a teacher, they might not be ideal for DaNAS scenarios. To eliminate the excessive computational cost of DaNAS methods and the sub-optimality of rapid NAS methods, we propose a distillation-aware meta accuracy prediction model, DaSS (Distillation-aware Student Search), which can predict a given architecture's final performance on a dataset when performing KD with a given teacher, without actually having to train it on the target task. The experimental results demonstrate that our proposed meta-prediction model successfully generalizes to multiple unseen datasets for DaNAS tasks, largely outperforming existing meta-NAS methods and rapid NAS baselines. Code is available at https://github.com/CownowAn/DaSS

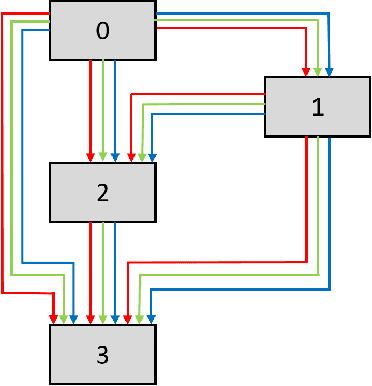

DiffusionNAG: Task-guided Neural Architecture Generation with Diffusion Models

May 26, 2023

Abstract:Neural Architecture Search (NAS) has emerged as a powerful technique for automating neural architecture design. However, existing NAS methods either require an excessive amount of time for repetitive training or sampling of many task-irrelevant architectures. Moreover, they lack generalization across different tasks and usually require searching for optimal architectures for each task from scratch without reusing the knowledge from the previous NAS tasks. To tackle such limitations of existing NAS methods, we propose a novel transferable task-guided Neural Architecture Generation (NAG) framework based on diffusion models, dubbed DiffusionNAG. With the guidance of a surrogate model, such as a performance predictor for a given task, our DiffusionNAG can generate task-optimal architectures for diverse tasks, including unseen tasks. DiffusionNAG is highly efficient as it generates task-optimal neural architectures by leveraging the prior knowledge obtained from the previous tasks and neural architecture distribution. Furthermore, we introduce a score network to ensure the generation of valid architectures represented as directed acyclic graphs, unlike existing graph generative models that focus on generating undirected graphs. Extensive experiments demonstrate that DiffusionNAG significantly outperforms the state-of-the-art transferable NAG model in architecture generation quality, as well as previous NAS methods on four computer vision datasets with largely reduced computational cost.

A Study on Knowledge Distillation from Weak Teacher for Scaling Up Pre-trained Language Models

May 26, 2023

Abstract:Distillation from Weak Teacher (DWT) is a method of transferring knowledge from a smaller, weaker teacher model to a larger student model to improve its performance. Previous studies have shown that DWT can be effective in the vision domain and natural language processing (NLP) pre-training stage. Specifically, DWT shows promise in practical scenarios, such as enhancing new-generation or larger models using pre-trained yet older or smaller models, and settings with a limited resource budget. However, the optimal conditions for using DWT have yet to be fully investigated in NLP pre-training. Therefore, this study examines three key factors to optimize DWT, distinct from those used in the vision domain or traditional knowledge distillation. These factors are: (i) the impact of teacher model quality on DWT effectiveness, (ii) guidelines for adjusting the weighting value for DWT loss, and (iii) the impact of parameter remapping as a student model initialization technique for DWT.

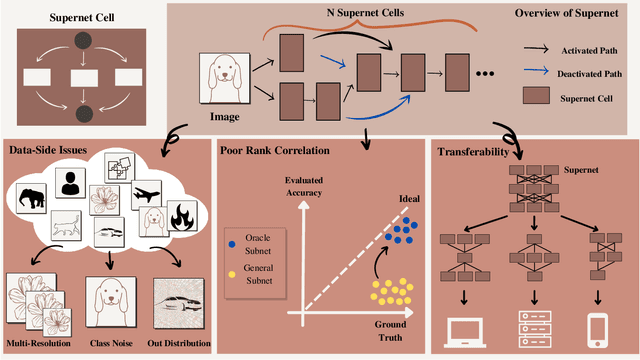

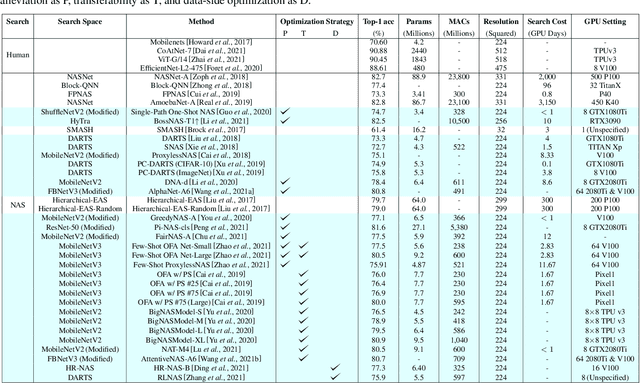

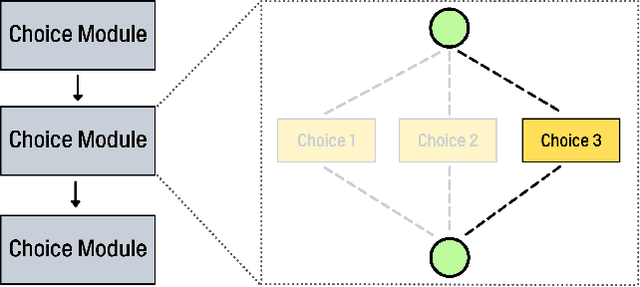

SuperNet in Neural Architecture Search: A Taxonomic Survey

Apr 08, 2022

Abstract:Deep Neural Networks (DNN) have made significant progress in a wide range of visual recognition tasks such as image classification, object detection, and semantic segmentation. The evolution of convolutional architectures has led to better performance at the price of expensive computational costs. In addition, network design has become a difficult task, which is labor-intensive and requires a high level of domain knowledge. To mitigate such issues, a variety of neural architecture search methods have been studied that automatically search for optimal architectures, achieving models with impressive performance that outperform human-designed counterparts. This survey aims to provide an overview of existing works in this field of research and focuses specifically on supernet optimization, which builds a neural network that assembles all the architectures as its sub-models by using weight sharing. We do so by categorizing supernet optimization methods according to the common challenges in the literature that they address: data-side optimization, poor rank correlation alleviation, and transferable NAS for a number of deployment scenarios.
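As a rough illustration of the weight-sharing idea mentioned in this abstract, the toy sketch below stores one weight per (layer, operation) pair and evaluates any sub-architecture by picking one operation per layer. The class name `ToySupernet`, the three scalar operations, and the random initialization are hypothetical and chosen only to keep the example small; real supernets share convolutional or transformer weights.

```python
import random

# Candidate operations per layer; each takes an input and its shared weight.
OPS = {
    "scale": lambda x, w: w * x,
    "shift": lambda x, w: x + w,
    "skip": lambda x, w: x,
}


class ToySupernet:
    """Holds one shared weight for every (layer, operation) pair."""

    def __init__(self, num_layers, seed=0):
        rng = random.Random(seed)
        self.weights = [
            {op: rng.uniform(-1.0, 1.0) for op in OPS}
            for _ in range(num_layers)
        ]

    def sample_architecture(self, rng):
        """Pick one operation name per layer."""
        return [rng.choice(sorted(OPS)) for _ in self.weights]

    def forward(self, x, architecture):
        """Run a sub-model; it reuses the supernet's weights, no copies."""
        for layer_weights, op in zip(self.weights, architecture):
            x = OPS[op](x, layer_weights[op])
        return x


if __name__ == "__main__":
    net = ToySupernet(num_layers=3)
    rng = random.Random(1)
    for _ in range(3):
        arch = net.sample_architecture(rng)
        print(arch, net.forward(1.0, arch))
```

Because every sub-model reads from the same `weights` table, updating a weight while training one sampled architecture changes the behavior of every other architecture that selects the same operation at that layer.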

Online Hyperparameter Meta-Learning with Hypergradient Distillation

Oct 06, 2021

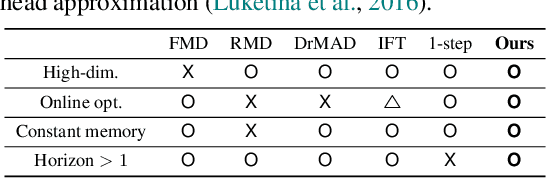

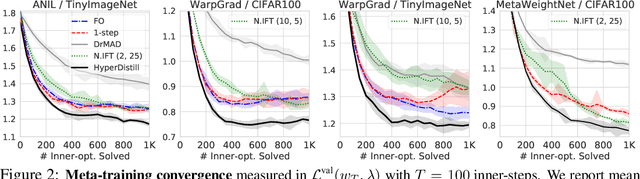

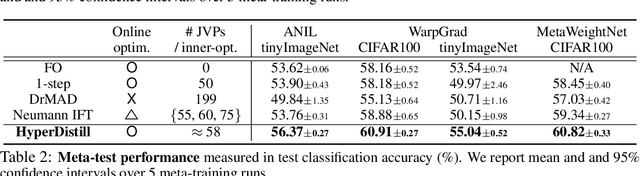

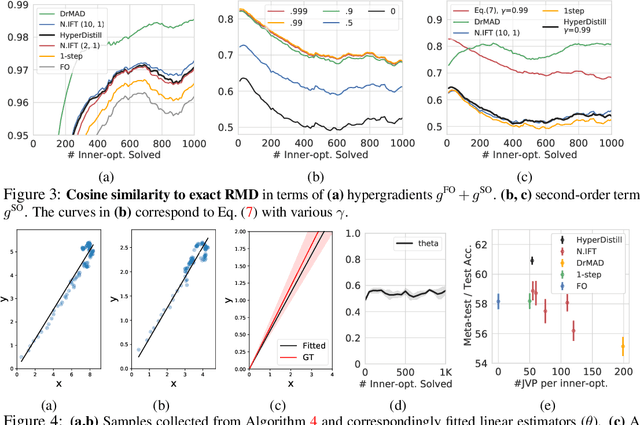

Abstract:Many gradient-based meta-learning methods assume a set of parameters that do not participate in inner-optimization, which can be considered as hyperparameters. Although such hyperparameters can be optimized using the existing gradient-based hyperparameter optimization (HO) methods, they suffer from the following issues. Unrolled differentiation methods do not scale well to high-dimensional hyperparameters or horizon length, Implicit Function Theorem (IFT) based methods are restrictive for online optimization, and short horizon approximations suffer from short horizon bias. In this work, we propose a novel HO method that can overcome these limitations, by approximating the second-order term with knowledge distillation. Specifically, we parameterize a single Jacobian-vector product (JVP) for each HO step and minimize the distance from the true second-order term. Our method allows online optimization and also is scalable to the hyperparameter dimension and the horizon length. We demonstrate the effectiveness of our method on two different meta-learning methods and three benchmark datasets.
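For readers unfamiliar with the Jacobian-vector product mentioned in this abstract, the sketch below shows what a JVP computes: the product of a function's Jacobian with a direction vector, obtained without forming the Jacobian. It uses central finite differences on a toy function and is not the paper's method, which learns a parameterized JVP; `jvp_finite_difference`, `toy_map`, and the step size `eps` are assumptions for illustration.

```python
def jvp_finite_difference(f, x, v, eps=1e-6):
    """Approximate J_f(x) @ v with a central difference along direction v.

    f maps a list of floats to a list of floats; the Jacobian itself is
    never built, only two evaluations of f are needed.
    """
    x_plus = [xi + eps * vi for xi, vi in zip(x, v)]
    x_minus = [xi - eps * vi for xi, vi in zip(x, v)]
    f_plus = f(x_plus)
    f_minus = f(x_minus)
    return [(a - b) / (2.0 * eps) for a, b in zip(f_plus, f_minus)]


def toy_map(x):
    """Toy function with Jacobian [[x1, x0], [2*x0, 3]]."""
    return [x[0] * x[1], x[0] ** 2 + 3.0 * x[1]]


if __name__ == "__main__":
    # At x = (2, 3) the Jacobian is [[3, 2], [4, 3]].
    print(jvp_finite_difference(toy_map, [2.0, 3.0], [1.0, -1.0]))
```

The cost is two function evaluations regardless of the input dimension, which is why JVPs are attractive when the full second-order term is too expensive to materialize.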

Rapid Neural Architecture Search by Learning to Generate Graphs from Datasets

Jul 02, 2021

Abstract:Despite the success of recent Neural Architecture Search (NAS) methods on various tasks, which have been shown to output networks that largely outperform human-designed networks, conventional NAS methods have mostly tackled the optimization of searching for the network architecture for a single task (dataset), which does not generalize well across multiple tasks (datasets). Moreover, since such task-specific methods search for a neural architecture from scratch for every given task, they incur a large computational cost, which is problematic when the time and monetary budget are limited. In this paper, we propose an efficient NAS framework that is trained once on a database consisting of datasets and pretrained networks and can rapidly search for a neural architecture for a novel dataset. The proposed MetaD2A (Meta Dataset-to-Architecture) model can stochastically generate graphs (architectures) from a given set (dataset) via a cross-modal latent space learned with amortized meta-learning. Moreover, we also propose a meta-performance predictor to estimate and select the best architecture without direct training on target datasets. The experimental results demonstrate that our model meta-learned on subsets of ImageNet-1K and architectures from NAS-Bench 201 search space successfully generalizes to multiple unseen datasets including CIFAR-10 and CIFAR-100, with an average search time of 33 GPU seconds. Even under MobileNetV3 search space, MetaD2A is 5.5K times faster than NSGANetV2, a transferable NAS method, with comparable performance. We believe that MetaD2A proposes a new research direction for rapid NAS as well as ways to utilize the knowledge from rich databases of datasets and architectures accumulated over the past years. Code is available at https://github.com/HayeonLee/MetaD2A.

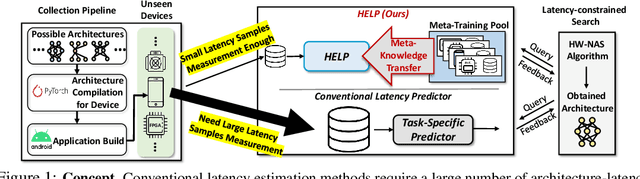

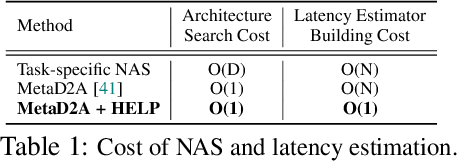

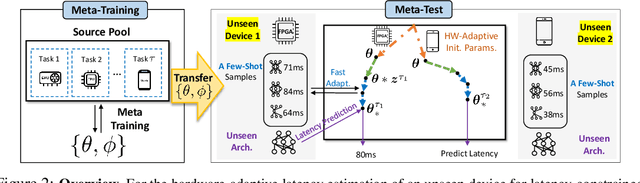

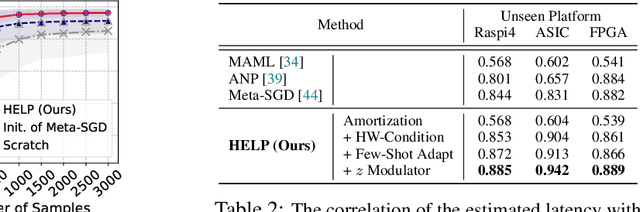

HELP: Hardware-Adaptive Efficient Latency Predictor for NAS via Meta-Learning

Jun 16, 2021

Abstract:For deployment, neural architecture search should be hardware-aware, in order to satisfy the device-specific constraints (e.g., memory usage, latency and energy consumption) and enhance the model efficiency. Existing methods on hardware-aware NAS collect a large number of samples (e.g., accuracy and latency) from a target device and build either a lookup table or a latency estimator. However, such an approach is impractical in real-world scenarios as there exist numerous devices with different hardware specifications, and collecting samples from such a large number of devices will require prohibitive computational and monetary cost. To overcome such limitations, we propose Hardware-adaptive Efficient Latency Predictor (HELP), which formulates the device-specific latency estimation problem as a meta-learning problem, such that we can estimate the latency of a model's performance for a given task on an unseen device with a few samples. To this end, we introduce novel hardware embeddings to embed any device by considering it as a black-box function that outputs latencies, and meta-learn the hardware-adaptive latency predictor in a device-dependent manner, using the hardware embeddings. We validate the proposed HELP for its latency estimation performance on unseen platforms, on which it achieves high estimation performance with as few as 10 measurement samples, outperforming all relevant baselines. We also validate end-to-end NAS frameworks using HELP against ones without it, and show that it largely reduces the total time cost of the base NAS method, in latency-constrained settings.
