Se-Young Yun

MERIT Feedback Elicits Better Bargaining in LLM Negotiators

Feb 12, 2026Abstract:Bargaining is often regarded as a logical arena rather than an art or a matter of intuition, yet Large Language Models (LLMs) still struggle to navigate it due to limited strategic depth and difficulty adapting to complex human factors. Current benchmarks rarely capture this limitation. To bridge this gap, we present an utility feedback centric framework. Our contributions are: (i) AgoraBench, a new benchmark spanning nine challenging settings (e.g., deception, monopoly) that supports diverse strategy modeling; (ii) human-aligned, economically grounded metrics derived from utility theory. This is operationalized via agent utility, negotiation power, and acquisition ratio that implicitly measure how well the negotiation aligns with human preference and (iii) a human preference grounded dataset with learning pipeline that strengthens LLMs' bargaining ability through both prompting and finetuning. Empirical results indicate that baseline LLM strategies often diverge from human preferences, while our mechanism substantially improves negotiation performance, yielding deeper strategic behavior and stronger opponent awareness.

A Jointly Efficient and Optimal Algorithm for Heteroskedastic Generalized Linear Bandits with Adversarial Corruptions

Feb 11, 2026Abstract:We consider the problem of heteroskedastic generalized linear bandits (GLBs) with adversarial corruptions, which subsumes various stochastic contextual bandit settings, including heteroskedastic linear bandits and logistic/Poisson bandits. We propose HCW-GLB-OMD, which consists of two components: an online mirror descent (OMD)-based estimator and Hessian-based confidence weights to achieve corruption robustness. This is computationally efficient in that it only requires ${O}(1)$ space and time complexity per iteration. Under the self-concordance assumption on the link function, we show a regret bound of $\tilde{O}\left( d \sqrt{\sum_t g(τ_t) \dotμ_{t,\star}} + d^2 g_{\max} κ+ d κC \right)$, where $\dotμ_{t,\star}$ is the slope of $μ$ around the optimal arm at time $t$, $g(τ_t)$'s are potentially exogenously time-varying dispersions (e.g., $g(τ_t) = σ_t^2$ for heteroskedastic linear bandits, $g(τ_t) = 1$ for Bernoulli and Poisson), $g_{\max} = \max_{t \in [T]} g(τ_t)$ is the maximum dispersion, and $C \geq 0$ is the total corruption budget of the adversary. We complement this with a lower bound of $\tildeΩ(d \sqrt{\sum_t g(τ_t) \dotμ_{t,\star}} + d C)$, unifying previous problem-specific lower bounds. Thus, our algorithm achieves, up to a $κ$-factor in the corruption term, instance-wise minimax optimality simultaneously across various instances of heteroskedastic GLBs with adversarial corruptions.

Weisfeiler and Lehman Go Categorical

Feb 06, 2026Abstract:While lifting map has significantly enhanced the expressivity of graph neural networks, extending this paradigm to hypergraphs remains fragmented. To address this, we introduce the categorical Weisfeiler-Lehman framework, which formalizes lifting as a functorial mapping from an arbitrary data category to the unifying category of graded posets. When applied to hypergraphs, this perspective allows us to systematically derive Hypergraph Isomorphism Networks, a family of neural architectures where the message passing topology is strictly determined by the choice of functor. We introduce two distinct functors from the category of hypergraphs: an incidence functor and a symmetric simplicial complex functor. While the incidence architecture structurally mirrors standard bipartite schemes, our functorial derivation enforces a richer information flow over the resulting poset, capturing complex intersection geometries often missed by existing methods. We theoretically characterize the expressivity of these models, proving that both the incidence-based and symmetric simplicial approaches subsume the expressive power of the standard Hypergraph Weisfeiler-Lehman test. Extensive experiments on real-world benchmarks validate these theoretical findings.

When and Where to Attack? Stage-wise Attention-Guided Adversarial Attack on Large Vision Language Models

Feb 04, 2026Abstract:Adversarial attacks against Large Vision-Language Models (LVLMs) are crucial for exposing safety vulnerabilities in modern multimodal systems. Recent attacks based on input transformations, such as random cropping, suggest that spatially localized perturbations can be more effective than global image manipulation. However, randomly cropping the entire image is inherently stochastic and fails to use the limited per-pixel perturbation budget efficiently. We make two key observations: (i) regional attention scores are positively correlated with adversarial loss sensitivity, and (ii) attacking high-attention regions induces a structured redistribution of attention toward subsequent salient regions. Based on these findings, we propose Stage-wise Attention-Guided Attack (SAGA), an attention-guided framework that progressively concentrates perturbations on high-attention regions. SAGA enables more efficient use of constrained perturbation budgets, producing highly imperceptible adversarial examples while consistently achieving state-of-the-art attack success rates across ten LVLMs. The source code is available at https://github.com/jackwaky/SAGA.

Generative Visual Code Mobile World Models

Feb 02, 2026Abstract:Mobile Graphical User Interface (GUI) World Models (WMs) offer a promising path for improving mobile GUI agent performance at train- and inference-time. However, current approaches face a critical trade-off: text-based WMs sacrifice visual fidelity, while the inability of visual WMs in precise text rendering led to their reliance on slow, complex pipelines dependent on numerous external models. We propose a novel paradigm: visual world modeling via renderable code generation, where a single Vision-Language Model (VLM) predicts the next GUI state as executable web code that renders to pixels, rather than generating pixels directly. This combines the strengths of both approaches: VLMs retain their linguistic priors for precise text rendering while their pre-training on structured web code enables high-fidelity visual generation. We introduce gWorld (8B, 32B), the first open-weight visual mobile GUI WMs built on this paradigm, along with a data generation framework (gWorld) that automatically synthesizes code-based training data. In extensive evaluation across 4 in- and 2 out-of-distribution benchmarks, gWorld sets a new pareto frontier in accuracy versus model size, outperforming 8 frontier open-weight models over 50.25x larger. Further analyses show that (1) scaling training data via gWorld yields meaningful gains, (2) each component of our pipeline improves data quality, and (3) stronger world modeling improves downstream mobile GUI policy performance.

KLASS: KL-Guided Fast Inference in Masked Diffusion Models

Nov 07, 2025Abstract:Masked diffusion models have demonstrated competitive results on various tasks including language generation. However, due to its iterative refinement process, the inference is often bottlenecked by slow and static sampling speed. To overcome this problem, we introduce `KL-Adaptive Stability Sampling' (KLASS), a fast yet effective sampling method that exploits token-level KL divergence to identify stable, high-confidence predictions. By unmasking multiple tokens in each iteration without any additional model training, our approach speeds up generation significantly while maintaining sample quality. On reasoning benchmarks, KLASS achieves up to $2.78\times$ wall-clock speedups while improving performance over standard greedy decoding, attaining state-of-the-art results among diffusion-based samplers. We further validate KLASS across diverse domains, including text, image, and molecular generation, showing its effectiveness as a broadly applicable sampler across different models.

QuRe: Query-Relevant Retrieval through Hard Negative Sampling in Composed Image Retrieval

Jul 16, 2025

Abstract:Composed Image Retrieval (CIR) retrieves relevant images based on a reference image and accompanying text describing desired modifications. However, existing CIR methods only focus on retrieving the target image and disregard the relevance of other images. This limitation arises because most methods employing contrastive learning-which treats the target image as positive and all other images in the batch as negatives-can inadvertently include false negatives. This may result in retrieving irrelevant images, reducing user satisfaction even when the target image is retrieved. To address this issue, we propose Query-Relevant Retrieval through Hard Negative Sampling (QuRe), which optimizes a reward model objective to reduce false negatives. Additionally, we introduce a hard negative sampling strategy that selects images positioned between two steep drops in relevance scores following the target image, to effectively filter false negatives. In order to evaluate CIR models on their alignment with human satisfaction, we create Human-Preference FashionIQ (HP-FashionIQ), a new dataset that explicitly captures user preferences beyond target retrieval. Extensive experiments demonstrate that QuRe achieves state-of-the-art performance on FashionIQ and CIRR datasets while exhibiting the strongest alignment with human preferences on the HP-FashionIQ dataset. The source code is available at https://github.com/jackwaky/QuRe.

Efficient Parametric SVD of Koopman Operator for Stochastic Dynamical Systems

Jul 09, 2025

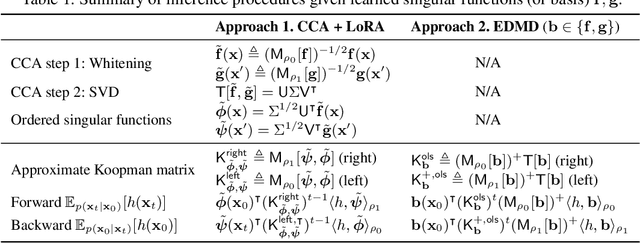

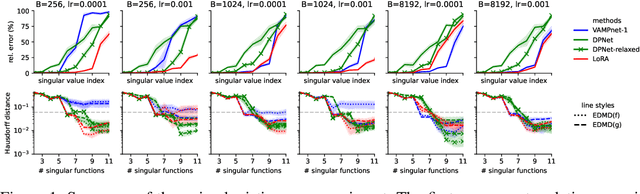

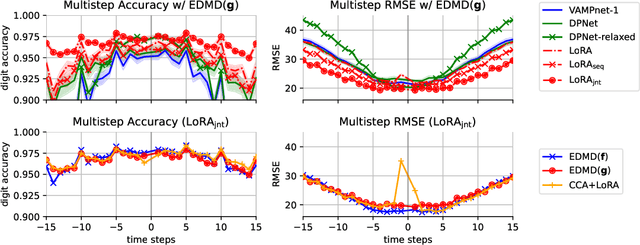

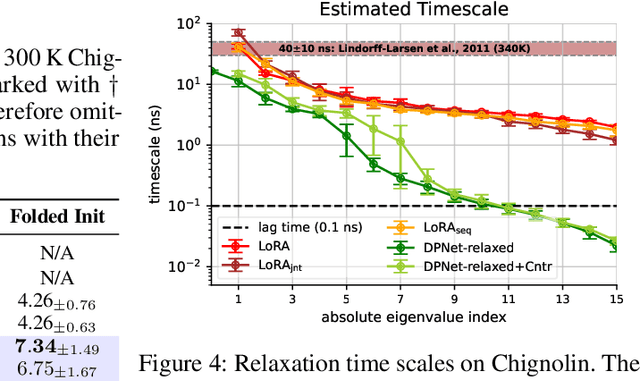

Abstract:The Koopman operator provides a principled framework for analyzing nonlinear dynamical systems through linear operator theory. Recent advances in dynamic mode decomposition (DMD) have shown that trajectory data can be used to identify dominant modes of a system in a data-driven manner. Building on this idea, deep learning methods such as VAMPnet and DPNet have been proposed to learn the leading singular subspaces of the Koopman operator. However, these methods require backpropagation through potentially numerically unstable operations on empirical second moment matrices, such as singular value decomposition and matrix inversion, during objective computation, which can introduce biased gradient estimates and hinder scalability to large systems. In this work, we propose a scalable and conceptually simple method for learning the top-k singular functions of the Koopman operator for stochastic dynamical systems based on the idea of low-rank approximation. Our approach eliminates the need for unstable linear algebraic operations and integrates easily into modern deep learning pipelines. Empirical results demonstrate that the learned singular subspaces are both reliable and effective for downstream tasks such as eigen-analysis and multi-step prediction.

Automated Skill Discovery for Language Agents through Exploration and Iterative Feedback

Jun 04, 2025Abstract:Training large language model (LLM) agents to acquire necessary skills and perform diverse tasks within an environment is gaining interest as a means to enable open-endedness. However, creating the training dataset for their skill acquisition faces several challenges. Manual trajectory collection requires significant human effort. Another approach, where LLMs directly propose tasks to learn, is often invalid, as the LLMs lack knowledge of which tasks are actually feasible. Moreover, the generated data may not provide a meaningful learning signal, as agents often already perform well on the proposed tasks. To address this, we propose a novel automatic skill discovery framework EXIF for LLM-powered agents, designed to improve the feasibility of generated target behaviors while accounting for the agents' capabilities. Our method adopts an exploration-first strategy by employing an exploration agent (Alice) to train the target agent (Bob) to learn essential skills in the environment. Specifically, Alice first interacts with the environment to retrospectively generate a feasible, environment-grounded skill dataset, which is then used to train Bob. Crucially, we incorporate an iterative feedback loop, where Alice evaluates Bob's performance to identify areas for improvement. This feedback then guides Alice's next round of exploration, forming a closed-loop data generation process. Experiments on Webshop and Crafter demonstrate EXIF's ability to effectively discover meaningful skills and iteratively expand the capabilities of the trained agent without any human intervention, achieving substantial performance improvements. Interestingly, we observe that setting Alice to the same model as Bob also notably improves performance, demonstrating EXIF's potential for building a self-evolving system.

LLM Agents for Bargaining with Utility-based Feedback

May 29, 2025Abstract:Bargaining, a critical aspect of real-world interactions, presents challenges for large language models (LLMs) due to limitations in strategic depth and adaptation to complex human factors. Existing benchmarks often fail to capture this real-world complexity. To address this and enhance LLM capabilities in realistic bargaining, we introduce a comprehensive framework centered on utility-based feedback. Our contributions are threefold: (1) BargainArena, a novel benchmark dataset with six intricate scenarios (e.g., deceptive practices, monopolies) to facilitate diverse strategy modeling; (2) human-aligned, economically-grounded evaluation metrics inspired by utility theory, incorporating agent utility and negotiation power, which implicitly reflect and promote opponent-aware reasoning (OAR); and (3) a structured feedback mechanism enabling LLMs to iteratively refine their bargaining strategies. This mechanism can positively collaborate with in-context learning (ICL) prompts, including those explicitly designed to foster OAR. Experimental results show that LLMs often exhibit negotiation strategies misaligned with human preferences, and that our structured feedback mechanism significantly improves their performance, yielding deeper strategic and opponent-aware reasoning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge