Jiye Kim

Deep learning water-unsuppressed MRSI at ultra-high field for simultaneous quantitative metabolic, susceptibility and myelin water imaging

Dec 16, 2025

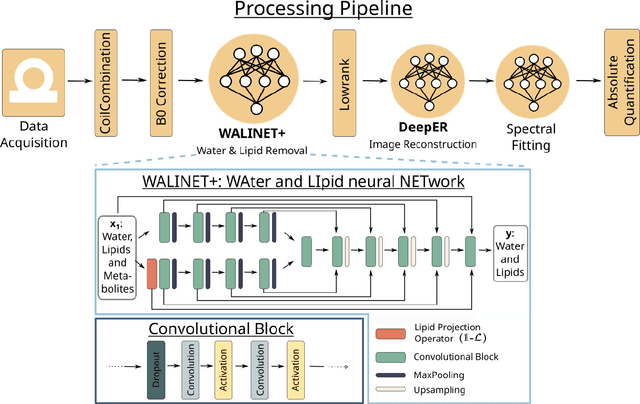

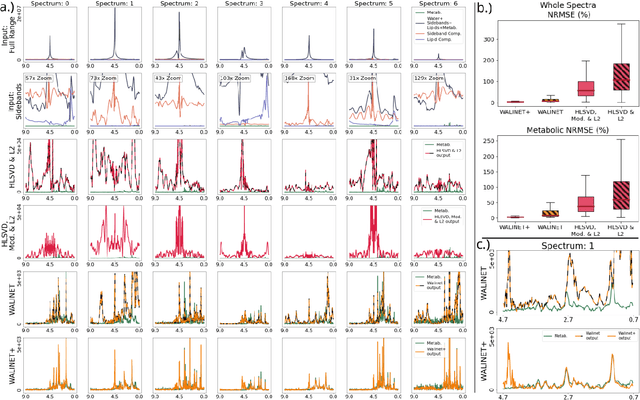

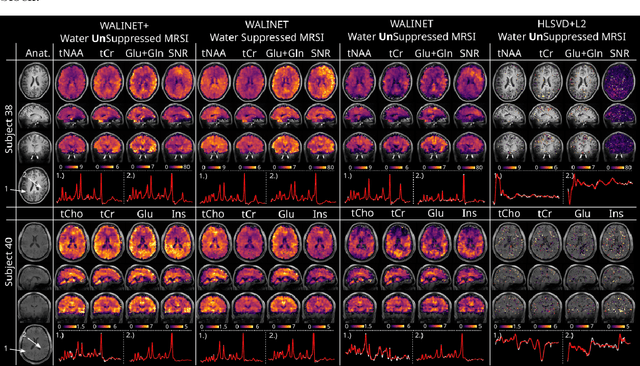

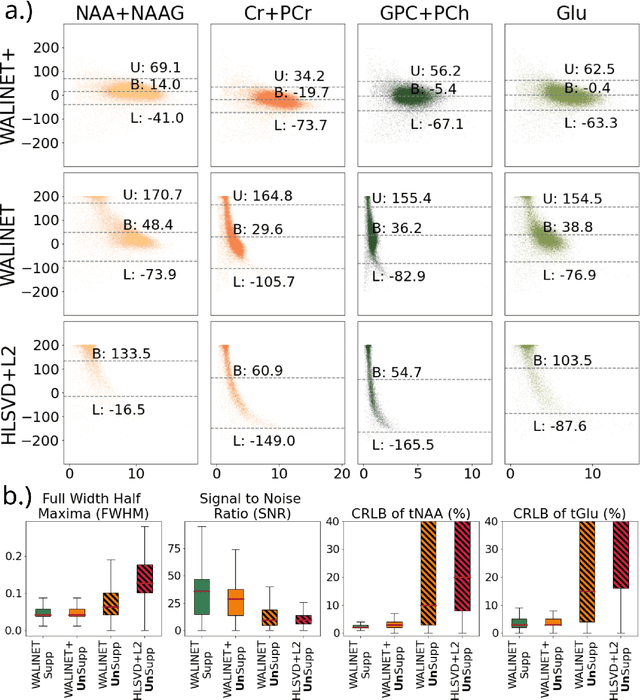

Abstract: Purpose: Magnetic Resonance Spectroscopic Imaging (MRSI) maps endogenous brain metabolism while suppressing the overwhelming water signal. Water-unsuppressed MRSI (wu-MRSI) allows simultaneous imaging of water and metabolites, but large water sidebands complicate metabolic fitting. We developed an end-to-end deep-learning pipeline to overcome these challenges at ultra-high field. Methods: Fast high-resolution wu-MRSI was acquired at 7T with non-Cartesian ECCENTRIC sampling and ultra-short echo time. A water and lipid removal network (WALINET+) was developed to remove lipids, water signal, and sidebands. MRSI reconstruction was performed by DeepER and a physics-informed network for metabolite fitting. Water signal was used for absolute metabolite quantification, quantitative susceptibility mapping (QSM), and myelin water fraction imaging (MWF). Results: WALINET+ provided the lowest NRMSE (< 2%) in simulations and, in vivo, the smallest bias (< 20%) and limits of agreement (±63%) between wu-MRSI and water-suppressed MRSI (ws-MRSI) scans. Several metabolites such as creatine and glutamate showed higher SNR in wu-MRSI. QSM and MWF obtained from wu-MRSI and GRE showed good agreement with 0 ppm/5.5% bias and ±0.05 ppm/±12.75% limits of agreement. Conclusion: High-quality metabolic, QSM, and MWF mapping of the human brain can be obtained simultaneously by ECCENTRIC wu-MRSI at 7T with 2 mm isotropic resolution in 12 min. WALINET+ robustly removes water sidebands while preserving metabolite signal, eliminating the need for water suppression and separate water acquisitions.
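The bias and limits of agreement quoted in the abstract above are the kind of statistics a Bland-Altman analysis produces. The sketch below shows one common way to compute them, assuming percent differences relative to the pair mean and limits at bias ± 1.96 standard deviations; the abstract does not state which convention the authors used. The function name `bland_altman_percent` and the sample values are hypothetical.

```python
from statistics import mean, stdev


def bland_altman_percent(a, b):
    """Return (bias, lower, upper) of percent differences between paired values.

    Assumed convention: each difference is expressed relative to the mean of
    the pair, and the limits of agreement are bias +/- 1.96 * SD.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally sized samples with at least 2 pairs")
    # Percent difference of each pair relative to the pair mean
    # (undefined if a pair sums to zero).
    diffs = [100.0 * (x - y) / ((x + y) / 2.0) for x, y in zip(a, b)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)
    return bias, bias - half_width, bias + half_width


# Made-up paired measurements from two hypothetical scans of the same regions.
scan_a = [10.2, 8.1, 12.5, 9.7]
scan_b = [10.0, 8.4, 12.0, 9.9]
bias, lower, upper = bland_altman_percent(scan_a, scan_b)
print(f"bias={bias:.2f}%  limits of agreement=[{lower:.2f}%, {upper:.2f}%]")
```

A bias near zero with narrow limits indicates that the two methods can be used interchangeably for that quantity.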

MIMOSA: Multi-parametric Imaging using Multiple-echoes with Optimized Simultaneous Acquisition for highly-efficient quantitative MRI

Aug 13, 2025

Abstract: Purpose: To develop a new sequence, MIMOSA, for highly efficient T1, T2, T2*, proton density (PD), and source separation quantitative susceptibility mapping (QSM). Methods: MIMOSA was developed based on 3D-quantification using an interleaved Look-Locker acquisition sequence with T2 preparation pulse (3D-QALAS) by combining 3D turbo Fast Low Angle Shot (FLASH) and multi-echo gradient echo acquisition modules with a spiral-like Cartesian trajectory to facilitate highly efficient acquisition. Simulations were performed to optimize the sequence. A multi-contrast/-slice zero-shot self-supervised learning algorithm was employed for reconstruction. The accuracy of quantitative mapping was assessed by comparing MIMOSA with 3D-QALAS and reference techniques in both ISMRM/NIST phantom and in-vivo experiments. MIMOSA's acceleration capability was assessed at R = 3.3, 6.5, and 11.8 in in-vivo experiments, with repeatability assessed through scan-rescan studies. Beyond the 3T experiments, mesoscale quantitative mapping was performed at 750 µm isotropic resolution at 7T. Results: Simulations demonstrated that MIMOSA achieved improved parameter estimation accuracy compared to 3D-QALAS. Phantom experiments indicated that MIMOSA exhibited better agreement with the reference techniques than 3D-QALAS. In-vivo experiments demonstrated that an acceleration factor of up to R = 11.8 can be achieved while preserving parameter estimation accuracy, with intra-class correlation coefficients of 0.998 (T1), 0.973 (T2), 0.947 (T2*), 0.992 (QSM), 0.987 (paramagnetic susceptibility), and 0.977 (diamagnetic susceptibility) in scan-rescan studies. Whole-brain T1, T2, T2*, PD, and source separation QSM maps were obtained with 1 mm isotropic resolution in 3 min at 3T and 750 µm isotropic resolution in 13 min at 7T. Conclusion: MIMOSA demonstrated potential for highly efficient multi-parametric mapping.

MolMole: Molecule Mining from Scientific Literature

May 08, 2025

Abstract: The extraction of molecular structures and reaction data from scientific documents is challenging due to their varied, unstructured chemical formats and complex document layouts. To address this, we introduce MolMole, a vision-based deep learning framework that unifies molecule detection, reaction diagram parsing, and optical chemical structure recognition (OCSR) into a single pipeline for automating the extraction of chemical data directly from page-level documents. Recognizing the lack of a standard page-level benchmark and evaluation metric, we also present a test set of 550 pages annotated with molecule bounding boxes, reaction labels, and MOLfiles, along with a novel evaluation metric. Experimental results demonstrate that MolMole outperforms existing toolkits on both our benchmark and public datasets. The benchmark test set will be publicly available, and the MolMole toolkit will be accessible soon through an interactive demo on the LG AI Research website. For commercial inquiries, please contact us at contact_ddu@lgresearch.ai.

Enhancing Free-hand 3D Photoacoustic and Ultrasound Reconstruction using Deep Learning

Feb 05, 2025

Abstract: This study introduces a motion-based learning network with a global-local self-attention module (MoGLo-Net) to enhance 3D reconstruction in handheld photoacoustic and ultrasound (PAUS) imaging. Standard PAUS imaging is often limited by a narrow field of view and the inability to effectively visualize complex 3D structures. The 3D freehand technique, which aligns sequential 2D images for 3D reconstruction, faces significant challenges in accurate motion estimation without relying on external positional sensors. MoGLo-Net addresses these limitations through an innovative adaptation of the self-attention mechanism, which effectively exploits critical regions, such as fully developed speckle areas or highly echogenic tissue areas within successive ultrasound images, to accurately estimate motion parameters. This facilitates the extraction of intricate features from individual frames. Additionally, we designed a patch-wise correlation operation to generate a correlation volume that is highly correlated with the scanning motion. A custom loss function was also developed to ensure robust learning with minimized bias, leveraging the characteristics of the motion parameters. Experimental evaluations demonstrated that MoGLo-Net surpasses current state-of-the-art methods in both quantitative and qualitative performance metrics. Furthermore, we expanded the application of 3D reconstruction technology beyond simple B-mode ultrasound volumes to incorporate Doppler ultrasound and photoacoustic imaging, enabling 3D visualization of vasculature. The source code for this study is publicly available at: https://github.com/guhong3648/US3D

Vessel segmentation for $\chi$-separation

Feb 03, 2025

Abstract: $\chi$-separation is an advanced quantitative susceptibility mapping (QSM) method that is designed to generate paramagnetic ($\chi_{para}$) and diamagnetic ($|\chi_{dia}|$) susceptibility maps, reflecting the distribution of iron and myelin in the brain. However, vessels produce artifacts in these maps, interfering with the accurate quantification of iron and myelin in applications. To address this challenge, a new vessel segmentation method for $\chi$-separation is developed. The method comprises three steps: 1) Seed generation from $\textit{R}_2^*$ and the product of $\chi_{para}$ and $|\chi_{dia}|$ maps; 2) Region growing, guided by vessel geometry, creating a vessel mask; 3) Refinement of the vessel mask by excluding non-vessel structures. The performance of the method was compared to conventional vessel segmentation methods both qualitatively and quantitatively. To demonstrate the utility of the method, it was tested in two applications: quantitative evaluation of a neural network-based $\chi$-separation reconstruction method ($\chi$-sepnet-$\textit{R}_2^*$) and population-averaged region of interest (ROI) analysis. The proposed method demonstrates superior performance to the conventional vessel segmentation methods, effectively excluding the non-vessel structures and achieving the highest Dice score. For the applications, applying vessel masks yields notable improvements in the quantitative evaluation of $\chi$-sepnet-$\textit{R}_2^*$ and statistically significant differences in population-averaged ROI analysis. These applications suggest that excluding vessels when analyzing the $\chi$-separation maps provides more accurate evaluations. The proposed method has the potential to facilitate various applications, offering reliable analysis through the generation of a high-quality vessel mask.
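The Dice score mentioned in the abstract above measures the overlap between a predicted mask and a reference mask. A minimal sketch follows, assuming the masks are flattened to equal-length binary sequences; the function name `dice_coefficient` and the toy masks are hypothetical and are not taken from the paper.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice = 2 * |A and B| / (|A| + |B|) for two flat binary masks."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labeled as vessel in both masks.
    overlap = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Assumed convention: two empty masks agree perfectly.
    return 1.0 if total == 0 else 2.0 * overlap / total


# Toy example: six voxels, three labeled in each mask, two in common.
predicted = [1, 1, 0, 0, 1, 0]
reference = [1, 0, 0, 0, 1, 1]
print(dice_coefficient(predicted, reference))  # 2 * 2 / (3 + 3)
```

The score ranges from 0 (no overlap) to 1 (identical masks), so a method that wrongly includes non-vessel structures is penalized through the larger denominator.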

$\chi$-sepnet: Deep neural network for magnetic susceptibility source separation

Sep 24, 2024

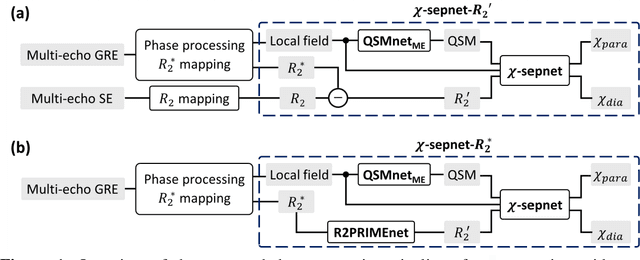

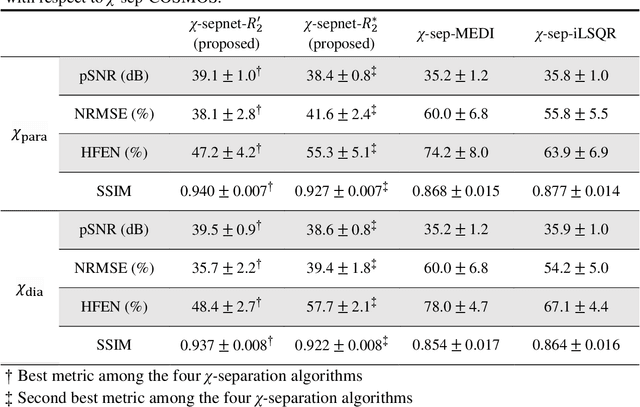

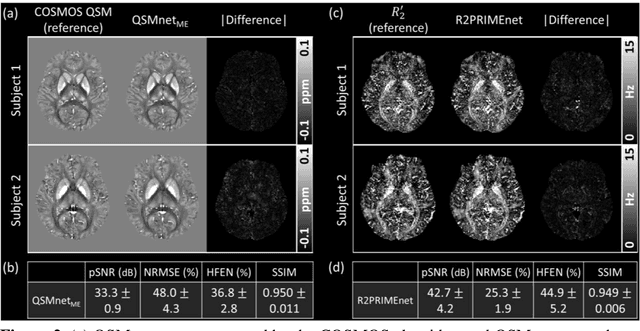

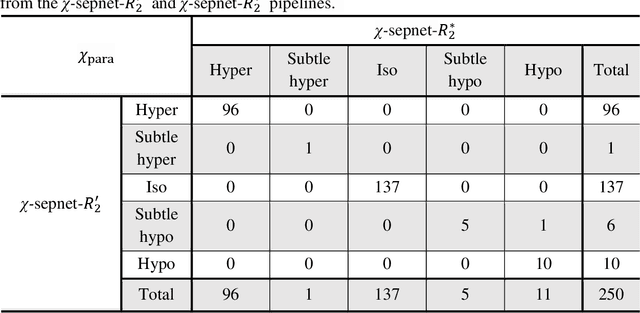

Abstract: Magnetic susceptibility source separation ($\chi$-separation), an advanced quantitative susceptibility mapping (QSM) method, enables the separate estimation of para- and diamagnetic susceptibility source distributions in the brain. The method utilizes reversible transverse relaxation (R2' = R2* - R2) to complement frequency shift information for estimating susceptibility source concentrations, requiring time-consuming data acquisition for R2 in addition to R2*. To address this challenge, we develop a new deep learning network, $\chi$-sepnet, and propose two deep learning-based susceptibility source separation pipelines: $\chi$-sepnet-R2' for inputs with multi-echo GRE and multi-echo spin-echo, and $\chi$-sepnet-R2* for input with multi-echo GRE only. $\chi$-sepnet is trained using multiple head orientation data that provide streaking artifact-free labels, generating high-quality $\chi$-separation maps. The evaluation of the pipelines encompasses both qualitative and quantitative assessments in healthy subjects, and visual inspection of lesion characteristics in multiple sclerosis patients. The susceptibility source-separated maps of the proposed pipelines delineate detailed brain structures with substantially reduced artifacts compared to those from conventional regularization-based reconstruction methods. In quantitative analysis, $\chi$-sepnet-R2' achieves the best outcomes followed by $\chi$-sepnet-R2*, outperforming the conventional methods. When the lesions of multiple sclerosis patients are assessed, both pipelines report identical lesion characteristics in most lesions ($\chi_{para}$: 99.6% and $\chi_{dia}$: 98.4% out of 250 lesions). The $\chi$-sepnet-R2* pipeline, which only requires multi-echo GRE data, has demonstrated its potential to offer broad clinical and scientific applications, although further evaluations for various diseases and pathological conditions are necessary.
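The relation R2' = R2* - R2 stated in the abstract above can be illustrated with a voxel-wise subtraction. The sketch below is only an illustration: the function name `r2_prime`, the flat-list representation of the maps, and the clipping of negative values to zero (noise can make a measured R2 exceed R2*) are assumptions, not details taken from the paper.

```python
def r2_prime(r2_star, r2, clip_negative=True):
    """Voxel-wise R2' = R2* - R2 for two equal-length lists of rates (1/s)."""
    if len(r2_star) != len(r2):
        raise ValueError("R2* and R2 maps must have the same number of voxels")
    values = [rs - r for rs, r in zip(r2_star, r2)]
    if clip_negative:
        # Assumption: negative R2' is treated as noise and set to zero.
        values = [max(v, 0.0) for v in values]
    return values


# Made-up rates for three voxels; the last one has R2 > R2* due to "noise".
r2_star_map = [30.0, 20.0, 12.0]
r2_map = [12.0, 15.0, 14.0]
print(r2_prime(r2_star_map, r2_map))  # [18.0, 5.0, 0.0]
```

Because this computation needs both an R2* map (multi-echo GRE) and an R2 map (multi-echo spin-echo), a pipeline that works from R2* alone avoids the second acquisition.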

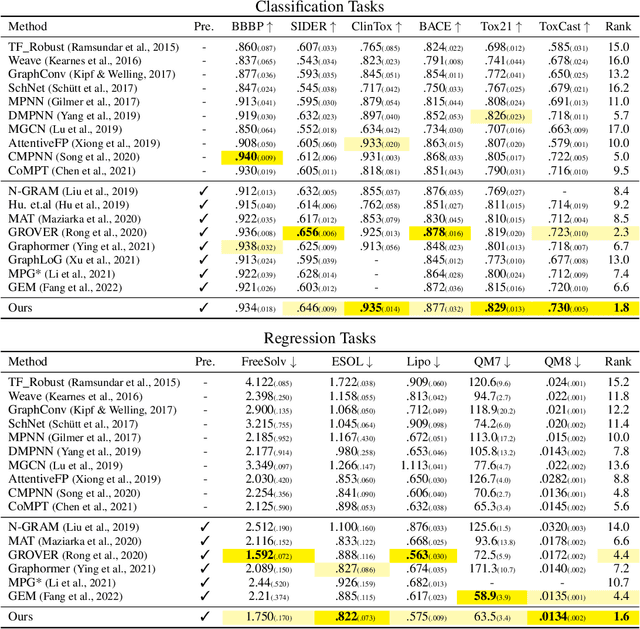

Substructure-Atom Cross Attention for Molecular Representation Learning

Oct 15, 2022

Abstract: Designing a neural network architecture for molecular representation is crucial for AI-driven drug discovery and molecule design. In this work, we propose a new framework for molecular representation learning. Our contribution is threefold: (a) demonstrating the usefulness of incorporating substructures into node-wise features from molecules, (b) designing two branch networks consisting of a transformer and a graph neural network so that the networks are fused with asymmetric attention, and (c) not requiring heuristic features and computationally expensive information from molecules. Using 1.8 million molecules collected from the ChEMBL and PubChem databases, we pretrain our network to learn a general representation of molecules with minimal supervision. The experimental results show that our pretrained network achieves competitive performance on 11 downstream tasks for molecular property prediction.

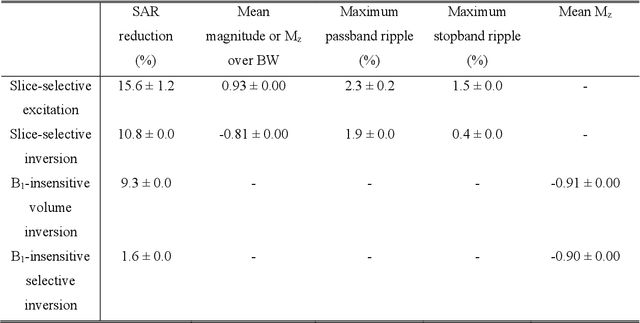

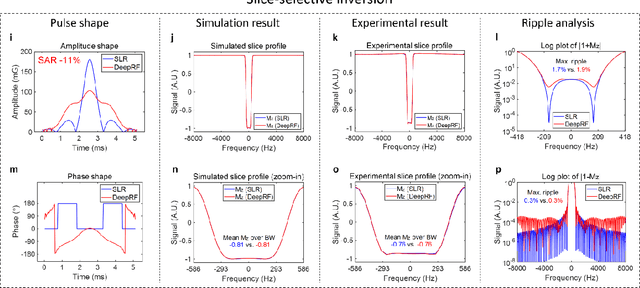

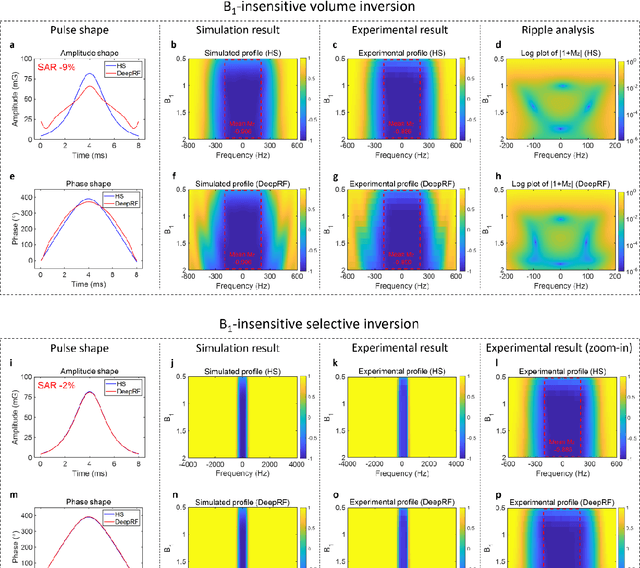

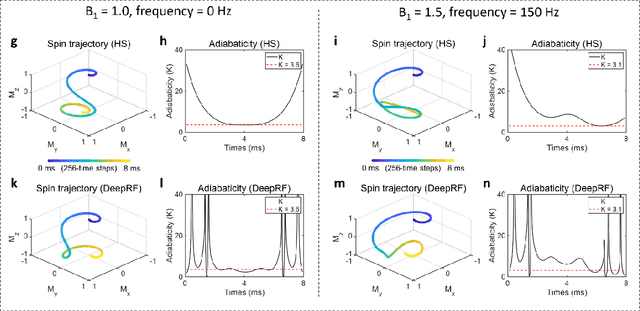

DeepRF: Deep Reinforcement Learning Designed RadioFrequency Waveform in MRI

May 07, 2021

Abstract: A carefully engineered radiofrequency (RF) pulse plays a key role in a number of systems such as mobile phones, radar, and magnetic resonance imaging (MRI). The design of an RF waveform, however, is often posed as an inverse problem that has no general solution. As a result, various design methods, each with a specific purpose, have been developed based on the intuition of human experts. In this work, we propose an artificial intelligence-powered RF pulse design framework, DeepRF, which utilizes the self-learning characteristics of deep reinforcement learning (DRL) to generate a novel RF pulse beyond human intuition. Additionally, the method can design various types of RF pulses via customized reward functions. The algorithm of DeepRF consists of two modules: the RF generation module, which utilizes DRL to explore new RF pulses, and the RF refinement module, which optimizes the seed RF pulses from the generation module via gradient ascent. The effectiveness of DeepRF is demonstrated using four exemplary RF pulses that are commonly used in MRI: a slice-selective excitation pulse, a slice-selective inversion pulse, a B1-insensitive volume inversion pulse, and a B1-insensitive selective inversion pulse. The results show that the DeepRF-designed pulses successfully satisfy the design criteria while improving specific absorption rates when compared to those of the conventional RF pulses. Further analyses suggest that the DeepRF-designed pulses utilize new mechanisms of magnetization manipulation that are difficult to explain with conventional theory, suggesting the potential of DeepRF in discovering unseen design dimensions beyond human intuition. This work may lay the foundation for an emerging field of AI-driven RF waveform design.
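The refinement step described in the abstract above, optimizing a seed pulse by gradient ascent on a reward, can be sketched generically. The example below is a toy illustration only: the quadratic `toy_reward`, the finite-difference gradient, the step size, and all names are assumptions, and it does not reproduce DeepRF's actual reward function or optimizer.

```python
def numerical_gradient(reward, x, eps=1e-6):
    """Central-difference estimate of d(reward)/dx for each waveform sample."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((reward(up) - reward(down)) / (2.0 * eps))
    return grad


def refine(reward, seed, learning_rate=0.1, steps=200):
    """Gradient ascent: repeatedly move the waveform uphill on the reward."""
    x = list(seed)
    for _ in range(steps):
        grad = numerical_gradient(reward, x)
        x = [xi + learning_rate * gi for xi, gi in zip(x, grad)]
    return x


# Hypothetical target shape standing in for "a pulse that meets the criteria".
target = [0.0, 0.5, 1.0, 0.5, 0.0]


def toy_reward(x):
    # Higher is better; the maximum (0) is reached when x equals the target.
    return -sum((xi - ti) ** 2 for xi, ti in zip(x, target))


seed = [0.2, 0.2, 0.2, 0.2, 0.2]  # stand-in for a seed from a generator
refined = refine(toy_reward, seed)
print([round(v, 3) for v in refined])
```

Changing only the reward function changes what the refined waveform is optimized for, which mirrors the abstract's point that customized rewards allow different pulse types to be designed.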
