Minjun Kim

Department of Electrical and Computer Engineering, Seoul National University, Seoul, Republic of Korea

ELO: Efficient Layer-Specific Optimization for Continual Pretraining of Multilingual LLMs

Jan 07, 2026

Abstract:We propose an efficient layer-specific optimization (ELO) method designed to enhance continual pretraining (CP) for specific languages in multilingual large language models (MLLMs). This approach addresses the common challenges of high computational cost and degradation of source language performance associated with traditional CP. The ELO method consists of two main stages: (1) ELO Pretraining, where a small subset of specific layers, identified in our experiments as the critically important first and last layers, is detached from the original MLLM and trained with the target language. This significantly reduces not only the number of trainable parameters but also the total parameters computed during the forward pass, minimizing GPU memory consumption and accelerating the training process. (2) Layer Alignment, where the newly trained layers are reintegrated into the original model, followed by a brief full fine-tuning step on a small dataset to align the parameters. Experimental results demonstrate that the ELO method achieves a training speedup of up to 6.46 times compared to existing methods, while improving target language performance by up to 6.2% on qualitative benchmarks and effectively preserving source language (English) capabilities.

LampQ: Towards Accurate Layer-wise Mixed Precision Quantization for Vision Transformers

Nov 14, 2025

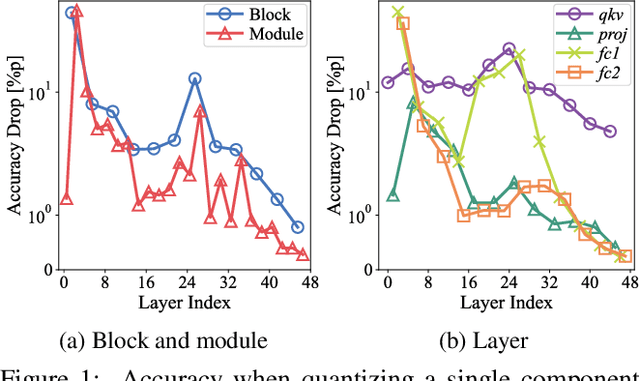

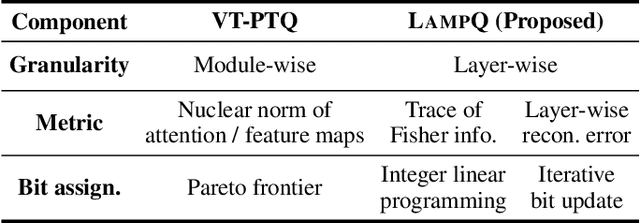

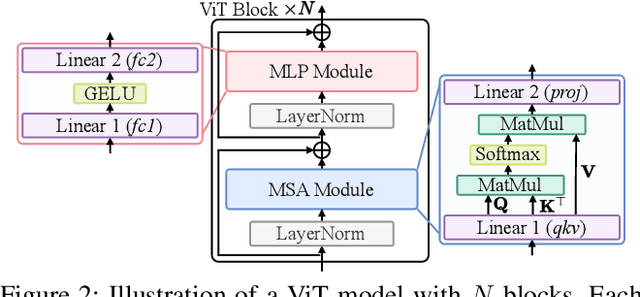

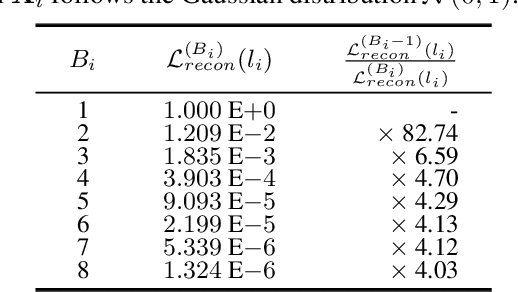

Abstract:How can we accurately quantize a pre-trained Vision Transformer model? Quantization algorithms compress Vision Transformers (ViTs) into low-bit formats, reducing memory and computation demands with minimal accuracy degradation. However, existing methods rely on uniform precision, ignoring the diverse sensitivity of ViT components to quantization. Metric-based Mixed Precision Quantization (MPQ) is a promising alternative, but previous MPQ methods for ViTs suffer from three major limitations: 1) coarse granularity, 2) mismatch in metric scale across component types, and 3) quantization-unaware bit allocation. In this paper, we propose LampQ (Layer-wise Mixed Precision Quantization for Vision Transformers), an accurate metric-based MPQ method for ViTs to overcome these limitations. LampQ performs layer-wise quantization to achieve both fine-grained control and efficient acceleration, incorporating a type-aware Fisher-based metric to measure sensitivity. Then, LampQ assigns bit-widths optimally through integer linear programming and further updates them iteratively. Extensive experiments show that LampQ provides the state-of-the-art performance in quantizing ViTs pre-trained on various tasks such as image classification, object detection, and zero-shot quantization.

KORMo: Korean Open Reasoning Model for Everyone

Oct 10, 2025

Abstract:This work presents the first large-scale investigation into constructing a fully open bilingual large language model (LLM) for a non-English language, specifically Korean, trained predominantly on synthetic data. We introduce KORMo-10B, a 10.8B-parameter model trained from scratch on a Korean-English corpus in which 68.74% of the Korean portion is synthetic. Through systematic experimentation, we demonstrate that synthetic data, when carefully curated with balanced linguistic coverage and diverse instruction styles, does not cause instability or degradation during large-scale pretraining. Furthermore, the model achieves performance comparable to that of contemporary open-weight multilingual baselines across a wide range of reasoning, knowledge, and instruction-following benchmarks. Our experiments reveal two key findings: (1) synthetic data can reliably sustain long-horizon pretraining without model collapse, and (2) bilingual instruction tuning enables near-native reasoning and discourse coherence in Korean. By fully releasing all components including data, code, training recipes, and logs, this work establishes a transparent framework for developing synthetic data-driven fully open models (FOMs) in low-resource settings and sets a reproducible precedent for future multilingual LLM research.

Unifying Uniform and Binary-coding Quantization for Accurate Compression of Large Language Models

Jun 04, 2025

Abstract:How can we quantize large language models while preserving accuracy? Quantization is essential for deploying large language models (LLMs) efficiently. Binary-coding quantization (BCQ) and uniform quantization (UQ) are promising quantization schemes that have strong expressiveness and optimizability, respectively. However, neither scheme leverages both advantages. In this paper, we propose UniQuanF (Unified Quantization with Flexible Mapping), an accurate quantization method for LLMs. UniQuanF harnesses both strong expressiveness and optimizability by unifying the flexible mapping technique in UQ and non-uniform quantization levels of BCQ. We propose unified initialization, and local and periodic mapping techniques to optimize the parameters in UniQuanF precisely. After optimization, our unification theorem removes computational and memory overhead, allowing us to utilize the superior accuracy of UniQuanF without extra deployment costs induced by the unification. Experimental results demonstrate that UniQuanF outperforms existing UQ and BCQ methods, achieving up to 4.60% higher accuracy on the GSM8K benchmark.

Context Robust Knowledge Editing for Language Models

May 29, 2025

Abstract:Knowledge editing (KE) methods offer an efficient way to modify knowledge in large language models. Current KE evaluations typically assess editing success by considering only the edited knowledge without any preceding contexts. In real-world applications, however, preceding contexts often trigger the retrieval of the original knowledge and undermine the intended edit. To address this issue, we develop CHED -- a benchmark designed to evaluate the context robustness of KE methods. Evaluations on CHED show that existing KE methods often fail when preceding contexts are present. To mitigate this shortcoming, we introduce CoRE, a KE method designed to strengthen context robustness by minimizing context-sensitive variance in hidden states of the model for edited knowledge. This method not only improves the editing success rate in situations where a preceding context is present but also preserves the overall capabilities of the model. We provide an in-depth analysis of the differing impacts of preceding contexts when introduced as user utterances versus assistant responses, and we dissect attention-score patterns to assess how specific tokens influence editing success.

Zero-shot Quantization: A Comprehensive Survey

May 14, 2025

Abstract:Network quantization has proven to be a powerful approach to reduce the memory and computational demands of deep learning models for deployment on resource-constrained devices. However, traditional quantization methods often rely on access to training data, which is impractical in many real-world scenarios due to privacy, security, or regulatory constraints. Zero-shot Quantization (ZSQ) emerges as a promising solution, achieving quantization without requiring any real data. In this paper, we provide a comprehensive overview of ZSQ methods and their recent advancements. First, we provide a formal definition of the ZSQ problem and highlight the key challenges. Then, we categorize the existing ZSQ methods into classes based on data generation strategies, and analyze their motivations, core ideas, and key takeaways. Lastly, we suggest future research directions to address the remaining limitations and advance the field of ZSQ. To the best of our knowledge, this paper is the first in-depth survey on ZSQ.

The Iterative Chainlet Partitioning Algorithm for the Traveling Salesman Problem with Drone and Neural Acceleration

Apr 21, 2025

Abstract:This study introduces the Iterative Chainlet Partitioning (ICP) algorithm and its neural acceleration for solving the Traveling Salesman Problem with Drone (TSP-D). The proposed ICP algorithm decomposes a TSP-D solution into smaller segments called chainlets, each optimized individually by a dynamic programming subroutine. The chainlet with the highest improvement is updated and the procedure is repeated until no further improvement is possible. The number of subroutine calls is bounded linearly in problem size for the first iteration and remains constant in subsequent iterations, ensuring algorithmic scalability. Empirical results show that ICP outperforms existing algorithms in both solution quality and computational time. Tested over 1,059 benchmark instances, ICP yields an average improvement of 2.75% in solution quality over the previous state-of-the-art algorithm while reducing computational time by 79.8%. The procedure is deterministic, ensuring reliability without requiring multiple runs. The subroutine is the computational bottleneck in the already efficient ICP algorithm. To reduce the necessity of subroutine calls, we integrate a graph neural network (GNN) to predict incremental improvements. We demonstrate that the resulting Neuro ICP (NICP) achieves substantial acceleration while maintaining solution quality. Compared to ICP, NICP reduces the total computational time by 49.7%, while the objective function value increase is limited to 0.12%. The framework's adaptability to various operational constraints makes it a valuable foundation for developing efficient algorithms for truck-drone synchronized routing problems.

AugWard: Augmentation-Aware Representation Learning for Accurate Graph Classification

Mar 27, 2025

Abstract:How can we accurately classify graphs? Graph classification is a pivotal task in data mining with applications in social network analysis, web analysis, drug discovery, molecular property prediction, etc. Graph neural networks have achieved state-of-the-art performance in graph classification, but they consistently struggle with overfitting. To mitigate overfitting, researchers have introduced various representation learning methods utilizing graph augmentation. However, existing methods rely on simplistic use of graph augmentation, which loses augmentation-induced differences and limits the expressiveness of representations. In this paper, we propose AugWard (Augmentation-Aware Training with Graph Distance and Consistency Regularization), a novel graph representation learning framework that carefully considers the diversity introduced by graph augmentation. AugWard applies augmentation-aware training to predict the graph distance between the augmented graph and its original one, aligning the representation difference directly with graph distance at both feature and structure levels. Furthermore, AugWard employs consistency regularization to encourage the classifier to handle richer representations. Experimental results show that AugWard achieves state-of-the-art performance in supervised and semi-supervised graph classification and in transfer learning.

DTA: Dual Temporal-channel-wise Attention for Spiking Neural Networks

Mar 13, 2025

Abstract:Spiking Neural Networks (SNNs) present a more energy-efficient alternative to Artificial Neural Networks (ANNs) by harnessing spatio-temporal dynamics and event-driven spikes. Effective utilization of temporal information is crucial for SNNs, leading to the exploration of attention mechanisms to enhance this capability. Conventional attention operations either apply an identical operation or employ non-identical operations across target dimensions. We identify that these approaches provide distinct perspectives on temporal information. To leverage the strengths of both operations, we propose a novel Dual Temporal-channel-wise Attention (DTA) mechanism that integrates both identical and non-identical attention strategies. To the best of our knowledge, this is the first attempt to concentrate on both the correlation and dependency of temporal-channel using both identical and non-identical attention operations. Experimental results demonstrate that the DTA mechanism achieves state-of-the-art performance on both static datasets (CIFAR10, CIFAR100, ImageNet-1k) and a dynamic dataset (CIFAR10-DVS), elevating spike representation and capturing complex temporal-channel relationships. We open-source our code: https://github.com/MnJnKIM/DTA-SNN.

Vessel segmentation for $\chi$-separation

Feb 03, 2025

Abstract:$\chi$-separation is an advanced quantitative susceptibility mapping (QSM) method that is designed to generate paramagnetic ($\chi_{para}$) and diamagnetic ($|\chi_{dia}|$) susceptibility maps, reflecting the distribution of iron and myelin in the brain. However, vessels have shown artifacts, interfering with the accurate quantification of iron and myelin in applications. To address this challenge, a new vessel segmentation method for $\chi$-separation is developed. The method comprises three steps: 1) Seed generation from $\textit{R}_2^*$ and the product of $\chi_{para}$ and $|\chi_{dia}|$ maps; 2) Region growing, guided by vessel geometry, creating a vessel mask; 3) Refinement of the vessel mask by excluding non-vessel structures. The performance of the method was compared to conventional vessel segmentation methods both qualitatively and quantitatively. To demonstrate the utility of the method, it was tested in two applications: quantitative evaluation of a neural network-based $\chi$-separation reconstruction method ($\chi$-sepnet-$\textit{R}_2^*$) and population-averaged region of interest (ROI) analysis. The proposed method demonstrates superior performance to the conventional vessel segmentation methods, effectively excluding the non-vessel structures and achieving the highest Dice score coefficient. For the applications, applying vessel masks yields notable improvements for the quantitative evaluation of $\chi$-sepnet-$\textit{R}_2^*$ and statistically significant differences in population-averaged ROI analysis. These applications suggest that excluding vessels when analyzing the $\chi$-separation maps provides more accurate evaluations. The proposed method has the potential to facilitate various applications, offering reliable analysis through the generation of a high-quality vessel mask.
