Jiazhen Pan

Reconstruction-free segmentation from undersampled k-space using transformers

Nov 05, 2025

Abstract: Motivation: High acceleration factors place a limit on MRI image reconstruction. This limit extends to segmentation models when reconstruction and segmentation are treated as independent, sequential processes. Goal: Our goal is to produce segmentations directly from sparse k-space measurements without the need for intermediate image reconstruction. Approach: We employ a transformer architecture to encode global k-space information into latent features. The produced latent vectors condition queried coordinates during decoding to generate segmentation class probabilities. Results: The model produces better segmentations across high acceleration factors than image-based segmentation baselines. Impact: Cardiac segmentation directly from undersampled k-space samples circumvents the need for an intermediate image reconstruction step. This opens the possibility of assessing myocardial structure and function at higher acceleration factors than methods that rely on images as input.

AortaDiff: A Unified Multitask Diffusion Framework For Contrast-Free AAA Imaging

Oct 01, 2025

Abstract: While contrast-enhanced CT (CECT) is standard for assessing abdominal aortic aneurysms (AAA), the required iodinated contrast agents pose significant risks, including nephrotoxicity, patient allergies, and environmental harm. To reduce contrast agent use, recent deep learning methods have focused on generating synthetic CECT from non-contrast CT (NCCT) scans. However, most adopt a multi-stage pipeline that first generates images and then performs segmentation, which leads to error accumulation and fails to leverage shared semantic and anatomical structures. To address this, we propose a unified deep learning framework that generates synthetic CECT images from NCCT scans while simultaneously segmenting the aortic lumen and thrombus. Our approach integrates conditional diffusion models (CDM) with multi-task learning, enabling end-to-end joint optimization of image synthesis and anatomical segmentation. Unlike previous multitask diffusion models, our approach requires no initial predictions (e.g., a coarse segmentation mask), shares both encoder and decoder parameters across tasks, and employs a semi-supervised training strategy to learn from scans with missing segmentation labels, a common constraint in real-world clinical data. We evaluated our method on a cohort of 264 patients, where it consistently outperformed state-of-the-art single-task and multi-stage models. For image synthesis, our model achieved a PSNR of 25.61 dB, compared to 23.80 dB from a single-task CDM. For anatomical segmentation, it improved the lumen Dice score to 0.89 from 0.87 and the challenging thrombus Dice score to 0.53 from 0.48 (nnU-Net). These segmentation enhancements led to more accurate clinical measurements, reducing the lumen diameter MAE to 4.19 mm from 5.78 mm and the thrombus area error to 33.85% from 41.45% when compared to nnU-Net. Code is available at https://github.com/yuxuanou623/AortaDiff.git.
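
The abstract reports results as Dice scores and PSNR. As a reminder of what those numbers measure, here is a minimal NumPy sketch of both metrics under their standard definitions. The function names, the `eps` smoothing term, and the `data_range` parameter are illustrative assumptions and are not taken from the AortaDiff code.

```python
import numpy as np


def dice_score(pred, target, eps=1e-8):
    """Dice overlap between two binary masks: 2|A ∩ B| / (|A| + |B|)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    # eps (an assumption of this sketch) avoids division by zero for empty masks.
    return 2.0 * intersection / (pred.sum() + target.sum() + eps)


def psnr(reference, estimate, data_range):
    """Peak signal-to-noise ratio in dB for images with the given intensity range."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)
```

Because PSNR is logarithmic in the mean squared error, an improvement of about 1.8 dB (as between 23.80 dB and 25.61 dB) corresponds to a reduction of the mean squared error by roughly a third.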

Beyond Distillation: Pushing the Limits of Medical LLM Reasoning with Minimalist Rule-Based RL

May 23, 2025

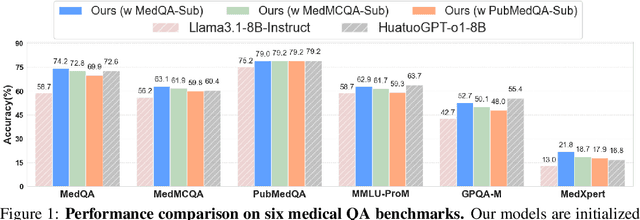

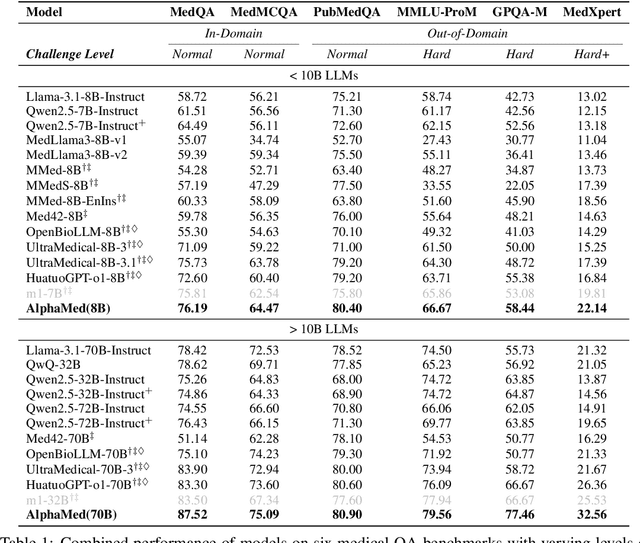

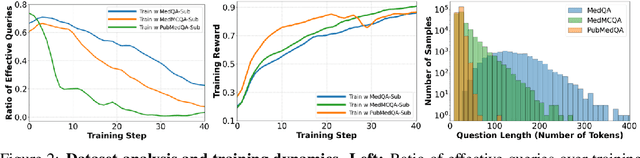

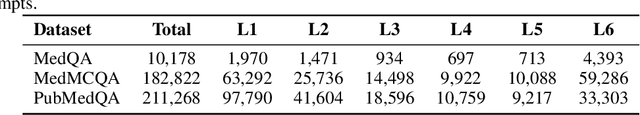

Abstract: Improving performance on complex tasks and enabling interpretable decision making in large language models (LLMs), especially for clinical applications, requires effective reasoning. Yet this remains challenging without supervised fine-tuning (SFT) on costly chain-of-thought (CoT) data distilled from closed-source models (e.g., GPT-4o). In this work, we present AlphaMed, the first medical LLM to show that reasoning capability can emerge purely through reinforcement learning (RL), using minimalist rule-based rewards on public multiple-choice QA datasets, without relying on SFT or distilled CoT data. AlphaMed achieves state-of-the-art results on six medical QA benchmarks, outperforming models trained with conventional SFT+RL pipelines. On challenging benchmarks (e.g., MedXpert), AlphaMed even surpasses larger or closed-source models such as DeepSeek-V3-671B and Claude-3.5-Sonnet. To understand the factors behind this success, we conduct a comprehensive data-centric analysis guided by three questions: (i) Can minimalist rule-based RL incentivize reasoning without distilled CoT supervision? (ii) How do dataset quantity and diversity impact reasoning? (iii) How does question difficulty shape the emergence and generalization of reasoning? Our findings show that dataset informativeness is a key driver of reasoning performance, and that minimalist RL on informative, multiple-choice QA data is effective at inducing reasoning without CoT supervision. We also observe divergent trends across benchmarks, underscoring limitations in current evaluation and the need for more challenging, reasoning-oriented medical QA benchmarks.
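
To make "minimalist rule-based rewards on multiple-choice QA" concrete, the following is a small sketch of what such a reward could look like: the completion earns 1.0 only if its final tagged answer letter matches the gold choice. The `<answer>...</answer>` tag format, the function name, and the binary 0/1 scale are assumptions made for illustration; the abstract does not specify the reward used by AlphaMed.

```python
import re

# Hypothetical output convention: the model wraps its final choice in <answer> tags.
ANSWER_PATTERN = re.compile(r"<answer>\s*([A-Z])\s*</answer>")


def rule_based_reward(completion: str, gold_choice: str) -> float:
    """Return 1.0 if the last tagged answer letter equals the gold choice, else 0.0."""
    matches = ANSWER_PATTERN.findall(completion)
    if not matches:
        return 0.0  # no parsable answer, so no reward
    return 1.0 if matches[-1] == gold_choice.strip().upper() else 0.0
```

A reward of this kind needs only the gold answer key, which is why it can be computed on public multiple-choice datasets without any chain-of-thought annotations.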

Towards Cardiac MRI Foundation Models: Comprehensive Visual-Tabular Representations for Whole-Heart Assessment and Beyond

Apr 18, 2025

Abstract: Cardiac magnetic resonance imaging (CMR) is the gold standard for non-invasive cardiac assessment, offering rich spatio-temporal views of the cardiac anatomy and physiology. Patient-level health factors, such as demographic, metabolic, and lifestyle factors, are known to substantially influence cardiovascular health and disease risk, yet remain uncaptured by CMR alone. To holistically understand cardiac health and to enable the best possible interpretation of an individual's disease risk, CMR and patient-level factors must be jointly exploited within an integrated framework. Recent multi-modal approaches have begun to bridge this gap, yet they often rely on limited spatio-temporal data and focus on isolated clinical tasks, thereby hindering the development of a comprehensive representation for cardiac health evaluation. To overcome these limitations, we introduce ViTa, a step toward foundation models that delivers a comprehensive representation of the heart and a precise interpretation of individual disease risk. Leveraging data from 42,000 UK Biobank participants, ViTa integrates 3D+T cine stacks from short-axis and long-axis views, enabling a complete capture of the cardiac cycle. These imaging data are then fused with detailed tabular patient-level factors, enabling context-aware insights. This multi-modal paradigm supports a wide spectrum of downstream tasks, including cardiac phenotype and physiological feature prediction, segmentation, and classification of cardiac and metabolic diseases within a single unified framework. By learning a shared latent representation that bridges rich imaging features and patient context, ViTa moves beyond traditional, task-specific models toward a universal, patient-specific understanding of cardiac health, highlighting its potential to advance clinical utility and scalability in cardiac analysis.

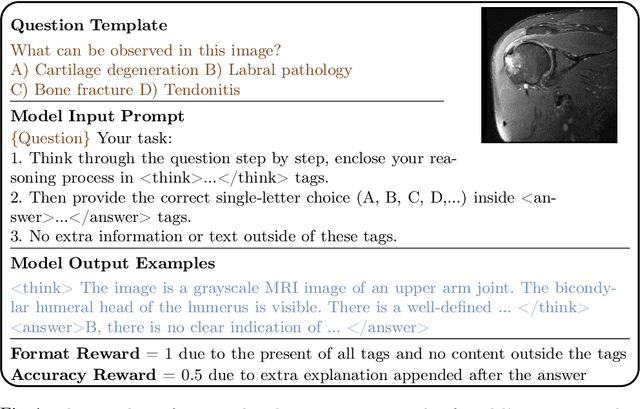

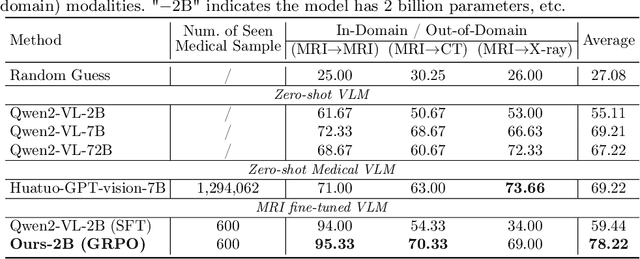

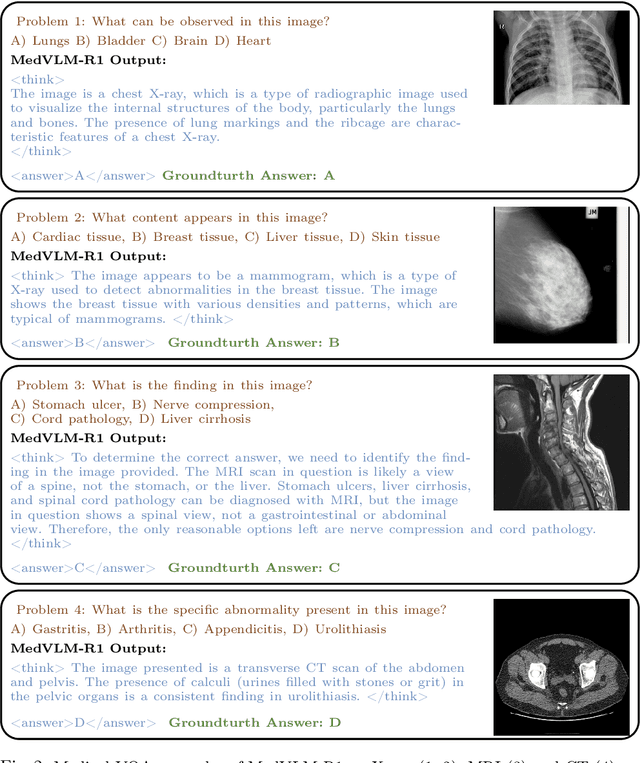

MedVLM-R1: Incentivizing Medical Reasoning Capability of Vision-Language Models (VLMs) via Reinforcement Learning

Feb 26, 2025

Abstract: Reasoning is a critical frontier for advancing medical image analysis, where transparency and trustworthiness play a central role in both clinician trust and regulatory approval. Although Medical Visual Language Models (VLMs) show promise for radiological tasks, most existing VLMs merely produce final answers without revealing the underlying reasoning. To address this gap, we introduce MedVLM-R1, a medical VLM that explicitly generates natural language reasoning to enhance transparency and trustworthiness. Instead of relying on supervised fine-tuning (SFT), which often suffers from overfitting to training distributions and fails to foster genuine reasoning, MedVLM-R1 employs a reinforcement learning framework that incentivizes the model to discover human-interpretable reasoning paths without using any reasoning references. Despite limited training data (600 visual question answering samples) and model parameters (2B), MedVLM-R1 boosts accuracy from 55.11% to 78.22% across MRI, CT, and X-ray benchmarks, outperforming larger models trained on over a million samples. It also demonstrates robust domain generalization under out-of-distribution tasks. By unifying medical image analysis with explicit reasoning, MedVLM-R1 marks a pivotal step toward trustworthy and interpretable AI in clinical practice.

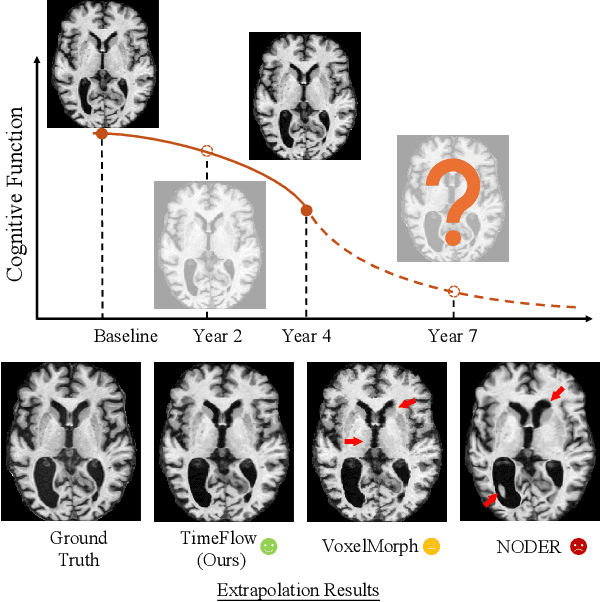

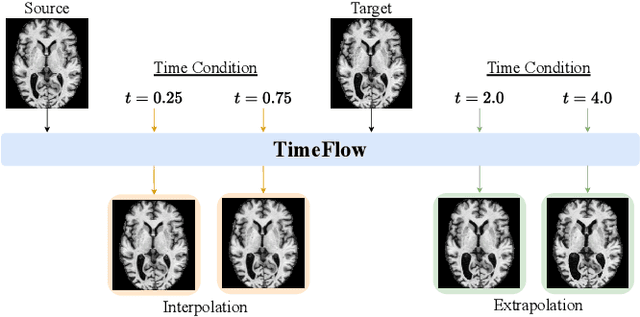

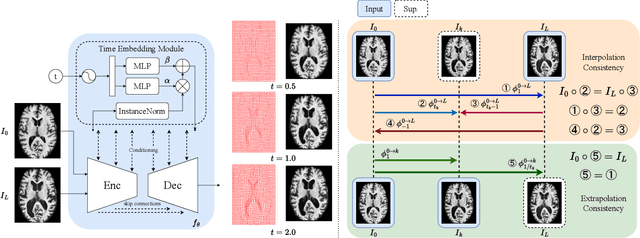

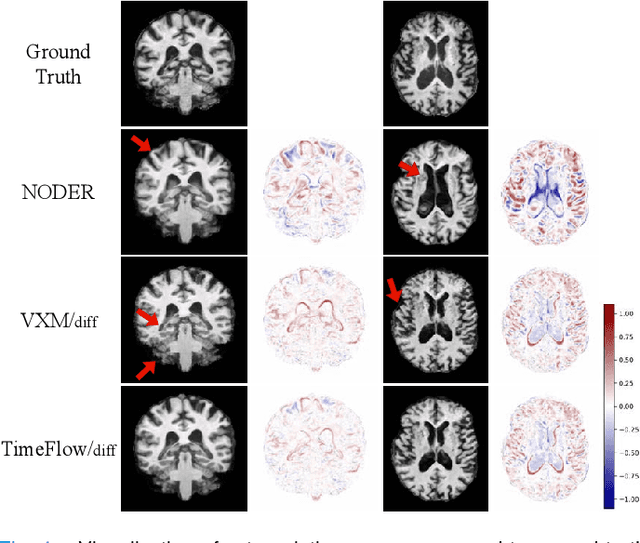

TimeFlow: Longitudinal Brain Image Registration and Aging Progression Analysis

Jan 15, 2025

Abstract: Predicting future brain states is crucial for understanding healthy aging and neurodegenerative diseases. Longitudinal brain MRI registration, a cornerstone for such analyses, has long been limited by its inability to forecast future developments, reliance on extensive, dense longitudinal data, and the need to balance registration accuracy with temporal smoothness. In this work, we present TimeFlow, a novel framework for longitudinal brain MRI registration that overcomes all these challenges. Leveraging a U-Net architecture with temporal conditioning inspired by diffusion models, TimeFlow enables accurate longitudinal registration and facilitates prospective analyses through future image prediction. Unlike traditional methods that depend on explicit smoothness regularizers and dense sequential data, TimeFlow achieves temporal consistency and continuity without these constraints. Experimental results highlight its superior performance in both future timepoint prediction and registration accuracy compared to state-of-the-art methods. Additionally, TimeFlow supports novel biological brain aging analyses, effectively differentiating neurodegenerative conditions from healthy aging. It eliminates the need for segmentation, thereby avoiding the challenges of non-trivial annotation and inconsistent segmentation errors. TimeFlow paves the way for accurate, data-efficient, and annotation-free prospective analyses of brain aging and chronic diseases.

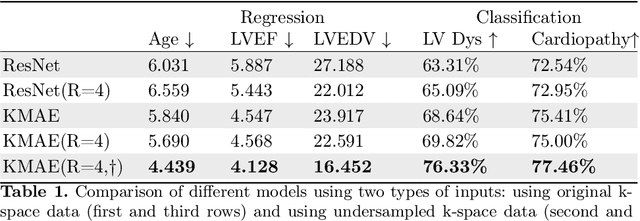

Classification, Regression and Segmentation directly from k-Space in Cardiac MRI

Jul 29, 2024

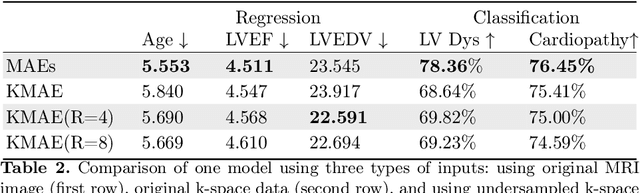

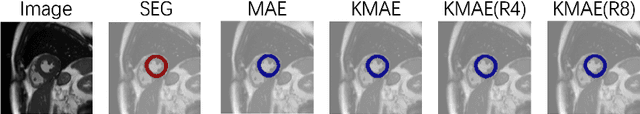

Abstract: Cardiac Magnetic Resonance Imaging (CMR) is the gold standard for diagnosing cardiovascular diseases. Clinical diagnoses predominantly rely on magnitude-only Digital Imaging and Communications in Medicine (DICOM) images, omitting crucial phase information that might provide additional diagnostic benefits. In contrast, k-space is complex-valued and encompasses both magnitude and phase information, although humans cannot perceive it directly. In this work, we propose KMAE, a Transformer-based model specifically designed to process k-space data directly, eliminating conventional intermediary conversion steps to the image domain. KMAE can handle critical cardiac disease classification, relevant phenotype regression, and cardiac morphology segmentation tasks. We utilize this model to investigate the potential of k-space-based diagnosis in cardiac MRI. Notably, this model achieves competitive classification and regression performance compared to image-domain methods, e.g., Masked Autoencoders (MAEs), and delivers satisfactory segmentation performance with a myocardium Dice score of 0.884. Last but not least, our model exhibits robust performance with consistent results even when the k-space is 8× undersampled. We encourage the MR community to explore the untapped potential of k-space and pursue end-to-end, automated diagnosis with reduced human intervention.
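
The distinction between complex-valued k-space and magnitude-only images can be illustrated with a short NumPy sketch. It builds a synthetic complex image, maps it to k-space with a centered 2D FFT, and shows that taking the magnitude after the inverse transform discards the phase. The helper names and the random 8×8 test image are assumptions for illustration only and have no connection to the KMAE implementation.

```python
import numpy as np


def image_to_kspace(image):
    """Centered 2D FFT: complex image -> complex k-space."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image)))


def kspace_to_image(kspace):
    """Centered 2D inverse FFT: complex k-space -> complex image."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(kspace)))


# Synthetic complex image with independent magnitude and phase (illustrative data).
rng = np.random.default_rng(0)
magnitude = rng.random((8, 8))
phase = rng.uniform(-np.pi, np.pi, size=(8, 8))
complex_image = magnitude * np.exp(1j * phase)

kspace = image_to_kspace(complex_image)   # complex-valued measurement domain
recovered = kspace_to_image(kspace)       # full complex image

magnitude_only = np.abs(recovered)        # what a magnitude-only image keeps
recovered_phase = np.angle(recovered)     # what a magnitude-only image discards
```

Two different phase maps with the same magnitude yield identical magnitude-only images but different k-space data, which is the information a k-space model can in principle access.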

Mamba? Catch The Hype Or Rethink What Really Helps for Image Registration

Jul 27, 2024

Abstract: Our findings indicate that adopting "advanced" computational elements fails to significantly improve registration accuracy. Instead, well-established registration-specific designs offer fair improvements, enhancing results by a marginal 1.5% over the baseline. Our findings emphasize the importance of rigorous, unbiased evaluation and contribution disentanglement of all low- and high-level registration components, rather than simply following the computer vision trends with "more advanced" computational blocks. We advocate for simpler yet effective solutions and novel evaluation metrics that go beyond conventional registration accuracy, warranting further research across diverse organs and modalities. The code is available at https://github.com/BailiangJ/rethink-reg.

Whole Heart 3D+T Representation Learning Through Sparse 2D Cardiac MR Images

Jun 01, 2024

Abstract: Cardiac Magnetic Resonance (CMR) imaging serves as the gold standard for evaluating cardiac morphology and function. Typically, a multi-view CMR stack, covering short-axis (SA) and 2/3/4-chamber long-axis (LA) views, is acquired for a thorough cardiac assessment. However, efficiently streamlining the complex, high-dimensional 3D+T CMR data and distilling a compact, coherent representation remains a challenge. In this work, we introduce a whole-heart self-supervised learning framework that utilizes masked imaging modeling to automatically uncover the correlations between spatial and temporal patches throughout the cardiac stacks. This process facilitates the generation of meaningful and well-clustered heart representations without relying on the traditionally required, and often costly, labeled data. The learned heart representation can be directly used for various downstream tasks. Furthermore, our method demonstrates remarkable robustness, ensuring consistent representations even when certain CMR planes are missing or flawed. We train our model on 14,000 unlabeled CMR samples from UK Biobank and evaluate it on 1,000 annotated samples. The proposed method demonstrates superior performance to baselines in tasks that demand comprehensive 3D+T cardiac information, e.g., cardiac phenotype (ejection fraction and ventricle volume) prediction and multi-plane/multi-frame CMR segmentation, highlighting its effectiveness in extracting comprehensive cardiac features that are both anatomically and pathologically relevant.

Direct Cardiac Segmentation from Undersampled K-space Using Transformers

May 31, 2024

Abstract: The prevailing deep learning-based methods of predicting cardiac segmentation involve reconstructed magnetic resonance (MR) images. The heavy dependency of segmentation approaches on image quality significantly limits the acceleration rate in fast MR reconstruction. Moreover, the practice of treating reconstruction and segmentation as separate sequential processes leads to artifact generation and information loss in the intermediate stage. These issues pose a great risk to achieving high-quality outcomes. To leverage the redundant k-space information overlooked in this dual-step pipeline, we introduce a novel approach to directly deriving segmentations from sparse k-space samples using a transformer (DiSK). DiSK operates by globally extracting latent features from 2D+time k-space data with attention blocks and subsequently predicting the segmentation label of query points. We evaluate our model under various acceleration factors (ranging from 4 to 64) and compare against two image-based segmentation baselines. Our model consistently outperforms the baselines in Dice score and Hausdorff distance across foreground classes for all presented sampling rates.
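
For readers unfamiliar with acceleration factors, the sketch below shows one common way to simulate undersampling: keep every R-th phase-encoding line of a 2D k-space array plus a small fully sampled centre, and zero the rest. The equispaced Cartesian pattern, the function names, and the `num_center_lines` parameter are assumptions for illustration; the abstract does not state which sampling pattern DiSK was evaluated with.

```python
import numpy as np


def cartesian_mask(num_lines, acceleration, num_center_lines=4):
    """Boolean mask over phase-encoding lines.

    Keeps every `acceleration`-th line plus `num_center_lines` lines around the
    k-space centre (a hypothetical choice for this sketch).
    """
    mask = np.zeros(num_lines, dtype=bool)
    mask[::acceleration] = True
    center = num_lines // 2
    half = num_center_lines // 2
    mask[center - half:center + half] = True
    return mask


def undersample(kspace, mask):
    """Zero out the unsampled phase-encoding lines (rows) of a 2D k-space array."""
    return kspace * mask[:, None]
```

With 64 lines and a nominal factor of 8, this mask keeps 11 lines, so the effective acceleration is `64 / mask.sum()`, slightly below the nominal value because of the extra centre lines. At a factor of 64 almost nothing but the centre remains, which illustrates why image-based baselines degrade at the upper end of the range quoted in the abstract.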
