Jiang Yang

Sinc Kolmogorov-Arnold Network and Its Applications on Physics-informed Neural Networks

Oct 05, 2024

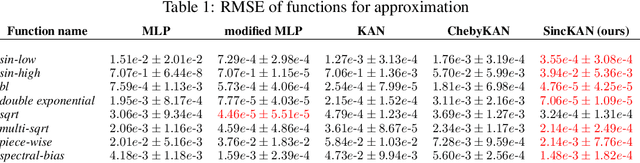

Abstract: In this paper, we propose to use Sinc interpolation in the context of Kolmogorov-Arnold Networks, neural networks with learnable activation functions that have recently gained attention as alternatives to multilayer perceptrons. Many different function representations have already been tried, but we show that Sinc interpolation offers a viable alternative, since it is known in numerical analysis to represent both smooth functions and functions with singularities well. This is important not only for function approximation but also for solving partial differential equations with physics-informed neural networks. Through a series of experiments, we show that SincKANs provide better results in almost all of the examples we have considered.
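As a rough illustration of the Sinc interpolation the abstract refers to, the sketch below evaluates the classical cardinal series, the sum over k of f(kh) · sinc((x − kh)/h), on a uniform grid. It is not the SincKAN architecture itself; the step size `h`, the node range, and the Gaussian test function are assumptions chosen for the example.

```python
import numpy as np

def sinc_interpolate(x, nodes, values, h):
    """Evaluate sum_k values[k] * sinc((x - nodes[k]) / h) at the points x."""
    x = np.asarray(x, dtype=float)
    # np.sinc is the normalized sinc: sin(pi t) / (pi t), with sinc(0) = 1.
    basis = np.sinc((x[:, None] - nodes[None, :]) / h)
    return basis @ values

# Assumed setup: uniform nodes with spacing h, sampling a smooth test function.
h = 0.25
nodes = h * np.arange(-40, 41)
values = np.exp(-nodes**2)

# Evaluate between the nodes and compare with the exact function.
x_test = np.linspace(-2.0, 2.0, 10)
approx = sinc_interpolate(x_test, nodes, values, h)
max_err = np.max(np.abs(approx - np.exp(-x_test**2)))
```

In a KAN-style layer the sample values would be learnable coefficients rather than fixed function samples; here they are fixed only to keep the example checkable.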

Action Sensitivity Learning for Temporal Action Localization

May 25, 2023

Abstract: Temporal action localization (TAL), which involves recognizing and locating action instances, is a challenging task in video understanding. Most existing approaches directly predict action classes and regress offsets to boundaries, while overlooking the discrepant importance of each frame. In this paper, we propose an Action Sensitivity Learning framework (ASL) to tackle this task, which aims to assess the value of each frame and then leverage the generated action sensitivity to recalibrate the training procedure. We first introduce a lightweight Action Sensitivity Evaluator to learn the action sensitivity at the class level and instance level, respectively. The outputs of the two branches are combined to reweight the gradient of the two sub-tasks. Moreover, based on the action sensitivity of each frame, we design an Action Sensitive Contrastive Loss to enhance features, where the action-aware frames are sampled as positive pairs to push away the action-irrelevant frames. The extensive studies on various action localization benchmarks (i.e., MultiThumos, Charades, Ego4D-Moment Queries v1.0, Epic-Kitchens 100, Thumos14 and ActivityNet1.3) show that ASL surpasses the state-of-the-art in terms of average-mAP under multiple types of scenarios, e.g., single-labeled, densely-labeled and egocentric.

Life Regression based Patch Slimming for Vision Transformers

Apr 11, 2023

Abstract: Vision transformers have achieved remarkable success in computer vision tasks by using multi-head self-attention modules to capture long-range dependencies within images. However, the high inference computation cost poses a new challenge. Several methods have been proposed to address this problem, mainly by slimming patches. In the inference stage, these methods classify patches into two classes, one to keep and the other to discard in multiple layers. This approach results in additional computation at every layer where patches are discarded, which hinders inference acceleration. In this study, we tackle the patch slimming problem from a different perspective by proposing a life regression module that determines the lifespan of each image patch in one go. During inference, the patch is discarded once the current layer index exceeds its life. Our proposed method avoids additional computation and parameters in multiple layers to enhance inference speed while maintaining competitive performance. Additionally, our approach requires fewer training epochs than other patch slimming methods.
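The discard rule stated in the abstract (a patch is dropped once the current layer index exceeds its life) can be sketched as a simple mask. This is only an illustration of that rule, not the paper's life regression module: the lifespans below are made-up values, and `keep_mask` and `survivors` are hypothetical names.

```python
import numpy as np

def keep_mask(lives, layer_idx):
    """A patch survives while the layer index has not exceeded its life."""
    return layer_idx <= lives

# Hypothetical lifespans, predicted once for 6 patches of a 4-layer model.
lives = np.array([1, 4, 2, 4, 0, 3])

patch_ids = np.arange(len(lives))  # stand-in for the patch embeddings
survivors = {}
for layer_idx in range(1, 5):
    # One comparison per layer; no extra classifier is evaluated here.
    patch_ids = patch_ids[keep_mask(lives[patch_ids], layer_idx)]
    # A transformer block would process only the surviving patches here.
    survivors[layer_idx] = patch_ids.tolist()
```

Because the lifespans are fixed up front, each layer only needs an integer comparison to decide which patches to carry forward.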

Multi-core fiber enabled fading noise suppression in φ-OFDR based quantitative distributed vibration sensing

May 03, 2022

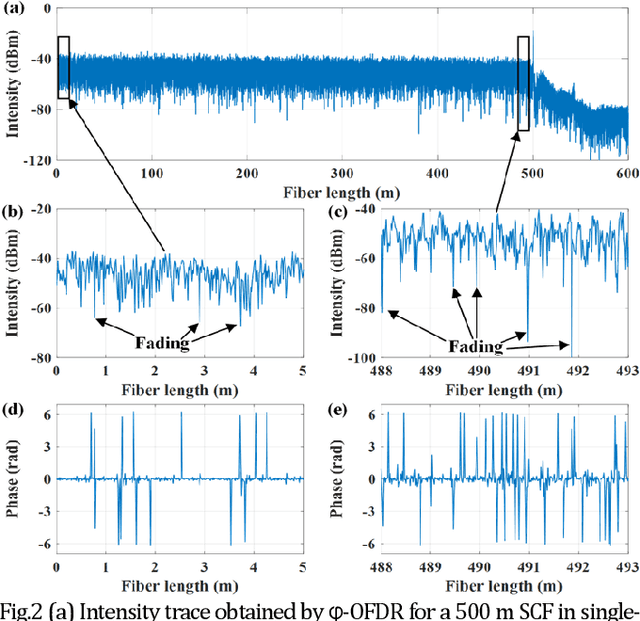

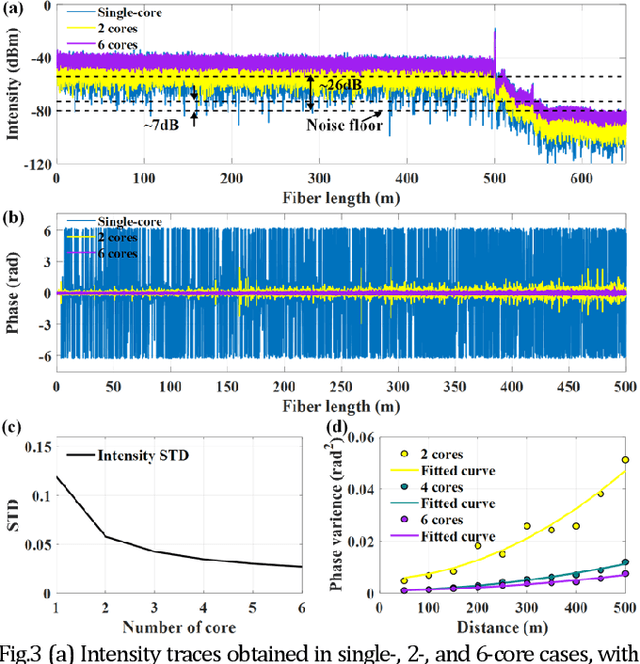

Abstract: Coherent fading has been regarded as a critical issue in phase-sensitive optical frequency domain reflectometry (φ-OFDR) based distributed fiber-optic sensing. Here, we report on an approach for fading noise suppression in φ-OFDR with multi-core fiber. By exploiting the independent nature of the randomness in the distribution of the refractive index in each of the cores, the drastic phase fluctuations due to the fading phenomena can be effectively alleviated by applying weighted vectorial averaging to the Rayleigh backscattering traces from each of the cores with distinct fading distributions. With the consistent linear response with respect to external excitation of interest for each of the cores, a demonstration of the proposed φ-OFDR with a commercial seven-core fiber has achieved highly sensitive quantitative distributed vibration sensing with about 2.2 nm length precision and 2 cm sensing resolution along the 500 m fiber, corresponding to a range resolution factor as high as about 4E-5. Featuring long distance, high sensitivity, high resolution, and fading robustness, this approach has shown promising potential in various sensing techniques for a wide range of practical scenarios.
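A minimal sketch of the idea behind vectorial averaging, under assumptions not stated in the abstract: each core contributes a complex phasor at a given fiber position, the weights are taken to be the phasor amplitudes, and the phase is read from the weighted sum. The three phasors are invented values in which one core sits in a fade. The last lines check the quoted range resolution factor as resolution divided by fiber length.

```python
import numpy as np

def weighted_vectorial_average(phasors, weights):
    """Phase of the weighted sum of complex phasors (one per core)."""
    return np.angle(np.sum(weights * phasors))

# Invented example: two healthy cores share the true phase 0.7 rad, while a
# third core is in a fade (tiny amplitude, unreliable phase).
true_phase = 0.7
phasors = np.array([
    1.00 * np.exp(1j * true_phase),
    0.80 * np.exp(1j * true_phase),
    0.01 * np.exp(1j * (true_phase + 2.5)),
])

# Assumed weighting: proportional to amplitude, so faded cores count little.
weights = np.abs(phasors)
phase = weighted_vectorial_average(phasors, weights)

# Averaging the three phases directly would be pulled off by the faded core.
naive_phase = np.mean(np.angle(phasors))

# Range resolution factor: 2 cm resolution over a 500 m fiber.
factor = 0.02 / 500.0
```

Summing phasors rather than phases lets low-amplitude, faded contributions carry correspondingly little influence on the recovered phase.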

Generalization Error Analysis of Neural networks with Gradient Based Regularization

Jul 06, 2021

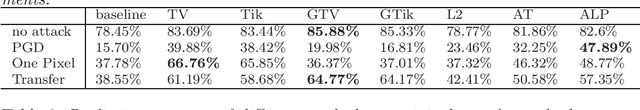

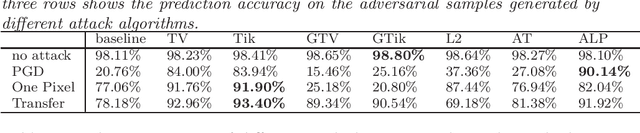

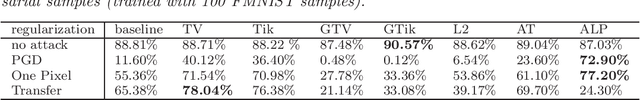

Abstract: We study gradient-based regularization methods for neural networks. We mainly focus on two regularization methods: the total variation and the Tikhonov regularization. Applying these methods is equivalent to using neural networks to solve some partial differential equations, mostly in high dimensions in practical applications. In this work, we introduce a general framework to analyze the generalization error of regularized networks. The error estimate relies on two assumptions on the approximation error and the quadrature error. Moreover, we conduct some experiments on image classification tasks to show that gradient-based methods can significantly improve the generalization ability and adversarial robustness of neural networks. A graphical extension of the gradient-based methods is also considered in the experiments.

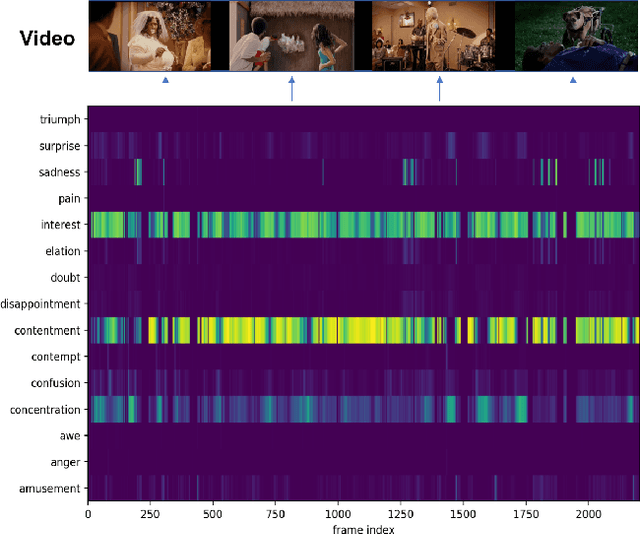

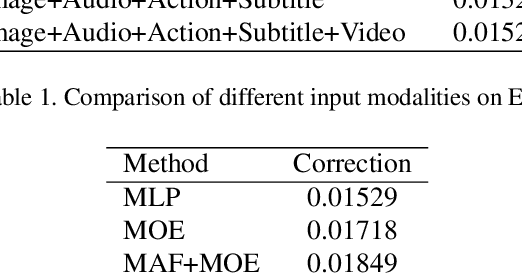

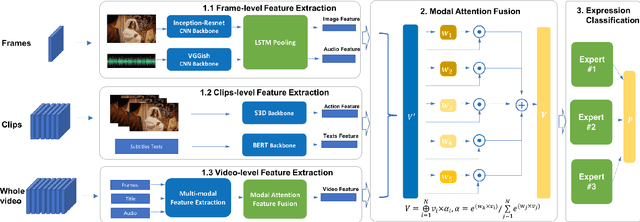

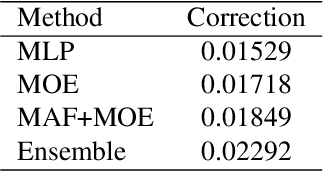

Multi-Granularity Network with Modal Attention for Dense Affective Understanding

Jun 18, 2021

Abstract: Video affective understanding, which aims to predict the expressions evoked by the video content, is desired for video creation and recommendation. In the recent EEV challenge, a dense affective understanding task is proposed that requires frame-level affective prediction. In this paper, we propose a multi-granularity network with modal attention (MGN-MA), which employs multi-granularity features for better description of the target frame. Specifically, the multi-granularity features can be divided into frame-level, clip-level and video-level features, which correspond to visual-salient content, semantic context and video theme information. Then the modal attention fusion module is designed to fuse the multi-granularity features and emphasize the more affection-relevant modalities. Finally, the fused feature is fed into a Mixture of Experts (MOE) classifier to predict the expressions. Further employing model-ensemble post-processing, the proposed method achieves a correlation score of 0.02292 in the EEV challenge.

Augmented Bi-path Network for Few-shot Learning

Jul 15, 2020

Abstract: Few-shot Learning (FSL), which aims to learn from few labeled training data, is becoming a popular research topic due to the expensive labeling cost in many real-world applications. One kind of successful FSL method learns to compare the testing (query) image and training (support) image by simply concatenating the features of the two images and feeding them into the neural network. However, with few labeled data in each class, the neural network has difficulty in learning or comparing the local features of two images. Such simple image-level comparison may cause serious misclassification. To solve this problem, we propose the Augmented Bi-path Network (ABNet) for learning to compare both global and local features at multiple scales. Specifically, the salient patches are extracted and embedded as the local features for every image. Then, the model learns to augment the features for better robustness. Finally, the model learns to compare global and local features separately, i.e., in two paths, before merging the similarities. Extensive experiments show that the proposed ABNet outperforms the state-of-the-art methods. Both quantitative and visual ablation studies are provided to verify that the proposed modules lead to more precise comparison results.

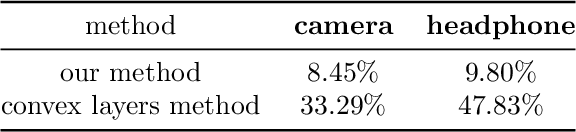

A level set representation method for N-dimensional convex shape and applications

Mar 21, 2020

Abstract: In this work, we present a new efficient method for convex shape representation, which is independent of the dimension of the objects concerned, using level-set approaches. A convexity prior is very useful for object completion in computer vision. It is a very challenging task to design an efficient method for representing high-dimensional convex objects. In this paper, we prove that the convexity of the considered object is equivalent to the convexity of the associated signed distance function. Then, the second-order condition of convex functions is used to characterize the shape convexity equivalently. We apply this new method to two applications: object segmentation with a convexity prior and the convex hull problem (especially with outliers). For both applications, the involved problems can be written as a general optimization problem with three constraints. An efficient algorithm based on the alternating direction method of multipliers is presented for the optimization problem. Numerical experiments are conducted to verify the effectiveness and efficiency of the proposed representation method and algorithm.

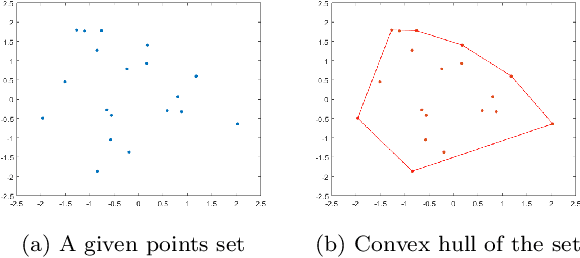

Convex hull algorithms based on some variational models

Aug 09, 2019

Abstract: Seeking the convex hull of an object is a fundamental problem arising in various tasks. In this work, we propose two variational convex hull models using level set representation for 2-dimensional data. The first one is an exact model, which can obtain the convex hull of one or multiple objects. In this model, the convex hull is characterized by the zero sublevel-set of a convex level set function, which is non-positive at every given point. By minimizing the area of the zero sublevel-set, we can find the desired convex hull. The second one is intended to obtain the convex hull of objects with outliers. Instead of requiring that all the given points be included, this model penalizes the distance from each given point to the zero sublevel-set. Existing methods in the literature are not able to handle outliers. For the solution of these models, we develop efficient numerical schemes using the alternating direction method of multipliers. Numerical examples are given to demonstrate the advantages of the proposed methods.
