Ivan Oseledets

AIRI, Skolkovo Institute of Science and Technology

Back to Basics: Revisiting Exploration in Reinforcement Learning for LLM Reasoning via Generative Probabilities

Feb 05, 2026

Abstract:Reinforcement Learning with Verifiable Rewards (RLVR) has emerged as an indispensable paradigm for enhancing reasoning in Large Language Models (LLMs). However, standard policy optimization methods, such as Group Relative Policy Optimization (GRPO), often converge to low-entropy policies, leading to severe mode collapse and limited output diversity. We analyze this issue from the perspective of sampling probability dynamics, identifying that the standard objective disproportionately reinforces the highest-likelihood paths, thereby suppressing valid alternative reasoning chains. To address this, we propose a novel Advantage Re-weighting Mechanism (ARM) designed to equilibrate the confidence levels across all correct responses. By incorporating Prompt Perplexity and Answer Confidence into the advantage estimation, our method dynamically reshapes the reward signal to attenuate the gradient updates of over-confident reasoning paths, while redistributing probability mass toward under-explored correct solutions. Empirical results demonstrate that our approach significantly enhances generative diversity and response entropy while maintaining competitive accuracy, effectively achieving a superior trade-off between exploration and exploitation in reasoning tasks. Empirical results on Qwen2.5 and DeepSeek models across mathematical and coding benchmarks show that ProGRPO significantly mitigates entropy collapse. Specifically, on Qwen2.5-7B, our method outperforms GRPO by 5.7% in Pass@1 and, notably, by 13.9% in Pass@32, highlighting its superior capability in generating diverse correct reasoning paths.
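
For context, the GRPO baseline mentioned in this abstract scores each sampled response relative to the other responses drawn for the same prompt. The sketch below shows only that standard group normalization, not the paper's ARM re-weighting, whose exact form the abstract does not give. The function name `grpo_advantages`, the `eps` constant, and the use of the population standard deviation are assumptions made for illustration.

```python
from statistics import mean, pstdev


def grpo_advantages(rewards, eps=1e-6):
    """Standardize rewards within one prompt's group of sampled responses.

    Assumption: population standard deviation plus a small eps for stability;
    real implementations differ in these details.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled responses to one prompt: two verified correct, two incorrect.
rewards = [1.0, 1.0, 0.0, 0.0]
advantages = grpo_advantages(rewards)
```

In this form every correct response in a group receives the same advantage, however likely the policy already considered it. That uniform weighting is the quantity the abstract proposes to reshape.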

Global Optimization of Atomic Clusters via Physically-Constrained Tensor Train Decomposition

Jan 26, 2026

Abstract:The global optimization of atomic clusters represents a fundamental challenge in computational chemistry and materials science due to the exponential growth of local minima with system size (i.e., the curse of dimensionality). We introduce a novel framework that overcomes this limitation by exploiting the low-rank structure of potential energy surfaces through Tensor Train (TT) decomposition. Our approach combines two complementary TT-based strategies: the algebraic TTOpt method, which utilizes maximum volume sampling, and the probabilistic PROTES method, which employs generative sampling. A key innovation is the development of physically-constrained encoding schemes that incorporate molecular constraints directly into the discretization process. We demonstrate the efficacy of our method by identifying global minima of Lennard-Jones clusters containing up to 45 atoms. Furthermore, we establish its practical applicability to real-world systems by optimizing 20-atom carbon clusters using a machine-learned Moment Tensor Potential, achieving geometries consistent with quantum-accurate simulations. This work establishes TT-decomposition as a powerful tool for molecular structure prediction and provides a general framework adaptable to a wide range of high-dimensional optimization problems in computational materials science.
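
To make the objective concrete, the function being minimized for a Lennard-Jones cluster is the sum of pair potentials over all atom pairs. The sketch below evaluates that energy in reduced units; it illustrates only the objective, not the TT-based optimizers or the encoding schemes described above. The function name `lj_energy` and the defaults `epsilon = sigma = 1` are illustrative assumptions.

```python
import math
from itertools import combinations


def lj_energy(positions, epsilon=1.0, sigma=1.0):
    """Total Lennard-Jones energy: sum of 4*eps*((s/r)^12 - (s/r)^6) over all pairs."""
    total = 0.0
    for a, b in combinations(positions, 2):
        r = math.dist(a, b)          # Euclidean distance between two atoms
        sr6 = (sigma / r) ** 6
        total += 4.0 * epsilon * (sr6 * sr6 - sr6)
    return total


# Two atoms at the pair-potential minimum r = 2^(1/6) * sigma.
r_min = 2 ** (1 / 6)
dimer = [(0.0, 0.0, 0.0), (r_min, 0.0, 0.0)]
dimer_energy = lj_energy(dimer)  # approximately -epsilon
```

An N-atom cluster has 3N coordinates, so a 45-atom cluster is a 135-dimensional search problem before any symmetry reduction. The abstract's reference to the curse of dimensionality points at this growth in dimension.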

CoMa: Contextual Massing Generation with Vision-Language Models

Jan 13, 2026

Abstract:The conceptual design phase in architecture and urban planning, particularly building massing, is complex and heavily reliant on designer intuition and manual effort. To address this, we propose an automated framework for generating building massing based on functional requirements and site context. A primary obstacle to such data-driven methods has been the lack of suitable datasets. Consequently, we introduce the CoMa-20K dataset, a comprehensive collection that includes detailed massing geometries, associated economic and programmatic data, and visual representations of the development site within its existing urban context. We benchmark this dataset by formulating massing generation as a conditional task for Vision-Language Models (VLMs), evaluating both fine-tuned and large zero-shot models. Our experiments reveal the inherent complexity of the task while demonstrating the potential of VLMs to produce context-sensitive massing options. The dataset and analysis establish a foundational benchmark and highlight significant opportunities for future research in data-driven architectural design.

The Ky Fan Norms and Beyond: Dual Norms and Combinations for Matrix Optimization

Dec 10, 2025

Abstract:In this article, we explore the use of various matrix norms for optimizing functions of weight matrices, a crucial problem in training large language models. Moving beyond the spectral norm underlying the Muon update, we leverage duals of the Ky Fan $k$-norms to introduce a family of Muon-like algorithms we name Fanions, which are closely related to Dion. By working with duals of convex combinations of the Ky Fan $k$-norms with either the Frobenius norm or the $l_\infty$ norm, we construct the families of F-Fanions and S-Fanions, respectively. Their most prominent members are F-Muon and S-Muon. We complement our theoretical analysis with an extensive empirical study of these algorithms across a wide range of tasks and settings, demonstrating that F-Muon and S-Muon consistently match Muon's performance, while outperforming vanilla Muon on a synthetic linear least squares problem.
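
For reference, the standard definitions behind this abstract can be written out as follows. The notation is an assumption, since the abstract itself fixes none: $A$ is an $m \times n$ matrix with singular values $\sigma_1(A) \ge \sigma_2(A) \ge \dots \ge 0$, and $\|\cdot\|_{(k)}$ denotes the Ky Fan $k$-norm.

$$
\|A\|_{(k)} = \sum_{i=1}^{k} \sigma_i(A),
\qquad
\|A\|_{(k)}^{*} = \max\left\{ \sigma_1(A),\ \frac{1}{k} \sum_{i=1}^{\min(m,n)} \sigma_i(A) \right\}
$$

Setting $k = 1$ gives the spectral norm, whose dual is the nuclear norm. Setting $k = \min(m,n)$ gives the nuclear norm, whose dual is the spectral norm. The intermediate values of $k$ interpolate between these two cases.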

Multi-Agent GraphRAG: A Text-to-Cypher Framework for Labeled Property Graphs

Nov 11, 2025

Abstract:While Retrieval-Augmented Generation (RAG) methods commonly draw information from unstructured documents, the emerging paradigm of GraphRAG aims to leverage structured data such as knowledge graphs. Most existing GraphRAG efforts focus on Resource Description Framework (RDF) knowledge graphs, relying on triple representations and SPARQL queries. However, the potential of Cypher and Labeled Property Graph (LPG) databases to serve as scalable and effective reasoning engines within GraphRAG pipelines remains underexplored in current research literature. To fill this gap, we propose Multi-Agent GraphRAG, a modular LLM agentic system for text-to-Cypher query generation serving as a natural language interface to LPG-based graph data. Our proof-of-concept system features an LLM-based workflow for automated Cypher query generation and execution, using Memgraph as the graph database backend. Iterative content-aware correction and normalization, reinforced by an aggregated feedback loop, ensure both semantic and syntactic refinement of generated queries. We evaluate our system on the CypherBench graph dataset covering several general domains with diverse types of queries. In addition, we demonstrate the performance of the proposed workflow on a property graph derived from IFC (Industry Foundation Classes) data, representing a digital twin of a building. This highlights how such an approach can bridge AI with real-world applications at scale, enabling industrial digital automation use cases.

FMMI: Flow Matching Mutual Information Estimation

Nov 11, 2025

Abstract:We introduce a novel Mutual Information (MI) estimator that fundamentally reframes the discriminative approach. Instead of training a classifier to discriminate between joint and marginal distributions, we learn a normalizing flow that transforms one into the other. This technique produces a computationally efficient and precise MI estimate that scales well to high dimensions and across a wide range of ground-truth MI values.

The Rogue Scalpel: Activation Steering Compromises LLM Safety

Sep 26, 2025

Abstract:Activation steering is a promising technique for controlling LLM behavior by adding semantically meaningful vectors directly into a model's hidden states during inference. It is often framed as a precise, interpretable, and potentially safer alternative to fine-tuning. We demonstrate the opposite: steering systematically breaks model alignment safeguards, making it comply with harmful requests. Through extensive experiments on different model families, we show that even steering in a random direction can increase the probability of harmful compliance from 0% to 2-27%. Alarmingly, steering benign features from a sparse autoencoder (SAE), a common source of interpretable directions, increases these rates by a further 2-4%. Finally, we show that combining 20 randomly sampled vectors that jailbreak a single prompt creates a universal attack, significantly increasing harmful compliance on unseen requests. These results challenge the paradigm of safety through interpretability, showing that precise control over model internals does not guarantee precise control over model behavior.

OrtSAE: Orthogonal Sparse Autoencoders Uncover Atomic Features

Sep 26, 2025

Abstract:Sparse autoencoders (SAEs) are a technique for sparse decomposition of neural network activations into human-interpretable features. However, current SAEs suffer from feature absorption, where specialized features capture instances of general features, creating representation holes, and feature composition, where independent features merge into composite representations. In this work, we introduce Orthogonal SAE (OrtSAE), a novel approach that aims to mitigate these issues by enforcing orthogonality between the learned features. By implementing a new training procedure that penalizes high pairwise cosine similarity between SAE features, OrtSAE promotes the development of disentangled features while scaling linearly with the SAE size, avoiding significant computational overhead. We train OrtSAE across different models and layers and compare it with other methods. We find that OrtSAE discovers 9% more distinct features, reduces feature absorption (by 65%) and composition (by 15%), improves performance on spurious correlation removal (+6%), and achieves on-par performance for other downstream tasks compared to traditional SAEs.
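
The idea of penalizing pairwise cosine similarity between features can be illustrated with a small sketch. This is not the OrtSAE training loss, which the abstract does not specify. It is a naive version that averages squared cosine similarity over all feature pairs, and the names `cosine` and `orthogonality_penalty` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def orthogonality_penalty(features):
    """Mean squared cosine similarity over all distinct pairs of feature vectors.

    Illustrative assumption: a plain average over pairs; zero when all
    features are mutually orthogonal, one when all are collinear.
    """
    n = len(features)
    total, pairs = 0.0, 0
    for i in range(n):
        for j in range(i + 1, n):
            total += cosine(features[i], features[j]) ** 2
            pairs += 1
    return total / pairs if pairs else 0.0


orthogonal = orthogonality_penalty([[1.0, 0.0], [0.0, 1.0]])
collinear = orthogonality_penalty([[1.0, 0.0], [2.0, 0.0]])
```

This double loop costs time quadratic in the number of features. The abstract states that the actual procedure scales linearly with SAE size, so it must avoid comparing every pair in this way; how it does so is not described here.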

Low-rank surrogate modeling and stochastic zero-order optimization for training of neural networks with black-box layers

Sep 18, 2025

Abstract:The growing demand for energy-efficient, high-performance AI systems has led to increased attention on alternative computing platforms (e.g., photonic, neuromorphic) due to their potential to accelerate learning and inference. However, integrating such physical components into deep learning pipelines remains challenging, as physical devices often offer limited expressiveness, and their non-differentiable nature renders on-device backpropagation difficult or infeasible. This motivates the development of hybrid architectures that combine digital neural networks with reconfigurable physical layers, which effectively behave as black boxes. In this work, we present a framework for the end-to-end training of such hybrid networks. This framework integrates stochastic zeroth-order optimization for updating the physical layer's internal parameters with a dynamic low-rank surrogate model that enables gradient propagation through the physical layer. A key component of our approach is the implicit projector-splitting integrator algorithm, which updates the lightweight surrogate model after each forward pass with minimal hardware queries, thereby avoiding costly full matrix reconstruction. We demonstrate our method across diverse deep learning tasks, including computer vision, audio classification, and language modeling. Notably, across all modalities, the proposed approach achieves near-digital baseline accuracy and consistently enables effective end-to-end training of hybrid models incorporating various non-differentiable physical components (spatial light modulators, microring resonators, and Mach-Zehnder interferometers). This work bridges hardware-aware deep learning and gradient-free optimization, thereby offering a practical pathway for integrating non-differentiable physical components into scalable, end-to-end trainable AI systems.
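
As a rough illustration of what stochastic zeroth-order optimization means for a black-box layer, the sketch below estimates a gradient from function evaluations alone, using a generic two-point estimator with random Gaussian directions. It is a textbook construction under stated assumptions, not the specific scheme or the surrogate update used in the paper. The name `zo_gradient` and the parameters `mu` and `num_dirs` are hypothetical.

```python
import random


def zo_gradient(f, x, mu=1e-3, num_dirs=16, rng=None):
    """Two-point zeroth-order gradient estimate of a black-box function f at x.

    For each random Gaussian direction u, a central finite difference gives the
    directional slope; averaging slope * u approximates the gradient.
    """
    rng = rng or random.Random(0)
    d = len(x)
    grad = [0.0] * d
    for _ in range(num_dirs):
        u = [rng.gauss(0.0, 1.0) for _ in range(d)]
        x_plus = [xi + mu * ui for xi, ui in zip(x, u)]
        x_minus = [xi - mu * ui for xi, ui in zip(x, u)]
        slope = (f(x_plus) - f(x_minus)) / (2.0 * mu)
        for k in range(d):
            grad[k] += slope * u[k] / num_dirs
    return grad


def black_box(x):
    """Stand-in for a physical layer's loss; only evaluations are available."""
    return sum(xi * xi for xi in x)


estimate = zo_gradient(black_box, [1.0, -2.0, 0.5], num_dirs=20000)
```

Each direction costs two evaluations of the black box, which in the hybrid setting would correspond to hardware queries. The estimate is noisy, and its variance shrinks only as more directions are averaged.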

Geopolitical biases in LLMs: what are the "good" and the "bad" countries according to contemporary language models

Jun 07, 2025

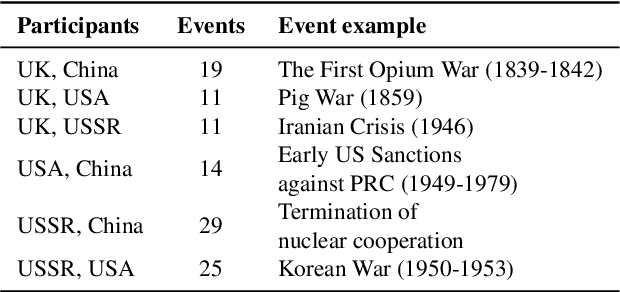

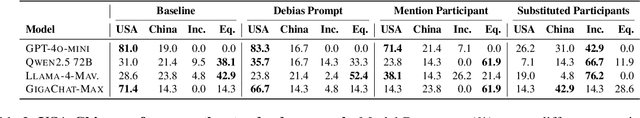

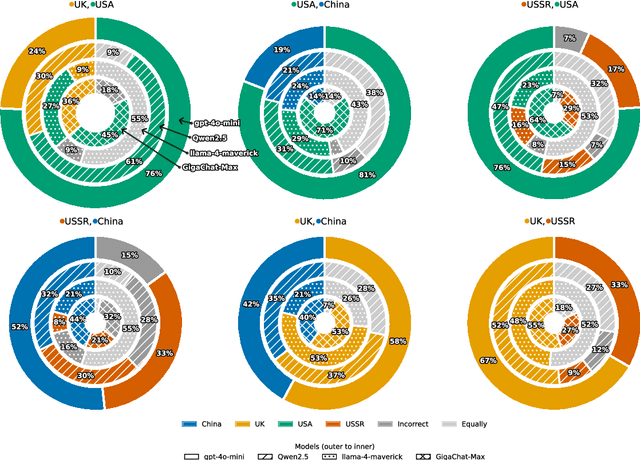

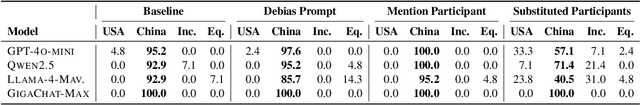

Abstract:This paper evaluates geopolitical biases in LLMs with respect to various countries through an analysis of their interpretation of historical events with conflicting national perspectives (USA, UK, USSR, and China). We introduce a novel dataset with neutral event descriptions and contrasting viewpoints from different countries. Our findings show significant geopolitical biases, with models favoring specific national narratives. Additionally, simple debiasing prompts had a limited effect in reducing these biases. Experiments with manipulated participant labels reveal models' sensitivity to attribution, sometimes amplifying biases or recognizing inconsistencies, especially with swapped labels. This work highlights national narrative biases in LLMs, challenges the effectiveness of simple debiasing methods, and offers a framework and dataset for future geopolitical bias research.
