Jia Sun

WDFFU-Mamba: A Wavelet-guided Dual-attention Feature Fusion Mamba for Breast Tumor Segmentation in Ultrasound Images

Dec 19, 2025

Abstract: Breast ultrasound (BUS) image segmentation plays a vital role in assisting clinical diagnosis and early tumor screening. However, challenges such as speckle noise, imaging artifacts, irregular lesion morphology, and blurred boundaries severely hinder accurate segmentation. To address these challenges, this work aims to design a robust and efficient model capable of automatically segmenting breast tumors in BUS images. We propose a novel segmentation network named WDFFU-Mamba, which integrates wavelet-guided enhancement and dual-attention feature fusion within a U-shaped Mamba architecture. A Wavelet-denoised High-Frequency-guided Feature (WHF) module is employed to enhance low-level representations through noise-suppressed high-frequency cues. A Dual Attention Feature Fusion (DAFF) module is also introduced to effectively merge skip-connected and semantic features, improving contextual consistency. Extensive experiments on two public BUS datasets demonstrate that WDFFU-Mamba achieves superior segmentation accuracy, significantly outperforming existing methods in terms of Dice coefficient and 95th percentile Hausdorff Distance (HD95). The combination of wavelet-domain enhancement and attention-based fusion greatly improves both the accuracy and robustness of BUS image segmentation, while maintaining computational efficiency. The proposed WDFFU-Mamba model not only delivers strong segmentation performance but also exhibits desirable generalization ability across datasets, making it a promising solution for real-world clinical applications in breast tumor ultrasound analysis.
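For reference, the Dice coefficient named as an evaluation metric above measures the overlap between a predicted mask and the ground-truth mask. The following is a minimal pure-Python sketch of that metric only; the function name `dice_coefficient`, the nested-list mask format, and the convention for two empty masks are assumptions made for illustration and are not taken from the paper.

```python
def dice_coefficient(pred, target):
    """Dice = 2 * |A intersect B| / (|A| + |B|) for two binary masks.

    Masks are nested lists of 0/1 values (a hypothetical format chosen
    for this sketch).
    """
    flat_pred = [int(bool(v)) for row in pred for v in row]
    flat_target = [int(bool(v)) for row in target for v in row]
    if len(flat_pred) != len(flat_target):
        raise ValueError("masks must have the same number of pixels")

    # Count pixels that are foreground in both masks.
    intersection = sum(p & t for p, t in zip(flat_pred, flat_target))
    total = sum(flat_pred) + sum(flat_target)

    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


pred = [[0, 1, 1],
        [0, 1, 0]]
target = [[0, 1, 0],
          [0, 1, 1]]
score = dice_coefficient(pred, target)
print(f"Dice = {score:.4f}")
```

In this toy case each mask has three foreground pixels and two of them coincide, so the score is 2·2 / (3 + 3) = 2/3.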

ScaleADFG: Affordance-based Dexterous Functional Grasping via Scalable Dataset

Nov 12, 2025

Abstract: Dexterous functional tool-use grasping is essential for effective robotic manipulation of tools. However, existing approaches face significant challenges in efficiently constructing large-scale datasets and ensuring generalizability to everyday object scales. These issues primarily arise from size mismatches between robotic and human hands, and the diversity in real-world object scales. To address these limitations, we propose the ScaleADFG framework, which consists of a fully automated dataset construction pipeline and a lightweight grasp generation network. Our dataset pipeline introduces an affordance-based algorithm to synthesize diverse tool-use grasp configurations without expert demonstrations, allowing flexible object-hand size ratios and enabling large robotic hands (compared to human hands) to grasp everyday objects effectively. Additionally, we leverage pre-trained models to generate extensive 3D assets and facilitate efficient retrieval of object affordances. Our dataset comprises five object categories, each containing over 1,000 unique shapes with 15 scale variations. After filtering, the dataset includes over 60,000 grasps for each of two dexterous robotic hands. On top of this dataset, we train a lightweight, single-stage grasp generation network with a notably simple loss design, eliminating the need for post-refinement. This demonstrates the critical importance of large-scale datasets and multi-scale object variants for effective training. Extensive experiments in simulation and on a real robot confirm that the ScaleADFG framework exhibits strong adaptability to objects of varying scales, enhancing functional grasp stability, diversity, and generalizability. Moreover, our network exhibits effective zero-shot transfer to real-world objects. The project page is available at https://sizhe-wang.github.io/ScaleADFG_webpage

Kolmogorov Arnold Informed neural network: A physics-informed deep learning framework for solving PDEs based on Kolmogorov Arnold Networks

Jun 16, 2024

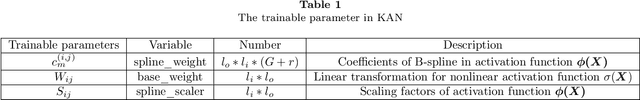

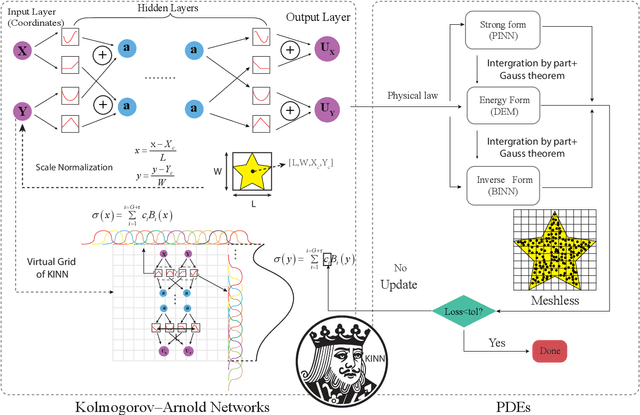

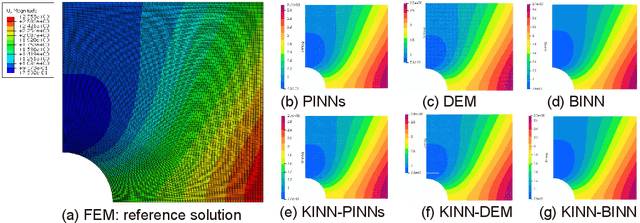

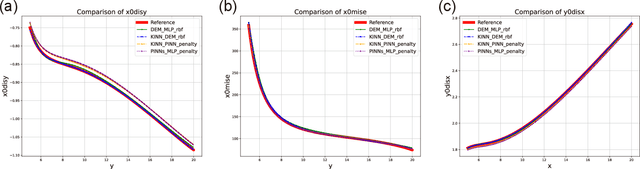

Abstract: AI for partial differential equations (PDEs) has garnered significant attention, particularly with the emergence of Physics-informed neural networks (PINNs). The recent advent of the Kolmogorov-Arnold Network (KAN) indicates that there is potential to revisit and enhance the previously MLP-based PINNs. Compared to MLPs, KANs offer interpretability and require fewer parameters. PDEs can be described in various forms, such as strong form, energy form, and inverse form. While mathematically equivalent, these forms are not computationally equivalent, making the exploration of different PDE formulations significant in computational physics. Thus, we propose different PDE forms based on KAN instead of MLP, termed Kolmogorov-Arnold-Informed Neural Network (KINN). We systematically compare MLP and KAN in various numerical examples of PDEs, including multi-scale, singularity, stress concentration, nonlinear hyperelasticity, heterogeneous, and complex geometry problems. Our results demonstrate that KINN significantly outperforms MLP in terms of accuracy and convergence speed for numerous PDEs in computational solid mechanics, except for the complex geometry problem. This highlights KINN's potential for more efficient and accurate PDE solutions in AI for PDEs.
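To illustrate why mathematically equivalent forms are not computationally equivalent, consider a Poisson problem $-\Delta u = f$ in $\Omega$ with $u = \bar{u}$ on $\partial\Omega$. This is a textbook example chosen here for illustration, not one taken from the paper; the network ansatz $u_\theta$, the collocation points $x_i$, $x_j$, and the penalty weight $\lambda$ are likewise assumed notation. The strong form and the energy form lead to different training losses:

```latex
% Strong form: penalize the PDE residual at N interior collocation points,
% plus a boundary penalty at M boundary points.
\mathcal{L}_{\text{strong}}(\theta)
  = \frac{1}{N}\sum_{i=1}^{N}\bigl|\Delta u_\theta(x_i) + f(x_i)\bigr|^{2}
  + \lambda\,\frac{1}{M}\sum_{j=1}^{M}\bigl|u_\theta(x_j) - \bar{u}(x_j)\bigr|^{2}

% Energy form: minimize the potential energy functional over admissible
% functions, i.e. those satisfying u_\theta = \bar{u} on \partial\Omega.
\mathcal{L}_{\text{energy}}(\theta)
  = \int_{\Omega}\Bigl(\tfrac{1}{2}\,\bigl|\nabla u_\theta\bigr|^{2} - f\,u_\theta\Bigr)\,\mathrm{d}\Omega
```

The strong-form loss needs second derivatives of the network output, while the energy-form loss needs only first derivatives but relies on accurate numerical integration and on an ansatz that satisfies the essential boundary condition.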

EVE: Efficient Vision-Language Pre-training with Masked Prediction and Modality-Aware MoE

Aug 23, 2023

Abstract: Building scalable vision-language models to learn from diverse, multimodal data remains an open challenge. In this paper, we introduce an Efficient Vision-languagE foundation model, namely EVE, which is one unified multimodal Transformer pre-trained solely by one unified pre-training task. Specifically, EVE encodes both vision and language within a shared Transformer network integrated with modality-aware sparse Mixture-of-Experts (MoE) modules, which capture modality-specific information by selectively switching to different experts. To unify pre-training tasks of vision and language, EVE performs masked signal modeling on image-text pairs to reconstruct masked signals, i.e., image pixels and text tokens, given visible signals. This simple yet effective pre-training objective accelerates training by 3.5x compared to the model pre-trained with Image-Text Contrastive and Image-Text Matching losses. Owing to the combination of the unified architecture and pre-training task, EVE is easy to scale up, enabling better downstream performance with fewer resources and faster training speed. Despite its simplicity, EVE achieves state-of-the-art performance on various vision-language downstream tasks, including visual question answering, visual reasoning, and image-text retrieval.

Progressive Transfer Learning for Dexterous In-Hand Manipulation with Multi-Fingered Anthropomorphic Hand

Apr 19, 2023

Abstract: Dexterous in-hand manipulation for a multi-fingered anthropomorphic hand is extremely difficult because of the high-dimensional state and action spaces and the rich contact patterns between the fingers and objects. Even though deep reinforcement learning has made moderate progress and demonstrated its strong potential for manipulation, it still faces certain challenges, such as large-scale data collection and high sample complexity. In particular, even for slightly changed scenes, it typically needs to re-collect vast amounts of data and carry out numerous iterations of fine-tuning. Remarkably, humans can quickly transfer learned manipulation skills to different scenarios with little supervision. Inspired by this flexible human transfer learning capability, we propose a novel progressive transfer learning framework (PTL) for dexterous in-hand manipulation, based on efficiently utilizing the collected trajectories and the source-trained dynamics model. This framework adopts progressive neural networks for dynamics model transfer learning on samples selected by a new sample selection method based on the dynamics properties, rewards, and scores of the trajectories. Experimental results on contact-rich anthropomorphic hand manipulation tasks show that our method can efficiently and effectively learn in-hand manipulation skills with a few online attempts and adjustment learning under the new scene. Compared to learning from scratch, our method can reduce training time costs by 95%.

Learning Tri-mode Grasping for Ambidextrous Robot Picking

Feb 28, 2023

Abstract: Object picking in cluttered scenes is a widely investigated field of robot manipulation; however, ambidextrous robot picking is still an important and challenging issue. We found that the fusion of different prehensile actions (grasp and suction) can expand the range of objects that can be picked by a robot, and that the fusion of prehensile and nonprehensile (push) actions can expand the picking space of an ambidextrous robot. In this paper, we propose a Push-Grasp-Suction (PGS) tri-mode grasping learning network for ambidextrous robot picking through the fusion of different prehensile actions and the fusion of prehensile and nonprehensile actions. The prehensile branch of PGS takes point clouds as input, and the 6-DoF picking configurations of grasp and suction in cluttered scenes are generated by multi-task point cloud learning. The nonprehensile branch, which takes depth images as input, generates an instance segmentation map and a push configuration, cooperating with the prehensile actions to complete the picking of objects outside the single-arm space. PGS generalizes well in real scenes and achieves state-of-the-art picking performance.

DCM: Deep complementary energy method based on the principle of minimum complementary energy

Feb 13, 2023

Abstract: The principles of minimum potential energy and minimum complementary energy are the most important variational principles in solid mechanics. The deep energy method (DEM), which has received much attention, is based on the principle of minimum potential energy, but it lacks the important form of minimum complementary energy. To fill the gap, we propose a deep complementary energy method (DCM) based on the principle of minimum complementary energy. The output function of DCM is the stress function, which naturally satisfies the equilibrium equation. We extend the proposed DCM algorithm to DCM-Plus (DCM-P), adding the terms that naturally satisfy the biharmonic equation in the Airy stress function. We combine operator learning with physical equations and propose a deep complementary energy operator method (DCM-O), including branch net, trunk net, basis net, and particular net. DCM-O is first trained on data from existing high-fidelity numerical results. Then the complementary energy is used to train the branch net and trunk net in DCM-O. To analyze DCM performance, we present numerical results for the most common stress functions, the Prandtl and Airy stress functions. The proposed DCM is used to model representative mechanical problems with different types of boundary conditions. We compare DCM with the existing PINNs and DEM algorithms. The results show that DCM is well suited to problems dominated by displacement boundary conditions, which is shown by mathematical derivation as well as by numerical experiments. DCM-P and DCM-O can improve the accuracy and efficiency of DCM. DCM is an essential supplementary energy form to the deep energy method. Operator learning based on the energy method can balance data and physical equations well, giving computational mechanics broad research prospects.
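The statement that a stress function naturally satisfies equilibrium can be checked directly for the Airy stress function. The short derivation below is the standard two-dimensional result without body forces; it is included as background and is not reproduced from the paper, and the symbol $\phi$ for the Airy stress function is assumed notation.

```latex
% Stresses derived from the Airy stress function \phi(x, y):
\sigma_{xx} = \frac{\partial^{2}\phi}{\partial y^{2}}, \qquad
\sigma_{yy} = \frac{\partial^{2}\phi}{\partial x^{2}}, \qquad
\sigma_{xy} = -\frac{\partial^{2}\phi}{\partial x\,\partial y}

% Both equilibrium equations then hold identically for any smooth \phi:
\frac{\partial\sigma_{xx}}{\partial x} + \frac{\partial\sigma_{xy}}{\partial y}
  = \frac{\partial^{3}\phi}{\partial x\,\partial y^{2}}
  - \frac{\partial^{3}\phi}{\partial x\,\partial y^{2}} = 0, \qquad
\frac{\partial\sigma_{xy}}{\partial x} + \frac{\partial\sigma_{yy}}{\partial y}
  = -\frac{\partial^{3}\phi}{\partial x^{2}\,\partial y}
  + \frac{\partial^{3}\phi}{\partial x^{2}\,\partial y} = 0

% For isotropic linear elasticity, compatibility reduces to the biharmonic equation:
\nabla^{4}\phi = 0
```

Because equilibrium is built into the representation, a network that outputs $\phi$ only has to be trained toward compatibility and the boundary conditions.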

BINN: A deep learning approach for computational mechanics problems based on boundary integral equations

Jan 11, 2023

Abstract: We propose boundary-integral type neural networks (BINN) for boundary value problems in computational mechanics. The boundary integral equations are employed to transfer all the unknowns to the boundary; the unknowns are then approximated using neural networks and solved through a training process. The loss function is chosen as the residuals of the boundary integral equations. Regularization techniques are adopted to efficiently evaluate the weakly singular and Cauchy principal value integrals in the boundary integral equations. Potential problems and elastostatic problems are the main focus of this article as a demonstration. The proposed method has several outstanding advantages: First, the dimension of the original problem is reduced by one, so the number of degrees of freedom is greatly reduced. Second, the proposed method does not require any extra treatment to introduce the boundary conditions, since they are naturally considered through the boundary integral equations. Therefore, the method is suitable for complex geometries. Third, BINN is suitable for problems on infinite or semi-infinite domains. Moreover, BINN can easily handle heterogeneous problems with a single neural network without domain decomposition.

MetaFormer: A Unified Meta Framework for Fine-Grained Recognition

Mar 05, 2022

Abstract: Fine-Grained Visual Classification (FGVC) is the task of recognizing objects belonging to multiple subordinate categories of a super-category. Recent state-of-the-art methods usually design sophisticated learning pipelines to tackle this task. However, visual information alone is often not sufficient to accurately differentiate between fine-grained visual categories. Meta-information (e.g., spatio-temporal prior, attribute, and text description) now often accompanies the images. This inspires us to ask the question: Is it possible to use a unified and simple framework to utilize various meta-information to assist in fine-grained identification? To answer this question, we explore a unified and strong meta-framework (MetaFormer) for fine-grained visual classification. In practice, MetaFormer provides a simple yet effective approach to address the joint learning of vision and various meta-information. Moreover, MetaFormer also provides a strong baseline for FGVC without bells and whistles. Extensive experiments demonstrate that MetaFormer can effectively use various meta-information to improve the performance of fine-grained recognition. In a fair comparison, MetaFormer can outperform the current SotA approaches with only vision information on the iNaturalist2017 and iNaturalist2018 datasets. Adding meta-information, MetaFormer can exceed the current SotA approaches by 5.9% and 5.3%, respectively. Moreover, MetaFormer can achieve 92.3% and 92.7% on CUB-200-2011 and NABirds, which significantly outperforms the SotA approaches. The source code and pre-trained models are released at https://github.com/dqshuai/MetaFormer.

Computer-aided Recognition and Assessment of a Porous Bioelastomer on Ultrasound Images for Regenerative Medicine Applications

Jan 31, 2022

Abstract: Biodegradable elastic scaffolds have attracted increasing attention in the field of soft tissue repair and tissue engineering. These scaffolds, made of porous bioelastomers, support tissue ingrowth along with their own degradation. It is necessary to develop a computer-aided analysis method based on ultrasound images to identify the degradation performance of the scaffold, not only to obviate the need for destructive testing, but also to monitor the scaffold's degradation and tissue ingrowth over time. It is difficult to extract a continuous and accurate contour of a porous bioelastomer using a single traditional image processing algorithm. This paper proposes a joint algorithm for the bioelastomer's contour detection and a texture feature extraction method for monitoring the degradation behavior of the bioelastomer. The mean-shift clustering method is used to obtain the clustering feature information of the bioelastomer and the native tissue. Then the OTSU image binarization method automatically selects the optimal threshold value to convert the grayscale ultrasound image into a binary image. The Canny edge detector is used to extract the complete contour of the bioelastomer. The first-order and second-order statistical features of texture are extracted. The proposed joint algorithm not only achieves the ideal extraction of the bioelastomer's contours in ultrasound images, but also gives valuable feedback on the degradation behavior of the bioelastomer at the implant site based on the changes in texture characteristics and contour area. The preliminary results of this study suggest that the proposed computer-aided image processing techniques have value and potential in the non-invasive analysis of tissue scaffolds in vivo based on ultrasound images and may help tissue engineers evaluate the tissue scaffold's degradation and cellular ingrowth progress and improve scaffold designs.
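The Otsu binarization step mentioned above chooses the threshold that maximizes the between-class variance of the gray-level histogram. The pure-Python sketch below illustrates that step only; it does not cover the mean-shift clustering or Canny edge detection stages, and the function names `otsu_threshold` and `binarize`, the nested-list image format, and the toy image are assumptions for illustration rather than the authors' implementation.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes between-class variance.

    `pixels` is a flat list of integer intensities in [0, levels).
    Pixels <= t form class 0 and pixels > t form class 1.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in class 0
    sum0 = 0  # sum of intensities in class 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue  # class 0 is still empty
        w1 = total - w0
        if w1 == 0:
            break  # class 1 is empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance up to a constant factor 1 / total**2.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize(image, t):
    """Map a grayscale image (nested lists) to a 0/1 mask using threshold t."""
    return [[1 if v > t else 0 for v in row] for row in image]


# Toy image (hypothetical values): a dark region above a bright region.
image = [[10, 12, 11],
         [200, 210, 205]]
flat = [v for row in image for v in row]
t = otsu_threshold(flat)
mask = binarize(image, t)
print(t, mask)
```

On this toy image the selected threshold separates the dark row from the bright row. Ties in the variance are resolved toward the lowest gray level, which is a design choice of this sketch.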
