Guangyun Xu

Learning Tri-mode Grasping for Ambidextrous Robot Picking

Feb 28, 2023

Abstract: Object picking in cluttered scenes is a widely investigated field of robot manipulation; however, ambidextrous robot picking remains an important and challenging problem. We find that fusing different prehensile actions (grasp and suction) expands the range of objects a robot can pick, and that fusing prehensile actions with a nonprehensile action (push) expands the picking space of an ambidextrous robot. In this paper, we propose a Push-Grasp-Suction (PGS) tri-mode grasping learning network for ambidextrous robot picking that combines both kinds of fusion. The prehensile branch of PGS takes point clouds as input and generates 6-DoF grasp and suction configurations in cluttered scenes through multi-task point cloud learning. The nonprehensile branch takes depth images as input and generates an instance segmentation map and a push configuration, cooperating with the prehensile actions to pick objects that lie outside the single-arm space. PGS generalizes well to real scenes and achieves state-of-the-art picking performance.

GPR: Grasp Pose Refinement Network for Cluttered Scenes

May 18, 2021

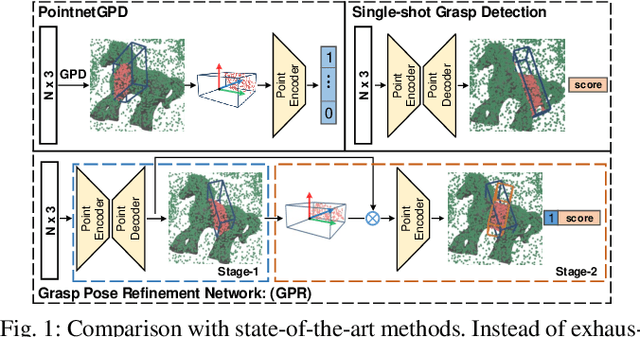

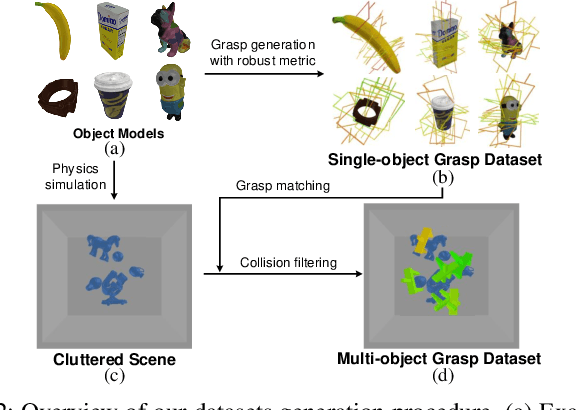

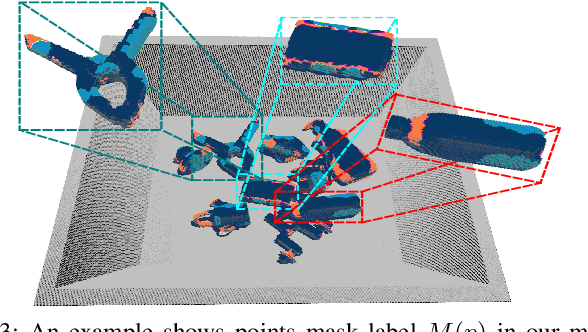

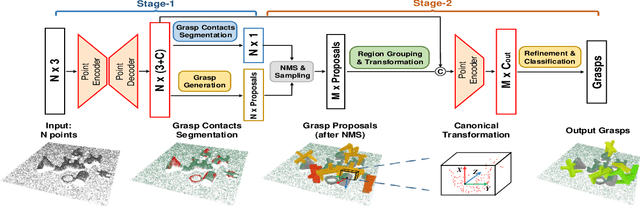

Abstract: Object grasping in cluttered scenes is a widely investigated field of robot manipulation. Most current works focus on estimating grasp poses from point clouds with an efficient single-shot grasp detection network. However, because these methods lack geometry awareness of the local grasping area, they may produce severe collisions and unstable grasp configurations. In this paper, we propose a two-stage grasp pose refinement network that detects grasps globally while fine-tuning low-quality grasps and filtering noisy grasps locally. Furthermore, we extend the 6-DoF grasp with an extra dimension, the grasp width, which is critical for collision-free grasping in cluttered scenes. The network takes a single-view point cloud as input and predicts dense and precise grasp configurations. To enhance generalization, we build a synthetic single-object grasp dataset covering 150 commodities of various shapes, and a multi-object cluttered scene dataset of 100k point clouds with robust, dense grasp poses and mask annotations. Experiments conducted on a Yumi IRB-1400 robot demonstrate that the model trained on our dataset performs well in real environments and outperforms previous methods by a large margin.
