He Kong

Intention-Aware Diffusion Model for Pedestrian Trajectory Prediction

Aug 10, 2025

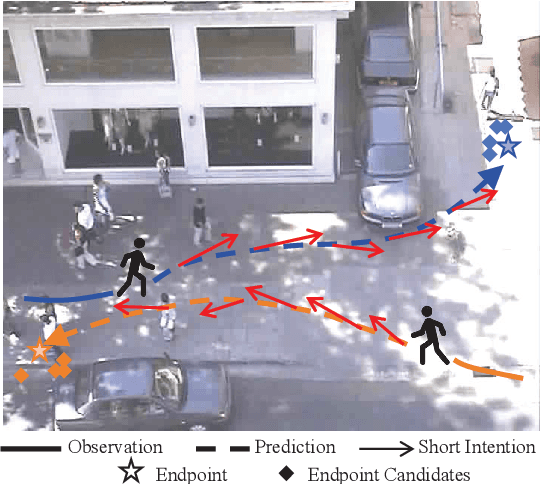

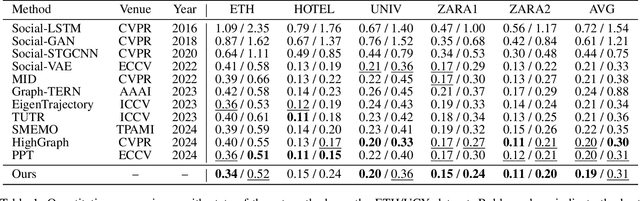

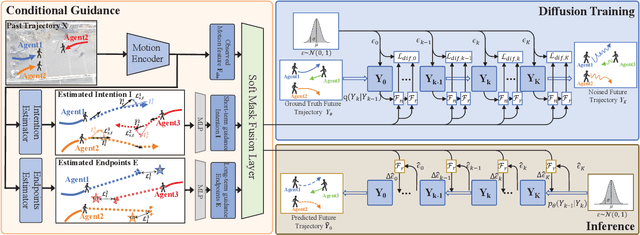

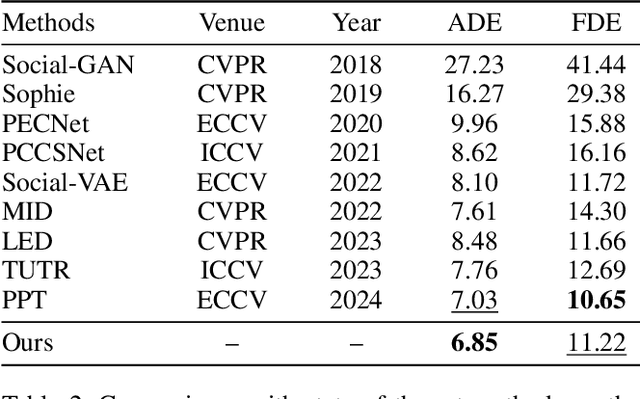

Abstract: Predicting pedestrian motion trajectories is critical for the path planning and motion control of autonomous vehicles. Recent diffusion-based models have shown promising results in capturing the inherent stochasticity of pedestrian behavior for trajectory prediction. However, the absence of explicit semantic modelling of pedestrian intent in many diffusion-based methods may result in misinterpreted behaviors and reduced prediction accuracy. To address the above challenges, we propose a diffusion-based pedestrian trajectory prediction framework that incorporates both short-term and long-term motion intentions. Short-term intent is modelled using a residual polar representation, which decouples direction and magnitude to capture fine-grained local motion patterns. Long-term intent is estimated through a learnable, token-based endpoint predictor that generates multiple candidate goals with associated probabilities, enabling multimodal and context-aware intention modelling. Furthermore, we enhance the diffusion process by incorporating adaptive guidance and a residual noise predictor that dynamically refines denoising accuracy. The proposed framework is evaluated on the widely used ETH, UCY, and SDD benchmarks, demonstrating competitive results against state-of-the-art methods.
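To make the idea of decoupling direction and magnitude concrete, here is a minimal sketch that converts consecutive 2D positions into (magnitude, angle) step pairs and back. The helper names `to_polar_residuals` and `from_polar_residuals` are hypothetical, and treating the frame-to-frame displacement as the "residual" is an assumption: the abstract does not state how the paper defines it.

```python
import math


def to_polar_residuals(points):
    """Convert consecutive 2D positions into (magnitude, angle) step pairs.

    Assumption: a "residual" here is the displacement between two
    consecutive frames; the paper's definition may differ.
    """
    steps = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = x1 - x0, y1 - y0
        magnitude = math.hypot(dx, dy)  # step length
        angle = math.atan2(dy, dx)      # heading in radians
        steps.append((magnitude, angle))
    return steps


def from_polar_residuals(start, steps):
    """Rebuild positions from a start point and (magnitude, angle) steps."""
    x, y = start
    points = [(x, y)]
    for magnitude, angle in steps:
        x += magnitude * math.cos(angle)
        y += magnitude * math.sin(angle)
        points.append((x, y))
    return points
```

Because magnitude and angle are stored separately, a model could in principle treat speed changes and heading changes as distinct signals, which is the intuition the abstract describes.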

Pentest-R1: Towards Autonomous Penetration Testing Reasoning Optimized via Two-Stage Reinforcement Learning

Aug 10, 2025

Abstract: Automating penetration testing is crucial for enhancing cybersecurity, yet current Large Language Models (LLMs) face significant limitations in this domain, including poor error handling, inefficient reasoning, and an inability to perform complex end-to-end tasks autonomously. To address these challenges, we introduce Pentest-R1, a novel framework designed to optimize LLM reasoning capabilities for this task through a two-stage reinforcement learning pipeline. We first construct a dataset of over 500 real-world, multi-step walkthroughs, which Pentest-R1 leverages for offline reinforcement learning (RL) to instill foundational attack logic. Subsequently, the LLM is fine-tuned via online RL in an interactive Capture The Flag (CTF) environment, where it learns directly from environmental feedback to develop robust error self-correction and adaptive strategies. Our extensive experiments on the Cybench and AutoPenBench benchmarks demonstrate the framework's effectiveness. On AutoPenBench, Pentest-R1 achieves a 24.2% success rate, surpassing most state-of-the-art models and ranking second only to Gemini 2.5 Flash. On Cybench, it attains a 15.0% success rate in unguided tasks, establishing a new state-of-the-art for open-source LLMs and matching the performance of top proprietary models. Ablation studies confirm that the synergy of both training stages is critical to its success.

Intention Enhanced Diffusion Model for Multimodal Pedestrian Trajectory Prediction

Aug 06, 2025

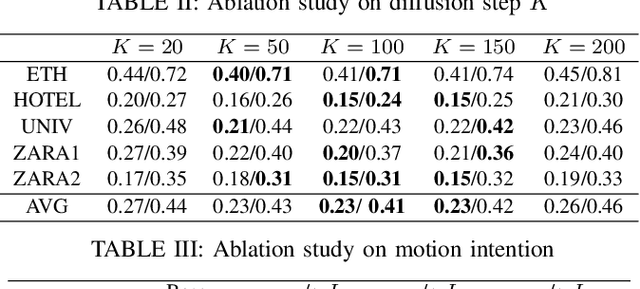

Abstract: Predicting pedestrian motion trajectories is critical for path planning and motion control of autonomous vehicles. However, accurately forecasting crowd trajectories remains a challenging task due to the inherently multimodal and uncertain nature of human motion. Recent diffusion-based models have shown promising results in capturing the stochasticity of pedestrian behavior for trajectory prediction. However, few diffusion-based approaches explicitly incorporate the underlying motion intentions of pedestrians, which can limit the interpretability and precision of prediction models. In this work, we propose a diffusion-based multimodal trajectory prediction model that incorporates pedestrians' motion intentions into the prediction framework. The motion intentions are decomposed into lateral and longitudinal components, and a pedestrian intention recognition module is introduced to enable the model to effectively capture these intentions. Furthermore, we adopt an efficient guidance mechanism that facilitates the generation of interpretable trajectories. The proposed framework is evaluated on two widely used human trajectory prediction benchmarks, ETH and UCY, on which it is compared against state-of-the-art methods. The experimental results demonstrate that our method achieves competitive performance.

Observability-Aware Active Calibration of Multi-Sensor Extrinsics for Ground Robots via Online Trajectory Optimization

Jun 16, 2025

Abstract: Accurate calibration of sensor extrinsic parameters (i.e., relative poses) for ground robotic systems is crucial for ensuring spatial alignment and achieving high-performance perception. However, existing calibration methods typically require complex and often human-operated processes to collect data. Moreover, most frameworks neglect acoustic sensors, thereby limiting the associated systems' auditory perception capabilities. To alleviate these issues, we propose an observability-aware active calibration method for ground robots with multimodal sensors, including a microphone array, a LiDAR (exteroceptive sensors), and wheel encoders (proprioceptive sensors). Unlike traditional approaches, our method enables active trajectory optimization for online data collection and calibration, contributing to the development of more intelligent robotic systems. Specifically, we leverage the Fisher information matrix (FIM) to quantify parameter observability and adopt its minimum eigenvalue as an optimization metric for trajectory generation via B-spline curves. By planning and replanning the robot trajectory online, the method enhances the observability of multi-sensor extrinsic parameters. The effectiveness and advantages of our method have been demonstrated through numerical simulations and real-world experiments. For the benefit of the community, we have also open-sourced our code and data at https://github.com/AISLAB-sustech/Multisensor-Calibration.
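The role of the minimum FIM eigenvalue as an observability score can be illustrated with a toy two-parameter case. The sketch below assumes scalar measurements with independent Gaussian noise of standard deviation `sigma`, so the FIM is the sum of outer products of the measurement gradients divided by `sigma**2`. All function names are hypothetical, and the paper's actual problem involves many more parameters and sensor models than this example.

```python
import math


def fisher_information_2d(jacobian_rows, sigma):
    """FIM for scalar measurements with i.i.d. Gaussian noise (std sigma).

    Each row is the gradient of one measurement w.r.t. two parameters.
    Returns (a, b, c) describing the symmetric matrix [[a, b], [b, c]].
    """
    a = b = c = 0.0
    for j0, j1 in jacobian_rows:
        a += j0 * j0
        b += j0 * j1
        c += j1 * j1
    scale = 1.0 / (sigma * sigma)
    return a * scale, b * scale, c * scale


def min_eigenvalue_2x2(a, b, c):
    """Smallest eigenvalue of the symmetric matrix [[a, b], [b, c]]."""
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)
    return mean - radius


def observability_score(jacobian_rows, sigma=1.0):
    """Toy observability metric: the minimum eigenvalue of the FIM."""
    return min_eigenvalue_2x2(*fisher_information_2d(jacobian_rows, sigma))
```

A score of zero means some direction in parameter space is not informed by the measurements at all, for example when every measurement is sensitive only to the first parameter. A trajectory planner that maximizes this score favours motions that excite all parameters.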

TGRPO: Fine-tuning Vision-Language-Action Model via Trajectory-wise Group Relative Policy Optimization

Jun 11, 2025

Abstract: Recent advances in Vision-Language-Action (VLA) models have demonstrated strong generalization capabilities across diverse scenes, tasks, and robotic platforms when pretrained on large-scale datasets. However, these models still require task-specific fine-tuning in novel environments, a process that relies almost exclusively on supervised fine-tuning (SFT) using static trajectory datasets. Such approaches neither allow the robot to interact with the environment nor leverage feedback from live execution. Moreover, their success is critically dependent on the size and quality of the collected trajectories. Reinforcement learning (RL) offers a promising alternative by enabling closed-loop interaction and aligning learned policies directly with task objectives. In this work, we draw inspiration from the ideas of GRPO and propose the Trajectory-wise Group Relative Policy Optimization (TGRPO) method. By fusing step-level and trajectory-level advantage signals, this method improves GRPO's group-level advantage estimation, thereby making the algorithm more suitable for online reinforcement learning training of VLA models. Experimental results on ten manipulation tasks from the libero-object benchmark demonstrate that TGRPO consistently outperforms various baseline methods and generates more robust and efficient policies across multiple tested scenarios. Our source code is available at: https://github.com/hahans/TGRPO
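One way to picture the fusion of step-level and trajectory-level advantage signals is sketched below. This is not TGRPO's actual formula, which the abstract does not give. It assumes a group of equal-length trajectories, normalizes rewards across the group in the GRPO style (subtract the group mean, divide by the group standard deviation), and combines the two signals with hypothetical weights `w_step` and `w_traj`.

```python
from statistics import mean, pstdev


def group_normalize(values, eps=1e-8):
    """Group-relative normalization: (value - group mean) / group std."""
    mu = mean(values)
    sd = pstdev(values)
    return [(v - mu) / (sd + eps) for v in values]


def fused_advantages(step_rewards, w_step=0.5, w_traj=0.5):
    """Blend step-level and trajectory-level group-relative advantages.

    step_rewards: one list of per-step rewards per trajectory in the group.
    Assumptions: all trajectories have equal length, and the fusion is a
    simple weighted sum (the weights are illustrative, not from the paper).
    """
    # Trajectory level: compare total returns across the group.
    traj_adv = group_normalize([sum(rewards) for rewards in step_rewards])
    # Step level: compare the reward at each time step across the group.
    horizon = len(step_rewards[0])
    step_adv = [
        group_normalize([rewards[t] for rewards in step_rewards])
        for t in range(horizon)
    ]
    return [
        [w_step * step_adv[t][i] + w_traj * traj_adv[i] for t in range(horizon)]
        for i in range(len(step_rewards))
    ]
```

With two trajectories whose rewards are `[1.0, 0.0]` and `[0.0, 0.0]`, the first trajectory receives positive advantages at both steps: at the second step the step-level term is zero (both rewards are equal), so only the trajectory-level term contributes.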

Listen to Extract: Onset-Prompted Target Speaker Extraction

May 08, 2025

Abstract: We propose *listen to extract* (LExt), a highly effective yet extremely simple algorithm for monaural target speaker extraction (TSE). Given an enrollment utterance of a target speaker, LExt aims at extracting the target speaker from the speaker's mixed speech with other speakers. For each mixture, LExt concatenates an enrollment utterance of the target speaker to the mixture signal at the waveform level, and trains deep neural networks (DNN) to extract the target speech based on the concatenated mixture signal. The rationale is that, this way, an artificial speech onset is created for the target speaker, and it could prompt the DNN with (a) which speaker is the target to extract, and (b) spectral-temporal patterns of the target speaker that could help extraction. This simple approach produces strong TSE performance on multiple public TSE datasets including WSJ0-2mix, WHAM! and WHAMR!.
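The waveform-level concatenation described above can be sketched in a few lines. The sketch assumes waveforms are plain sequences of samples at the same sampling rate, that the enrollment utterance is placed before the mixture (which matches the "artificial onset" rationale but is not spelled out in the abstract), and that the part of the model output aligned with the mixture is kept afterwards. Both function names are hypothetical.

```python
def build_lext_input(enrollment, mixture):
    """Concatenate enrollment and mixture samples into one waveform.

    Assumption: the enrollment comes first, so the target speaker's
    voice is the first speech the network hears.
    """
    return list(enrollment) + list(mixture)


def crop_to_mixture(model_output, enrollment_len):
    """Keep only the output samples that align with the original mixture.

    Assumption: the network's output has the same length as its input.
    """
    return list(model_output[enrollment_len:])
```

For example, with a three-sample enrollment and a four-sample mixture, the network input has seven samples, and `crop_to_mixture(output, 3)` returns the last four.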

Calibration of Multiple Asynchronous Microphone Arrays using Hybrid TDOA

Feb 10, 2025

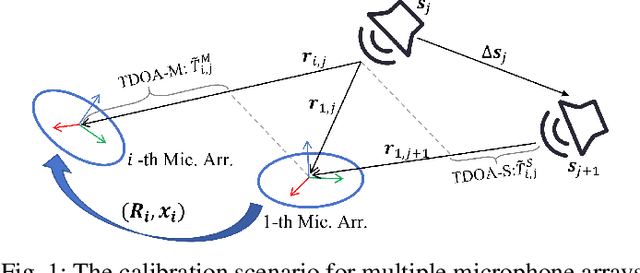

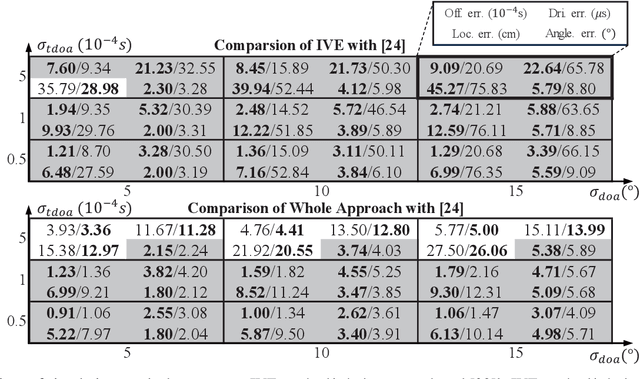

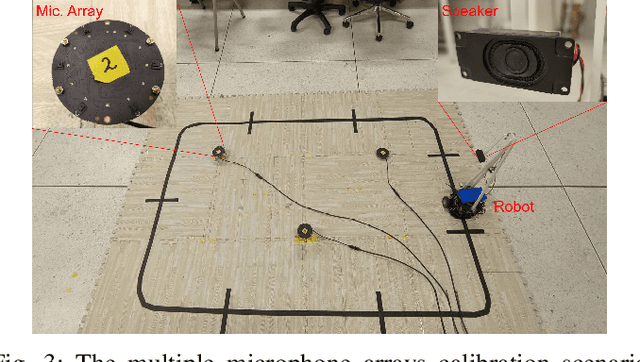

Abstract: Accurate calibration of acoustic sensing systems made of multiple asynchronous microphone arrays is essential for satisfactory performance in sound source localization and tracking. State-of-the-art calibration methods for this type of system rely on the time difference of arrival and direction of arrival measurements among the microphone arrays (denoted as TDOA-M and DOA, respectively). In this paper, to enhance calibration accuracy, we propose to incorporate the time difference of arrival measurements between adjacent sound events (TDOA-S) with respect to the microphone arrays. More specifically, we propose a two-stage calibration approach, including an initial value estimation (IVE) procedure and the final joint optimization step. The IVE stage first initializes all parameters except for microphone array orientations, using hybrid TDOA (i.e., TDOA-M and TDOA-S), odometer data from a moving robot carrying a speaker, and DOA. Subsequently, microphone orientations are estimated through the iterative closest point method. The final joint optimization step estimates multiple microphone array locations, orientations, time offsets, clock drift rates, and sound source locations simultaneously. Both simulation and experiment results show that for scenarios with low or moderate TDOA noise levels, our approach outperforms existing methods in terms of accuracy. All code and data are available at https://github.com/AISLABsustech/Hybrid-TDOA-Multi-Calib.

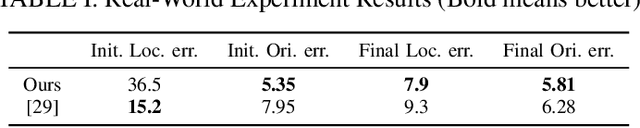

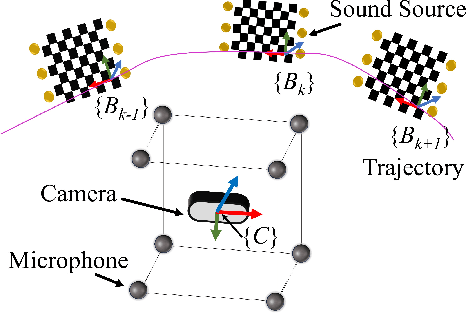

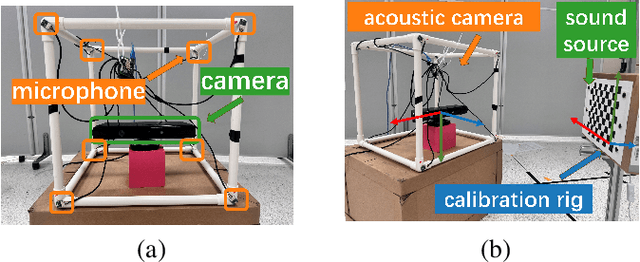

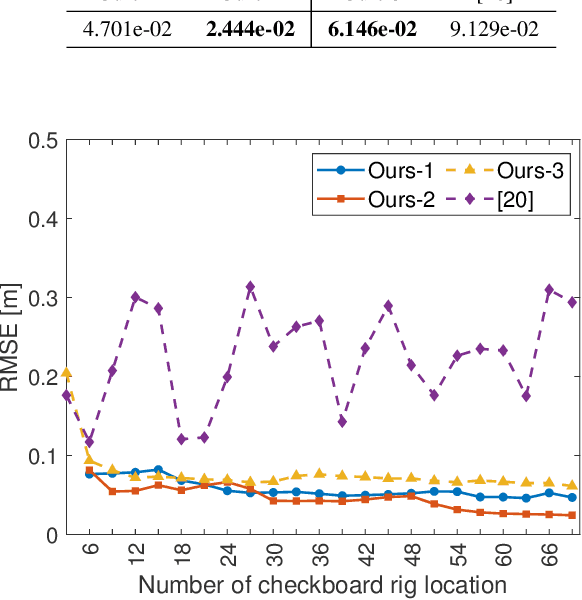

Improved Extrinsic Calibration of Acoustic Cameras via Batch Optimization

Feb 10, 2025

Abstract: Acoustic cameras have found many applications in practice. Accurate and reliable extrinsic calibration of the microphone array and visual sensors within acoustic cameras is crucial for fusing visual and auditory measurements. Existing calibration methods either require prior knowledge of the microphone array geometry or rely on grid search, which suffers from slow iteration speed or poor convergence. To overcome these limitations, in this paper, we propose an automatic calibration technique using a calibration board with both visual and acoustic markers to identify each microphone position in the camera frame. We formulate the extrinsic calibration problem (between microphones and the visual sensor) as a nonlinear least squares problem and employ a batch optimization strategy to solve it. Extensive numerical simulations and real-world experiments show that the proposed method improves both the accuracy and robustness of extrinsic parameter calibration for acoustic cameras, in comparison to existing methods. To benefit the community, we open-source all the code and data at https://github.com/AISLAB-sustech/AcousticCamera.

Cogito, ergo sum: A Neurobiologically-Inspired Cognition-Memory-Growth System for Code Generation

Jan 30, 2025

Abstract: Large language model-based Multi-Agent Systems (MAS) have demonstrated promising performance for enhancing the efficiency and accuracy of code generation tasks. However, most existing methods follow a conventional sequence of planning, coding, and debugging, which contradicts the growth-driven nature of the human learning process. Additionally, the frequent information interaction between multiple agents inevitably involves high computational costs. In this paper, we propose Cogito, a neurobiologically inspired multi-agent framework to enhance the problem-solving capabilities in code generation tasks with lower cost. Specifically, Cogito adopts a reverse sequence: it first undergoes debugging, then coding, and finally planning. This approach mimics human learning and development, where knowledge is acquired progressively. Accordingly, a hippocampus-like memory module with different functions is designed to work with the pipeline to provide quick retrieval in similar tasks. Through this growth-based learning model, Cogito accumulates knowledge and cognitive skills at each stage, ultimately forming a Super Role, an all-capable agent, to perform the code generation task. Extensive experiments against representative baselines demonstrate the superior performance and efficiency of Cogito. The code is publicly available at https://anonymous.4open.science/r/Cogito-0083.

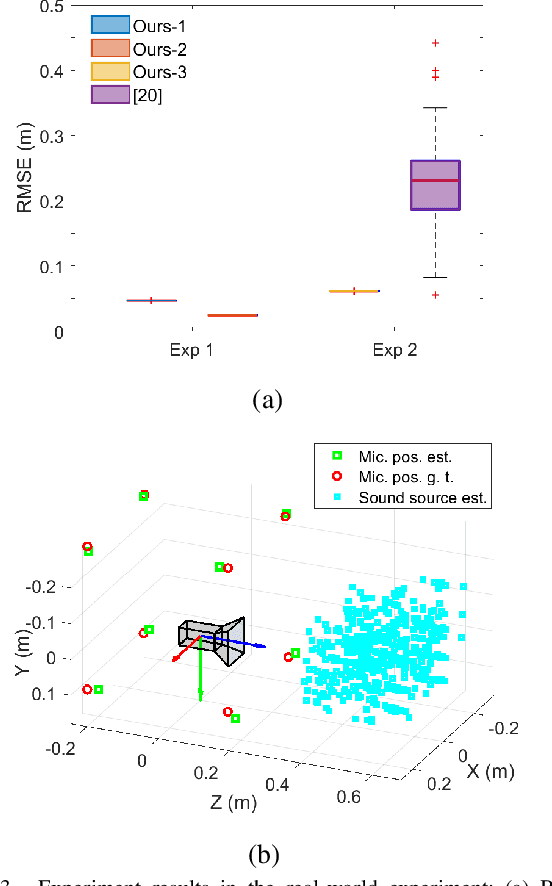

SLAM-based Joint Calibration of Multiple Asynchronous Microphone Arrays and Sound Source Localization

May 30, 2024

Abstract: Robot audition systems with multiple microphone arrays have many applications in practice. However, accurate calibration of multiple microphone arrays remains challenging because there are many unknown parameters to be identified, including the relative transforms (i.e., orientation, translation) and asynchronous factors (i.e., initial time offset and sampling clock difference) between microphone arrays. To tackle these challenges, in this paper, we adopt batch simultaneous localization and mapping (SLAM) for joint calibration of multiple asynchronous microphone arrays and sound source localization. Using the Fisher information matrix (FIM) approach, we first conduct the observability analysis (i.e., parameter identifiability) of the above-mentioned calibration problem and establish necessary/sufficient conditions under which the FIM and the Jacobian matrix have full column rank, which implies the identifiability of the unknown parameters. We also discover several scenarios where the unknown parameters are not uniquely identifiable. Subsequently, we propose an effective framework to initialize the unknown parameters, which is used as the initial guess in batch SLAM for the calibration of multiple microphone arrays, aiming to further enhance optimization accuracy and convergence. Extensive numerical simulations and real experiments have been conducted to verify the performance of the proposed method. The experimental results show that the proposed pipeline achieves higher accuracy with fast convergence in comparison to methods that use the noise-corrupted ground truth of the unknown parameters as the initial guess in the optimization and other existing frameworks.
