Fanyi Wang

FastMap: Fast Queries Initialization Based Vectorized HD Map Reconstruction Framework

Mar 07, 2025

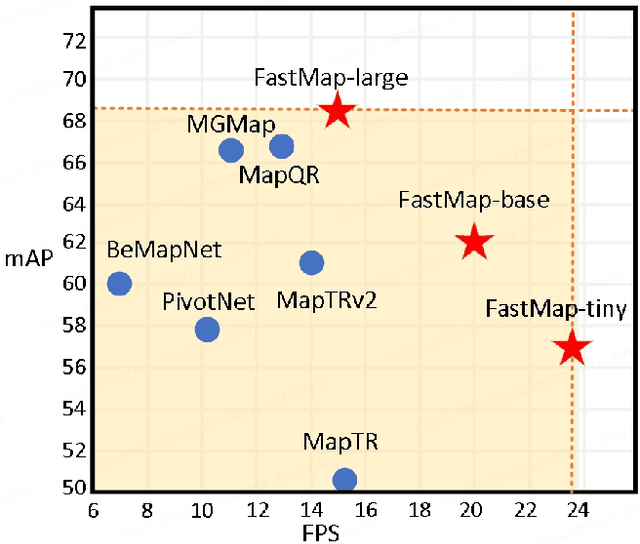

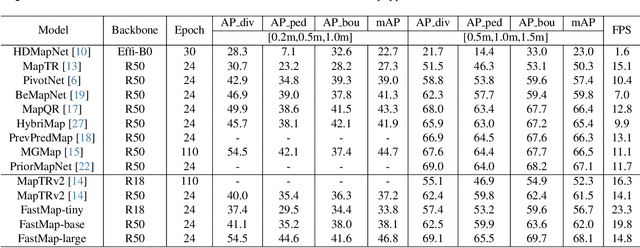

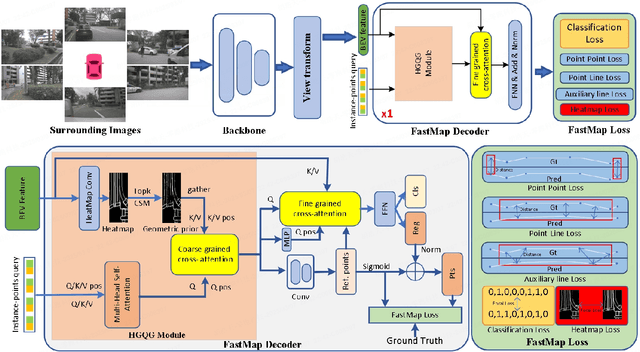

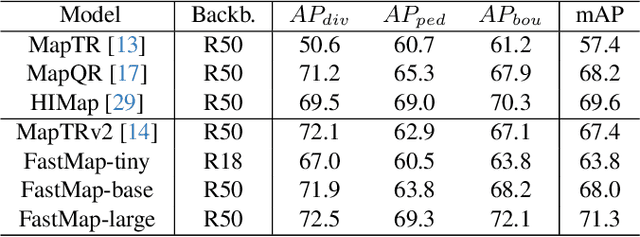

Abstract: Reconstruction of high-definition maps is a crucial task in perceiving the autonomous driving environment, as its accuracy directly impacts the reliability of prediction and planning capabilities in downstream modules. Current vectorized map reconstruction methods based on the DETR framework are limited by redundancy in the decoder structure: they need to stack six decoder layers to maintain performance, which significantly hampers computational efficiency. To tackle this issue, we introduce FastMap, an innovative framework designed to reduce decoder redundancy in existing approaches. FastMap optimizes the decoder architecture by employing a single-layer, two-stage transformer that achieves multilevel representation capabilities. Our framework eliminates the conventional practice of randomly initializing queries and instead incorporates a heatmap-guided query generation module during the decoding phase, which effectively maps image features into structured query vectors using learnable positional encoding. Additionally, we propose a geometry-constrained point-to-line loss mechanism for FastMap, which adeptly addresses the challenge of distinguishing highly homogeneous features that often arise in traditional point-to-point loss computations. Extensive experiments demonstrate that FastMap achieves state-of-the-art performance on both the nuScenes and Argoverse2 datasets, with its decoder operating 3.2$\times$ faster than the baseline. Code and more demos are available at https://github.com/hht1996ok/FastMap.
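As a rough illustration of the point-to-line idea mentioned in the abstract, the sketch below measures how far each predicted point lies from the nearest segment of a ground-truth polyline, rather than from one specific matched point. This is only one plausible reading: the function names, the 2D tuple representation, and the mean reduction are assumptions made for illustration, not the formulation used in FastMap.

```python
import math

def point_to_segment_distance(p, a, b):
    """Euclidean distance from 2D point p to the segment with endpoints a and b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: both endpoints coincide.
        return math.hypot(px - ax, py - ay)
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    cx, cy = ax + t * dx, ay + t * dy
    return math.hypot(px - cx, py - cy)

def point_to_line_loss(pred_points, gt_polyline):
    """Mean distance from each predicted point to its nearest ground-truth segment
    (hypothetical reduction, chosen only for this sketch)."""
    segments = list(zip(gt_polyline[:-1], gt_polyline[1:]))
    total = 0.0
    for p in pred_points:
        total += min(point_to_segment_distance(p, a, b) for a, b in segments)
    return total / len(pred_points)
```

Under this reading, a predicted point that slides along the ground-truth line incurs no penalty, whereas a point-to-point loss would penalize it for missing its matched vertex.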

MagicStyle: Portrait Stylization Based on Reference Image

Sep 12, 2024

Abstract: The development of diffusion models has significantly advanced research on image stylization, particularly the task of stylizing a content image based on a given style image, which has attracted many scholars. The main challenge in this reference image stylization task lies in maintaining the details of the content image while incorporating the color and texture features of the style image. This challenge becomes even more pronounced when the content image is a portrait, which has complex textural details. To address this challenge, we propose a diffusion model-based reference image stylization method specifically for portraits, called MagicStyle. MagicStyle consists of two phases: Content and Style DDIM Inversion (CSDI) and Feature Fusion Forward (FFF). The CSDI phase involves a reverse denoising process, where DDIM Inversion is performed separately on the content image and the style image, storing the self-attention query, key and value features of both images during the inversion process. The FFF phase executes forward denoising, harmoniously integrating the texture and color information from the pre-stored feature queries, keys and values into the diffusion generation process based on our well-designed Feature Fusion Attention (FFA). We conducted comprehensive comparative and ablation experiments to validate the effectiveness of our proposed MagicStyle and FFA.

MagicID: Flexible ID Fidelity Generation System

Aug 20, 2024

Abstract: Portrait Fidelity Generation is a prominent research area in generative models, with a primary focus on enhancing both controllability and fidelity. Current methods face challenges in generating high-fidelity portrait results when faces occupy a small portion of the image at low resolution, especially in multi-person group photo settings. To tackle these issues, we propose a systematic solution called MagicID, based on a self-constructed million-level multi-modal dataset named IDZoom. MagicID consists of a Multi-Mode Fusion training strategy (MMF) and a DDIM Inversion based ID Restoration inference framework (DIIR). During training, MMF iteratively uses the skeleton and landmark modalities from IDZoom as conditional guidance. By introducing Clone Face Tuning in the training stage and Mask Guided Multi-ID Cross Attention (MGMICA) in the inference stage, explicit constraints on face positional features are achieved for multi-ID group photo generation. DIIR aims to address the issue of artifacts. DDIM Inversion is used in conjunction with face landmarks and global and local face features to achieve face restoration while keeping the background unchanged. Additionally, DIIR is plug-and-play and can be applied to any diffusion-based portrait generation method. To validate the effectiveness of MagicID, we conducted extensive comparative and ablation experiments. The experimental results demonstrate that MagicID has significant advantages in both subjective and objective metrics, and achieves controllable generation in multi-person scenarios.

RepControlNet: ControlNet Reparameterization

Aug 17, 2024

Abstract: With the wide application of diffusion models, the high cost of inference resources has become an important bottleneck for their universal application. Controllable generation, such as ControlNet, is one of the key research directions for diffusion models, which makes research on inference acceleration and model compression all the more important. To address this problem, this paper proposes a modal reparameterization method, RepControlNet, which realizes controllable generation for diffusion models without increasing computation. During training, RepControlNet uses an adapter to modulate the modal information into the feature space, copies the CNN and MLP learnable layers of the original diffusion model as the modal network, and initializes these weights based on the original weights and coefficients. Training optimizes only the parameters of the modal network. During inference, the weights of the modal network are reparameterized together with those of the original diffusion model, which yields results comparable to or even better than methods such as ControlNet that use additional parameters and computation, without increasing the number of parameters. We have carried out extensive experiments on both SD1.5 and SDXL, and the experimental results show the effectiveness and efficiency of the proposed RepControlNet.
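The general principle that makes weight reparameterization free at inference time is linearity: two linear layers applied to the same input and summed are equivalent to a single layer whose weights and biases are the sums. The sketch below demonstrates only that generic fact with plain Python lists. The helper names are hypothetical, and the way RepControlNet actually combines original and modal weights (including its coefficients) is not specified in the abstract and is not reproduced here.

```python
def linear(weight, bias, x):
    """Apply a dense layer y = W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

def merge_branches(w_a, b_a, w_b, b_b):
    """Fold two parallel linear branches into one layer by summing parameters."""
    merged_w = [[wa + wb for wa, wb in zip(row_a, row_b)]
                for row_a, row_b in zip(w_a, w_b)]
    merged_b = [ba + bb for ba, bb in zip(b_a, b_b)]
    return merged_w, merged_b

# Two-branch forward pass versus the merged single layer (illustrative values).
w_orig, b_orig = [[1, 2], [3, 4]], [0, 1]
w_modal, b_modal = [[1, 0], [0, 1]], [1, 1]
x = [2, 3]

two_branch = [a + b for a, b in zip(linear(w_orig, b_orig, x),
                                    linear(w_modal, b_modal, x))]
merged_w, merged_b = merge_branches(w_orig, b_orig, w_modal, b_modal)
single_layer = linear(merged_w, merged_b, x)
```

Because `single_layer` matches `two_branch`, the extra branch can be folded away after training, so the merged model has the same parameter count and cost as the original layer.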

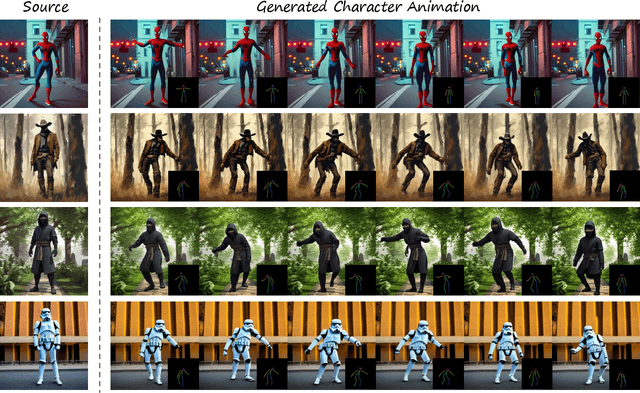

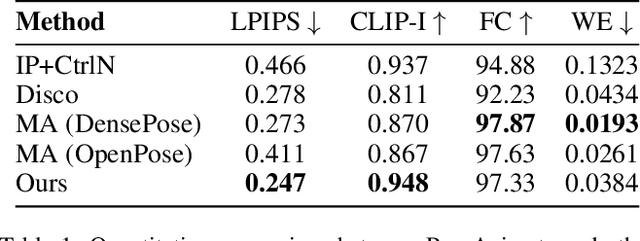

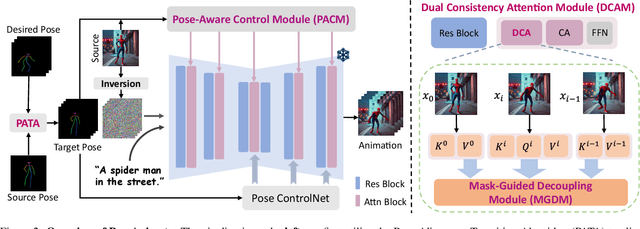

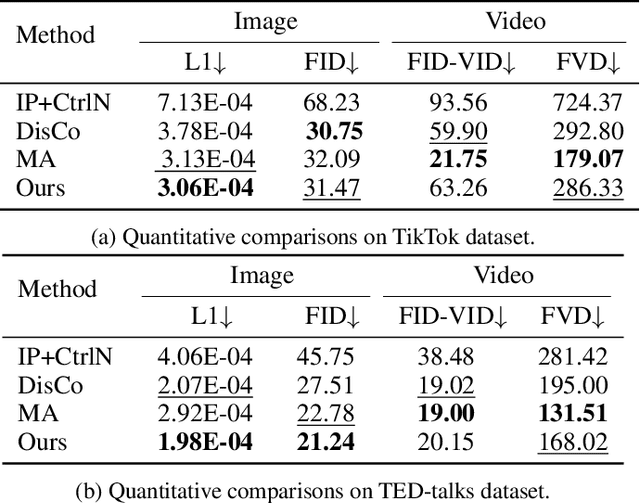

PoseAnimate: Zero-shot high fidelity pose controllable character animation

Apr 30, 2024

Abstract: Image-to-video (I2V) generation aims to create a video sequence from a single image, which requires high temporal coherence and visual fidelity with the source image. However, existing approaches suffer from character appearance inconsistency and poor preservation of fine details. Moreover, they require a large amount of video data for training, which can be computationally demanding. To address these limitations, we propose PoseAnimate, a novel zero-shot I2V framework for character animation. PoseAnimate contains three key components: 1) Pose-Aware Control Module (PACM) incorporates diverse pose signals into conditional embeddings, to preserve character-independent content and maintain precise alignment of actions. 2) Dual Consistency Attention Module (DCAM) enhances temporal consistency, and retains character identity and intricate background details. 3) Mask-Guided Decoupling Module (MGDM) refines distinct feature perception, improving animation fidelity by decoupling the character and background. We also propose a Pose Alignment Transition Algorithm (PATA) to ensure smooth action transition. Extensive experimental results demonstrate that our approach outperforms the state-of-the-art training-based methods in terms of character consistency and detail fidelity. Moreover, it maintains a high level of temporal coherence throughout the generated animations.

LoopAnimate: Loopable Salient Object Animation

Apr 14, 2024

Abstract: Research on diffusion model-based video generation has advanced rapidly. However, limitations in object fidelity and generation length hinder its practical applications. Additionally, specific domains like animated wallpapers require seamless looping, where the first and last frames of the video match seamlessly. To address these challenges, this paper proposes LoopAnimate, a novel method for generating videos with consistent start and end frames. To enhance object fidelity, we introduce a framework that decouples multi-level image appearance and textual semantic information. Building upon an image-to-image diffusion model, our approach incorporates both pixel-level and feature-level information from the input image, injecting image appearance and textual semantic embeddings at different positions of the diffusion model. Existing UNet-based video generation models require entire videos as input during training to encode temporal and positional information at once. However, due to limitations in GPU memory, the number of frames is typically restricted to 16. To address this, this paper proposes a three-stage training strategy with progressively increasing frame numbers and reducing fine-tuning modules. Additionally, we introduce the Temporal Enhanced Motion Module (TEMM) to extend the capacity for encoding temporal and positional information up to 36 frames. The proposed LoopAnimate extends, for the first time, the single-pass generation length of UNet-based video generation models to 35 frames while maintaining high-quality video generation. Experiments demonstrate that LoopAnimate achieves state-of-the-art performance in both objective metrics, such as fidelity and temporal consistency, and subjective evaluation results.

ADMap: Anti-disturbance framework for reconstructing online vectorized HD map

Jan 24, 2024

Abstract: In the field of autonomous driving, online high-definition (HD) map reconstruction is crucial for planning tasks. Recent research has developed several high-performance HD map reconstruction models to meet this necessity. However, the point sequences within the instance vectors may be jittery or jagged due to prediction bias, which can impact subsequent tasks. Therefore, this paper proposes the Anti-disturbance Map reconstruction framework (ADMap). To mitigate point-order jitter, the framework consists of three modules: Multi-Scale Perception Neck, Instance Interactive Attention (IIA), and Vector Direction Difference Loss (VDDL). By exploring the point-order relationships between and within instances in a cascading manner, the model can monitor the point-order prediction process more effectively. ADMap achieves state-of-the-art performance on the nuScenes and Argoverse2 datasets. Extensive results demonstrate its ability to produce stable and reliable map elements in complex and changing driving scenarios. Code and more demos are available at https://github.com/hht1996ok/ADMap.

Lightweight high-resolution Subject Matting in the Real World

Dec 12, 2023

Abstract: Existing salient object detection (SOD) methods struggle to deliver fast inference and accurate results simultaneously in high-resolution scenes. They are limited by the quality of public datasets and by the lack of efficient network modules for high-resolution images. To alleviate these issues, we propose to construct a salient object matting dataset, HRSOM, and a lightweight network, PSUNet. To support efficient inference on mobile deployment frameworks, we design a symmetric pixel shuffle module and a lightweight module, TRSU. Compared to 13 SOD methods, the proposed PSUNet has the best objective performance on the high-resolution benchmark dataset. Its objective evaluation results are superior to those of U$^2$Net, which has 10 times as many parameters as our network. On the Snapdragon 8 Gen 2 Mobile Platform, inference on a single 640$\times$640 image takes only 113 ms. In the subjective assessment, its results are better than the industry benchmark, iOS 16 (Lift subject from background).

BARET : Balanced Attention based Real image Editing driven by Target-text Inversion

Dec 09, 2023

Abstract: Image editing approaches with diffusion models have been rapidly developed, yet their applicability is subject to requirements such as specific editing types (e.g., foreground or background object editing, style transfer), multiple conditions (e.g., mask, sketch, caption), and time-consuming fine-tuning of diffusion models. To alleviate these limitations and realize efficient real image editing, we propose a novel editing technique that requires only an input image and target text for various editing types, including non-rigid edits, without fine-tuning the diffusion model. Our method contains three novelties: (I) Target-text Inversion Schedule (TTIS) is designed to fine-tune the input target text embedding to achieve fast image reconstruction without an image caption and to accelerate convergence. (II) Progressive Transition Scheme applies progressive linear interpolation between the target text embedding and its fine-tuned version to generate a transition embedding for maintaining non-rigid editing capability. (III) Balanced Attention Module (BAM) balances the tradeoff between textual description and image semantics. By combining the self-attention map from the reconstruction process and the cross-attention map from the transition process, the guidance of target text embeddings in the diffusion process is optimized. To demonstrate the editing capability, effectiveness and efficiency of the proposed BARET, we have conducted extensive qualitative and quantitative experiments. Moreover, results from a user study and an ablation study further demonstrate its superiority over other methods.
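The progressive linear interpolation in novelty (II) can be pictured with a small sketch. Embeddings are shown as flat lists of floats. The function name, the evenly spaced schedule, and the direction of the blend (starting at the target embedding and ending at the fine-tuned one) are assumptions for illustration, since the abstract does not specify them.

```python
def transition_embedding(target, tuned, step, num_steps):
    """Linearly blend two embeddings.

    step = 0 returns the target text embedding; step = num_steps - 1 returns
    the fine-tuned embedding. The even spacing is an illustrative assumption.
    """
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    if len(target) != len(tuned):
        raise ValueError("embeddings must have the same length")
    alpha = step / (num_steps - 1)
    return [(1.0 - alpha) * t + alpha * f for t, f in zip(target, tuned)]

# Toy 2-dimensional embeddings blended over 5 steps.
target_emb = [0.0, 2.0]
tuned_emb = [1.0, 4.0]
schedule = [transition_embedding(target_emb, tuned_emb, s, 5) for s in range(5)]
```

Each entry of `schedule` sits on the straight line between the two embeddings, so intermediate steps mix the original target semantics with the reconstruction-oriented fine-tuned version.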

A Machine Vision Method for Correction of Eccentric Error: Based on Adaptive Enhancement Algorithm

Sep 01, 2023

Abstract: In surface defect detection for large-aperture aspherical optical elements, it is vital to accurately adjust the optical axis of the element so that it is coaxial with the mechanical spin axis. Therefore, a machine vision method for eccentric error correction is proposed in this paper. The imaging characteristics of the aspherical optical element cause severe defocus blur in the reference crosshair image, which may lead to correction failure, so an Adaptive Enhancement Algorithm (AEA) is proposed to strengthen the crosshair image. AEA consists of the existing Guided Filter Dark Channel Dehazing Algorithm (GFA) and the proposed lightweight Multi-scale Densely Connected Network (MDC-Net). GFA enhances images very well but is time-consuming, whereas MDC-Net is slightly less effective but runs in real time. As AEA will be executed dozens of times during each correction procedure, its real-time performance is very important. Therefore, by setting an empirical threshold on the definition evaluation function SMD2, GFA and MDC-Net are respectively applied to highly and slightly blurred crosshair images so as to ensure the enhancement effect while saving as much time as possible. AEA is reasonably robust in running time: on ten 200$\times$200-pixel Region of Interest (ROI) images with different degrees of blur, GFA and MDC-Net take an average of 0.2721 s and 0.0963 s, respectively. The eccentricity error can be reduced to within 10 μm by our method.
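The threshold-based dispatch described in the abstract can be sketched as follows. SMD2 is written here in its commonly used form, the sum over pixels of the product of the absolute horizontal and vertical neighbor differences, so sharper images score higher. The threshold value, the absence of normalization, and the string labels standing in for the two enhancers are assumptions for illustration, not details from the paper.

```python
def smd2(image):
    """SMD2 sharpness score of a grayscale image given as a list of rows.

    Sums |I(y, x) - I(y, x+1)| * |I(y, x) - I(y+1, x)| over all pixels that
    have both a right and a lower neighbor.
    """
    rows, cols = len(image), len(image[0])
    score = 0
    for y in range(rows - 1):
        for x in range(cols - 1):
            horizontal = abs(image[y][x] - image[y][x + 1])
            vertical = abs(image[y][x] - image[y + 1][x])
            score += horizontal * vertical
    return score

def choose_enhancer(image, threshold):
    """Pick the slower, stronger GFA for highly blurred (low-score) images
    and the faster MDC-Net otherwise. The threshold is a hypothetical value."""
    return "GFA" if smd2(image) < threshold else "MDC-Net"

# Toy 3x3 images: a high-contrast checkerboard and a featureless patch.
sharp = [[0, 255, 0], [255, 0, 255], [0, 255, 0]]
flat = [[100, 100, 100], [100, 100, 100], [100, 100, 100]]
```

With any positive threshold below the checkerboard's score, `choose_enhancer(flat, threshold)` selects GFA and `choose_enhancer(sharp, threshold)` selects MDC-Net, mirroring the idea of spending the expensive algorithm only on badly blurred crosshair images.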
