Fang-Chieh Chou

Reachability Analysis for FollowerStopper: Safety Analysis and Experimental Results

Dec 29, 2021

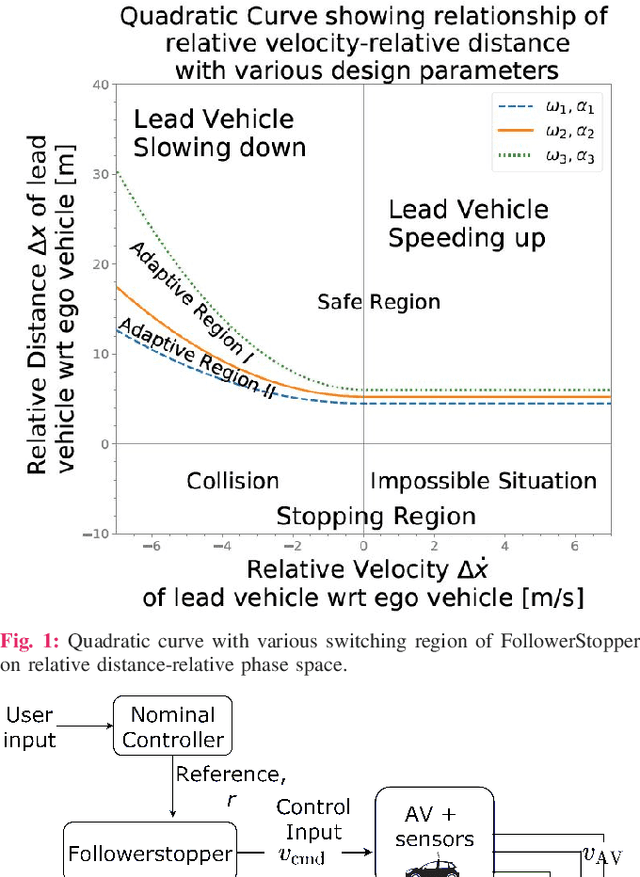

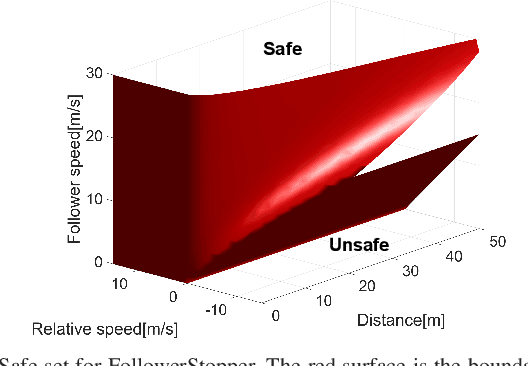

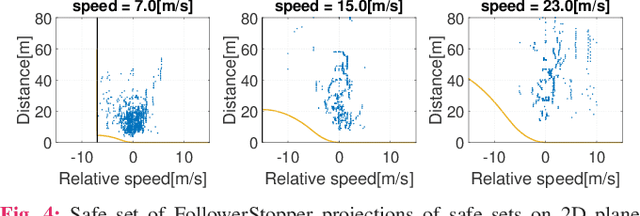

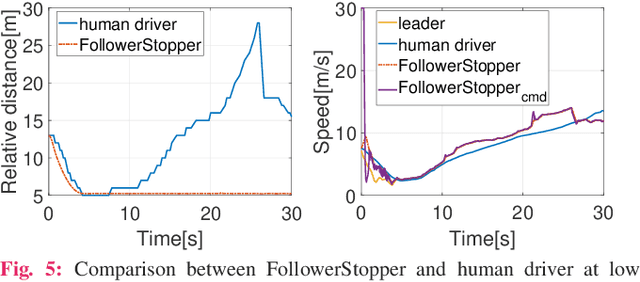

Abstract: Motivated by earlier work and the development of a new algorithm, the FollowerStopper, this article uses reachability analysis to verify the safety of the FollowerStopper algorithm, a controller designed for dampening stop-and-go traffic waves. With more than 1100 miles of driving data collected by our physical platform, we validate our analysis results by comparing them to human driving behaviors. The FollowerStopper controller has been demonstrated to dampen stop-and-go traffic waves at low speed, but previous analysis of its relative safety has been limited to upper and lower bounds of acceleration. To expand upon previous analysis, reachability analysis is used to investigate safety at the speeds at which it was originally tested and also at higher speeds. Two formulations of safety analysis with different criteria are shown: distance-based and time headway-based. The FollowerStopper is considered safe under the distance-based criterion. However, simulation results demonstrate that the FollowerStopper is not representative of human drivers: it follows too closely behind vehicles, specifically at a distance humans would deem unsafe. On the other hand, under the time headway-based safety analysis, the FollowerStopper is no longer considered safe. A modified FollowerStopper is proposed to satisfy the time-based safety criterion. Simulation results of the proposed FollowerStopper show that its response represents human driver behavior better.
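The difference between the two safety criteria named in the abstract can be illustrated with a minimal sketch. The function names and the thresholds (a 5 m minimum gap and a 2 s minimum headway) are assumptions chosen for illustration; they are not taken from the paper, and the sketch does not reproduce its reachability analysis.

```python
def time_headway(gap_m: float, follower_speed_mps: float) -> float:
    """Seconds the follower needs to cover the current gap at its current speed."""
    if follower_speed_mps <= 0.0:
        return float("inf")  # a stopped follower never closes the gap
    return gap_m / follower_speed_mps


def is_safe_distance(gap_m: float, min_gap_m: float = 5.0) -> bool:
    """Distance-based criterion: the gap alone must exceed a fixed minimum (assumed 5 m)."""
    return gap_m >= min_gap_m


def is_safe_headway(gap_m: float, follower_speed_mps: float,
                    min_headway_s: float = 2.0) -> bool:
    """Time headway-based criterion: the gap must grow with speed (assumed 2 s)."""
    return time_headway(gap_m, follower_speed_mps) >= min_headway_s


if __name__ == "__main__":
    # A 20 m gap at 30 m/s passes the distance check but fails the headway check.
    print(is_safe_distance(20.0), is_safe_headway(20.0, 30.0))
```

Under these assumed thresholds, a fixed-distance check accepts a gap that a speed-dependent headway check rejects at highway speed, which is the kind of disagreement between criteria the abstract describes.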

Investigating the Effect of Sensor Modalities in Multi-Sensor Detection-Prediction Models

Jan 09, 2021

Abstract: Detection of surrounding objects and their motion prediction are critical components of a self-driving system. Recently proposed models that jointly address these tasks rely on a number of sensors to achieve state-of-the-art performance. However, this increases system complexity and may result in a brittle model that overfits to any single sensor modality while ignoring others, leading to reduced generalization. We focus on this important problem and analyze the contribution of sensor modalities to model performance. In addition, we investigate the use of sensor dropout to mitigate the above-mentioned issues, leading to a more robust, better-performing model on real-world driving data.
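One common way to realize sensor dropout is to zero out the features of randomly chosen modalities during training, so the model cannot rely on any single sensor. The sketch below assumes that scheme; the abstract does not specify the paper's exact procedure, and the function name, the per-modality feature lists, and the default drop probability are hypothetical.

```python
import random


def apply_sensor_dropout(features, drop_prob=0.2, rng=None):
    """Return a copy of `features` with some modalities zeroed out.

    features: dict mapping a modality name (e.g. "lidar") to a list of floats.
    Each modality is dropped independently with probability `drop_prob`,
    but at least one modality is always kept.
    """
    rng = rng or random.Random()
    names = list(features)
    dropped = {name for name in names if rng.random() < drop_prob}
    if names and len(dropped) == len(names):
        dropped.discard(rng.choice(names))  # never drop every sensor at once
    return {
        name: [0.0] * len(values) if name in dropped else list(values)
        for name, values in features.items()
    }


if __name__ == "__main__":
    sample = {"lidar": [0.3, 0.9], "camera": [0.5], "radar": [0.1, 0.7]}
    print(apply_sensor_dropout(sample, drop_prob=0.5, rng=random.Random(0)))
```

Keeping at least one modality is a design choice of this sketch: it avoids training steps in which the model receives no input signal at all.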

Uncertainty-Aware Vehicle Orientation Estimation for Joint Detection-Prediction Models

Nov 05, 2020

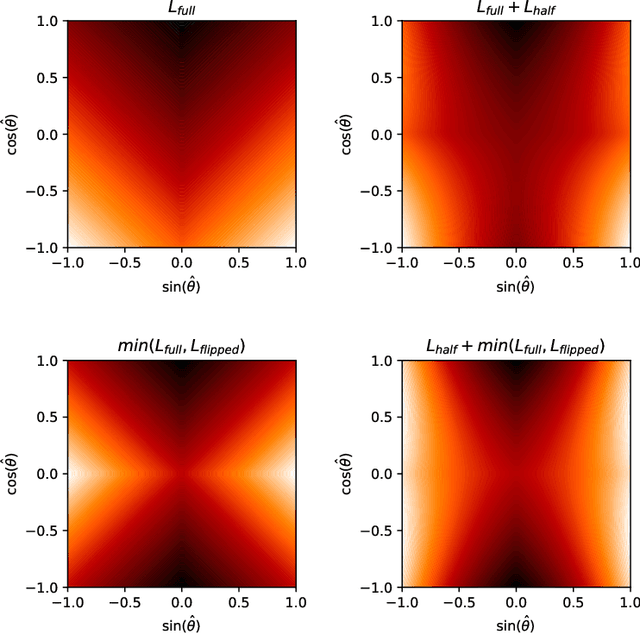

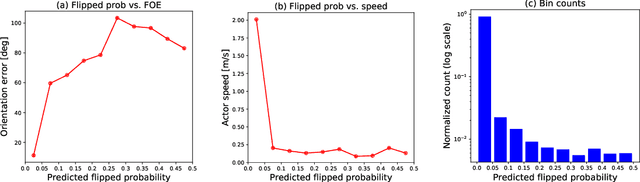

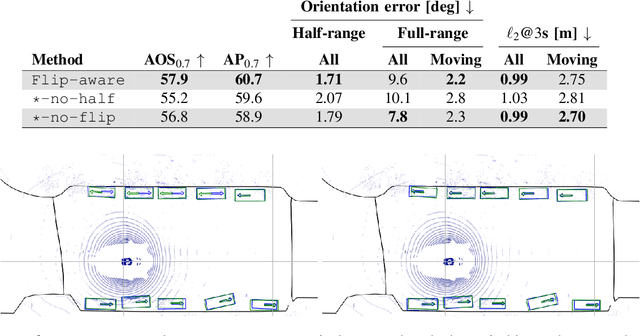

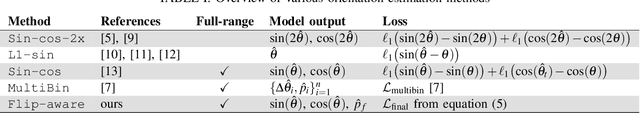

Abstract: Object detection is a critical component of a self-driving system, tasked with inferring the current states of the surrounding traffic actors. While there exist a number of studies on the problem of inferring the position and shape of vehicle actors, understanding actors' orientation remains a challenge for existing state-of-the-art detectors. Orientation is an important property for downstream modules of an autonomous system, particularly relevant for motion prediction of stationary or reversing actors where current approaches struggle. We focus on this task and present a method that extends the existing models that perform joint object detection and motion prediction, allowing us to more accurately infer vehicle orientations. In addition, the approach is able to quantify prediction uncertainty, outputting the probability that the inferred orientation is flipped, which allows for improved motion prediction and safer autonomous operations. Empirical results show the benefits of the approach, obtaining state-of-the-art performance on the open-sourced nuScenes data set.
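A downstream module might consume a flipped-orientation probability roughly as follows. This is a minimal sketch under assumptions: the function names, the 0.5 decision threshold, and the confidence value returned are illustrative and are not described in the abstract.

```python
import math


def wrap_angle(angle_rad: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle_rad + math.pi) % (2.0 * math.pi) - math.pi


def resolve_heading(heading_rad: float, p_flipped: float):
    """Pick the more likely of the two opposite headings.

    Returns (heading, confidence), where confidence is the probability
    assigned to the returned heading.
    """
    if not 0.0 <= p_flipped <= 1.0:
        raise ValueError("p_flipped must be a probability in [0, 1]")
    if p_flipped > 0.5:
        return wrap_angle(heading_rad + math.pi), p_flipped
    return wrap_angle(heading_rad), 1.0 - p_flipped


if __name__ == "__main__":
    print(resolve_heading(0.5, 0.1))
    print(resolve_heading(0.0, 0.9))
```

A planner could treat a confidence near 0.5 as a signal to consider both headings, for example for a stationary vehicle whose direction of travel is ambiguous.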

Multi-View Fusion of Sensor Data for Improved Perception and Prediction in Autonomous Driving

Aug 27, 2020

Abstract: We present an end-to-end method for object detection and trajectory prediction utilizing multi-view representations of LiDAR returns. Our method builds on a state-of-the-art Bird's-Eye View (BEV) network that fuses voxelized features from a sequence of historical LiDAR data as well as a rasterized high-definition map to perform detection and prediction tasks. We extend the BEV network with additional LiDAR Range-View (RV) features that use the raw LiDAR information in its native, non-quantized representation. The RV feature map is projected into BEV and fused with the BEV features computed from LiDAR and the high-definition map. The fused features are then further processed to output the final detections and trajectories, within a single end-to-end trainable network. In addition, using this framework the RV fusion of LiDAR and camera is performed in a straightforward and computationally efficient manner. The proposed approach improves the state-of-the-art on proprietary large-scale real-world data collected by a fleet of self-driving vehicles, as well as on the public nuScenes data set.

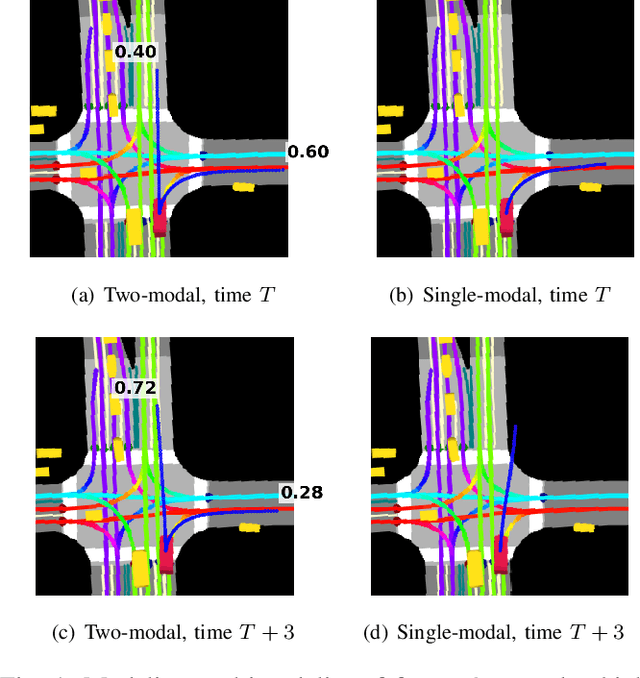

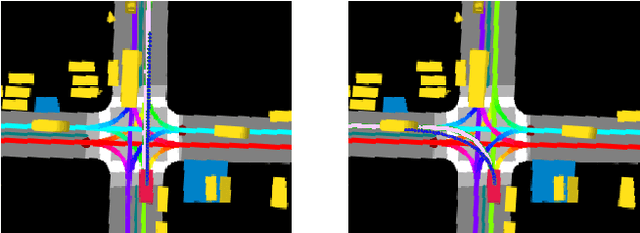

MultiXNet: Multiclass Multistage Multimodal Motion Prediction

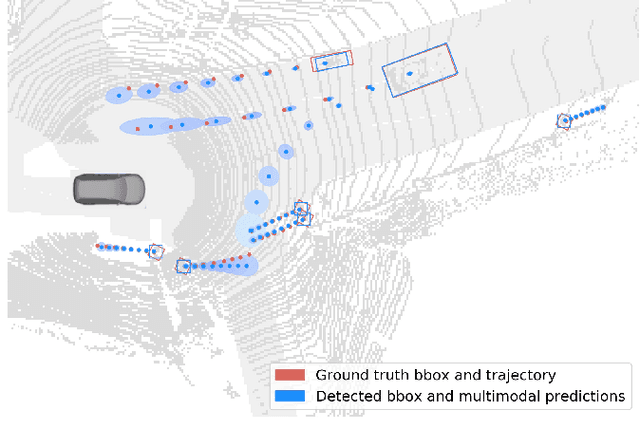

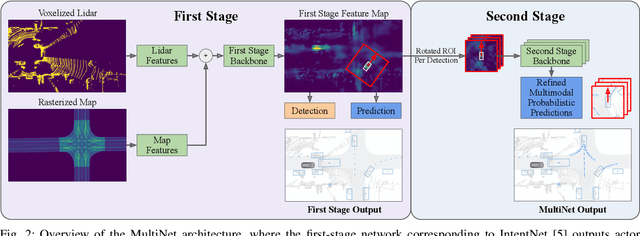

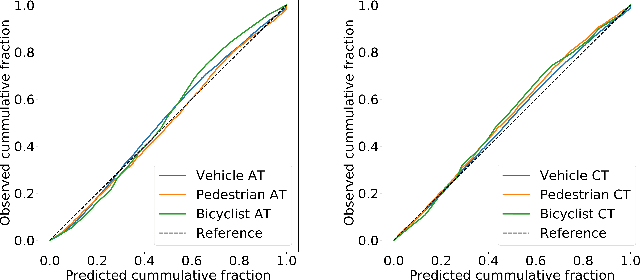

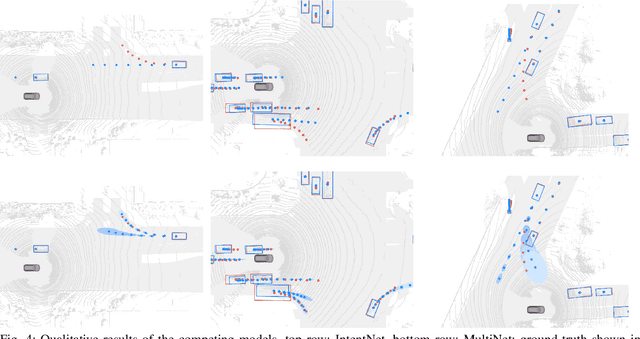

Jun 10, 2020

Abstract: One of the critical pieces of the self-driving puzzle is understanding the surroundings of the self-driving vehicle (SDV) and predicting how these surroundings will change in the near future. To address this task we propose MultiXNet, an end-to-end approach for detection and motion prediction based directly on lidar sensor data. This approach builds on prior work by handling multiple classes of traffic actors, adding a jointly trained second-stage trajectory refinement step, and producing a multimodal probability distribution over future actor motion that includes both multiple discrete traffic behaviors and calibrated continuous uncertainties. The method was evaluated on a large-scale, real-world data set collected by a fleet of SDVs in several cities, with the results indicating that it outperforms existing state-of-the-art approaches.

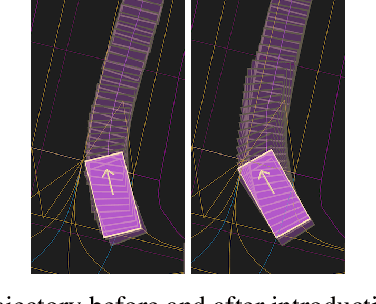

Improving Movement Predictions of Traffic Actors in Bird's-Eye View Models using GANs and Differentiable Trajectory Rasterization

Apr 14, 2020

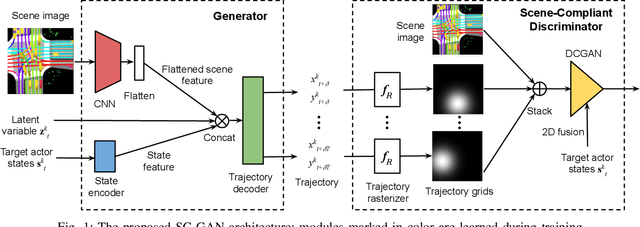

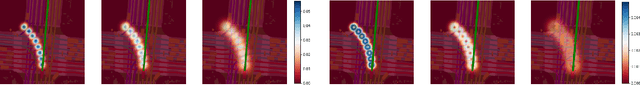

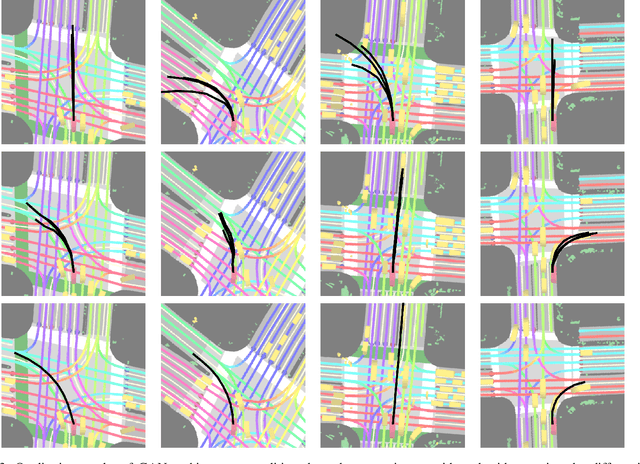

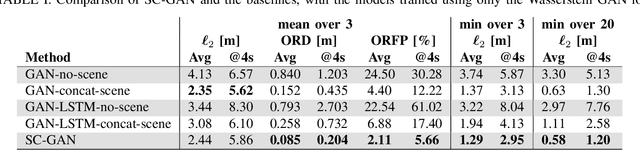

Abstract: One of the most critical pieces of the self-driving puzzle is the task of predicting future movement of surrounding traffic actors, which allows the autonomous vehicle to safely and effectively plan its future route in a complex world. Recently, a number of algorithms have been proposed to address this important problem, spurred by a growing interest of researchers from both industry and academia. Methods based on top-down scene rasterization on one side and Generative Adversarial Networks (GANs) on the other have been shown to be particularly successful, obtaining state-of-the-art accuracies on the task of traffic movement prediction. In this paper we build upon these two directions and propose a raster-based conditional GAN architecture, powered by a novel differentiable rasterizer module at the input of the conditional discriminator that maps generated trajectories into the raster space in a differentiable manner. This simplifies the task for the discriminator, as trajectories that are not scene-compliant are easier to discern, and allows the gradients to flow back, forcing the generator to output better, more realistic trajectories. We evaluated the proposed method on a large-scale, real-world data set, showing that it outperforms state-of-the-art GAN-based baselines.

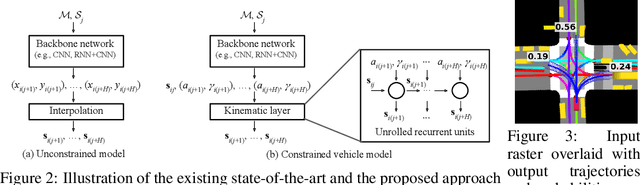

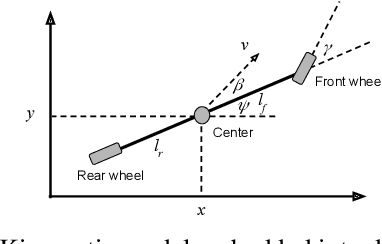

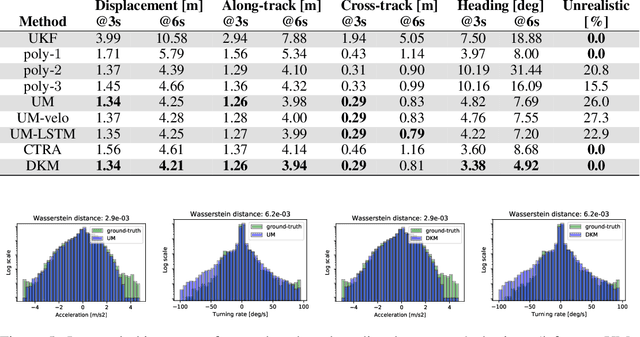

Deep Kinematic Models for Physically Realistic Prediction of Vehicle Trajectories

Aug 01, 2019

Abstract: Self-driving vehicles (SDVs) hold great potential for improving traffic safety and are poised to positively affect the quality of life of millions of people. One of the critical aspects of the autonomous technology is understanding and predicting future movement of vehicles surrounding the SDV. This work presents a deep-learning-based method for physically realistic motion prediction of such traffic actors. Previous work did not explicitly encode physical realism and instead relied on the models to learn the laws of physics directly from the data, potentially resulting in implausible trajectory predictions. To account for this issue we propose a method that seamlessly combines ideas from AI with physically grounded vehicle motion models. In this way we employ the best of both worlds, coupling powerful learning models with strong physical guarantees for their outputs. The proposed approach is general, being applicable to any type of learning method. Extensive experiments using deep convnets on large-scale, real-world data strongly indicate its benefits, outperforming the existing state-of-the-art.
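The idea of coupling a learned model with a physically grounded motion model can be sketched as follows: instead of predicting positions directly, a network predicts control inputs, and a vehicle model turns them into a trajectory. The sketch assumes a kinematic bicycle model with forward Euler integration, a 2.8 m wheelbase, and a 0.1 s time step; these choices and the function name are illustrative assumptions, not details confirmed by the abstract.

```python
import math


def rollout_bicycle(x, y, heading, speed, controls, wheelbase_m=2.8, dt=0.1):
    """Integrate a kinematic bicycle model with forward Euler steps.

    controls: iterable of (acceleration in m/s^2, steering angle in rad),
              one pair per time step, e.g. the outputs of a learned model.
    Returns a list of (x, y, heading, speed) states, one per control pair.
    """
    states = []
    for accel, steer in controls:
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        heading += speed * math.tan(steer) / wheelbase_m * dt
        speed = max(0.0, speed + accel * dt)  # this sketch does not model reversing
        states.append((x, y, heading, speed))
    return states


if __name__ == "__main__":
    # One second of gentle left steering at a constant 10 m/s.
    for state in rollout_bicycle(0.0, 0.0, 0.0, 10.0, [(0.0, 0.05)] * 10):
        print(tuple(round(v, 3) for v in state))
```

Because every state is produced by the motion model, the resulting trajectory cannot contain sideways jumps or instantaneous heading changes, whatever control values are supplied.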

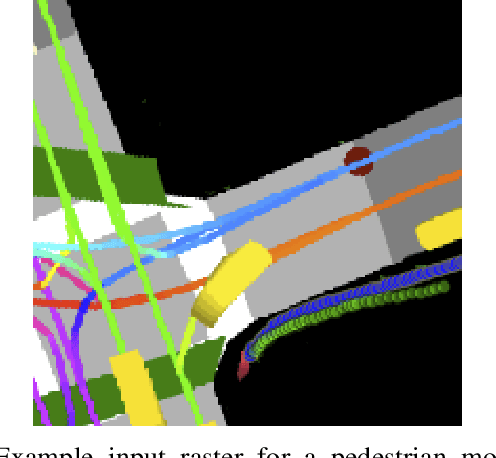

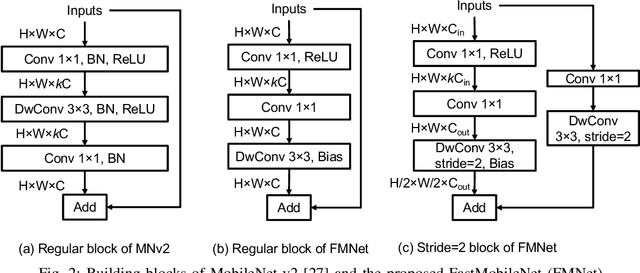

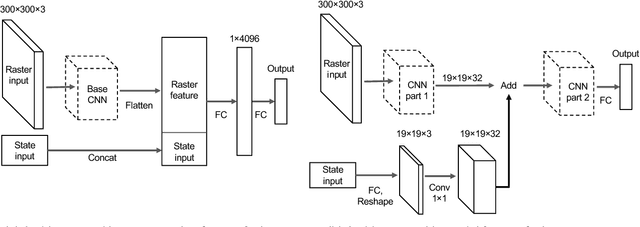

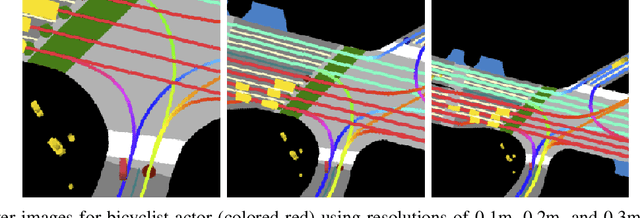

Predicting Motion of Vulnerable Road Users using High-Definition Maps and Efficient ConvNets

Jun 20, 2019

Abstract: Following detection and tracking of traffic actors, prediction of their future motion is the next critical component of self-driving vehicle (SDV) technology, allowing the SDV to operate safely and efficiently in its environment. This is particularly important when it comes to vulnerable road users (VRUs), such as pedestrians and bicyclists. These actors need to be handled with special care due to an increased risk of injury, as well as the fact that their behavior is less predictable than that of motorized actors. To address this issue, in this paper we present a deep learning-based method for predicting VRU movement, where we rasterize high-definition maps and the actor's surroundings into a bird's-eye view image used as input to deep convolutional networks. In addition, we propose a fast architecture suitable for real-time inference, and present a detailed ablation study of various rasterization choices. The results strongly indicate benefits of using the proposed approach for motion prediction of VRUs, both in terms of accuracy and latency.

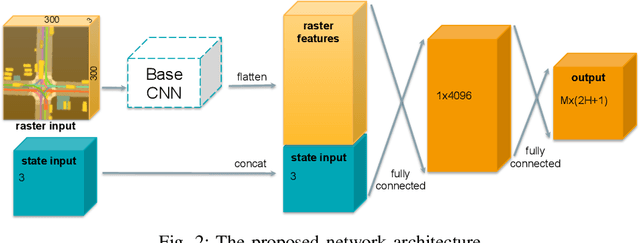

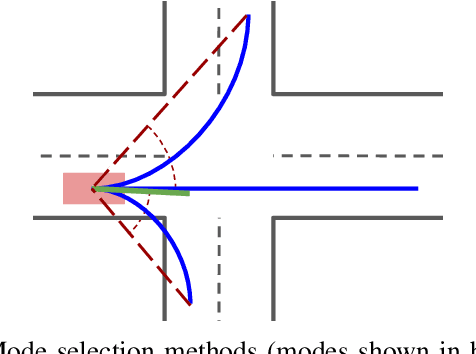

Multimodal Trajectory Predictions for Autonomous Driving using Deep Convolutional Networks

Mar 01, 2019

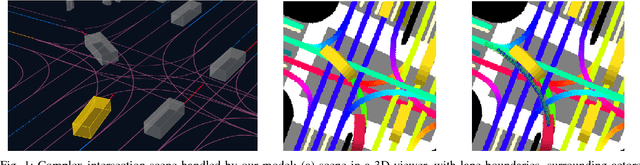

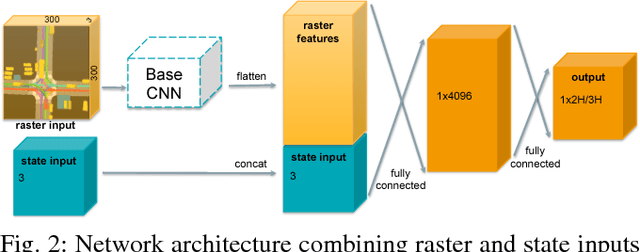

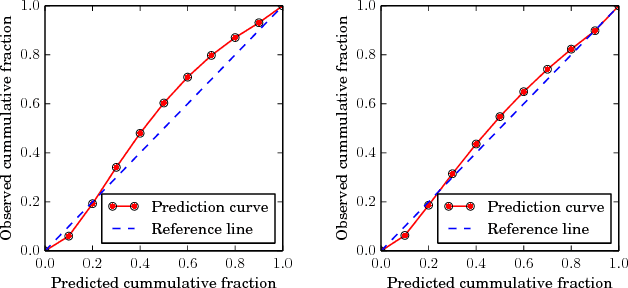

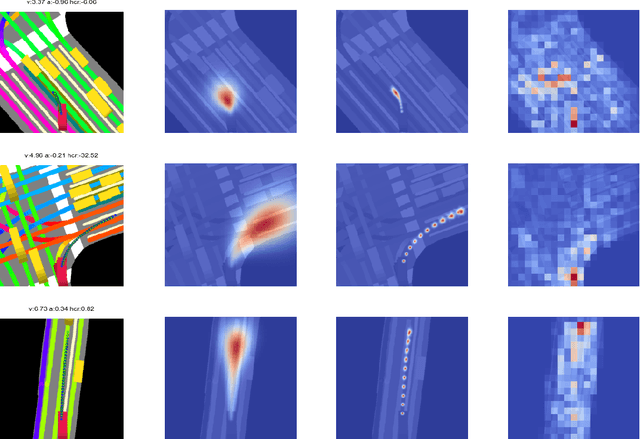

Abstract: Autonomous driving presents one of the largest problems that the robotics and artificial intelligence communities are facing at the moment, both in terms of difficulty and potential societal impact. Self-driving vehicles (SDVs) are expected to prevent road accidents and save millions of lives while improving the livelihood and life quality of many more. However, despite large interest and a number of industry players working in the autonomous domain, there still remains more to be done in order to develop a system capable of operating at a level comparable to the best human drivers. One reason for this is the high uncertainty of traffic behavior and the large number of situations that an SDV may encounter on the roads, making it very difficult to create a fully generalizable system. To ensure safe and efficient operations, an autonomous vehicle is required to account for this uncertainty and to anticipate a multitude of possible behaviors of traffic actors in its surroundings. We address this critical problem and present a method to predict multiple possible trajectories of actors while also estimating their probabilities. The method encodes each actor's surrounding context into a raster image, used as input by deep convolutional networks to automatically derive relevant features for the task. Following extensive offline evaluation and comparison to state-of-the-art baselines, the method was successfully tested on SDVs in closed-course tests.

Short-term Motion Prediction of Traffic Actors for Autonomous Driving using Deep Convolutional Networks

Sep 16, 2018

Abstract: Despite its ubiquity in our daily lives, AI is only just starting to make advances in what may arguably have the largest societal impact thus far, the nascent field of autonomous driving. In this work we discuss this important topic and address one of the crucial aspects of the emerging area, the problem of predicting the future state of an autonomous vehicle's surroundings necessary for safe and efficient operations. We introduce a deep learning-based approach that takes into account the current world state and produces rasterized representations of each actor's vicinity. The raster images are then used by deep convolutional models to infer future movement of actors while accounting for the inherent uncertainty of the prediction task. Extensive experiments on real-world data strongly suggest benefits of the proposed approach. Moreover, following successful tests the system was deployed to a fleet of autonomous vehicles.
