Sudeep Fadadu

Robust Long-Range Perception Against Sensor Misalignment in Autonomous Vehicles

Aug 20, 2024

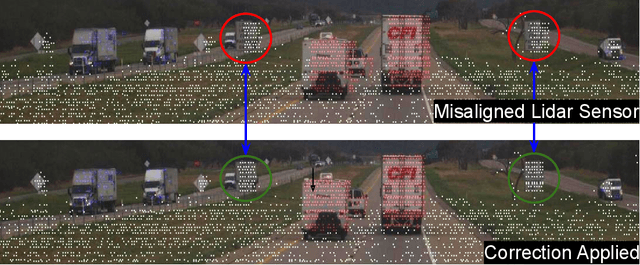

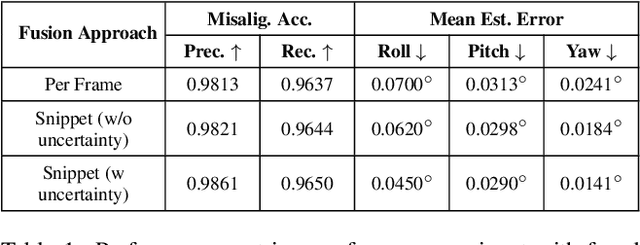

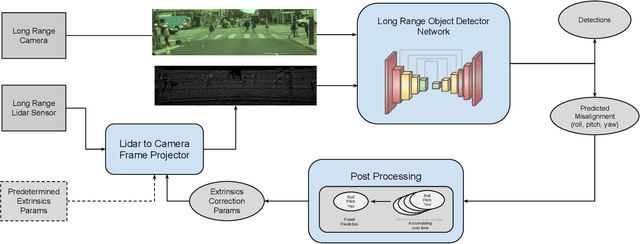

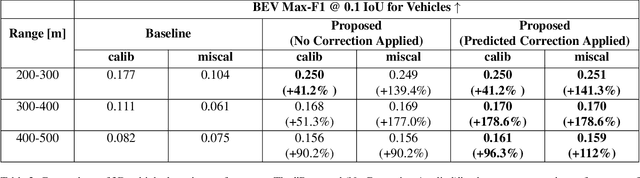

Abstract: Advances in machine learning algorithms for sensor fusion have significantly improved the detection and prediction of other road users, thereby enhancing safety. However, even a small angular displacement in a sensor's placement can significantly degrade the output, especially at long range. In this paper, we demonstrate a simple yet generic and efficient multi-task learning approach that not only detects misalignment between different sensor modalities but is also robust against it for long-range perception. Along with the amount of misalignment, our method also predicts calibrated uncertainty, which can be useful for filtering and fusing predicted misalignment values over time. In addition, we show that the predicted misalignment parameters can be used to self-correct the input sensor data, further improving perception performance under sensor misalignment.
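To make the long-range sensitivity concrete, here is a minimal Python sketch, not taken from the paper. It assumes a pure yaw error, for which the cross-range displacement of a point at range r is r·tan(θ). It also shows one common way per-frame estimates with predicted variances could be fused over time, namely inverse-variance weighting. The abstract does not say which filter the authors use, and the function names `lateral_offset_m` and `fuse_inverse_variance` are hypothetical.

```python
import math


def lateral_offset_m(range_m: float, yaw_error_deg: float) -> float:
    """Cross-range displacement (metres) of a point at range_m under a yaw error."""
    return range_m * math.tan(math.radians(yaw_error_deg))


def fuse_inverse_variance(estimates, variances):
    """Fuse scalar estimates, weighting each by 1 / variance.

    Assumption: estimates are independent and their variances are calibrated.
    Returns the fused estimate and its variance.
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * e for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # The same 0.5 degree yaw error grows linearly (to first order) with range.
    for r in (50.0, 200.0, 400.0):
        print(f"range {r:5.0f} m -> offset {lateral_offset_m(r, 0.5):.2f} m")

    # Illustrative per-frame yaw estimates (degrees) with predicted variances.
    fused, var = fuse_inverse_variance([0.45, 0.60, 0.52], [0.01, 0.04, 0.02])
    print(f"fused yaw {fused:.3f} deg, variance {var:.4f}")
```

With these assumptions, a half-degree yaw error moves a point at 200 m sideways by well over a metre, which is on the order of a vehicle's width. Low-variance frames dominate the fused estimate, which is why calibrated uncertainty matters for filtering.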

Multi-View Fusion of Sensor Data for Improved Perception and Prediction in Autonomous Driving

Aug 27, 2020

Abstract: We present an end-to-end method for object detection and trajectory prediction utilizing multi-view representations of LiDAR returns. Our method builds on a state-of-the-art Bird's-Eye View (BEV) network that fuses voxelized features from a sequence of historical LiDAR data as well as a rasterized high-definition map to perform detection and prediction tasks. We extend the BEV network with additional LiDAR Range-View (RV) features that use the raw LiDAR information in its native, non-quantized representation. The RV feature map is projected into BEV and fused with the BEV features computed from LiDAR and the high-definition map. The fused features are then further processed to output the final detections and trajectories, within a single end-to-end trainable network. In addition, using this framework, the RV fusion of LiDAR and camera is performed in a straightforward and computationally efficient manner. The proposed approach improves on the state of the art on proprietary large-scale real-world data collected by a fleet of self-driving vehicles, as well as on the public nuScenes data set.
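The RV-to-BEV projection step can be illustrated with a small geometric sketch. This is not the authors' implementation: the abstract does not specify how features are scattered or pooled. The function `project_rv_to_bev`, the max-pooling rule, and the grid parameters are assumptions for illustration. Each range-view pixel is converted from (range, azimuth, elevation) to ground-plane coordinates, and its feature value is written into the BEV cell it falls in.

```python
import math


def project_rv_to_bev(ranges, features, azimuths, elevations, grid_size, cell_m):
    """Scatter per-pixel RV features into a square BEV grid centred on the sensor.

    ranges, features: 2D lists indexed [row][col] of the range image.
    azimuths: one angle per column, elevations: one angle per row (radians).
    Assumptions: features are non-negative, and a cell hit by several pixels
    keeps the maximum value. Empty cells stay 0.0.
    """
    bev = [[0.0] * grid_size for _ in range(grid_size)]
    half_extent = grid_size * cell_m / 2.0
    for i, elev in enumerate(elevations):
        for j, az in enumerate(azimuths):
            r = ranges[i][j]
            if r <= 0.0:  # no LiDAR return for this pixel
                continue
            ground_range = r * math.cos(elev)
            x = ground_range * math.cos(az)  # forward
            y = ground_range * math.sin(az)  # left
            col = int((x + half_extent) // cell_m)
            row = int((y + half_extent) // cell_m)
            if 0 <= row < grid_size and 0 <= col < grid_size:
                bev[row][col] = max(bev[row][col], features[i][j])
    return bev


if __name__ == "__main__":
    # One-row range image: a return 10 m straight ahead and a missing return.
    grid = project_rv_to_bev(
        ranges=[[10.0, 0.0]],
        features=[[0.7, 0.9]],
        azimuths=[0.0, math.pi / 2],
        elevations=[0.0],
        grid_size=4,
        cell_m=10.0,
    )
    for row in grid:
        print(row)
```

In a trained network the scattered values would be learned feature vectors rather than scalars. The sketch only shows the coordinate mapping that lets non-quantized RV information land in the same grid as the voxelized BEV features.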
