Zi-Xiang Xia

Robust Long-Range Perception Against Sensor Misalignment in Autonomous Vehicles

Aug 20, 2024

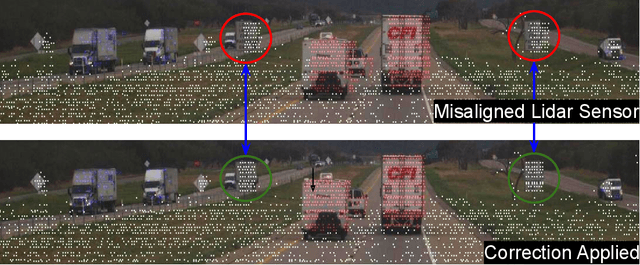

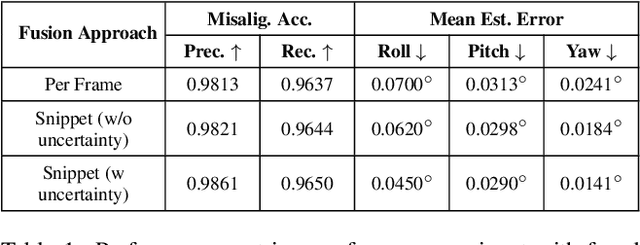

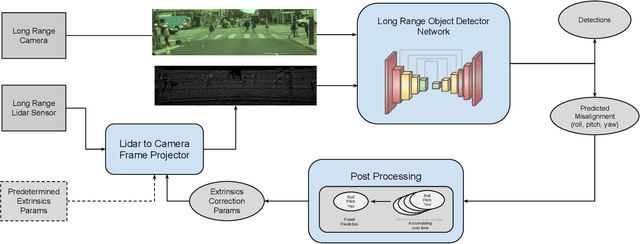

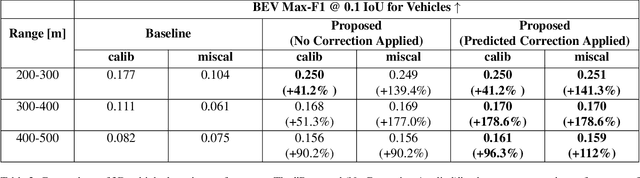

Abstract: Advances in machine learning algorithms for sensor fusion have significantly improved the detection and prediction of other road users, thereby enhancing safety. However, even a small angular displacement in a sensor's placement can cause significant degradation in output, especially at long range. In this paper, we demonstrate a simple yet generic and efficient multi-task learning approach that not only detects misalignment between different sensor modalities but is also robust against it for long-range perception. Along with the amount of misalignment, our method also predicts calibrated uncertainty, which can be useful for filtering and fusing predicted misalignment values over time. In addition, we show that the predicted misalignment parameters can be used for self-correcting input sensor data, further improving perception performance under sensor misalignment.
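The two quantitative ideas in this abstract can be illustrated with a small sketch. It is not the paper's method: the function names are hypothetical, the first function is plain trigonometry for a pure yaw error, and the second uses inverse-variance weighting as one assumed way of fusing per-frame misalignment estimates given their predicted uncertainties.

```python
import math


def lateral_offset_m(range_m: float, yaw_error_deg: float) -> float:
    """Lateral displacement of a point at range_m caused by a yaw error.

    Assumes a pure rotation about the vertical axis (hypothetical setup).
    """
    return range_m * math.tan(math.radians(yaw_error_deg))


def fuse_inverse_variance(estimates, variances):
    """Fuse independent scalar estimates, weighting each by 1 / variance.

    Returns the fused estimate and its variance. This is an assumed
    fusion rule, not necessarily the one used in the paper.
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need equally sized, non-empty inputs")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * x for w, x in zip(weights, estimates)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # The same 0.5 degree yaw error matters ten times more at 300 m than at 30 m.
    for r in (30.0, 300.0):
        print(f"range {r:5.0f} m -> offset {lateral_offset_m(r, 0.5):.2f} m")

    # Two per-frame yaw estimates (degrees) with different predicted variances.
    print(fuse_inverse_variance([0.4, 0.6], [0.01, 0.04]))
```

Because the offset grows roughly linearly with range, an error that is negligible for nearby objects can shift a distant object by more than a lane width, which is why long-range perception is the sensitive case.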

SpotNet: An Image Centric, Lidar Anchored Approach To Long Range Perception

May 24, 2024

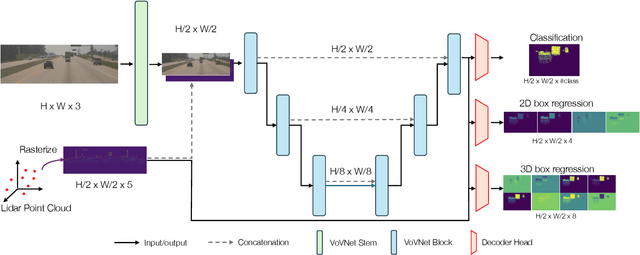

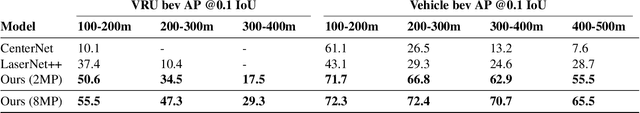

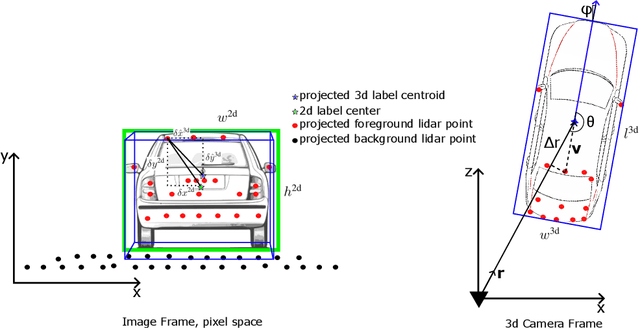

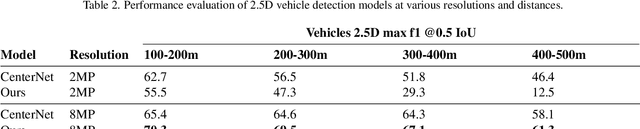

Abstract: In this paper, we propose SpotNet: a fast, single-stage, image-centric but LiDAR-anchored approach for long-range 3D object detection. We demonstrate that our approach to LiDAR/image sensor fusion, combined with the joint learning of 2D and 3D detection tasks, can lead to accurate 3D object detection with very sparse LiDAR support. Unlike more recent bird's-eye-view (BEV) sensor-fusion methods, which scale with range $r$ as $O(r^2)$, SpotNet scales as $O(1)$ with range. We argue that such an architecture is ideally suited to leverage each sensor's strength, i.e., semantic understanding from images and accurate range finding from LiDAR data. Finally, we show that anchoring detections on LiDAR points removes the need to regress distances, and so the architecture is able to transfer from 2MP to 8MP resolution images without re-training.
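The scaling claim can be made concrete with a rough count of the cells each representation has to process. The sketch below is only an illustration under stated assumptions: a square BEV grid centered on the vehicle, a hypothetical 0.5 m cell size, and a hypothetical 1920x1080 image standing in for a 2MP camera. None of these values come from the paper.

```python
import math


def bev_cell_count(max_range_m: float, cell_size_m: float) -> int:
    """Cells in a square BEV grid covering +/- max_range_m on both axes."""
    side = math.ceil(2.0 * max_range_m / cell_size_m)
    return side * side


def image_pixel_count(width_px: int, height_px: int) -> int:
    """Pixels in the camera image; independent of the perception range."""
    return width_px * height_px


if __name__ == "__main__":
    pixels = image_pixel_count(1920, 1080)  # hypothetical 2MP sensor
    for r in (100.0, 200.0, 400.0):
        cells = bev_cell_count(r, 0.5)  # hypothetical 0.5 m cells
        print(f"range {r:5.0f} m: BEV cells = {cells:>9d}, image pixels = {pixels}")
```

Doubling the range quadruples the BEV grid, while the image-space input stays the same size, which is the sense in which an image-centric design is constant in range.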
