Marsalis Gibson

Hacking Predictors Means Hacking Cars: Using Sensitivity Analysis to Identify Trajectory Prediction Vulnerabilities for Autonomous Driving Security

Jan 18, 2024

Abstract: Adversarial attacks on learning-based trajectory predictors have already been demonstrated. However, there are still open questions about the effects of perturbations on trajectory predictor inputs other than state histories, and about how these attacks impact downstream planning and control. In this paper, we conduct a sensitivity analysis on two trajectory prediction models, Trajectron++ and AgentFormer. We observe that, among all inputs, almost all of the perturbation sensitivities for Trajectron++ lie within the most recent state history time point, while perturbation sensitivities for AgentFormer are spread across state histories over time. We additionally demonstrate that, despite the dominant sensitivity to state history perturbations, an undetectable image map perturbation made with the Fast Gradient Sign Method can induce large prediction error increases in both models. Even though image maps may contribute only slightly to the prediction output of both models, this result reveals that, rather than being robust to adversarial image perturbations, trajectory predictors are susceptible to image attacks. Using an optimization-based planner and example perturbations crafted from the sensitivity results, we show how this vulnerability can cause a vehicle to come to a sudden stop from moderate driving speeds.
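As an illustration of the Fast Gradient Sign Method named in the abstract, here is a minimal sketch on a toy model. It is not the paper's attack on Trajectron++ or AgentFormer: the linear predictor, the squared-error loss, the helper names, and the value of `epsilon` are all assumptions chosen so that the gradient can be written by hand. The step itself is the standard one: move each input element by `epsilon` in the direction of the sign of the loss gradient.

```python
import numpy as np


def fgsm_perturb(x, grad, epsilon):
    """Shift x by epsilon along the sign of the loss gradient (FGSM step)."""
    return x + epsilon * np.sign(grad)


def squared_error(w, x, y):
    """Loss of a toy linear predictor w @ x against target y (assumed model)."""
    return float((w @ x - y) ** 2)


def squared_error_grad_x(w, x, y):
    """Analytic gradient of the squared error with respect to the input x."""
    return 2.0 * (w @ x - y) * w


# Hypothetical data: a 16-element input standing in for a flattened map patch.
rng = np.random.default_rng(0)
w = rng.normal(size=16)
x = rng.normal(size=16)
y = 0.5
epsilon = 0.01  # assumed perturbation budget (L-infinity norm)

grad = squared_error_grad_x(w, x, y)
x_adv = fgsm_perturb(x, grad, epsilon)

loss_clean = squared_error(w, x, y)
loss_adv = squared_error(w, x_adv, y)
print(loss_clean, loss_adv)
```

For this toy model each input element changes by at most `epsilon`, yet the residual grows by `epsilon` times the L1 norm of `w`, which is why a perturbation that is small per element can still raise the error noticeably.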

Reachability Analysis for FollowerStopper: Safety Analysis and Experimental Results

Dec 29, 2021

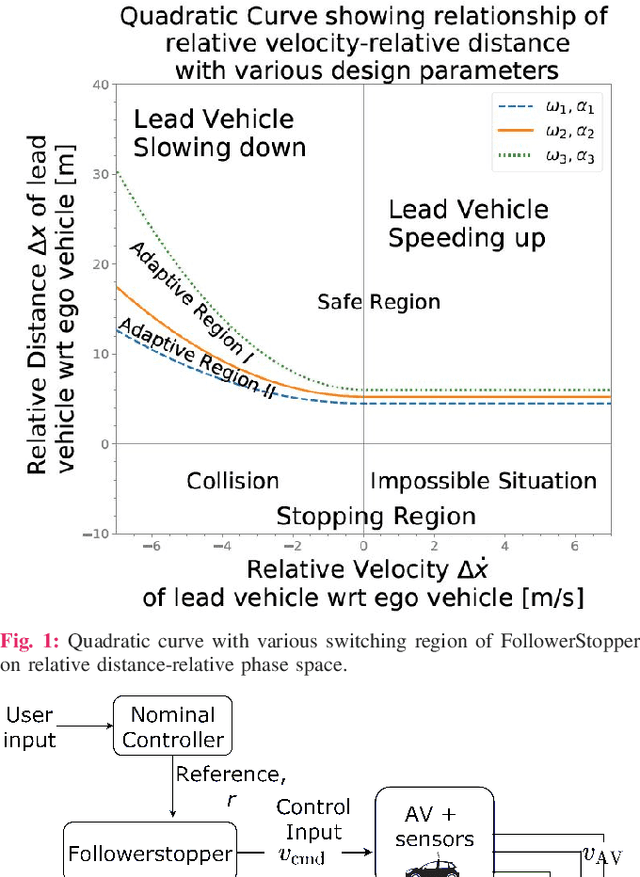

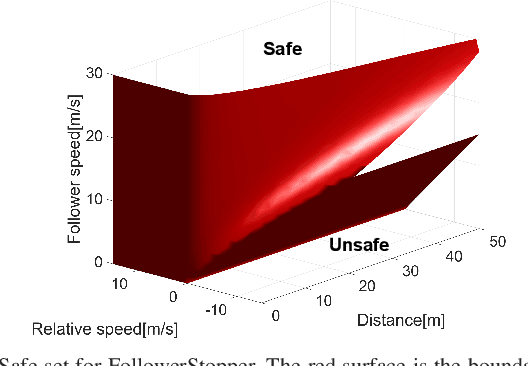

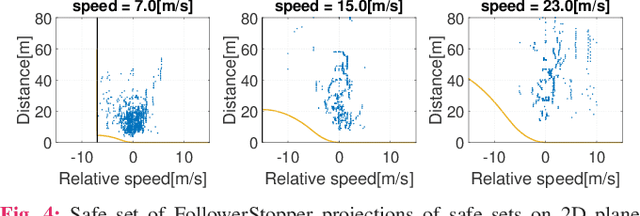

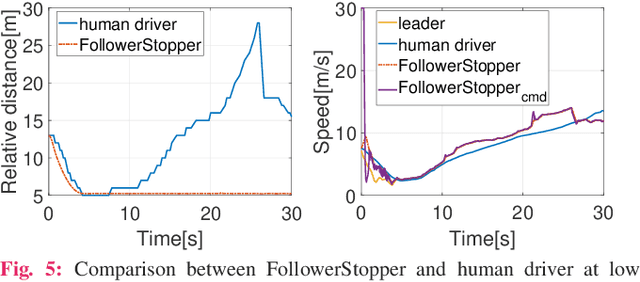

Abstract: Motivated by earlier work and the developer of a new algorithm, the FollowerStopper, this article uses reachability analysis to verify the safety of the FollowerStopper algorithm, which is a controller designed for dampening stop-and-go traffic waves. With more than 1100 miles of driving data collected by our physical platform, we validate our analysis results by comparing them to human driving behaviors. The FollowerStopper controller has been demonstrated to dampen stop-and-go traffic waves at low speed, but previous analysis of its relative safety has been limited to upper and lower bounds of acceleration. To expand upon previous analysis, reachability analysis is used to investigate the safety at the speeds at which it was originally tested and also at higher speeds. Two formulations of safety analysis with different criteria are shown: distance-based and time headway-based. The FollowerStopper is considered safe under the distance-based criterion. However, simulation results demonstrate that the FollowerStopper is not representative of human drivers: it follows too closely behind vehicles, specifically at a distance that humans would deem unsafe. On the other hand, under the time headway-based safety analysis, the FollowerStopper is no longer considered safe. A modified FollowerStopper is proposed to satisfy the time headway-based safety criterion. Simulation results of the proposed FollowerStopper show that its response represents human driver behavior better.
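The difference between the two safety criteria in the abstract can be illustrated with a small sketch. The thresholds and function names below are hypothetical, not values from the paper; the only definition assumed is the usual one of time headway as the gap to the lead vehicle divided by the follower's speed.

```python
def distance_safe(gap_m, min_gap_m):
    """Distance-based check: the gap must be at least a fixed minimum."""
    return gap_m >= min_gap_m


def time_headway_s(gap_m, ego_speed_mps):
    """Time headway: seconds needed to cover the current gap at current speed."""
    if ego_speed_mps <= 0.0:
        return float("inf")  # a stationary follower never closes the gap
    return gap_m / ego_speed_mps


def headway_safe(gap_m, ego_speed_mps, min_headway_s):
    """Time headway-based check: the headway must be at least a minimum."""
    return time_headway_s(gap_m, ego_speed_mps) >= min_headway_s


# Hypothetical scenario: 10 m behind the lead vehicle at 20 m/s.
gap_m = 10.0
ego_speed_mps = 20.0
min_gap_m = 5.0       # assumed distance threshold
min_headway_s = 2.0   # assumed headway threshold

ok_distance = distance_safe(gap_m, min_gap_m)
headway = time_headway_s(gap_m, ego_speed_mps)
ok_headway = headway_safe(gap_m, ego_speed_mps, min_headway_s)
print(ok_distance, headway, ok_headway)
```

Under these assumed thresholds the same state passes the distance check but fails the headway check, because a fixed minimum gap does not grow with speed while the headway requirement does.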

Multi-Adversarial Safety Analysis for Autonomous Vehicles

Dec 29, 2021

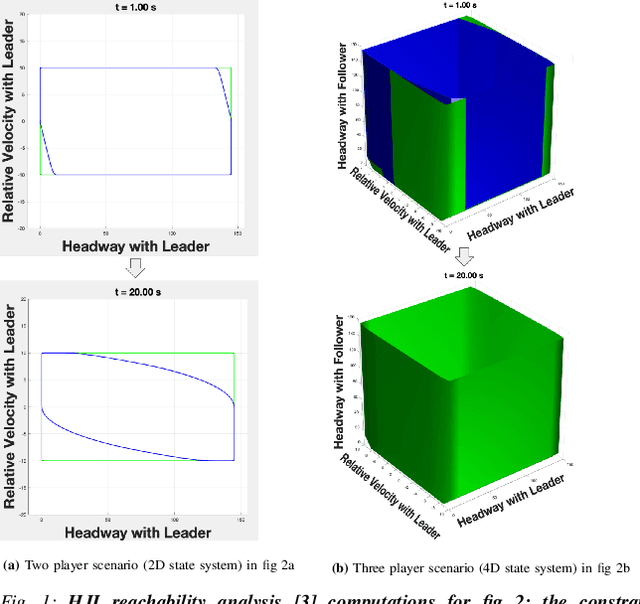

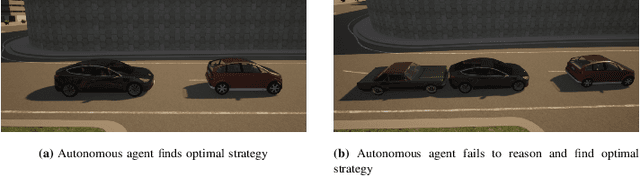

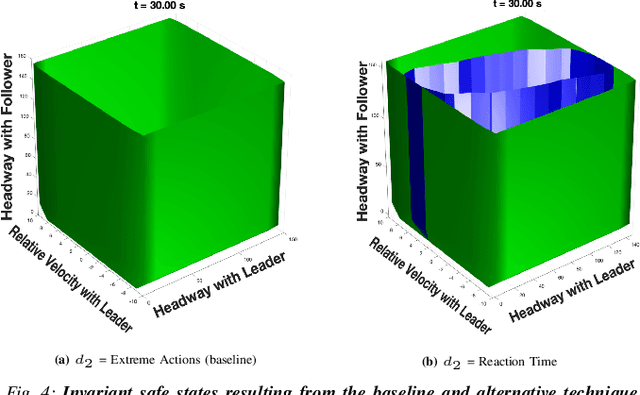

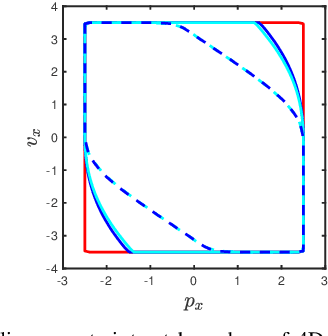

Abstract: This work in progress considers reachability-based safety analysis in the domain of autonomous driving in multi-agent systems. We formulate the safety problem for a car-following scenario as a differential game and study how different modeling strategies yield very different behaviors regardless of the validity of the strategies in other scenarios. Given the nature of real-life driving scenarios, we propose a modeling strategy in our formulation that accounts for subtle interactions between agents, and compare its Hamiltonian results to other baselines. Our formulation encourages reduction of conservativeness in Hamilton-Jacobi safety analysis to provide better safety guarantees during navigation.

Scalable Learning of Safety Guarantees for Autonomous Systems using Hamilton-Jacobi Reachability

Jan 15, 2021

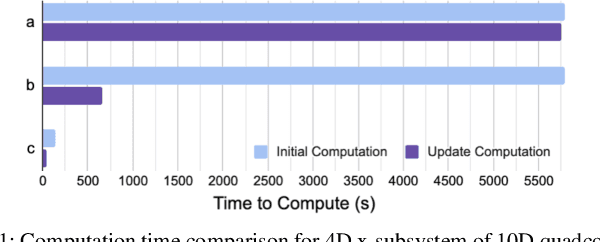

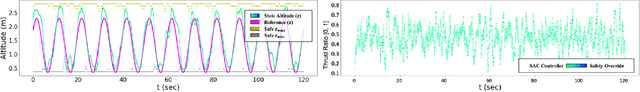

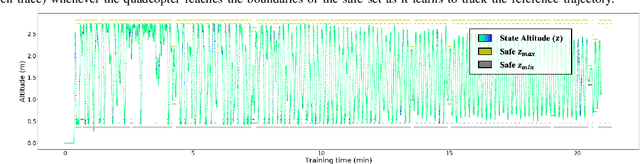

Abstract: Autonomous systems like aircraft and assistive robots often operate in scenarios where guaranteeing safety is critical. Methods like Hamilton-Jacobi reachability can provide guaranteed safe sets and controllers for such systems. However, these same scenarios often have unknown or uncertain environments, system dynamics, or predictions of other agents. As the system is operating, it may learn new knowledge about these uncertainties and should therefore update its safety analysis accordingly. However, work to learn and update safety analysis is limited to small systems of about two dimensions due to the computational complexity of the analysis. In this paper we synthesize several techniques to speed up computation: decomposition, warm-starting, and adaptive grids. Using this new framework we can update safe sets one or more orders of magnitude faster than prior work, making this technique practical for many realistic systems. We demonstrate our results on simulated 2D and 10D near-hover quadcopters operating in a windy environment.
