Uncertainty-Aware Vehicle Orientation Estimation for Joint Detection-Prediction Models

Paper and Code

Nov 05, 2020

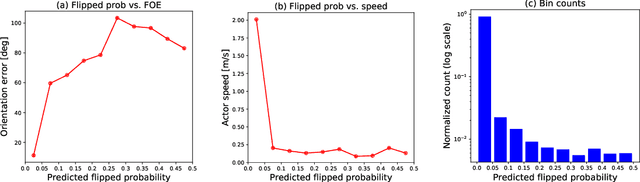

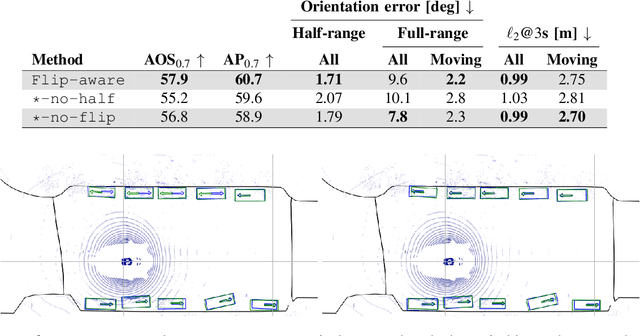

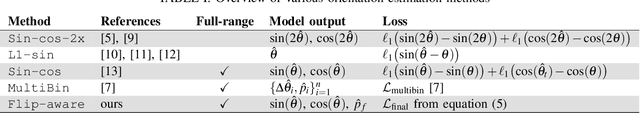

Object detection is a critical component of a self-driving system, tasked with inferring the current states of the surrounding traffic actors. While there exist a number of studies on the problem of inferring the position and shape of vehicle actors, understanding actors' orientation remains a challenge for existing state-of-the-art detectors. Orientation is an important property for downstream modules of an autonomous system, particularly relevant for motion prediction of stationary or reversing actors where current approaches struggle. We focus on this task and present a method that extends the existing models that perform joint object detection and motion prediction, allowing us to more accurately infer vehicle orientations. In addition, the approach is able to quantify prediction uncertainty, outputting the probability that the inferred orientation is flipped, which allows for improved motion prediction and safer autonomous operations. Empirical results show the benefits of the approach, obtaining state-of-the-art performance on the open-sourced nuScenes data set.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge