Dong Xie

ARB-LLM: Alternating Refined Binarizations for Large Language Models

Oct 04, 2024

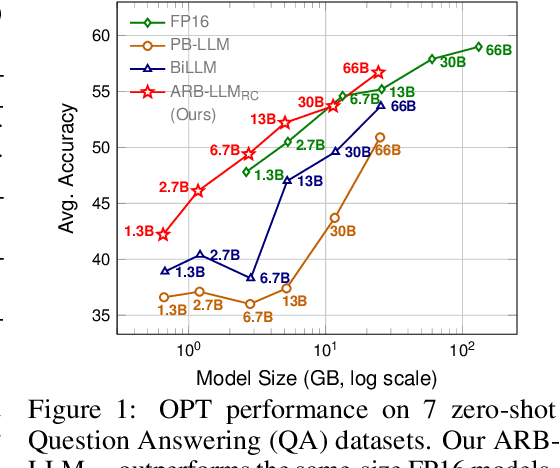

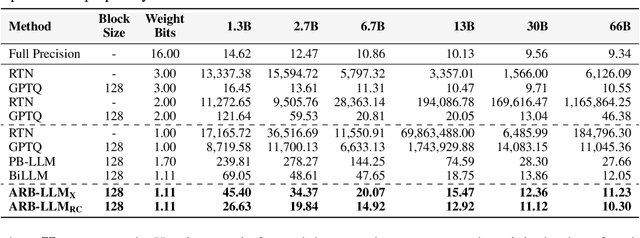

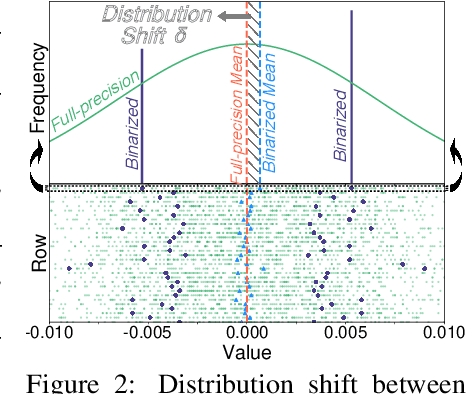

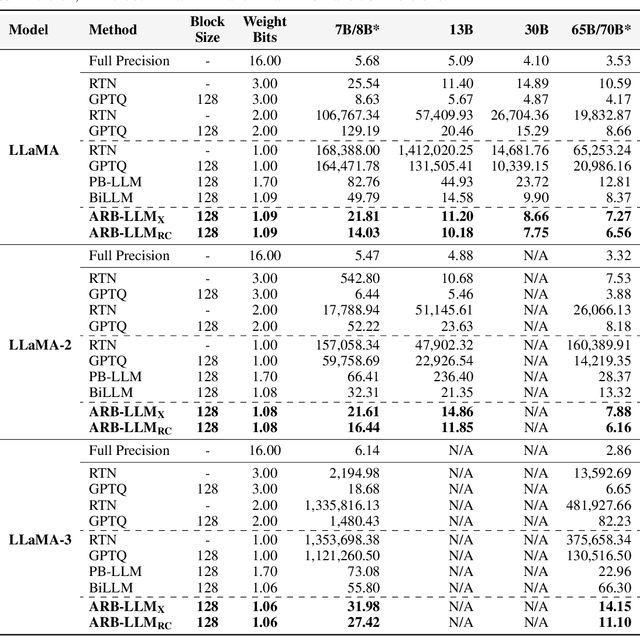

Abstract: Large Language Models (LLMs) have greatly advanced natural language processing, yet their high memory and computational demands hinder practical deployment. Binarization, an effective compression technique, can shrink model weights to just 1 bit, significantly reducing these demands. However, current binarization methods struggle to narrow the distribution gap between binarized and full-precision weights, and they overlook the column deviation in LLM weight distributions. To tackle these issues, we propose ARB-LLM, a novel 1-bit post-training quantization (PTQ) technique tailored for LLMs. To narrow the distribution shift between binarized and full-precision weights, we first design an alternating refined binarization (ARB) algorithm that progressively updates the binarization parameters, which significantly reduces the quantization error. Moreover, considering the pivotal role of calibration data and the column deviation in LLM weights, we further extend ARB to ARB-X and ARB-RC. In addition, we refine the weight partition strategy with a column-group bitmap (CGB), which further enhances performance. Equipping ARB-X and ARB-RC with CGB, we obtain ARB-LLM$_\text{X}$ and ARB-LLM$_\text{RC}$ respectively, which significantly outperform state-of-the-art (SOTA) binarization methods for LLMs. As a binary PTQ method, our ARB-LLM$_\text{RC}$ is the first to surpass FP16 models of the same size. The code and models will be available at https://github.com/ZHITENGLI/ARB-LLM.
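The idea of alternately refining binarization parameters can be illustrated with a small sketch. It assumes a row-wise approximation $W \approx \alpha B + \mu$ with $B \in \{-1, +1\}$ and refits $\mu$, $\alpha$, and $B$ in turn by least squares. The function names (`binarize`, `refine`, `quant_error`) and the exact update order are assumptions made for illustration; this is not the authors' implementation and omits calibration data, ARB-X, ARB-RC, and CGB.

```python
import numpy as np

def quant_error(W, alpha, B, mu):
    """Squared reconstruction error of the binarized approximation."""
    return float(np.sum((W - (alpha * B + mu)) ** 2))

def binarize(W):
    """One-shot row-wise binarization: W ~ alpha * B + mu."""
    mu = W.mean(axis=1, keepdims=True)
    B = np.where(W - mu >= 0, 1.0, -1.0)
    alpha = np.abs(W - mu).mean(axis=1, keepdims=True)
    return alpha, B, mu

def refine(W, alpha, B, mu, iters=5):
    """Alternately refit mu, alpha, and B (assumed update order).

    Each step is the exact minimizer of the squared error for one
    variable with the other two fixed, so the error never increases.
    """
    for _ in range(iters):
        # Best shift given alpha and B.
        mu = (W - alpha * B).mean(axis=1, keepdims=True)
        # Best scale given B and mu (B * B == 1, so the denominator is the row length).
        alpha = ((W - mu) * B).mean(axis=1, keepdims=True)
        # Best signs given alpha and mu.
        B = np.where(alpha * (W - mu) >= 0, 1.0, -1.0)
    return alpha, B, mu

rng = np.random.default_rng(0)
W = rng.normal(size=(4, 64))
alpha0, B0, mu0 = binarize(W)
alpha1, B1, mu1 = refine(W, alpha0, B0, mu0)
print(quant_error(W, alpha0, B0, mu0), quant_error(W, alpha1, B1, mu1))
```

Because every update is a coordinate-wise least-squares step, the printed second error is never larger than the first; how much it shrinks depends on the weight distribution.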

An Effective Two-stage Training Paradigm Detector for Small Dataset

Sep 11, 2023

Abstract: Learning to pre-train a model from a limited amount of labeled data has always been viewed as a challenging task. In this report, an effective and robust solution, the two-stage training paradigm YOLOv8 detector (TP-YOLOv8), is designed for the object detection track in the VIPriors Challenge 2023. First, the backbone of YOLOv8 is pre-trained as the encoder using the masked image modeling technique. Then the detector is fine-tuned with elaborate augmentations. During the test stage, test-time augmentation (TTA) is used to enhance each model, and weighted box fusion (WBF) is applied to further boost performance. With this well-designed structure, our approach achieves 30.4% average precision over IoU thresholds from 0.50 to 0.95 on the DelftBikes test set, ranking 4th on the leaderboard.

A Cooperative-Competitive Multi-Agent Framework for Auto-bidding in Online Advertising

Jun 11, 2021

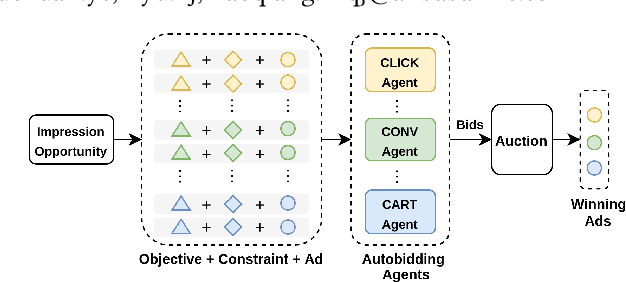

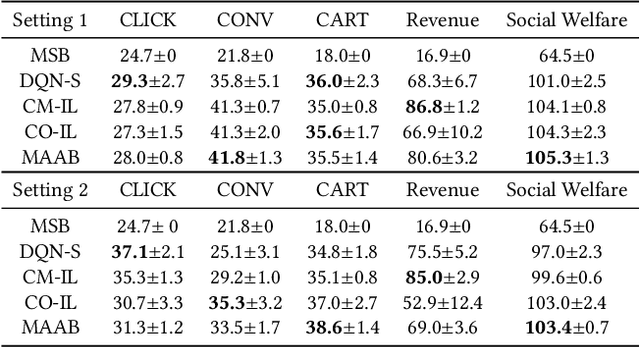

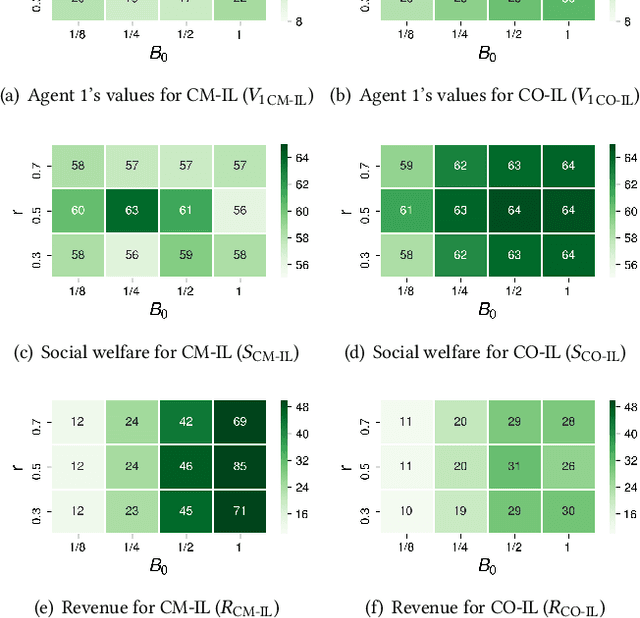

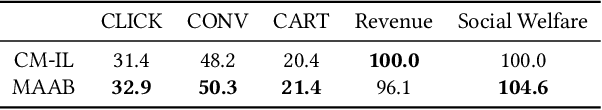

Abstract: In online advertising, auto-bidding has become an essential tool for advertisers to optimize their preferred ad performance metrics by simply expressing high-level campaign objectives and constraints. Previous works consider the design of auto-bidding agents from a single-agent view without modeling the mutual influence between agents. In this paper, we instead consider this problem from the perspective of a distributed multi-agent system, and propose a general Multi-Agent reinforcement learning framework for Auto-Bidding, namely MAAB, to learn the auto-bidding strategies. First, we investigate the competition and cooperation relations among auto-bidding agents, and propose temperature-regularized credit assignment to establish a mixed cooperative-competitive paradigm. By carefully trading off competition and cooperation among the agents, we can reach an equilibrium state that guarantees not only each individual advertiser's utility but also the system performance (social welfare). Second, because cooperation gives rise to observed collusion behaviors of bidding low prices, we further propose bar agents that set a personalized bidding bar for each agent, thereby alleviating the degradation of revenue. Third, to deploy MAAB to a large-scale advertising system with millions of advertisers, we propose a mean-field approach. By grouping advertisers with the same objective as a mean auto-bidding agent, the interactions among advertisers are greatly simplified, making it practical to train MAAB efficiently. Extensive experiments on an offline industrial dataset and the Alibaba advertising platform demonstrate that our approach outperforms several baseline methods in terms of social welfare and guarantees the ad platform's revenue.

Convergence Analysis of Nonconvex Distributed Stochastic Zeroth-order Coordinate Method

Mar 24, 2021

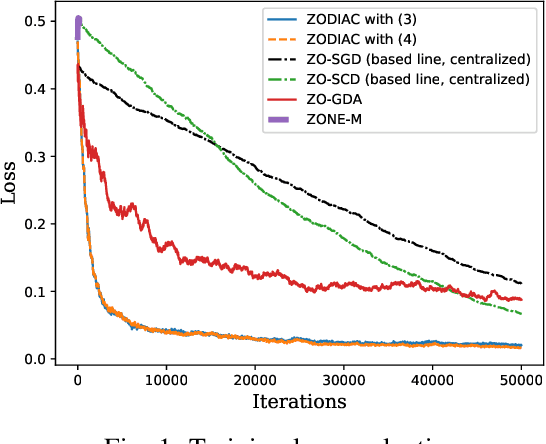

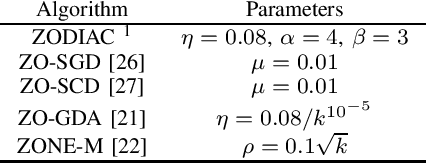

Abstract: This paper investigates the stochastic distributed nonconvex optimization problem of minimizing a global cost function formed by the summation of $n$ local cost functions. We solve this problem using zeroth-order (ZO) information exchange. Specifically, we propose a ZO distributed primal-dual coordinate method (ZODIAC) to solve the stochastic optimization problem. Agents approximate their own local stochastic ZO oracle along coordinates with an adaptive smoothing parameter. We show that the proposed algorithm achieves a convergence rate of $\mathcal{O}(\sqrt{p}/\sqrt{T})$ for general nonconvex cost functions. We demonstrate the efficiency of the proposed algorithm through a numerical example in comparison with existing state-of-the-art centralized and distributed ZO algorithms.
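A coordinate-wise zeroth-order oracle of the kind mentioned above can be sketched as follows. This is a generic two-point finite-difference estimator that uses only function evaluations; the function name `zo_coordinate_gradient`, the central-difference form, and the fixed smoothing parameter `delta` are assumptions for illustration. The abstract does not give the exact estimator, and the adaptive smoothing schedule and the distributed primal-dual updates of ZODIAC are not reproduced here.

```python
import numpy as np

def zo_coordinate_gradient(f, x, delta=1e-4):
    """Estimate the gradient of f at a 1-D point x from function values only.

    For each coordinate i, f is probed at x + delta * e_i and x - delta * e_i,
    and the slope between the two values approximates the i-th partial derivative.
    """
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = 1.0  # i-th standard basis vector
        grad[i] = (f(x + delta * e) - f(x - delta * e)) / (2.0 * delta)
    return grad

# Usage on a simple quadratic whose true gradient is 2 * x.
f = lambda x: float(np.sum(x ** 2))
x0 = np.array([1.0, -2.0, 0.5])
print(zo_coordinate_gradient(f, x0))
```

Each call costs two function evaluations per coordinate, so the work per gradient estimate grows linearly with the dimension of `x`.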

Hierarchical Classification of Pulmonary Lesions: A Large-Scale Radio-Pathomics Study

Oct 08, 2020

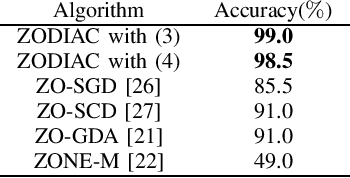

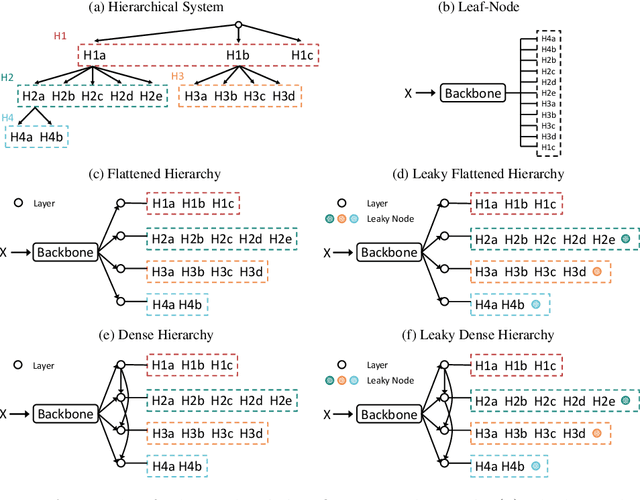

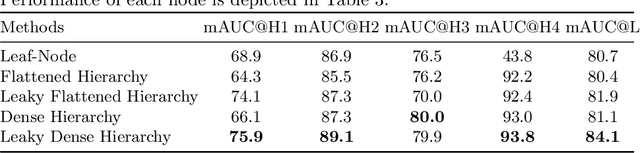

Abstract: Diagnosis of pulmonary lesions from computed tomography (CT) is important but challenging for clinical decision making in lung cancer related diseases. Deep learning has achieved great success in computer-aided diagnosis (CADx) for lung cancer, but it suffers from label ambiguity due to the difficulty of radiological diagnosis. Considering that invasive pathological analysis serves as the clinical gold standard of lung cancer diagnosis, in this study we address the label ambiguity issue via a large-scale radio-pathomics dataset containing 5,134 radiological CT images with pathologically confirmed labels, including cancers (e.g., invasive/non-invasive adenocarcinoma, squamous carcinoma) and non-cancer diseases (e.g., tuberculosis, hamartoma). This retrospective dataset, named Pulmonary-RadPath, enables the development and validation of accurate deep learning systems that predict invasive pathological labels with a non-invasive procedure, i.e., radiological CT scans. A three-level hierarchical classification system for pulmonary lesions is developed, which covers most diseases in cancer-related diagnosis. We explore several techniques for hierarchical classification on this dataset, and propose a Leaky Dense Hierarchy approach whose effectiveness is demonstrated in experiments. Our study significantly surpasses prior art in terms of data scale (6x larger), disease comprehensiveness, and hierarchies. The promising results suggest its potential to facilitate precision medicine.
