Miao Xu

UniSurg: A Video-Native Foundation Model for Universal Understanding of Surgical Videos

Feb 05, 2026

Abstract: While foundation models have advanced surgical video analysis, current approaches rely predominantly on pixel-level reconstruction objectives that waste model capacity on low-level visual details - such as smoke, specular reflections, and fluid motion - rather than semantic structures essential for surgical understanding. We present UniSurg, a video-native foundation model that shifts the learning paradigm from pixel-level reconstruction to latent motion prediction. Built on the Video Joint Embedding Predictive Architecture (V-JEPA), UniSurg introduces three key technical innovations tailored to surgical videos: 1) motion-guided latent prediction to prioritize semantically meaningful regions, 2) spatiotemporal affinity self-distillation to enforce relational consistency, and 3) feature diversity regularization to prevent representation collapse in texture-sparse surgical scenes. To enable large-scale pretraining, we curate UniSurg-15M, the largest surgical video dataset to date, comprising 3,658 hours of video from 50 sources across 13 anatomical regions. Extensive experiments across 17 benchmarks demonstrate that UniSurg significantly outperforms state-of-the-art methods on surgical workflow recognition (+14.6% F1 on EgoSurgery, +10.3% on PitVis), action triplet recognition (39.54% mAP-IVT on CholecT50), skill assessment, polyp segmentation, and depth estimation. These results establish UniSurg as a new standard for universal, motion-oriented surgical video understanding.

Enhancing Generative Auto-bidding with Offline Reward Evaluation and Policy Search

Sep 19, 2025

Abstract: Auto-bidding is an essential tool for advertisers to enhance their advertising performance. Recent progress has shown that AI-Generated Bidding (AIGB), which formulates auto-bidding as a trajectory generation task and trains a conditional diffusion-based planner on offline data, achieves superior and stable performance compared to typical offline reinforcement learning (RL)-based auto-bidding methods. However, existing AIGB methods still encounter a performance bottleneck due to their neglect of fine-grained generation quality evaluation and inability to explore beyond static datasets. To address this, we propose AIGB-Pearl (Planning with EvAluator via RL), a novel method that integrates generative planning and policy optimization. The key to AIGB-Pearl is to construct a non-bootstrapped trajectory evaluator to assign rewards and guide policy search, enabling the planner to optimize its generation quality iteratively through interaction. Furthermore, to enhance trajectory evaluator accuracy in offline settings, we incorporate three key techniques: (i) a Large Language Model (LLM)-based architecture for better representational capacity, (ii) hybrid point-wise and pair-wise losses for better score learning, and (iii) adaptive integration of expert feedback for better generalization ability. Extensive experiments on both simulated and real-world advertising systems demonstrate the state-of-the-art performance of our approach.

Calibration Attention: Instance-wise Temperature Scaling for Vision Transformers

Aug 12, 2025

Abstract: Probability calibration is critical when Vision Transformers are deployed in risk-sensitive applications. The standard fix, post-hoc temperature scaling, uses a single global scalar and requires a held-out validation set. We introduce Calibration Attention (CalAttn), a drop-in module that learns an adaptive, per-instance temperature directly from the ViT's CLS token. Across CIFAR-10/100, MNIST, Tiny-ImageNet, and ImageNet-1K, CalAttn reduces calibration error by up to 4x on ViT-224, DeiT, and Swin, while adding under 0.1 percent additional parameters. The learned temperatures cluster tightly around 1.0, in contrast to the large global values used by standard temperature scaling. CalAttn is simple, efficient, and architecture-agnostic, and yields more trustworthy probabilities without sacrificing accuracy. Code: [https://github.com/EagleAdelaide/CalibrationAttention-CalAttn-](https://github.com/EagleAdelaide/CalibrationAttention-CalAttn-)
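To make the contrast between a single global temperature and a per-instance temperature concrete, here is a minimal sketch. It is not the authors' implementation: the softplus head, the function names, and all numeric values are illustrative assumptions; the abstract only states that the temperature is learned from the CLS token.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax of logits divided by a positive temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def instance_temperature(cls_feature, weights, bias):
    """Hypothetical head: linear map of the CLS feature, then softplus so T > 0."""
    pre = sum(w * x for w, x in zip(weights, cls_feature)) + bias
    return math.log1p(math.exp(pre)) + 1e-6

logits = [2.0, 1.0, 0.1]
cls_feature = [0.5, -0.2]        # made-up 2-D CLS feature
weights, bias = [0.3, 0.1], 0.5  # made-up head parameters

t = instance_temperature(cls_feature, weights, bias)
probs_global = softmax(logits, temperature=1.5)  # one scalar shared by every input
probs_instance = softmax(logits, temperature=t)  # temperature depends on the input
```

In both cases a larger temperature flattens the distribution and a smaller one sharpens it; the only difference is whether the scalar is fixed for the whole dataset or computed per input.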

Pose-RFT: Enhancing MLLMs for 3D Pose Generation via Hybrid Action Reinforcement Fine-Tuning

Aug 11, 2025

Abstract: Generating 3D human poses from multimodal inputs such as images or text requires models to capture both rich spatial and semantic correspondences. While pose-specific multimodal large language models (MLLMs) have shown promise in this task, they are typically trained with supervised objectives such as SMPL parameter regression or token-level prediction, which struggle to model the inherent ambiguity and achieve task-specific alignment required for accurate 3D pose generation. To address these limitations, we propose Pose-RFT, a reinforcement fine-tuning framework tailored for 3D human pose generation in MLLMs. We formulate the task as a hybrid action reinforcement learning problem that jointly optimizes discrete language prediction and continuous pose generation. To this end, we introduce HyGRPO, a hybrid reinforcement learning algorithm that performs group-wise reward normalization over sampled responses to guide joint optimization of discrete and continuous actions. Pose-RFT further incorporates task-specific reward functions to guide optimization towards spatial alignment in image-to-pose generation and semantic consistency in text-to-pose generation. Extensive experiments on multiple pose generation benchmarks demonstrate that Pose-RFT significantly improves performance over existing pose-specific MLLMs, validating the effectiveness of hybrid action reinforcement fine-tuning for 3D pose generation.
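The group-wise reward normalization mentioned above can be illustrated with a small sketch. This shows only the generic normalization step used in GRPO-style methods, under the assumption that each sampled response for a prompt receives one scalar reward; how HyGRPO applies the resulting advantages to discrete and continuous actions is not described in the abstract and is not shown here. The function name and reward values are hypothetical.

```python
import statistics

def group_normalized_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of sampled responses.

    Each advantage is (reward - group mean) / (group std + eps), so responses
    are scored relative to the other samples for the same prompt.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std over the group
    return [(r - mean) / (std + eps) for r in rewards]

# Made-up rewards for four responses sampled from the same prompt.
rewards = [0.2, 0.5, 0.9, 0.4]
advantages = group_normalized_advantages(rewards)
```

Responses that beat the group average get a positive advantage and the rest a negative one; if every response earns the same reward, all advantages are zero and the group contributes no learning signal.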

Multimodal Causal-Driven Representation Learning for Generalizable Medical Image Segmentation

Aug 07, 2025

Abstract: Vision-Language Models (VLMs), such as CLIP, have demonstrated remarkable zero-shot capabilities in various computer vision tasks. However, their application to medical imaging remains challenging due to the high variability and complexity of medical data. Specifically, medical images often exhibit significant domain shifts caused by various confounders, including equipment differences, procedure artifacts, and imaging modes, which can lead to poor generalization when models are applied to unseen domains. To address this limitation, we propose Multimodal Causal-Driven Representation Learning (MCDRL), a novel framework that integrates causal inference with the VLM to tackle domain generalization in medical image segmentation. MCDRL is implemented in two steps: first, it leverages CLIP's cross-modal capabilities to identify candidate lesion regions and construct a confounder dictionary through text prompts, specifically designed to represent domain-specific variations; second, it trains a causal intervention network that utilizes this dictionary to identify and eliminate the influence of these domain-specific variations while preserving the anatomical structural information critical for segmentation tasks. Extensive experiments demonstrate that MCDRL consistently outperforms competing methods, yielding superior segmentation accuracy and exhibiting robust generalizability.

Rethinking Gating Mechanism in Sparse MoE: Handling Arbitrary Modality Inputs with Confidence-Guided Gate

May 26, 2025

Abstract: Effectively managing missing modalities is a fundamental challenge in real-world multimodal learning scenarios, where data incompleteness often results from systematic collection errors or sensor failures. Sparse Mixture-of-Experts (SMoE) architectures have the potential to naturally handle multimodal data, with individual experts specializing in different modalities. However, existing SMoE approaches often cannot properly handle missing modalities, leading to performance degradation and poor generalization in real-world applications. We propose Conf-SMoE, which introduces a two-stage imputation module to handle the missing modality problem for the SMoE architecture, and we provide insight into expert collapse through theoretical analysis backed by strong empirical evidence. Inspired by our theoretical analysis, Conf-SMoE proposes a novel expert gating mechanism by detaching the softmax routing score to a task confidence score w.r.t. the ground truth. This naturally relieves expert collapse without introducing an additional load-balance loss function. We show that the insights on expert collapse align with other gating mechanisms such as Gaussian and Laplacian gates. We also evaluate the proposed method on four different real-world datasets under three different experimental settings to comprehensively analyze Conf-SMoE on modality fusion and resistance to missing modalities.

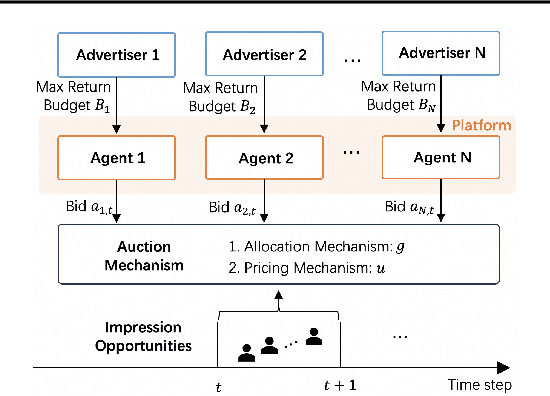

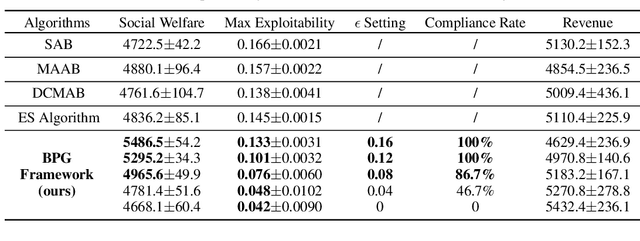

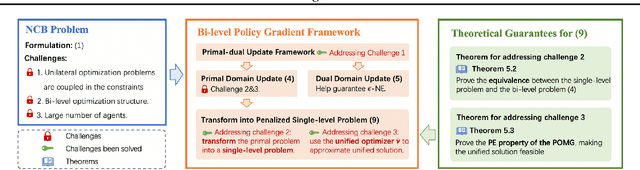

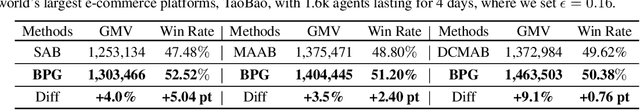

Nash Equilibrium Constrained Auto-bidding With Bi-level Reinforcement Learning

Mar 13, 2025

Abstract: Many online advertising platforms provide advertisers with auto-bidding services to enhance their advertising performance. However, most existing auto-bidding algorithms fail to accurately capture the auto-bidding problem formulation that the platform truly faces, let alone solve it. We argue that the platform should try to help optimize each advertiser's performance to the greatest extent, which makes $\epsilon$-Nash Equilibrium ($\epsilon$-NE) a necessary solution concept, while maximizing the social welfare of all the advertisers for the platform's long-term value. Based on this, we introduce Nash-Equilibrium Constrained Bidding (NCB), a new formulation of the auto-bidding problem from the platform's perspective. Specifically, it aims to maximize the social welfare of all advertisers under the $\epsilon$-NE constraint. However, the NCB problem presents significant challenges due to its constrained bi-level structure and the typically large number of advertisers involved. To address these challenges, we propose a Bi-level Policy Gradient (BPG) framework with theoretical guarantees. Notably, its computational complexity is independent of the number of advertisers, and the associated gradients are straightforward to compute. Extensive simulated and real-world experiments validate the effectiveness of the BPG framework.
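For reference, the standard definition of an $\epsilon$-NE can be written as follows. The notation is assumed here rather than taken from the paper: $u_i$ is advertiser $i$'s utility, $\pi_i$ its bidding policy, and $\pi_{-i}$ the policies of all other advertisers. A profile $\pi = (\pi_i, \pi_{-i})$ is an $\epsilon$-NE if no advertiser can gain more than $\epsilon$ by deviating unilaterally:

$$u_i(\pi_i', \pi_{-i}) \le u_i(\pi_i, \pi_{-i}) + \epsilon \quad \text{for all } i \text{ and all } \pi_i'.$$

Writing $W(\pi)$ for the social welfare (its exact form is not given in the abstract), the NCB formulation described above can then be read as the constrained problem

$$\max_{\pi} \; W(\pi) \quad \text{subject to} \quad \pi \text{ is an } \epsilon\text{-NE}.$$

The bi-level structure arises because checking the constraint itself requires solving each advertiser's best-response problem.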

SRM-Hair: Single Image Head Mesh Reconstruction via 3D Morphable Hair

Mar 08, 2025

Abstract: 3D Morphable Models (3DMMs) have played a pivotal role as a fundamental representation or initialization for 3D avatar animation and reconstruction. However, extending 3DMMs to hair remains challenging due to the difficulty of enforcing vertex-level consistent semantic meaning across hair shapes. This paper introduces a novel method, Semantic-consistent Ray Modeling of Hair (SRM-Hair), for making 3D hair morphable and controlled by coefficients. The key contribution lies in semantic-consistent ray modeling, which extracts ordered hair surface vertices and exhibits notable properties such as additivity for hairstyle fusion, adaptability, flipping, and thickness modification. We collect a dataset of over 250 high-fidelity real hair scans paired with 3D face data to serve as a prior for the 3D morphable hair. Based on this, SRM-Hair can reconstruct a hair mesh combined with a 3D head from a single image. Note that SRM-Hair produces an independent hair mesh, facilitating applications in virtual avatar creation, realistic animation, and high-fidelity hair rendering. Both quantitative and qualitative experiments demonstrate that SRM-Hair achieves state-of-the-art performance in 3D mesh reconstruction. Our project is available at https://github.com/wang-zidu/SRM-Hair

We Care Each Pixel: Calibrating on Medical Segmentation Model

Mar 07, 2025

Abstract: Medical image segmentation is fundamental for computer-aided diagnostics, providing accurate delineation of anatomical structures and pathological regions. While common metrics such as Accuracy, DSC, IoU, and HD primarily quantify spatial agreement between predictions and ground-truth labels, they do not assess the calibration quality of segmentation models, which is crucial for clinical reliability. To address this limitation, we propose pixel-wise Expected Calibration Error (pECE), a novel metric that explicitly measures miscalibration at the pixel level, thereby ensuring both spatial precision and confidence reliability. We further introduce a morphological adaptation strategy that applies morphological operations to ground-truth masks before computing calibration losses, particularly benefiting margin-based losses such as Margin SVLS and NACL. Additionally, we present the Signed Distance Calibration Loss (SDC), which aligns boundary geometry with calibration objectives by penalizing discrepancies between predicted and ground-truth signed distance functions (SDFs). Extensive experiments demonstrate that our method not only enhances segmentation performance but also improves calibration quality, yielding more trustworthy confidence estimates. Code is available at: https://github.com/EagleAdelaide/SDC-Loss.
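As background for the calibration metric discussed above, the sketch below computes the standard binned Expected Calibration Error over per-pixel predictions. This is the generic ECE with equal-width bins, not necessarily the paper's pECE definition, which the abstract does not spell out; the function name and the toy data are assumptions made for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: size-weighted mean of |accuracy - mean confidence| per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    n = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue  # empty bins contribute nothing
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / n) * abs(accuracy - avg_conf)
    return ece

# Flattened toy "image": per-pixel confidence of the predicted class and
# whether that prediction matched the ground-truth label.
pixel_conf = [0.95, 0.95, 0.55, 0.55]
pixel_correct = [True, True, True, False]
ece = expected_calibration_error(pixel_conf, pixel_correct)
```

A model can score well on overlap metrics such as DSC while still producing a large value here, which is the gap the abstract points to.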

PostHoc FREE Calibrating on Kolmogorov Arnold Networks

Mar 03, 2025

Abstract: Kolmogorov-Arnold Networks (KANs) are neural architectures inspired by the Kolmogorov-Arnold representation theorem that leverage B-spline parameterizations for flexible, locally adaptive function approximation. Although KANs can capture complex nonlinearities beyond those modeled by standard Multi-Layer Perceptrons (MLPs), they frequently exhibit miscalibrated confidence estimates, manifesting as overconfidence in dense data regions and underconfidence in sparse areas. In this work, we systematically examine the impact of four critical hyperparameters (Layer Width, Grid Order, Shortcut Function, and Grid Range) on the calibration of KANs. Furthermore, we introduce a novel Temperature-Scaled Loss (TSL) that integrates a temperature parameter directly into the training objective, dynamically adjusting the predictive distribution during learning. Both theoretical analysis and extensive empirical evaluations on standard benchmarks demonstrate that TSL significantly reduces calibration errors, thereby improving the reliability of probabilistic predictions. Overall, our study provides actionable insights into the design of spline-based neural networks and establishes TSL as a robust loss solution for enhancing calibration.
