Lisha Yao

$M^{2}$Fusion: Bayesian-based Multimodal Multi-level Fusion on Colorectal Cancer Microsatellite Instability Prediction

Jan 15, 2024

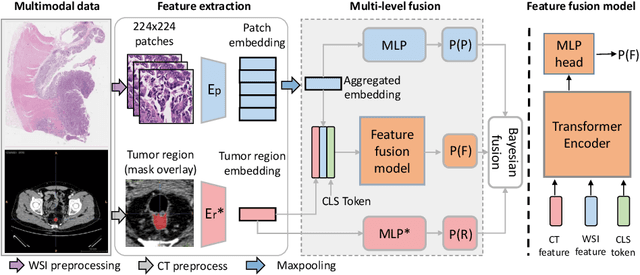

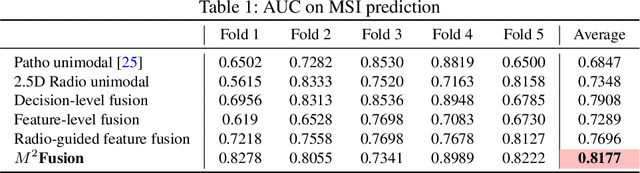

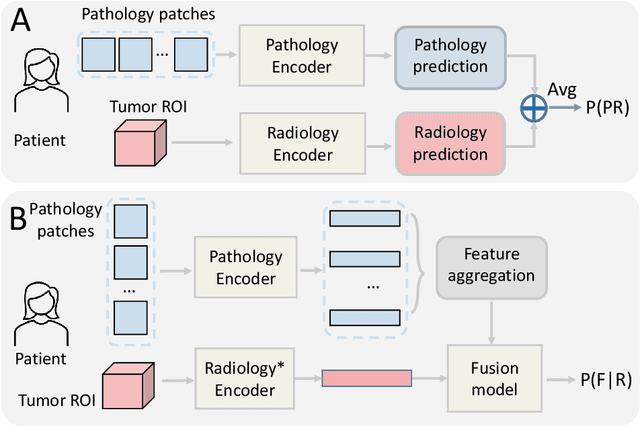

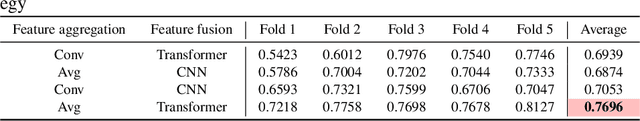

Abstract: Colorectal cancer (CRC) microsatellite instability (MSI) prediction on histopathology images is a challenging weakly supervised learning task that involves multi-instance learning on gigapixel images. Radiology images have also been shown to carry CRC MSI information and can be acquired with efficient patient imaging techniques. Integrating different data modalities offers the opportunity to increase the accuracy and robustness of MSI prediction. Despite progress in representation learning from whole slide images (WSI) and in exploring the use of radiology data, fusing information from multiple data modalities (e.g., pathology WSI and radiology CT images) remains a challenge for CRC MSI prediction. In this paper, we propose $M^{2}$Fusion: a Bayesian-based multimodal multi-level fusion pipeline for CRC MSI prediction. $M^{2}$Fusion is capable of discovering novel patterns within and across modalities that are more beneficial for predicting MSI than using a single modality alone or other fusion methods. The contribution of the paper is three-fold: (1) $M^{2}$Fusion is the first multi-level fusion pipeline on pathology WSI and 3D radiology CT images for MSI prediction; (2) CT images are integrated into multimodal fusion for CRC MSI prediction for the first time; (3) the feature-level fusion strategy is evaluated on both Transformer-based and CNN-based methods. Our approach is validated with cross-validation on 352 cases and outperforms both the feature-level (AUC 0.8177 vs. 0.7908) and decision-level (AUC 0.8177 vs. 0.7289) fusion strategies.
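The two baseline strategies the abstract compares against, feature-level and decision-level fusion, can be illustrated with a minimal sketch. This is not the Bayesian multi-level $M^{2}$Fusion pipeline itself, whose details the abstract does not give. The function names, the logistic classifier, and the mixing weight `alpha` are all hypothetical choices made for illustration.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic function mapping a score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def feature_level_fusion(
    pathology_features: Sequence[float],
    radiology_features: Sequence[float],
    weights: Sequence[float],
    bias: float,
) -> float:
    """Concatenate per-modality features, then apply ONE classifier.

    The linear-logistic classifier here is an assumption; any model that
    consumes the concatenated vector would fit the same pattern.
    """
    fused = list(pathology_features) + list(radiology_features)
    if len(fused) != len(weights):
        raise ValueError("weights must match the concatenated feature length")
    score = sum(w * v for w, v in zip(weights, fused)) + bias
    return sigmoid(score)


def decision_level_fusion(
    p_pathology: float, p_radiology: float, alpha: float = 0.5
) -> float:
    """Combine per-modality predicted probabilities by a weighted average.

    alpha is a hypothetical mixing weight, not a value from the paper.
    """
    return alpha * p_pathology + (1.0 - alpha) * p_radiology
```

In feature-level fusion the modalities interact inside a single model, whereas decision-level fusion only combines the outputs of two independently trained models. The abstract describes its contribution as a multi-level pipeline that goes beyond using either strategy alone.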

Convergence Analysis of Nonconvex Distributed Stochastic Zeroth-order Coordinate Method

Mar 24, 2021

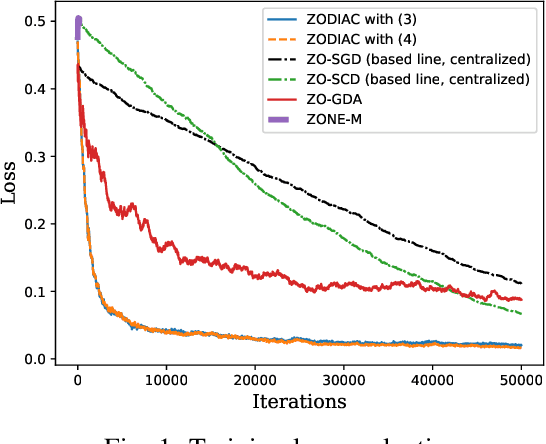

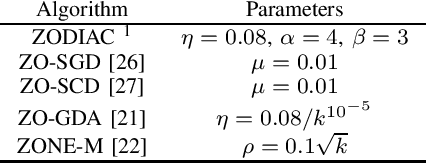

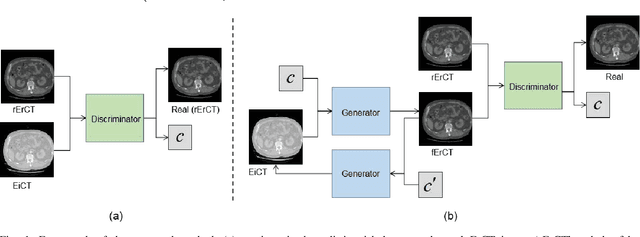

Abstract: This paper investigates the stochastic distributed nonconvex optimization problem of minimizing a global cost function formed by the summation of $n$ local cost functions. We solve this problem using zeroth-order (ZO) information exchange. Specifically, we propose a ZO distributed primal-dual coordinate method (ZODIAC). Each agent approximates its own local stochastic ZO oracle along coordinates with an adaptive smoothing parameter. We show that the proposed algorithm achieves a convergence rate of $\mathcal{O}(\sqrt{p}/\sqrt{T})$ for general nonconvex cost functions. We demonstrate the efficiency of the proposed algorithm through a numerical example, in comparison with existing state-of-the-art centralized and distributed ZO algorithms.
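As a rough illustration of what a coordinate-wise zeroth-order oracle looks like, the sketch below estimates a gradient from function values only, one coordinate at a time. It is a generic two-point finite-difference estimator for a single deterministic function, not the ZODIAC algorithm: the distributed primal-dual updates, the stochastic sampling, and the adaptive smoothing schedule are not specified in the abstract and are not modeled here. The names `zo_coordinate_gradient`, `delta`, and `quadratic` are hypothetical.

```python
from typing import Callable, List, Sequence


def zo_coordinate_gradient(
    f: Callable[[Sequence[float]], float],
    x: Sequence[float],
    delta: float,
) -> List[float]:
    """Estimate the gradient of f at x using only function evaluations.

    For each coordinate i, perturb x by +/- delta along the i-th axis and
    take a central difference. delta plays the role of a smoothing
    parameter; here it is fixed (assumption), not adaptive.
    """
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += delta
        x_minus[i] -= delta
        grad.append((f(x_plus) - f(x_minus)) / (2.0 * delta))
    return grad


def quadratic(x: Sequence[float]) -> float:
    """Toy cost function f(x) = sum_i x_i^2, with true gradient 2x."""
    return sum(v * v for v in x)


estimate = zo_coordinate_gradient(quadratic, [1.0, -2.0, 0.5], delta=1e-3)
```

Each gradient estimate costs $2p$ function evaluations for a $p$-dimensional input, so the dimension $p$ directly affects the cost of such methods. This is consistent with $p$ appearing in the stated convergence rate.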

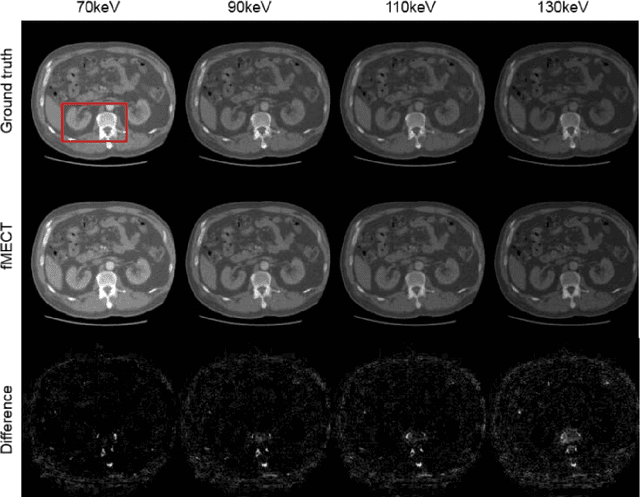

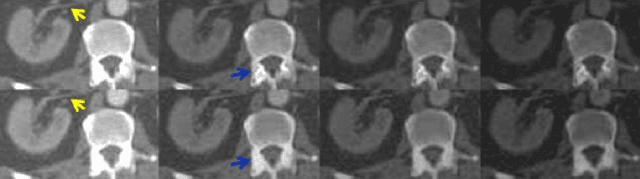

Direct Energy-resolving CT Imaging via Energy-integrating CT images using a Unified Generative Adversarial Network

Oct 14, 2019

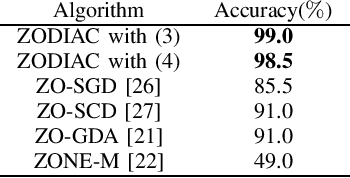

Abstract: Energy-resolving computed tomography (ErCT) can acquire energy-dependent measurements simultaneously and provide quantitative material information with an improved contrast-to-noise ratio. However, an ErCT imaging system is usually equipped with an advanced photon-counting detector, which is expensive and technically complex. As a result, clinical ErCT scanners are not yet commercially available and are in various stages of completion, which makes ErCT images less accessible to researchers. In this work, we investigate producing ErCT images directly from existing energy-integrating CT (EiCT) images via a deep neural network. Specifically, unlike other networks that produce ErCT images at one specific energy, this model employs a unified generative adversarial network (uGAN) that is trained concurrently on EiCT datasets and ErCT datasets at different energies and then performs image-to-image translation from existing EiCT images to multiple ErCT image outputs at various energy bins. In this study, the uGAN generates ErCT images at 70 keV, 90 keV, 110 keV, and 130 keV simultaneously from EiCT images at 140 kVp. We evaluate the uGAN model on a set of over 1380 CT image slices and show that it produces promising ErCT estimates compared with the ground truth, both qualitatively and quantitatively.
