Dominik Narnhofer

Repurposing Protein Language Models for Latent Flow-Based Fitness Optimization

Feb 02, 2026

Abstract:Protein fitness optimization is challenged by a vast combinatorial landscape where high-fitness variants are extremely sparse. Many current methods either underperform or require computationally expensive gradient-based sampling. We present CHASE, a framework that repurposes the evolutionary knowledge of pretrained protein language models by compressing their embeddings into a compact latent space. By training a conditional flow-matching model with classifier-free guidance, we enable the direct generation of high-fitness variants without predictor-based guidance during the ODE sampling steps. CHASE achieves state-of-the-art performance on AAV and GFP protein design benchmarks. Finally, we show that bootstrapping with synthetic data can further enhance performance in data-constrained settings.
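
As a rough illustration of classifier-free guidance inside an ODE sampler, the sketch below blends a conditional and an unconditional velocity and integrates with explicit Euler steps. It is not the CHASE implementation: the blending rule `v_u + w * (v_c - v_u)` is one common convention, and `toy_velocity`, `guided_velocity`, `euler_sample`, and the guidance weight `w` are hypothetical names chosen for this example.

```python
from typing import Callable, List, Optional

Vector = List[float]
# A velocity field takes (state, time, condition); condition None means "unconditional".
Velocity = Callable[[Vector, float, Optional[float]], Vector]


def guided_velocity(v: Velocity, x: Vector, t: float, cond: float, w: float) -> Vector:
    """Blend conditional and unconditional velocities (assumed convention)."""
    v_c = v(x, t, cond)
    v_u = v(x, t, None)
    # w = 0 gives the unconditional field, w = 1 the conditional one,
    # w > 1 extrapolates further towards the condition.
    return [u + w * (c - u) for c, u in zip(v_c, v_u)]


def euler_sample(v: Velocity, x0: Vector, cond: float, w: float, steps: int = 10) -> Vector:
    """Integrate dx/dt = guided velocity from t = 0 to t = 1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt
        g = guided_velocity(v, x, t, cond, w)
        x = [xi + dt * gi for xi, gi in zip(x, g)]
    return x


def toy_velocity(x: Vector, t: float, cond: Optional[float]) -> Vector:
    """Stand-in for a trained network: constant drift equal to the condition."""
    drift = 0.0 if cond is None else cond
    return [drift for _ in x]


sample = euler_sample(toy_velocity, [0.0], cond=1.0, w=2.0, steps=10)
```

In a real model the toy field would be replaced by a single network evaluated with and without the fitness condition; no separate fitness predictor is queried during sampling.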

A Variational Perspective on Generative Protein Fitness Optimization

Jan 31, 2025

Abstract:The goal of protein fitness optimization is to discover new protein variants with enhanced fitness for a given use. The vast search space and the sparsely populated fitness landscape, along with the discrete nature of protein sequences, pose significant challenges when trying to determine the gradient towards configurations with higher fitness. We introduce Variational Latent Generative Protein Optimization (VLGPO), a variational perspective on fitness optimization. Our method embeds protein sequences in a continuous latent space to enable efficient sampling from the fitness distribution and combines a (learned) flow matching prior over sequence mutations with a fitness predictor to guide optimization towards sequences with high fitness. VLGPO achieves state-of-the-art results on two different protein benchmarks of varying complexity. Moreover, the variational design with explicit prior and likelihood functions offers a flexible plug-and-play framework that can be easily customized to suit various protein design tasks.

Video Depth without Video Models

Nov 28, 2024

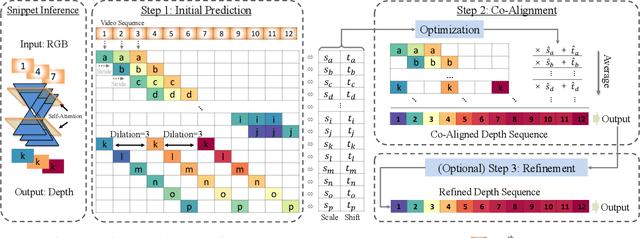

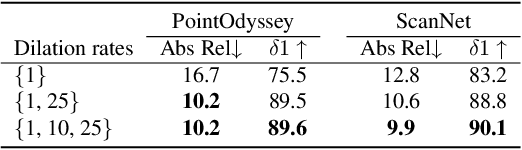

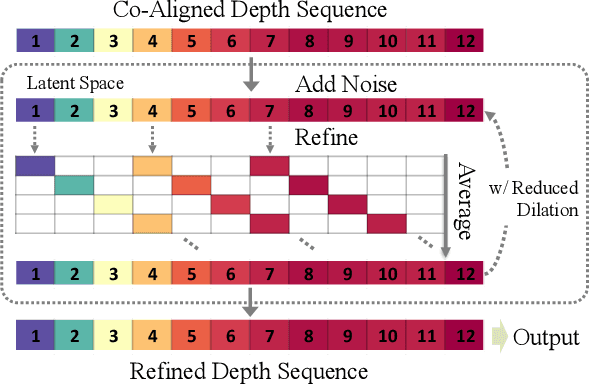

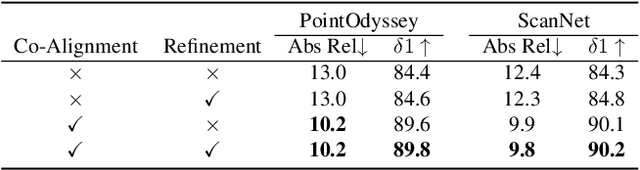

Abstract:Video depth estimation lifts monocular video clips to 3D by inferring dense depth at every frame. Recent advances in single-image depth estimation, brought about by the rise of large foundation models and the use of synthetic training data, have fueled a renewed interest in video depth. However, naively applying a single-image depth estimator to every frame of a video disregards temporal continuity, which not only leads to flickering but may also break when camera motion causes sudden changes in depth range. An obvious and principled solution would be to build on top of video foundation models, but these come with their own limitations, including expensive training and inference, imperfect 3D consistency, and stitching routines for the fixed-length (short) outputs. We take a step back and demonstrate how to turn a single-image latent diffusion model (LDM) into a state-of-the-art video depth estimator. Our model, which we call RollingDepth, has two main ingredients: (i) a multi-frame depth estimator that is derived from a single-image LDM and maps very short video snippets (typically frame triplets) to depth snippets; and (ii) a robust, optimization-based registration algorithm that optimally assembles depth snippets sampled at various frame rates back into a consistent video. RollingDepth is able to efficiently handle long videos with hundreds of frames and delivers more accurate depth videos than both dedicated video depth estimators and high-performing single-frame models. Project page: rollingdepth.github.io.

FlowSDF: Flow Matching for Medical Image Segmentation Using Distance Transforms

May 28, 2024

Abstract:Medical image segmentation is a crucial task that relies on the ability to accurately identify and isolate regions of interest in medical images. Generative approaches make it possible to capture the statistical properties of segmentation masks that are dependent on the respective structures. In this work we propose FlowSDF, an image-guided conditional flow matching framework to represent the signed distance function (SDF), leading to an implicit distribution of segmentation masks. The advantage of leveraging the SDF is a more natural distortion when compared to that of binary masks. By learning a vector field that is directly related to the probability path of a conditional distribution of SDFs, we can accurately sample from the distribution of segmentation masks, allowing for the evaluation of statistical quantities. Thus, this probabilistic representation allows for the generation of uncertainty maps represented by the variance, which can aid in further analysis and enhance the predictive robustness. We qualitatively and quantitatively illustrate competitive performance of the proposed method on a public nuclei and gland segmentation data set, highlighting its utility in medical image segmentation applications.

LoRA-Ensemble: Efficient Uncertainty Modelling for Self-attention Networks

May 23, 2024

Abstract:Numerous crucial tasks in real-world decision-making rely on machine learning algorithms with calibrated uncertainty estimates. However, modern methods often yield overconfident and uncalibrated predictions. Various approaches involve training an ensemble of separate models to quantify the uncertainty related to the model itself, known as epistemic uncertainty. In an explicit implementation, the ensemble approach has high computational cost and high memory requirements. This particular challenge is evident in state-of-the-art neural networks such as transformers, where even a single network is already demanding in terms of compute and memory. Consequently, efforts are made to emulate the ensemble model without actually instantiating separate ensemble members, referred to as implicit ensembling. We introduce LoRA-Ensemble, a parameter-efficient deep ensemble method for self-attention networks, which is based on Low-Rank Adaptation (LoRA). LoRA was initially developed for efficient LLM fine-tuning; we extend it to an implicit ensembling approach. By employing a single pre-trained self-attention network with weights shared across all members, we train member-specific low-rank matrices for the attention projections. Our method exhibits superior calibration compared to explicit ensembles and achieves similar or better accuracy across various prediction tasks and datasets.

MULDE: Multiscale Log-Density Estimation via Denoising Score Matching for Video Anomaly Detection

Mar 21, 2024

Abstract:We propose a novel approach to video anomaly detection: we treat feature vectors extracted from videos as realizations of a random variable with a fixed distribution and model this distribution with a neural network. This lets us estimate the likelihood of test videos and detect video anomalies by thresholding the likelihood estimates. We train our video anomaly detector using a modification of denoising score matching, a method that injects training data with noise to facilitate modeling its distribution. To eliminate hyperparameter selection, we model the distribution of noisy video features across a range of noise levels and introduce a regularizer that tends to align the models for different levels of noise. At test time, we combine anomaly indications at multiple noise scales with a Gaussian mixture model. Running our video anomaly detector induces minimal delays as inference requires merely extracting the features and forward-propagating them through a shallow neural network and a Gaussian mixture model. Our experiments on five popular video anomaly detection benchmarks demonstrate state-of-the-art performance, both in the object-centric and in the frame-centric setup.

Majorization-Minimization for sparse SVMs

Aug 31, 2023

Abstract:Several decades ago, Support Vector Machines (SVMs) were introduced for performing binary classification tasks, under a supervised framework. Nowadays, they often outperform other supervised methods and remain one of the most popular approaches in the machine learning arena. In this work, we investigate the training of SVMs through a smooth sparse-promoting-regularized squared hinge loss minimization. This choice paves the way to the application of quick training methods built on majorization-minimization approaches, benefiting from the Lipschitz differentiability of the loss function. Moreover, the proposed approach allows us to handle sparsity-preserving regularizers promoting the selection of the most significant features, thereby enhancing performance. Numerical tests and comparisons conducted on three different datasets demonstrate the good performance of the proposed methodology in terms of qualitative metrics (accuracy, precision, recall, and F1 score) as well as computational cost.
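
To make the majorization-minimization idea concrete, here is a minimal sketch for a linear SVM with squared hinge loss. It assumes a smoothed l1 penalty `sum(sqrt(w_j^2 + delta^2))` as the sparsity-promoting regularizer, which is one possible choice and not necessarily the one used in the paper. Each step minimizes a quadratic upper bound: the loss is bounded using a Lipschitz constant `L` of its gradient, and the penalty using the concavity of the square root. All function names and parameter values are illustrative.

```python
import math


def objective(w, X, y, lam, delta):
    """Squared hinge loss plus smoothed l1 penalty (assumed regularizer)."""
    loss = 0.0
    for xi, yi in zip(X, y):
        score = sum(wj * xj for wj, xj in zip(w, xi))
        loss += max(0.0, 1.0 - yi * score) ** 2
    reg = sum(math.sqrt(wj * wj + delta * delta) for wj in w)
    return loss + lam * reg


def mm_step(w, X, y, lam, delta, L):
    """Minimize the quadratic majorant built at the current iterate w."""
    grad = [0.0] * len(w)
    for xi, yi in zip(X, y):
        score = sum(wj * xj for wj, xj in zip(w, xi))
        margin = 1.0 - yi * score
        if margin > 0.0:
            for j, xj in enumerate(xi):
                grad[j] -= 2.0 * margin * yi * xj
    new_w = []
    for j, wj in enumerate(w):
        c = math.sqrt(wj * wj + delta * delta)  # curvature weight of the penalty majorant
        new_w.append((L * wj - grad[j]) / (L + lam / c))
    return new_w


def train(X, y, lam=0.1, delta=1e-3, iters=200):
    # 2 * sum of squared entries upper-bounds the Lipschitz constant of the loss gradient.
    L = 2.0 * sum(xj * xj for xi in X for xj in xi)
    w = [0.0] * len(X[0])
    history = [objective(w, X, y, lam, delta)]
    for _ in range(iters):
        w = mm_step(w, X, y, lam, delta, L)
        history.append(objective(w, X, y, lam, delta))
    return w, history


X = [[2.0, 0.1], [1.5, -0.2], [-2.0, 0.0], [-1.0, 0.3]]
y = [1, 1, -1, -1]
w, history = train(X, y)
```

Because every iterate minimizes an upper bound that touches the objective at the previous iterate, the values in `history` should never increase, which is the defining property of an MM scheme.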

Score-Based Generative Models for Medical Image Segmentation using Signed Distance Functions

Mar 10, 2023

Abstract:Medical image segmentation is a crucial task that relies on the ability to accurately identify and isolate regions of interest in images. Generative approaches make it possible to capture the statistical properties of segmentation masks that are dependent on the respective medical images. In this work we propose a conditional score-based generative modeling framework that leverages the signed distance function to represent an implicit and smoother distribution of segmentation masks. The score function of the conditional distribution of segmentation masks is learned in a conditional denoising process, which can be effectively used to generate accurate segmentation masks. Moreover, uncertainty maps can be generated, which can aid in further analysis and thus enhance the predictive robustness. We qualitatively and quantitatively illustrate competitive performance of the proposed method on a public nuclei and gland segmentation data set, highlighting its potential utility in medical image segmentation applications.

Posterior-Variance-Based Error Quantification for Inverse Problems in Imaging

Dec 23, 2022

Abstract:In this work, a method for obtaining pixel-wise error bounds in Bayesian regularization of inverse imaging problems is introduced. The proposed method employs estimates of the posterior variance together with techniques from conformal prediction in order to obtain coverage guarantees for the error bounds, without making any assumption on the underlying data distribution. It is generally applicable to Bayesian regularization approaches, independent, e.g., of the concrete choice of the prior. Furthermore, the coverage guarantees can also be obtained in case only approximate sampling from the posterior is possible. With this in particular, the proposed framework is able to incorporate any learned prior in a black-box manner. Guaranteed coverage without assumptions on the underlying distributions is only achievable since the magnitude of the error bounds is, in general, unknown in advance. Nevertheless, experiments with multiple regularization approaches presented in the paper confirm that in practice, the obtained error bounds are rather tight. To carry out the numerical experiments, a novel primal-dual Langevin algorithm for sampling from non-smooth distributions is also introduced in this work.
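
The way a posterior spread estimate and conformal prediction can combine is easiest to see in the generic split-conformal recipe, sketched below. This is the textbook procedure, not necessarily the exact construction of the paper: absolute errors on a held-out calibration set are divided by the posterior standard deviation, and a finite-sample-corrected quantile of these ratios rescales the standard deviation into an error bound. The names `conformal_scale` and `error_bound` and the toy numbers are assumptions made for this example.

```python
import math


def conformal_scale(abs_errors, post_stds, alpha):
    """Return the factor q such that q * std covers the error with prob. >= 1 - alpha.

    abs_errors: |ground truth - estimate| on calibration data
    post_stds:  matching posterior standard deviations (must be positive)
    """
    scores = sorted(e / s for e, s in zip(abs_errors, post_stds))
    n = len(scores)
    # Finite-sample correction: take the ceil((n + 1) * (1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: only a trivial bound is valid.
        return math.inf
    return scores[k - 1]


def error_bound(post_std, scale):
    """Calibrated error bound for a new pixel with posterior std post_std."""
    return scale * post_std


calib_errors = [0.1, 0.4, 0.2, 0.3, 0.5, 0.6, 0.7, 0.8, 0.9]
calib_stds = [1.0] * 9
q = conformal_scale(calib_errors, calib_stds, alpha=0.5)
bound = error_bound(2.0, q)
```

The coverage statement holds when calibration and test data are exchangeable, and it does not require the posterior standard deviation to be accurate. A poor spread estimate only makes the resulting bounds looser, which is why the size of the bound cannot be fixed in advance.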

Bayesian Uncertainty Estimation of Learned Variational MRI Reconstruction

Feb 12, 2021

Abstract:Recent deep learning approaches focus on improving quantitative scores of dedicated benchmarks, and therefore only reduce the observation-related (aleatoric) uncertainty. However, the model-immanent (epistemic) uncertainty is less frequently systematically analyzed. In this work, we introduce a Bayesian variational framework to quantify the epistemic uncertainty. To this end, we solve the linear inverse problem of undersampled MRI reconstruction in a variational setting. The associated energy functional is composed of a data fidelity term and the total deep variation (TDV) as a learned parametric regularizer. To estimate the epistemic uncertainty we draw the parameters of the TDV regularizer from a multivariate Gaussian distribution, whose mean and covariance matrix are learned in a stochastic optimal control problem. In several numerical experiments, we demonstrate that our approach yields competitive results for undersampled MRI reconstruction. Moreover, we can accurately quantify the pixelwise epistemic uncertainty, which can serve radiologists as an additional resource to visualize reconstruction reliability.
