Chao Qian

College of Chemical and Biological Engineering, Zhejiang Provincial Key Laboratory of Advanced Chemical Engineering Manufacture Technology, Zhejiang University, Hangzhou, P. R. China; Zhejiang Provincial Innovation Center of Advanced Chemicals Technology, Institute of Zhejiang University - Quzhou, P. R. China

Re$^{\text{2}}$MaP: Macro Placement by Recursively Prototyping and Packing Tree-based Relocating

Nov 11, 2025

Abstract: This work introduces the Re$^{\text{2}}$MaP method, which generates expert-quality macro placements through recursively prototyping and packing tree-based relocating. We first perform multi-level macro grouping and PPA-aware cell clustering to produce a unified connection matrix that captures both wirelength and dataflow among macros and clusters. Next, we use DREAMPlace to build a mixed-size placement prototype and obtain reference positions for each macro and cluster. Based on this prototype, we introduce ABPlace, an angle-based analytical method that optimizes macro positions on an ellipse to distribute macros uniformly near the chip periphery while optimizing wirelength and dataflow. A packing tree-based relocating procedure is then designed to jointly adjust the locations of macro groups and the macros within each group, by optimizing an expertise-inspired cost function that captures various design constraints through evolutionary search. Re$^{\text{2}}$MaP repeats the above process: only a subset of macro groups is positioned in each iteration, and the remaining macros are deferred to the next iteration to improve the prototype's accuracy. Using a well-established backend flow with sufficient timing optimizations, Re$^{\text{2}}$MaP achieves up to 22.22% (average 10.26%) improvement in worst negative slack (WNS) and up to 97.91% (average 33.97%) improvement in total negative slack (TNS) compared to the state-of-the-art academic placer Hier-RTLMP. It also ranks higher on WNS, TNS, power, design rule check (DRC) violations, and runtime than the conference version ReMaP, across seven tested cases. Our code is available at https://github.com/lamda-bbo/Re2MaP.
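The idea of describing each macro by an angle on an ellipse can be illustrated with a minimal sketch. It shows only the angle-to-coordinate mapping and a uniform angular spread. The function names, the `margin` parameter, and the choice of an axis-aligned ellipse centered in the chip are assumptions made for illustration; the wirelength and dataflow terms that ABPlace optimizes are not modeled here.

```python
import math

def ellipse_position(theta, width, height, margin=0.0):
    """Map an angle to a point on an axis-aligned ellipse centered in the chip.

    Hypothetical helper: the ellipse semi-axes are the chip half-sizes
    shrunk by `margin`, so points lie near the chip periphery.
    """
    a = width / 2.0 - margin   # horizontal semi-axis
    b = height / 2.0 - margin  # vertical semi-axis
    cx, cy = width / 2.0, height / 2.0
    return (cx + a * math.cos(theta), cy + b * math.sin(theta))

def uniform_angles(n):
    """Return n angles spread evenly over [0, 2*pi)."""
    return [2.0 * math.pi * i / n for i in range(n)]

def place_on_ellipse(n, width, height, margin=0.0):
    """Place n macro centers at evenly spaced angles on the ellipse."""
    return [ellipse_position(t, width, height, margin) for t in uniform_angles(n)]
```

In an analytical formulation, the angles would be the optimization variables, which keeps every macro on the ellipse by construction.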

ReLAM: Learning Anticipation Model for Rewarding Visual Robotic Manipulation

Sep 26, 2025

Abstract: Reward design remains a critical bottleneck in visual reinforcement learning (RL) for robotic manipulation. In simulated environments, rewards are conventionally designed based on the distance to a target position. However, such precise positional information is often unavailable in real-world visual settings due to sensory and perceptual limitations. In this study, we propose a method that implicitly infers spatial distances through keypoints extracted from images. Building on this, we introduce Reward Learning with Anticipation Model (ReLAM), a novel framework that automatically generates dense, structured rewards from action-free video demonstrations. ReLAM first learns an anticipation model that serves as a planner and proposes intermediate keypoint-based subgoals on the optimal path to the final goal, creating a structured learning curriculum directly aligned with the task's geometric objectives. Based on the anticipated subgoals, a continuous reward signal is provided to train a low-level, goal-conditioned policy under the hierarchical reinforcement learning (HRL) framework with a provable sub-optimality bound. Extensive experiments on complex, long-horizon manipulation tasks show that ReLAM significantly accelerates learning and achieves superior performance compared to state-of-the-art methods.

Not Just for Archiving: Provable Benefits of Reusing the Archive in Evolutionary Multi-objective Optimization

Aug 23, 2025

Abstract: Evolutionary Algorithms (EAs) have become the most popular tool for solving widespread multi-objective optimization problems. In Multi-Objective EAs (MOEAs), there is increasing interest in using an archive to store non-dominated solutions generated during the search. This approach can 1) mitigate the effects of population oscillation, a common issue in many MOEAs, and 2) allow for the use of smaller, more practical population sizes. In this paper, we analytically show that the archive can help MOEAs even further when its solutions are reused in the generation of new solutions. We first prove that using a small population size alongside an archive (without incorporating archived solutions in the generation process) may fail on certain problems, as the population may remove previously discovered but promising solutions. We then prove that reusing archive solutions can overcome this limitation, resulting in at least a polynomial speedup on the expected running time. Our analysis focuses on the well-established SMS-EMOA algorithm applied to the commonly studied OneJumpZeroJump problem as well as one of its variants. We also show that reusing archive solutions can be better than using a large population size directly. Finally, we show that our theoretical findings generally hold in practice through experiments on four well-known practical optimization problems (multi-objective 0-1 Knapsack, TSP, QAP, and NK-landscape) with realistic settings.
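For readers unfamiliar with the benchmark, the following minimal sketch shows the OneJumpZeroJump objectives as they are commonly defined in the runtime-analysis literature (both objectives maximized, jump parameter `k`), together with a simple non-dominated archive. The function names and the list-of-pairs archive layout are assumptions for illustration; this is not the SMS-EMOA variant analyzed in the paper.

```python
def one_jump_zero_jump(x, k):
    """Bi-objective OneJumpZeroJump value of bit list x (both maximized)."""
    n = len(x)
    ones = sum(x)
    zeros = n - ones
    # First objective rewards ones, with a valley of width k before 1^n.
    f1 = k + ones if (ones <= n - k or ones == n) else n - ones
    # Second objective is symmetric and rewards zeros.
    f2 = k + zeros if (zeros <= n - k or zeros == n) else n - zeros
    return (f1, f2)

def dominates(u, v):
    """True if objective vector u Pareto-dominates v (maximization)."""
    return all(a >= b for a, b in zip(u, v)) and any(a > b for a, b in zip(u, v))

def update_archive(archive, candidate):
    """Return a new archive of (solution, objectives) pairs after offering candidate."""
    _, obj = candidate
    # Reject the candidate if an archived point dominates or duplicates it.
    if any(dominates(o, obj) or o == obj for _, o in archive):
        return archive
    # Otherwise drop everything the candidate dominates and add it.
    return [(s, o) for s, o in archive if not dominates(obj, o)] + [candidate]
```

Reusing the archive, in the sense discussed above, would mean drawing parents from `archive` as well as from the population when generating offspring.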

Quality-Diversity Red-Teaming: Automated Generation of High-Quality and Diverse Attackers for Large Language Models

Jun 08, 2025

Abstract: Ensuring the safety of large language models (LLMs) is important. Red teaming, a systematic approach to identifying adversarial prompts that elicit harmful responses from target LLMs, has emerged as a crucial safety evaluation method. Within this framework, the diversity of adversarial prompts is essential for comprehensive safety assessments. We find that previous approaches to red-teaming may suffer from two key limitations. First, they often pursue diversity through simplistic metrics like word frequency or sentence embedding similarity, which may not capture meaningful variation in attack strategies. Second, the common practice of training a single attacker model restricts coverage across potential attack styles and risk categories. This paper introduces Quality-Diversity Red-Teaming (QDRT), a new framework designed to address these limitations. QDRT achieves goal-driven diversity through behavior-conditioned training and implements a behavioral replay buffer in an open-ended manner. Additionally, it trains multiple specialized attackers capable of generating high-quality attacks across diverse styles and risk categories. Our empirical evaluation demonstrates that QDRT generates attacks that are both more diverse and more effective against a wide range of target LLMs, including GPT-2, Llama-3, Gemma-2, and Qwen2.5. This work advances the field of LLM safety by providing a systematic and effective approach to automated red-teaming, ultimately supporting the responsible deployment of LLMs.

Towards Universal Offline Black-Box Optimization via Learning Language Model Embeddings

Jun 08, 2025

Abstract: The pursuit of universal black-box optimization (BBO) algorithms is a longstanding goal. However, unlike domains such as language or vision, where scaling structured data has driven generalization, progress in offline BBO remains hindered by the lack of unified representations for heterogeneous numerical spaces. Thus, existing offline BBO approaches are constrained to single-task and fixed-dimensional settings, failing to achieve cross-domain universal optimization. Recent advances in language models (LMs) offer a promising path forward: their embeddings capture latent relationships in a unifying way, making universal optimization across different data types possible. In this paper, we discuss multiple potential approaches, including an end-to-end learning framework in the form of next-token prediction, as well as prioritizing the learning of latent spaces with strong representational capabilities. To validate the effectiveness of these methods, we collect offline BBO tasks and data from open-source academic works for training. Experiments demonstrate the universality and effectiveness of our proposed methods. Our findings suggest that unifying language model priors and learning a string embedding space can overcome traditional barriers in universal BBO, paving the way for general-purpose BBO algorithms. The code is provided at https://github.com/lamda-bbo/universal-offline-bbo.

Runtime Analysis of Evolutionary NAS for Multiclass Classification

Jun 06, 2025

Abstract: Evolutionary neural architecture search (ENAS) is a key part of evolutionary machine learning, which commonly utilizes evolutionary algorithms (EAs) to automatically design high-performing deep neural architectures. In recent years, various ENAS methods have been proposed with exceptional performance. However, theoretical research on ENAS is still in its infancy. In this work, we take a step toward the runtime analysis, an essential theoretical aspect of EAs, of ENAS on multiclass classification problems. Specifically, we first propose a benchmark to lay the groundwork for the analysis. Furthermore, we design a two-level search space, making it suitable for multiclass classification problems and consistent with the common settings of ENAS. Based on both designs, we consider (1+1)-ENAS algorithms with one-bit and bit-wise mutations, and analyze their upper and lower bounds on the expected runtime. We prove that the algorithm using both mutations can find the optimum with the expected runtime upper bound of $O(rM\ln{rM})$ and lower bound of $\Omega(rM\ln{M})$. This suggests that a simple one-bit mutation deserves serious consideration, given that most state-of-the-art ENAS methods are laboriously designed with the bit-wise mutation. Empirical studies also support our theoretical proof.
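The difference between the two mutation operators can be sketched on plain bit strings. This is a generic (1+1) EA under assumed names (`one_bit_mutation`, `bitwise_mutation`, `one_plus_one_ea`); it does not reproduce the paper's two-level search space or its benchmark, and the default bit-wise rate of 1/n is the conventional choice rather than something stated above.

```python
import random

def one_bit_mutation(x, rng):
    """Flip exactly one uniformly chosen bit and return a new list."""
    i = rng.randrange(len(x))
    y = list(x)
    y[i] = 1 - y[i]
    return y

def bitwise_mutation(x, rng, rate=None):
    """Flip each bit independently with probability `rate` (default 1/n)."""
    p = 1.0 / len(x) if rate is None else rate
    return [1 - b if rng.random() < p else b for b in x]

def one_plus_one_ea(fitness, x, mutate, steps, rng):
    """Elitist (1+1) EA: keep the offspring if it is at least as fit."""
    fx = fitness(x)
    for _ in range(steps):
        y = mutate(x, rng)
        fy = fitness(y)
        if fy >= fx:
            x, fx = y, fy
    return x, fx
```

For example, `one_plus_one_ea(sum, [0] * 20, one_bit_mutation, 500, random.Random(1))` runs the one-bit variant on the OneMax function, used here only as a stand-in fitness.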

Automating Versatile Time-Series Analysis with Tiny Transformers on Embedded FPGAs

May 23, 2025

Abstract: Transformer-based models have shown strong performance across diverse time-series tasks, but their deployment on resource-constrained devices remains challenging due to high memory and computational demands. While prior work targeting Microcontroller Units (MCUs) has explored hardware-specific optimizations, such approaches are often task-specific and limited to 8-bit fixed-point precision. Field-Programmable Gate Arrays (FPGAs) offer greater flexibility, enabling fine-grained control over data precision and architecture. However, existing FPGA-based deployments of Transformers for time-series analysis typically focus on high-density platforms with manual configuration. This paper presents a unified and fully automated deployment framework for Tiny Transformers on embedded FPGAs. Our framework supports a compact encoder-only Transformer architecture across three representative time-series tasks (forecasting, classification, and anomaly detection). It combines quantization-aware training (down to 4 bits), hardware-aware hyperparameter search using Optuna, and automatic VHDL generation for seamless deployment. We evaluate our framework on six public datasets across two embedded FPGA platforms. Results show that our framework produces integer-only, task-specific Transformer accelerators achieving as low as 0.033 mJ per inference with millisecond latency on AMD Spartan-7, while also providing insights into deployment feasibility on Lattice iCE40. All source code will be released in the GitHub repository (https://github.com/Edwina1030/TinyTransformer4TS).

Open3DBench: Open-Source Benchmark for 3D-IC Backend Implementation and PPA Evaluation

Mar 17, 2025

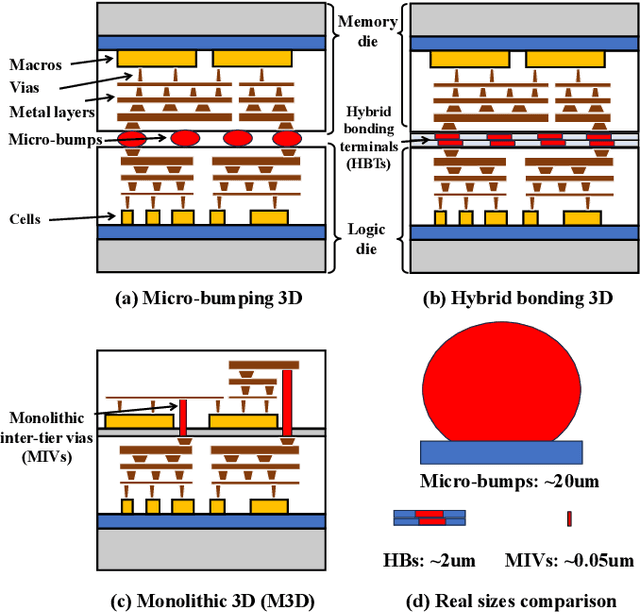

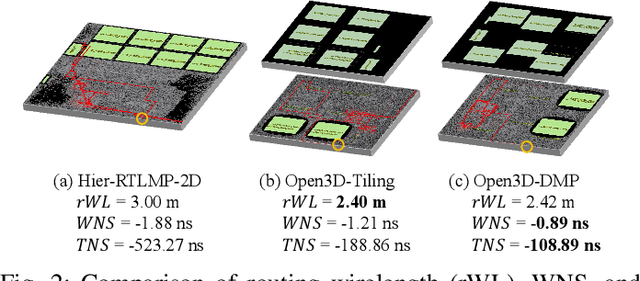

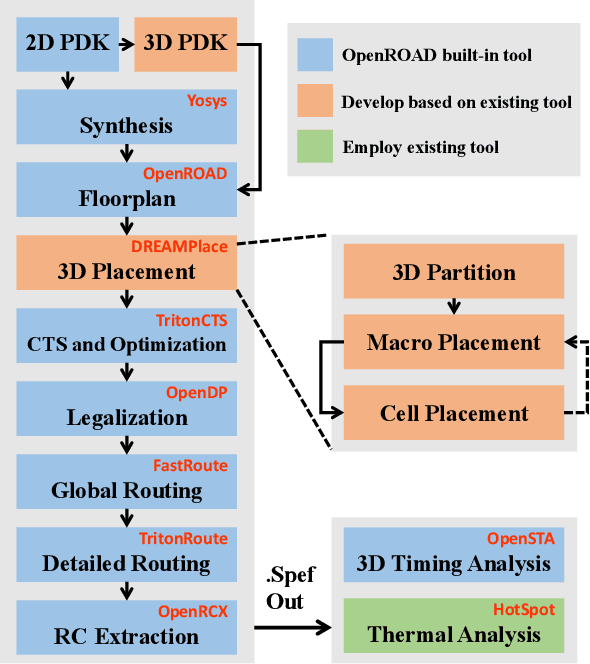

Abstract: This work introduces Open3DBench, an open-source 3D-IC backend implementation benchmark built upon the OpenROAD-flow-scripts framework, enabling comprehensive evaluation of power, performance, area, and thermal metrics. Our proposed flow supports modular integration of 3D partitioning, placement, 3D routing, RC extraction, and thermal simulation, aligning with advanced 3D flows that rely on commercial tools and in-house scripts. We present two foundational 3D placement algorithms: Open3D-Tiling, which emphasizes regular macro placement, and Open3D-DMP, which enhances wirelength optimization through cross-die co-placement with the analytical placer DREAMPlace. Experimental results show significant improvements in area (51.19%), wirelength (24.06%), timing (30.84%), and power (5.72%) compared to 2D flows. The results also highlight that better wirelength does not necessarily lead to PPA gains, emphasizing the need to develop PPA-driven methods. Open3DBench offers a standardized, reproducible platform for evaluating 3D EDA methods, effectively bridging the gap between open-source tools and commercial solutions in 3D-IC design.

Stochastic Population Update Provably Needs An Archive in Evolutionary Multi-objective Optimization

Jan 28, 2025

Abstract: Evolutionary algorithms (EAs) have been widely applied to multi-objective optimization, due to their nature of population-based search. Population update, a key component in multi-objective EAs (MOEAs), is usually performed in a greedy, deterministic manner. However, recent studies have questioned this practice and shown that stochastic population update (SPU), which gives inferior solutions a chance to be preserved, can help MOEAs jump out of local optima more easily. While introducing randomness in the population update process boosts the exploration of MOEAs, there is a drawback: the population may not always preserve the very best solutions found, and thus a large population is needed. Intuitively, a possible solution to this issue is to introduce an archive that stores the best solutions ever found. In this paper, we theoretically show that using an archive can allow a small population and accelerate the search of SPU-based MOEAs substantially. Specifically, we analyze the expected running time of two well-established MOEAs, SMS-EMOA and NSGA-II, with SPU for solving a commonly studied bi-objective problem, OneJumpZeroJump, and prove that using an archive can bring (even exponential) speedups. The comparison between SMS-EMOA and NSGA-II also suggests that the $(\mu+\mu)$ update mode may be more suitable for SPU than the $(\mu+1)$ update mode. Furthermore, our derived running time bounds for using SPU alone are significantly tighter than previously known ones. Our theoretical findings are also empirically validated on a well-known practical problem, the multi-objective traveling salesperson problem. We hope this work may provide theoretical support to explore different ideas of designing algorithms in evolutionary multi-objective optimization.

Pareto Set Learning for Multi-Objective Reinforcement Learning

Jan 14, 2025

Abstract: Multi-objective decision-making problems have emerged in numerous real-world scenarios, such as video games, navigation, and robotics. Considering the clear advantages of Reinforcement Learning (RL) in optimizing decision-making processes, researchers have delved into the development of Multi-Objective RL (MORL) methods for solving multi-objective decision problems. However, previous methods either cannot obtain the entire Pareto front, or employ only a single policy network for all the preferences over multiple objectives, which may not produce personalized solutions for each preference. To address these limitations, we propose a novel decomposition-based framework for MORL, Pareto Set Learning for MORL (PSL-MORL), that harnesses the generation capability of a hypernetwork to produce the parameters of the policy network for each decomposition weight, generating relatively distinct policies for various scalarized subproblems with high efficiency. PSL-MORL is a general framework that is compatible with any RL algorithm. The theoretical result guarantees the superiority of the model capacity of PSL-MORL and the optimality of the obtained policy network. Through extensive experiments on diverse benchmarks, we demonstrate the effectiveness of PSL-MORL in achieving dense coverage of the Pareto front, significantly outperforming state-of-the-art MORL methods in the hypervolume and sparsity indicators.
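The hypernetwork idea, in which a preference (decomposition weight) vector is mapped to the parameters of a policy, can be sketched in a few lines. Everything here is an illustrative assumption: the hypernetwork is a single untrained linear map, the policy is a greedy linear scorer over discrete actions, and the names `generate_policy_params`, `linear_policy`, and `scalarize` are hypothetical. The actual PSL-MORL architecture and training procedure are not described in enough detail above to reproduce.

```python
def generate_policy_params(hyper, preference):
    """Linear hypernetwork: policy parameters = weights @ preference + bias."""
    weights, bias = hyper
    return [sum(w * p for w, p in zip(row, preference)) + b
            for row, b in zip(weights, bias)]

def linear_policy(params, state, num_actions):
    """Greedy policy that scores each action with its own slice of params."""
    d = len(state)
    scores = [sum(params[a * d + j] * state[j] for j in range(d))
              for a in range(num_actions)]
    return max(range(num_actions), key=scores.__getitem__)

def scalarize(rewards, preference):
    """Weighted-sum scalarization of a multi-objective reward vector."""
    return sum(r * p for r, p in zip(rewards, preference))

# Usage: two objectives, a 2-dimensional state, two actions (4 policy parameters).
hyper = ([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [1.0, 1.0]], [0.0] * 4)
params = generate_policy_params(hyper, [0.25, 0.75])
action = linear_policy(params, [1.0, 0.0], num_actions=2)
```

Because the preference is an input to the generator, each weight vector yields its own policy parameters, and the scalarized reward for that weight is what an underlying RL algorithm would optimize.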
