Alberto Quattrini Li

RENEW: Risk- and Energy-Aware Navigation in Dynamic Waterways

Jan 23, 2026

Abstract:We present RENEW, a global path planner for Autonomous Surface Vehicles (ASVs) in dynamic environments with external disturbances (e.g., water currents). RENEW introduces a unified risk- and energy-aware strategy that ensures safety by dynamically identifying non-navigable regions and enforcing adaptive safety constraints. Inspired by maritime contingency planning, it employs a best-effort strategy to maintain control under adverse conditions. The hierarchical architecture combines high-level constrained triangulation for topological diversity with low-level trajectory optimization within safe corridors. Validated with real-world ocean data, RENEW is the first framework to jointly address adaptive non-navigability and topological path diversity for robust maritime navigation.

Underwater Dense Mapping with the First Compact 3D Sonar

Oct 21, 2025

Abstract:In the past decade, the adoption of compact 3D range sensors, such as LiDARs, has driven the development of robust state-estimation pipelines, making them a standard sensor for aerial, ground, and space autonomy. Unfortunately, poor propagation of electromagnetic waves underwater has limited the visibility-independent sensing options for underwater state estimation to acoustic range sensors, which provide 2D information that is, at best, spatially ambiguous. This paper, to the best of our knowledge, is the first study examining the performance, capacity, and opportunities arising from the recent introduction of the first compact 3D sonar. To that end, we introduce calibration procedures for extracting the extrinsics between the 3D sonar and a camera, and we provide a study of acoustic response on different surfaces and materials. Moreover, we provide novel mapping and SLAM pipelines tested in deployments in underwater cave systems and other geometrically and acoustically challenging underwater environments. Our assessment showcases the unique capacity of 3D sonars to capture consistent spatial information, allowing for detailed reconstructions and localization in datasets extending over hundreds of meters. At the same time, it highlights remaining challenges related to acoustic propagation, which are also found in other acoustic sensors. Datasets collected for our evaluations will be released and shared with the community to enable further research advancements.

Mapping the Catacombs: An Underwater Cave Segment of the Devil's Eye System

Jul 08, 2025

Abstract:This paper presents a framework for mapping underwater caves. Underwater caves are crucial for fresh water resource management, underwater archaeology, and hydrogeology. Mapping the cave's outline and dimensions, as well as creating photorealistic 3D maps, is critical for enabling a better understanding of this underwater domain. In this paper, we present the mapping of an underwater cave segment (the catacombs) of the Devil's Eye cave system at Ginnie Springs, FL. We utilized a set of inexpensive action cameras in conjunction with a dive computer to estimate the trajectories of the cameras together with a sparse point cloud. The resulting reconstructions are utilized to produce a one-dimensional retract of the cave passages in the form of the average trajectory together with the boundaries (top, bottom, left, and right). The use of the dive computer enables the observability of the z-dimension in addition to the roll and pitch in a visual/inertial framework (SVIn2). In addition, the keyframes generated by SVIn2 together with the estimated camera poses for select areas are used as input to a global optimization (bundle adjustment) framework -- COLMAP -- in order to produce a dense reconstruction of those areas. The same cave segment is manually surveyed using the MNemo V2 instrument, providing an additional set of measurements validating the proposed approach. It is worth noting that with the use of action cameras, the primary components of a cave map can be constructed. Furthermore, with the utilization of a global optimization framework guided by the results of VI-SLAM package SVIn2, photorealistic dense 3D representations of selected areas can be reconstructed.

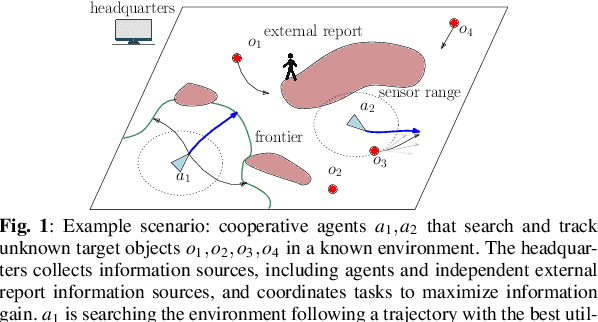

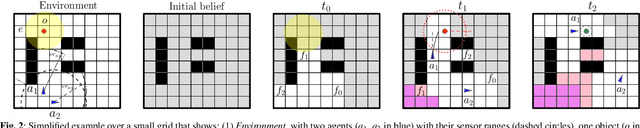

Multi-Object Active Search and Tracking by Multiple Agents in Untrusted, Dynamically Changing Environments

Feb 03, 2025

Abstract:This paper addresses the problem of both actively searching and tracking multiple unknown dynamic objects in a known environment with multiple cooperative autonomous agents with partial observability. The tracking of a target ends when the uncertainty is below a threshold. Current methods typically assume homogeneous agents without access to external information and utilize short-horizon target predictive models. Such assumptions limit real-world applications. We propose a fully integrated pipeline where the main contributions are: (1) a time-varying weighted belief representation capable of handling knowledge that changes over time, which includes external reports of varying levels of trustworthiness in addition to the agents; (2) the integration of a Long Short-Term Memory (LSTM)-based trajectory prediction within the optimization framework for long-horizon decision-making, which reasons in time-configuration space, thus increasing responsiveness; and (3) a comprehensive system that accounts for multiple agents and enables information-driven optimization. When communication is available, our strategy consolidates exploration results collected asynchronously by agents and external sources into a headquarters, which can allocate each agent to maximize the overall team's utility, using all available information. We tested our approach extensively in simulations against baselines, and in robustness and ablation studies. In addition, we performed experiments in a 3D physics-based robot simulator to test the applicability in the real world, as well as with real-world trajectories obtained from an oceanography computational fluid dynamics simulator. Results show the effectiveness of our method, which achieves mission completion times 1.3 to 3.2 times faster in finding all targets, even under the most challenging scenarios where the number of targets is 5 times greater than that of the agents.

An Untethered Bioinspired Robotic Tensegrity Dolphin with Multi-Flexibility Design for Aquatic Locomotion

Nov 01, 2024

Abstract:This paper presents the first steps toward a soft dolphin robot using a bio-inspired approach to mimic dolphin flexibility. The current dolphin robot uses a minimalist approach, with only two cable-driven degrees of freedom actuated by a pair of motors. The actuated tail moves up and down in a swimming motion, but this first proof of concept does not permit controlled turns of the robot. While existing robotic dolphins typically use revolute joints to articulate rigid bodies, our design -- which will be made open source -- incorporates a flexible tail with tunable silicone skin and actuation flexibility via a cable-driven system, which mimics muscle dynamics, and design flexibility with a tunable skeleton structure. The design is also tunable since the backbone can be easily printed in various geometries. The paper provides insights into how a few such variations affect robot motion and efficiency, measured by speed and cost of transport (COT). This approach demonstrates the potential of achieving dolphin-like motion through enhanced flexibility in bio-inspired robotics.
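
The abstract names cost of transport (COT) as an efficiency measure but does not state the formula used. A minimal sketch, assuming the common dimensionless definition COT = P / (m g v); the function name and parameters below are illustrative, not taken from the paper:

```python
def cost_of_transport(power_w: float, mass_kg: float, speed_m_s: float,
                      g: float = 9.81) -> float:
    """Dimensionless cost of transport, COT = P / (m * g * v).

    Assumed definition: the paper may instead use energy per unit
    mass and distance, which differs by the factor g.
    """
    if mass_kg <= 0 or speed_m_s <= 0:
        raise ValueError("mass and speed must be positive")
    return power_w / (mass_kg * g * speed_m_s)


# Hypothetical numbers, for illustration only: 20 W, 4 kg, 0.25 m/s.
example_cot = cost_of_transport(20.0, 4.0, 0.25)
```

Lower values indicate more efficient locomotion, so design variants can be compared at their respective measured speeds.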

Active Learning-augmented Intention-aware Obstacle Avoidance of Autonomous Surface Vehicles in High-traffic Waters

Nov 01, 2024

Abstract:This paper enhances the obstacle avoidance of Autonomous Surface Vehicles (ASVs) for safe navigation in high-traffic waters by actively estimating obstacles' passing intention and reducing its uncertainty. We introduce a topological modeling of the passing intention of obstacles, which can be applied to varying encounter situations based on the inherent embedding of topological concepts in COLREGs. With a Long Short-Term Memory (LSTM) neural network, we classify the passing intention of obstacles. Then, for determining the ASV maneuver, we propose a multi-objective optimization framework including information gain about the passing obstacle intention and safety. We validate the proposed approach under extensive Monte Carlo simulations (2,400 runs) with a varying number of obstacles, dynamic properties, encounter situations, and different behavioral patterns of obstacles (cooperative, non-cooperative). We also present the results from a real marine accident case study as well as real-world experiments with an ASV under environmental disturbances, showing successful collision avoidance with our strategy in real time.

Multi-modal Perception Dataset of In-water Objects for Autonomous Surface Vehicles

Apr 29, 2024

Abstract:This paper introduces the first publicly accessible multi-modal perception dataset for autonomous maritime navigation, focusing on in-water obstacles within the aquatic environment to enhance situational awareness for Autonomous Surface Vehicles (ASVs). This dataset, consisting of diverse objects encountered under varying environmental conditions, aims to bridge the research gap in marine robotics by providing a multi-modal, annotated, and ego-centric perception dataset for object detection and classification. We also show the applicability of the proposed dataset's framework using deep learning-based open-source perception algorithms that have shown success. We expect that our dataset will contribute to the development of the marine autonomy pipeline and marine (field) robotics. Please note this is a work-in-progress paper about our ongoing research that we plan to release in full via future publication.

Improving the perception of visual fiducial markers in the field using Adaptive Active Exposure Control

Apr 18, 2024

Abstract:Accurate localization is fundamental for autonomous underwater vehicles (AUVs) to carry out precise tasks, such as manipulation and construction. Vision-based solutions using fiducial markers are promising, but extremely challenging underwater because of harsh lighting conditions. This paper introduces a gradient-based active camera exposure control method to tackle sharp lighting variations during image acquisition, which can establish a better foundation for subsequent image enhancement procedures. Considering a typical scenario for underwater operations where visual tags are used, we conducted several experiments comparing our method with other state-of-the-art exposure control methods, including Active Exposure Control (AEC) and Gradient-based Exposure Control (GEC). Results show a significant improvement in the accuracy of robot localization. This method is an important component that can be used in a vision-based state estimation pipeline to improve the overall localization accuracy.
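
The abstract does not spell out the exposure metric, so the following is only a generic illustration of the idea behind gradient-based exposure selection: score each candidate frame by its mean gradient magnitude and keep the highest-scoring one. It is not the paper's method, nor AEC or GEC; `gradient_score` and `pick_best_frame` are hypothetical names.

```python
import numpy as np


def gradient_score(image) -> float:
    """Mean gradient magnitude of a 2D grayscale image (assumed metric)."""
    gy, gx = np.gradient(np.asarray(image, dtype=float))
    return float(np.mean(np.hypot(gx, gy)))


def pick_best_frame(frames) -> int:
    """Index of the candidate frame with the most gradient information."""
    scores = [gradient_score(frame) for frame in frames]
    return int(np.argmax(scores))


# A saturated (flat) frame carries no gradient; a ramp does.
flat = np.ones((5, 5))
ramp = np.tile(np.arange(5.0), (5, 1))
best = pick_best_frame([flat, ramp])
```

In a real controller the candidate frames would come from different exposure settings, and the chosen setting would be fed back to the camera.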

Scalable underwater assembly with reconfigurable visual fiducials

Oct 30, 2023

Abstract:We present a scalable combined localization infrastructure deployment and task planning algorithm for underwater assembly. Infrastructure is autonomously modified to suit the needs of manipulation tasks based on an uncertainty model on the infrastructure's positional accuracy. Our uncertainty model can be combined with the noise characteristics from multiple devices. For the task planning problem, we propose a layer-based clustering approach that completes the manipulation tasks one cluster at a time. We employ movable visual fiducial markers as infrastructure and an autonomous underwater vehicle (AUV) for manipulation tasks. The proposed task planning algorithm is computationally simple, and we implement it on an AUV without any offline computation requirements. Combined hardware experiments and simulations over large datasets show that the proposed technique is scalable to large areas.

Buoyancy enabled autonomous underwater construction with cement blocks

May 09, 2023

Abstract:We present the first free-floating autonomous underwater construction system capable of using active ballasting to transport cement building blocks efficiently. It is the first free-floating autonomous construction robot to use a paired set of resources: compressed air for buoyancy and a battery for thrusters. In construction trials, our system built structures of up to 12 components weighing up to 100 kg (75 kg in water). Our system achieves this performance by combining a novel one-degree-of-freedom manipulator, a novel two-component cement block construction system that corrects errors in placement, and a simple active ballasting system combined with compliant placement and grasp behaviors. The passive error-correcting components of the system minimize the required complexity in sensing and control. We also explore the problem of buoyancy allocation for building structures at scale by defining a convex program which allocates buoyancy to minimize the predicted energy cost for transporting blocks.
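
As a back-of-the-envelope illustration of active ballasting (not the paper's convex program), the air volume needed to make a load neutrally buoyant follows from Archimedes' principle: the displaced water must weigh as much as the load does in water. The sketch below assumes fresh water at 1000 kg/m^3 and an air volume measured at ambient pressure; `air_volume_m3` is a hypothetical helper.

```python
FRESH_WATER_DENSITY = 1000.0  # kg/m^3, assumed; seawater is closer to 1025


def air_volume_m3(in_water_mass_kg: float,
                  water_density: float = FRESH_WATER_DENSITY) -> float:
    """Air volume (at ambient pressure) that offsets a given in-water weight."""
    if in_water_mass_kg < 0 or water_density <= 0:
        raise ValueError("mass must be non-negative and density positive")
    return in_water_mass_kg / water_density


# The abstract's 75 kg in-water figure corresponds to about 75 litres of air.
volume_litres = air_volume_m3(75.0) * 1000.0
```

The mass of the air itself and its compression with depth are ignored here; both matter when budgeting a compressed-air supply.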
