Marios Xanthidis

Sim2Swim: Zero-Shot Velocity Control for Agile AUV Maneuvering in 3 Minutes

Dec 09, 2025

Abstract: Holonomic autonomous underwater vehicles (AUVs) have the hardware capability for agile maneuvering in both translational and rotational degrees of freedom (DOFs). However, control is difficult because of challenges inherent to underwater vehicles, such as complex hydrostatics and hydrodynamics, parametric uncertainties, and frequent changes in dynamics caused by payload changes. Performance typically relies on carefully tuned controllers targeting unique platform configurations, which must be re-tuned for deployment under varying payloads and hydrodynamic conditions. As a consequence, agile maneuvering with simultaneous tracking of time-varying references in both translational and rotational DOFs is rarely utilized in practice. To the best of our knowledge, this paper presents the first general zero-shot sim2real velocity controller based on deep reinforcement learning (DRL) that enables path following and agile 6DOF maneuvering with a training duration of just 3 minutes. Sim2Swim, the proposed approach, is inspired by state-of-the-art DRL-based position control and leverages domain randomization and massively parallelized training to converge to field-deployable control policies for AUVs of variable characteristics without post-processing or tuning. Sim2Swim is extensively validated in pool trials for a variety of configurations, showcasing robust control for highly agile motions.
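Sim2Swim leverages domain randomization and massively parallelized training. The sketch below is a minimal, hypothetical illustration of that idea, not the authors' implementation: every simulated AUV instance draws its own dynamics parameters at reset, so a policy trained across all instances cannot overfit a single platform model. The parameter names, nominal values, and the ±30% range are assumptions, and a real massively parallel setup would more likely sample these in batch on the GPU than in a Python loop.

```python
import random
from dataclasses import dataclass

# Hypothetical nominal AUV parameters; names and values are illustrative
# assumptions, not taken from Sim2Swim.
NOMINAL = {
    "mass_kg": 11.5,
    "buoyancy_n": 114.0,
    "linear_damping": 4.0,
    "thruster_gain": 1.0,
}

# Assumed relative randomization range applied to every parameter (+/-30%).
REL_RANGE = 0.3


@dataclass
class EnvParams:
    mass_kg: float
    buoyancy_n: float
    linear_damping: float
    thruster_gain: float


def sample_params(rng: random.Random) -> EnvParams:
    """Draw one randomized parameter set by scaling each nominal value."""
    scaled = {
        name: value * rng.uniform(1.0 - REL_RANGE, 1.0 + REL_RANGE)
        for name, value in NOMINAL.items()
    }
    return EnvParams(**scaled)


def reset_parallel_envs(num_envs: int, seed: int = 0) -> list[EnvParams]:
    """Give each parallel environment its own independently sampled dynamics."""
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(num_envs)]


if __name__ == "__main__":
    for params in reset_parallel_envs(num_envs=3):
        print(params)
```

Because every environment sees a different vehicle model, the training signal averages over the whole parameter range; the seed only makes the sketch reproducible.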

SHRUMS: Sensor Hallucination for Real-time Underwater Motion Planning with a Compact 3D Sonar

Oct 21, 2025

Abstract: Autonomous navigation in 3D is a fundamental problem for autonomy. Despite major advancements in terrestrial and aerial settings due to improved range sensors such as LiDAR, compact sensors with similar capabilities for underwater robots have only recently become available, in the form of 3D sonars. This paper introduces a novel underwater 3D navigation pipeline, called SHRUMS (Sensor Hallucination for Robust Underwater Motion planning with 3D Sonar). To the best of the authors' knowledge, SHRUMS is the first underwater autonomous navigation stack to integrate a 3D sonar. The proposed pipeline exhibits strong robustness while operating in complex 3D environments despite extremely poor visibility conditions. To accommodate the intricacies of the novel sensor data stream while achieving real-time, locally optimal performance, SHRUMS introduces the concept of hallucinating sensor measurements from non-existent sensors with convenient, arbitrary parameters tailored to application-specific requirements. The proposed concepts are validated with real 3D sonar data, utilizing real inputs in challenging settings and local maps constructed in real time. Field deployments validating the full approach are planned for the very near future.
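SHRUMS hallucinates measurements from non-existent sensors whose parameters are chosen for convenience. One minimal way to picture this, assuming the local map is available as a set of 3D points already expressed in the frame of the virtual sensor, is to re-render those points as a range image with whatever field of view and angular resolution the planner prefers. The function and its parameters below are hypothetical and only illustrate the concept; the paper's actual procedure may differ.

```python
import math


def hallucinate_scan(points, h_fov_deg, v_fov_deg, h_res_deg, v_res_deg, max_range):
    """Render a virtual range image from 3D points (hypothetical sketch).

    points: iterable of (x, y, z) in the virtual sensor frame
            (x forward, y left, z up) -- an assumed convention.
    Returns image[row][col] holding the nearest range per cell;
    cells without any return keep the value max_range.
    """
    n_cols = int(round(h_fov_deg / h_res_deg))
    n_rows = int(round(v_fov_deg / v_res_deg))
    image = [[max_range] * n_cols for _ in range(n_rows)]

    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0 or r > max_range:
            continue  # skip the origin and points the virtual sensor cannot see
        azimuth = math.degrees(math.atan2(y, x))  # positive to the left
        elevation = math.degrees(math.asin(z / r))  # positive upward
        # Shift angles so index 0 is the right/bottom edge of the virtual FOV.
        col = int((azimuth + h_fov_deg / 2) // h_res_deg)
        row = int((elevation + v_fov_deg / 2) // v_res_deg)
        if 0 <= col < n_cols and 0 <= row < n_rows:
            image[row][col] = min(image[row][col], r)  # keep the closest return
    return image


if __name__ == "__main__":
    cloud = [(2.0, 0.0, 0.0), (5.0, 0.0, 0.0), (3.0, 1.0, 0.5)]
    for row in hallucinate_scan(cloud, 90, 60, 10, 10, 20.0):
        print(row)
```

The point of choosing the virtual sensor freely is that downstream planning code can assume a convenient, regular measurement layout regardless of the real sensor's geometry.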

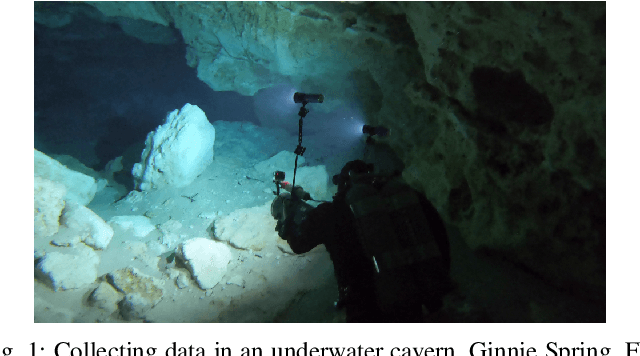

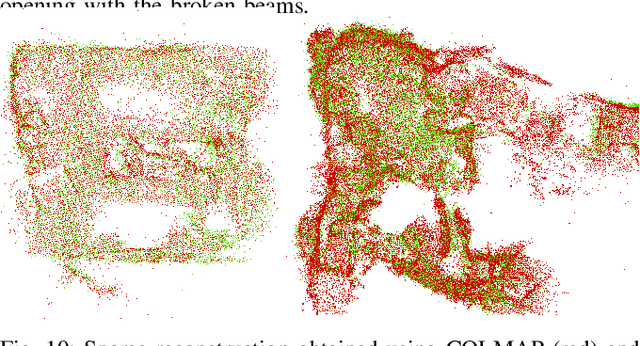

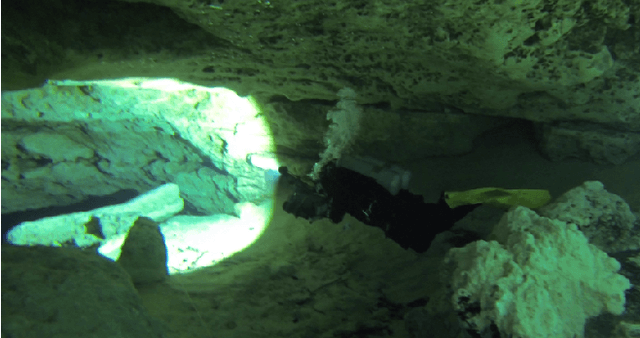

Underwater Dense Mapping with the First Compact 3D Sonar

Oct 21, 2025

Abstract: In the past decade, the adoption of compact 3D range sensors, such as LiDARs, has driven the development of robust state-estimation pipelines, making them a standard sensor for aerial, ground, and space autonomy. Unfortunately, the poor propagation of electromagnetic waves underwater has limited the visibility-independent sensing options for underwater state estimation to acoustic range sensors, which provide 2D information that is, at best, spatially ambiguous. This paper, to the best of our knowledge, is the first study examining the performance, capacity, and opportunities arising from the recent introduction of the first compact 3D sonar. Towards that purpose, we introduce calibration procedures for extracting the extrinsics between the 3D sonar and a camera, and we provide a study of the acoustic response of different surfaces and materials. Moreover, we provide novel mapping and SLAM pipelines tested in deployments in underwater cave systems and other geometrically and acoustically challenging underwater environments. Our assessment showcases the unique capacity of 3D sonars to capture consistent spatial information, allowing for detailed reconstructions and localization in datasets extending to hundreds of meters. At the same time, it highlights remaining challenges related to acoustic propagation that are also found in other acoustic sensors. The datasets collected for our evaluations will be released and shared with the community to enable further research advancements.

Aquaculture field robotics: Applications, lessons learned and future prospects

Apr 19, 2024

Abstract: Aquaculture is a major marine industry and contributes to meeting global food demand. Underwater vehicles such as remotely operated vehicles (ROVs) are commonly used for inspection, maintenance, and intervention (IMR) tasks in fish farms. However, underwater vehicle operations in aquaculture face several unique and demanding challenges, such as navigation in dynamically changing environments with time-varying sea loads and poor hydroacoustic sensor capabilities, challenges that have yet to be properly addressed in research. This paper presents various endeavors to address these challenges and improve the overall autonomy level in aquaculture robotics, with a focus on field experiments. We also discuss lessons learned during field trials and future prospects in aquaculture robotics.

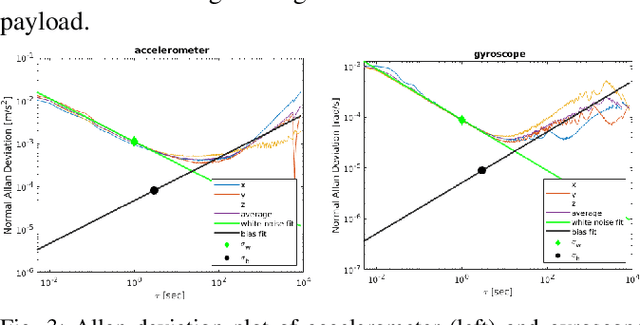

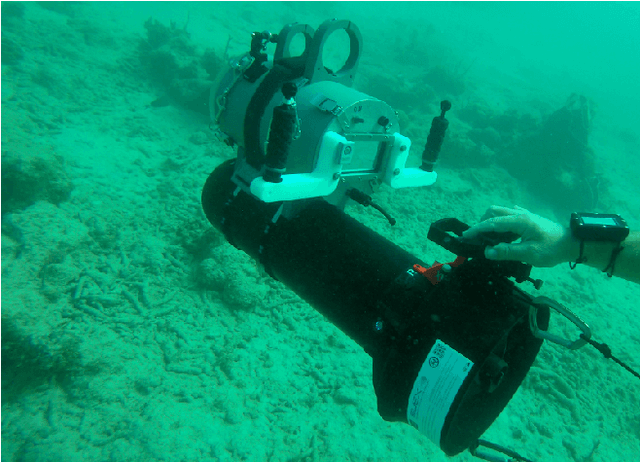

High Definition, Inexpensive, Underwater Mapping

Mar 10, 2022

Abstract: In this paper, we present a complete framework for underwater SLAM utilizing a single inexpensive sensor. In recent years, action-camera imaging technology has produced stunning results even under the challenging conditions of the underwater domain. The GoPro 9 camera provides high-definition video synchronized with an Inertial Measurement Unit (IMU) data stream, both encoded in a single mp4 file. The visual-inertial SLAM framework is augmented to adjust the map after each loop closure. Data collected at an artificial wreck off the coast of South Carolina and in caverns and caves in Florida demonstrate the robustness of the proposed approach in a variety of conditions.

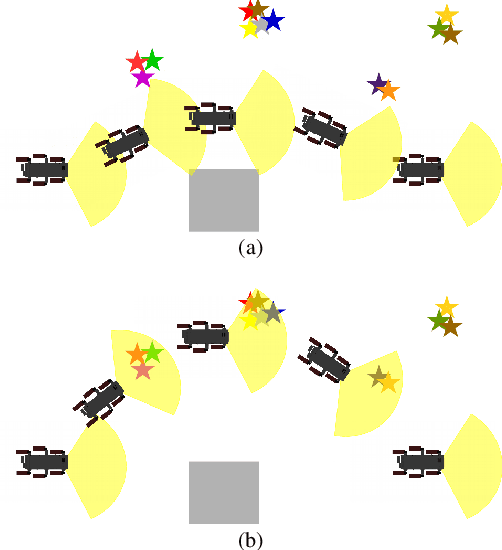

AquaVis: A Perception-Aware Autonomous Navigation Framework for Underwater Vehicles

Oct 04, 2021

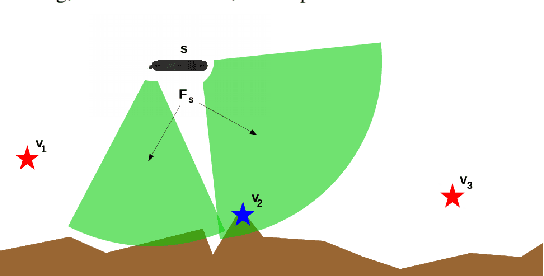

Abstract: Visual monitoring operations underwater require both observing the objects of interest at close proximity and tracking the few feature-rich areas necessary for state estimation. This paper introduces the first navigation framework, called AquaVis, that produces online, visibility-aware motion plans enabling Autonomous Underwater Vehicles (AUVs) to track multiple visual objectives with an arbitrary camera configuration in real time. Using the proposed pipeline, AUVs can efficiently move in 3D and reach their goals while safely avoiding obstacles and maximizing the visibility of multiple objectives along the path within a specified proximity. The method is fast enough to be executed in real time and is suitable for single- or multiple-camera configurations. Experimental results show a significant improvement in tracking multiple automatically extracted points of interest, with low computational overhead and fast re-planning times.
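AquaVis favors motion plans that keep multiple visual objectives in view within a specified proximity. As a simplified, hypothetical sketch of such a visibility term (not the paper's formulation), an objective can be counted as visible from a waypoint when it lies inside the camera's viewing cone and closer than a maximum distance. Occlusion and multi-camera rigs are ignored here, and the cone model, function names, and parameters are assumptions.

```python
import math


def is_visible(cam_pos, cam_dir, point, half_fov_deg, max_dist):
    """True if `point` is inside the camera's viewing cone and within max_dist.

    cam_dir must be a unit vector. Occlusion is ignored in this sketch.
    """
    offset = [p - c for p, c in zip(point, cam_pos)]
    dist = math.sqrt(sum(v * v for v in offset))
    if dist == 0.0 or dist > max_dist:
        return False  # too far away (or coincident with the camera)
    cos_angle = sum(a * b for a, b in zip(offset, cam_dir)) / dist
    return cos_angle >= math.cos(math.radians(half_fov_deg))


def visibility_score(path, objectives, half_fov_deg, max_dist):
    """Count (waypoint, objective) pairs in which the objective is visible.

    path: list of (camera_position, unit_viewing_direction) tuples.
    A planner could add a weighted version of this count to its objective.
    """
    return sum(
        is_visible(pos, direction, obj, half_fov_deg, max_dist)
        for pos, direction in path
        for obj in objectives
    )


if __name__ == "__main__":
    path = [((0, 0, 0), (1, 0, 0)), ((0, 0, 0), (0, 1, 0))]
    objectives = [(3, 0, 0), (0, 3, 0), (10, 0, 0)]
    print(visibility_score(path, objectives, half_fov_deg=30, max_dist=5))
```

In this toy case each pose sees exactly one nearby objective, while the distant one at x = 10 falls outside the assumed proximity limit.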

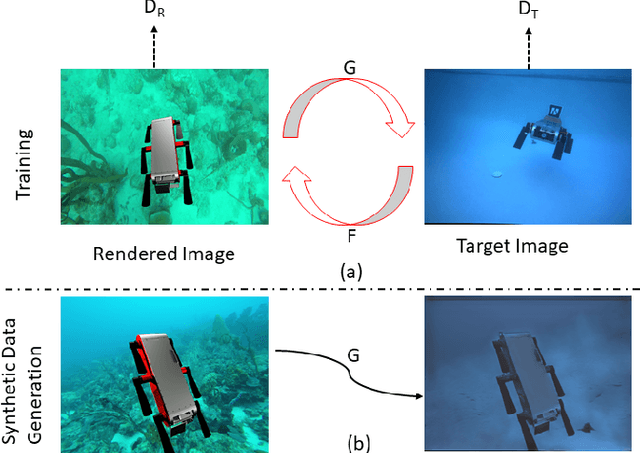

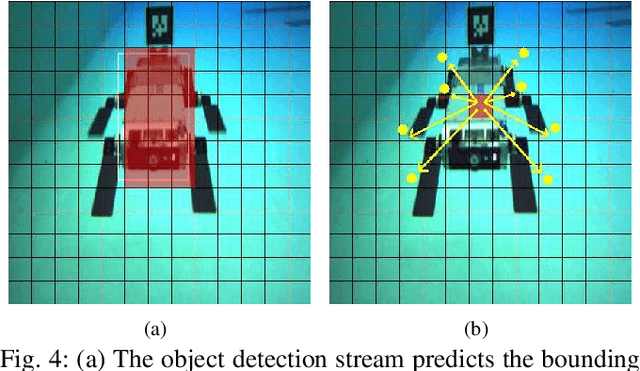

DeepURL: Deep Pose Estimation Framework for Underwater Relative Localization

Mar 13, 2020

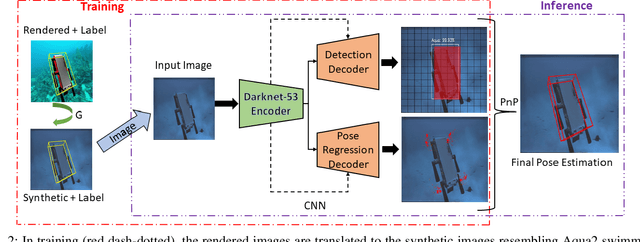

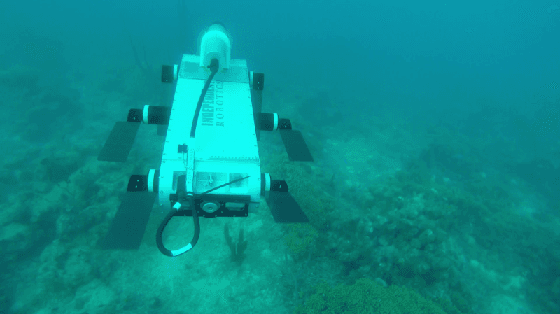

Abstract: In this paper, we propose a real-time deep-learning approach for determining the 6D relative pose of Autonomous Underwater Vehicles (AUVs) from a single image. The ability of a team of autonomous robots to localize themselves in a communication-constrained underwater environment is essential for many applications, such as underwater exploration, mapping, multi-robot convoying, and other multi-robot tasks. Due to the profound difficulty of collecting ground-truth images with accurate 6D poses underwater, this work utilizes rendered images from the Unreal Game Engine simulation for training. An image translation network is employed to bridge the gap between the rendered and the real images, producing synthetic images for training. The proposed method predicts the 6D pose of an AUV from a single image as 2D image keypoints representing the 8 corners of the 3D model of the AUV, and the 6D pose in camera coordinates is then determined using RANSAC-based PnP. Experimental results in underwater environments (a swimming pool and the ocean) with different cameras demonstrate the robustness of the proposed technique: the trained system reduced translation error by 75.5% and orientation error by 64.6% relative to state-of-the-art methods.
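DeepURL represents the pose as the 2D image locations of 8 corners of the AUV's 3D model and then recovers the 6D pose with RANSAC-based PnP. The sketch below shows the forward direction under stated assumptions: the corners are taken from an axis-aligned bounding box centred on the model origin and projected through an ideal pinhole camera with no lens distortion, which yields the kind of 2D keypoint targets a network could be trained to predict. The box dimensions, intrinsics, and function names are hypothetical. The inverse step, estimating R and t from predicted keypoints, is what a PnP solver such as OpenCV's `solvePnPRansac` performs.

```python
from itertools import product


def box_corners(half_x, half_y, half_z):
    """8 corners of an axis-aligned box centred on the model origin (assumed)."""
    return [
        (sx * half_x, sy * half_y, sz * half_z)
        for sx, sy, sz in product((-1, 1), repeat=3)
    ]


def project_points(points, R, t, fx, fy, cx, cy):
    """Project model-frame points with an ideal pinhole camera.

    R: 3x3 rotation (nested lists), t: translation, both mapping the
    model frame into the camera frame: X_c = R @ p + t.
    """
    pixels = []
    for p in points:
        xc = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
        if xc[2] <= 0:
            raise ValueError("point lies behind the camera")
        pixels.append((fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy))
    return pixels


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    corners = box_corners(0.5, 0.3, 0.1)  # hypothetical AUV half-extents in metres
    for u, v in project_points(corners, identity, (0.0, 0.0, 5.0), 500.0, 500.0, 320.0, 240.0):
        print(f"{u:.1f}, {v:.1f}")
```

Using a fixed set of 8 model points gives PnP well-defined 2D-3D correspondences, and RANSAC lets the solver discard keypoints the network predicts poorly.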

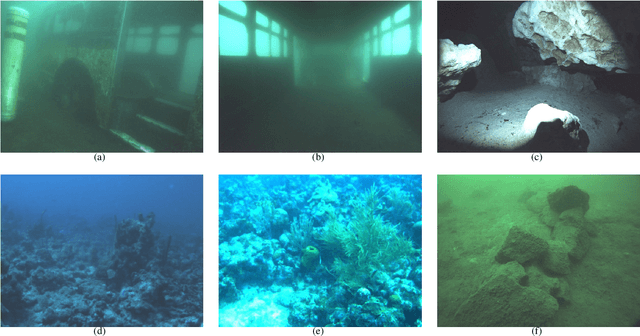

Experimental Comparison of Open Source Visual-Inertial-Based State Estimation Algorithms in the Underwater Domain

Apr 03, 2019

Abstract: A plethora of state estimation techniques using visual data, and more recently inertial data as well, have appeared in the last decade. Datasets typically used for evaluation include indoor and urban environments, where supporting videos have shown impressive performance. However, such techniques have not been fully evaluated in challenging conditions, such as the marine domain. In this paper, we compare ten recent open-source packages to provide insights into their performance and guidelines for addressing current challenges. Specifically, we selected direct methods and tightly coupled optimization techniques that fuse camera and Inertial Measurement Unit (IMU) data. Experiments are conducted by testing all packages on datasets collected over the years with underwater robots in our laboratory. All the datasets are made available online.

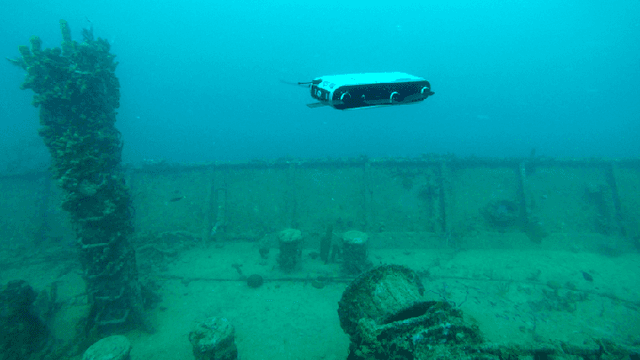

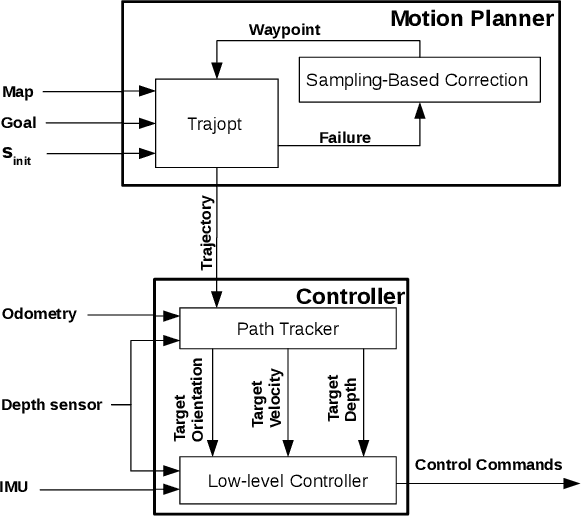

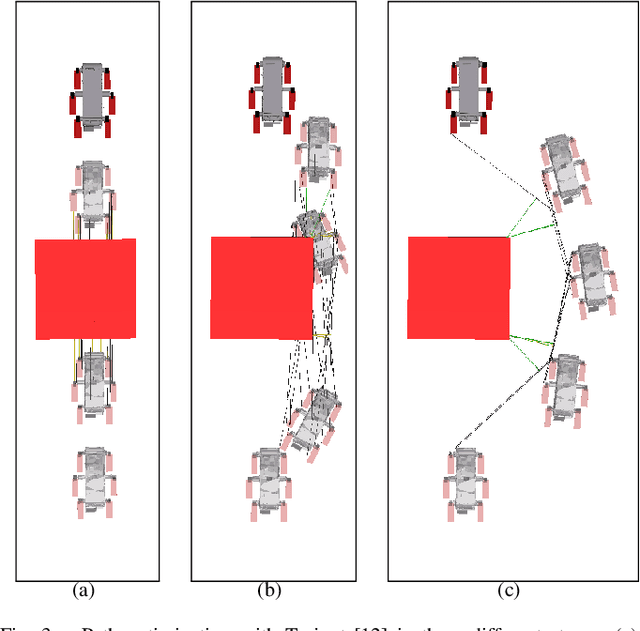

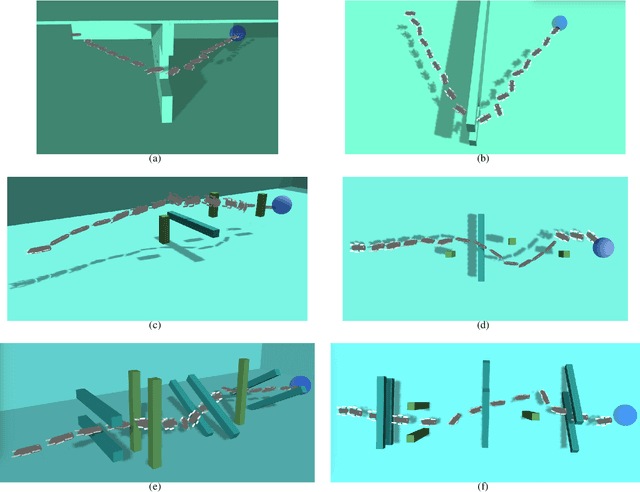

Navigation in the Presence of Obstacles for an Agile Autonomous Underwater Vehicle

Mar 28, 2019

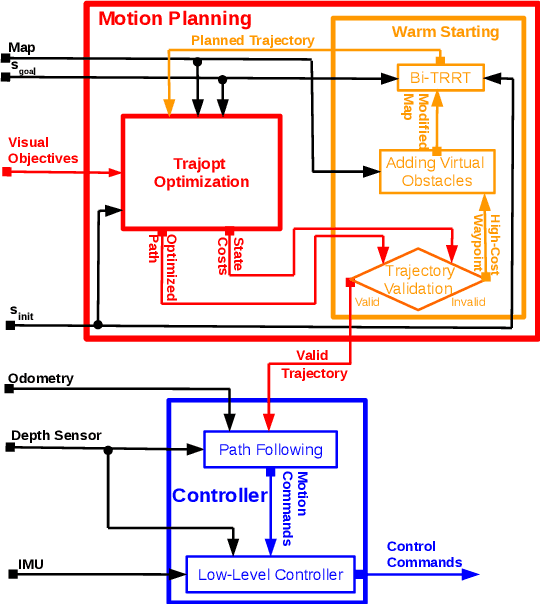

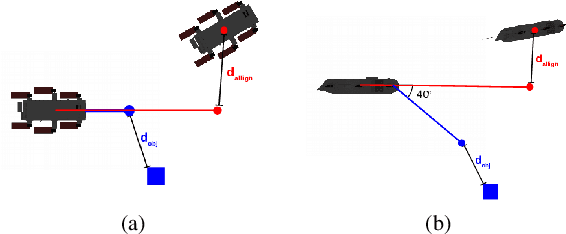

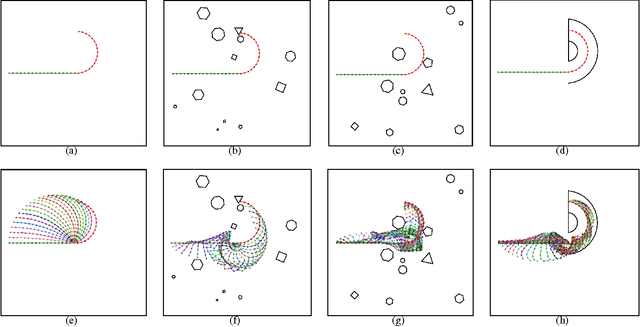

Abstract: Underwater navigation is traditionally performed by keeping a safe distance from obstacles, resulting in "fly-overs" of the area of interest. Moving an Autonomous Underwater Vehicle (AUV) through a cluttered space, such as a shipwreck or a decorated cave, is an extremely challenging problem that has not been addressed in the past. This paper proposes a novel navigation framework utilizing an enhanced version of Trajopt for fast 3D path optimization with near-optimal guarantees for AUVs. A sampling-based correction procedure ensures that the planning is not limited by local minima, enabling navigation through narrow spaces. The method is shown, both in simulation and in pool experiments, to be fast enough to enable real-time autonomous navigation for an Aqua2 AUV with strong safety guarantees.

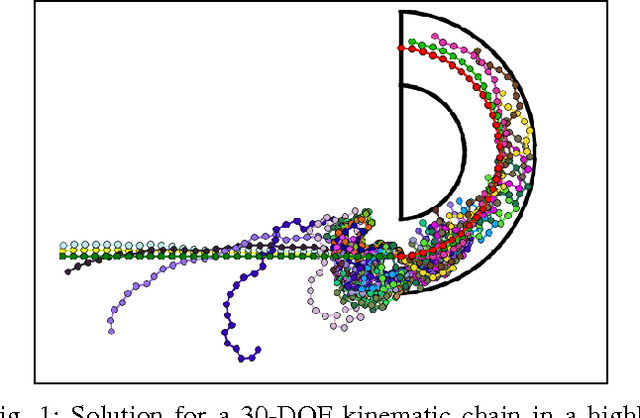

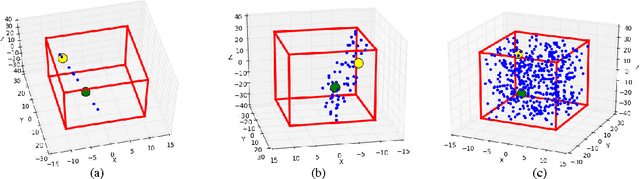

RRT+ : Fast Planning for High-Dimensional Configuration Spaces

Dec 24, 2016

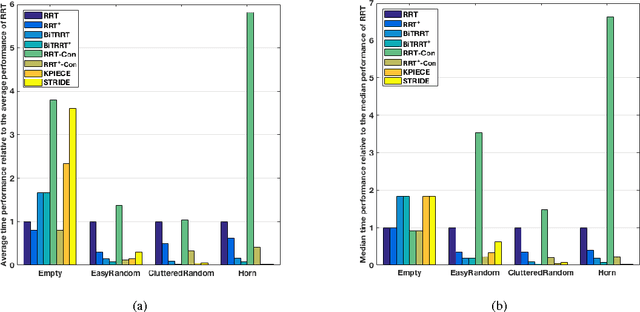

Abstract: In this paper, we propose a new family of RRT-based algorithms, named RRT+, that are able to find faster solutions in high-dimensional configuration spaces compared to other existing RRT variants by finding paths in lower-dimensional subspaces of the configuration space. The method can be easily applied to complex hyper-redundant systems and can be adopted by other RRT-based planners. We introduce RRT+ and develop some variants, called PrioritizedRRT+, PrioritizedRRT+-Connect, and PrioritizedBidirectionalT-RRT+, that use the new sampling technique, and we show that our method provides faster results than the corresponding original algorithms. Experiments using the state-of-the-art planners available in OMPL show superior performance of RRT+ for high-dimensional motion planning problems.
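RRT+ searches lower-dimensional subspaces of the configuration space, and the "Prioritized" variant names suggest that some DOFs are explored before others. The sampler below is a hypothetical sketch of that sampling idea only, assuming the DOFs are already ordered by priority and that DOFs outside the current subspace stay at their start values. It omits tree extension and collision checking, and the fixed per-level sample budget is an assumption rather than part of the published algorithm.

```python
import random


def subspace_sample(start, bounds, k, rng):
    """Sample a configuration that varies only the first k DOFs.

    The remaining DOFs are copied from `start`, so the sample lies in a
    k-dimensional subspace of the full configuration space.
    """
    q = list(start)
    for i in range(k):
        lo, hi = bounds[i]
        q[i] = rng.uniform(lo, hi)
    return q


def prioritized_samples(start, bounds, samples_per_level, seed=0):
    """Yield (k, sample) pairs from subspaces of dimension 1, 2, ..., n.

    The fixed per-level budget is illustrative; a real planner would stop
    as soon as a path is found in some subspace.
    """
    rng = random.Random(seed)
    for k in range(1, len(start) + 1):
        for _ in range(samples_per_level):
            yield k, subspace_sample(start, bounds, k, rng)


if __name__ == "__main__":
    start = [0.0] * 6  # hypothetical 6-DOF arm at its start configuration
    bounds = [(-3.14, 3.14)] * 6
    for k, q in prioritized_samples(start, bounds, samples_per_level=1):
        print(k, [round(v, 2) for v in q])
```

In an RRT loop, each yielded configuration would stand in for a uniform full-space sample; if a path exists while k is still small, the higher-dimensional subspaces are never searched, which is one plausible source of the speedup in high-dimensional spaces.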
