Sharmin Rahman

SM/VIO: Robust Underwater State Estimation Switching Between Model-based and Visual Inertial Odometry

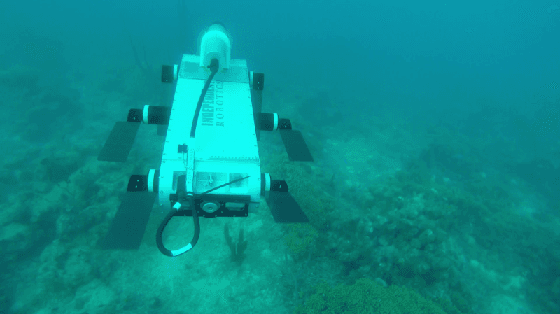

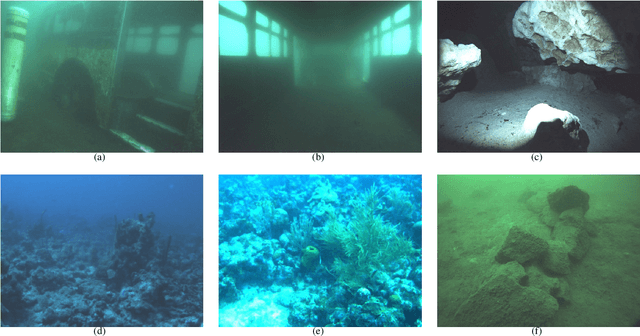

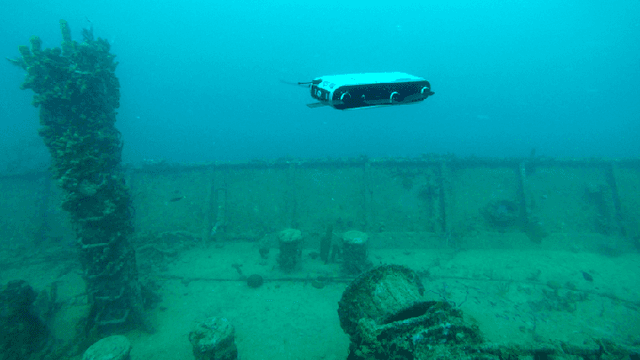

Apr 04, 2023

Abstract: This paper addresses the robustness problem of visual-inertial state estimation for underwater operations. Underwater robots operating in a challenging environment are required to know their pose at all times. All vision-based localization schemes are prone to failure due to poor visibility conditions, color loss, and lack of features. The proposed approach utilizes a model of the robot's kinematics together with proprioceptive sensors to maintain the pose estimate during visual-inertial odometry (VIO) failures. Furthermore, the trajectories from successful VIO and the ones from the model-driven odometry are integrated into a coherent set that maintains a consistent pose at all times. Health monitoring tracks the VIO process, ensuring timely switches between the two estimators. Finally, loop closure is implemented on the overall trajectory. The resulting framework is a robust estimator switching between model-based and visual-inertial odometry (SM/VIO). Experimental results from numerous deployments of the Aqua2 vehicle demonstrate the robustness of our approach over coral reefs and a shipwreck.

High Definition, Inexpensive, Underwater Mapping

Mar 10, 2022

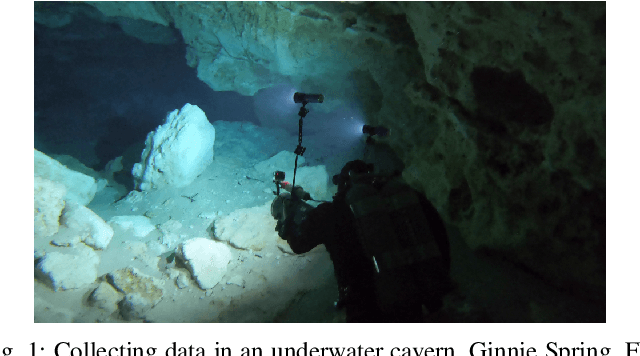

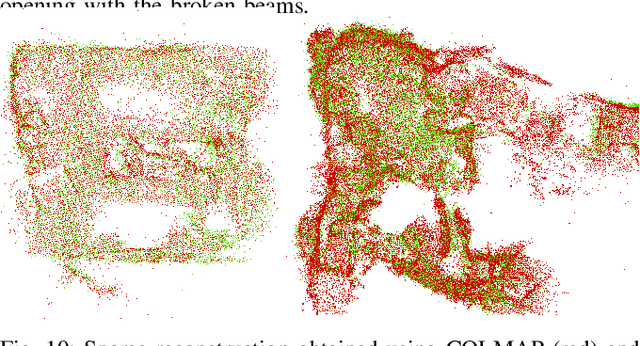

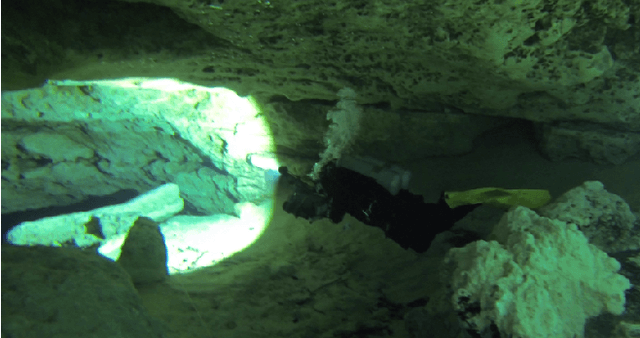

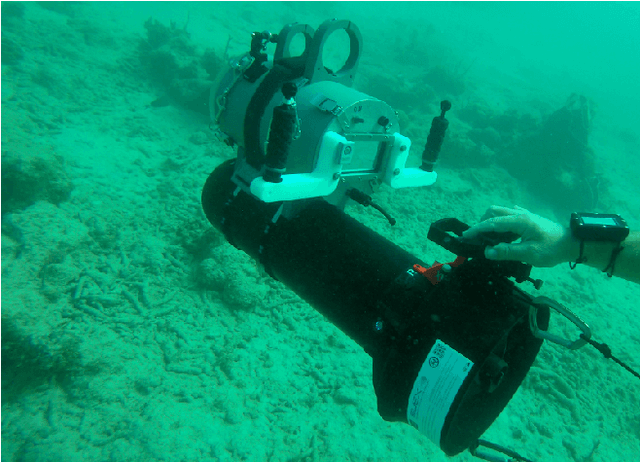

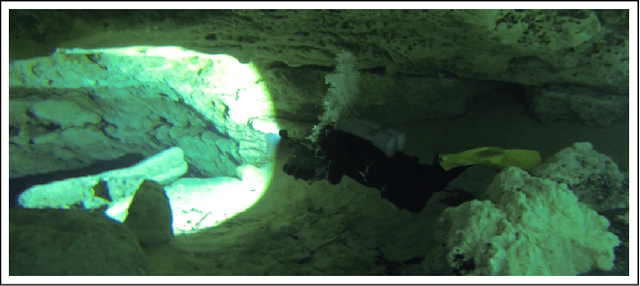

Abstract: In this paper we present a complete framework for underwater SLAM utilizing a single inexpensive sensor. In recent years, the imaging technology of action cameras has produced stunning results even under the challenging conditions of the underwater domain. The GoPro 9 camera provides high-definition video in synchronization with an Inertial Measurement Unit (IMU) data stream, encoded in a single mp4 file. The visual-inertial SLAM framework is augmented to adjust the map after each loop closure. Data collected at an artificial wreck off the coast of South Carolina and in caverns and caves in Florida demonstrate the robustness of the proposed approach in a variety of conditions.

Experimental Comparison of Open Source Visual-Inertial-Based State Estimation Algorithms in the Underwater Domain

Apr 03, 2019

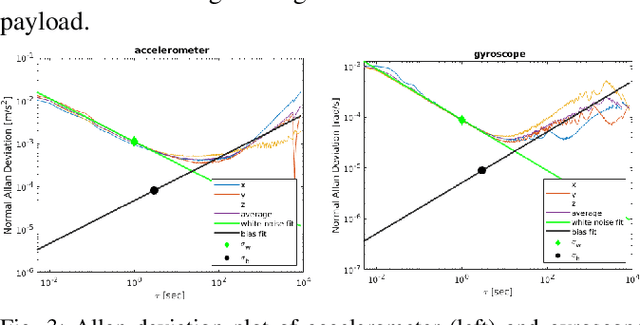

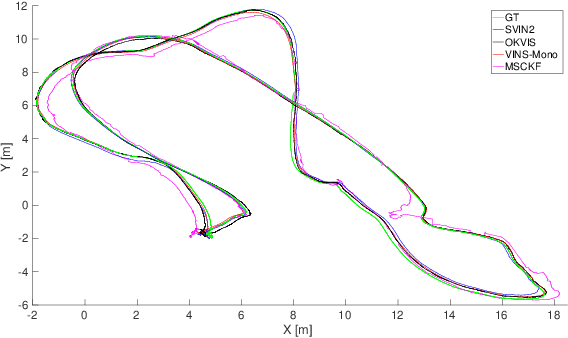

Abstract: A plethora of state estimation techniques have appeared in the last decade using visual data, and more recently with added inertial data. Datasets typically used for evaluation include indoor and urban environments, where supporting videos have shown impressive performance. However, such techniques have not been fully evaluated in challenging conditions, such as the marine domain. In this paper, we compare ten recent open-source packages to provide insights on their performance and guidelines on addressing current challenges. Specifically, we selected direct methods and tightly-coupled optimization techniques that fuse camera and Inertial Measurement Unit (IMU) data together. Experiments are conducted by testing all packages on datasets collected over the years with underwater robots in our laboratory. All the datasets are made available online.

Navigation in the Presence of Obstacles for an Agile Autonomous Underwater Vehicle

Mar 28, 2019

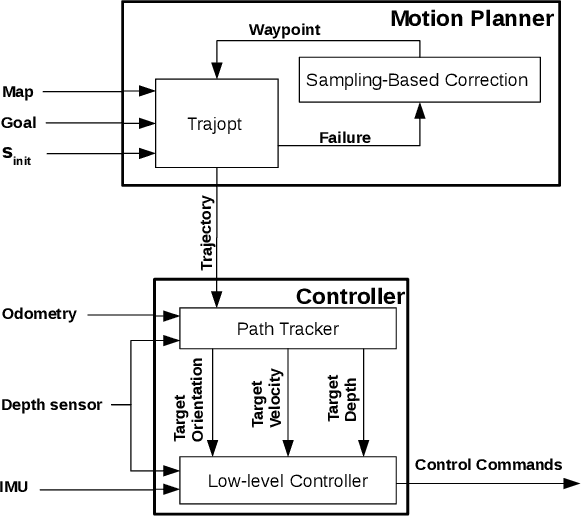

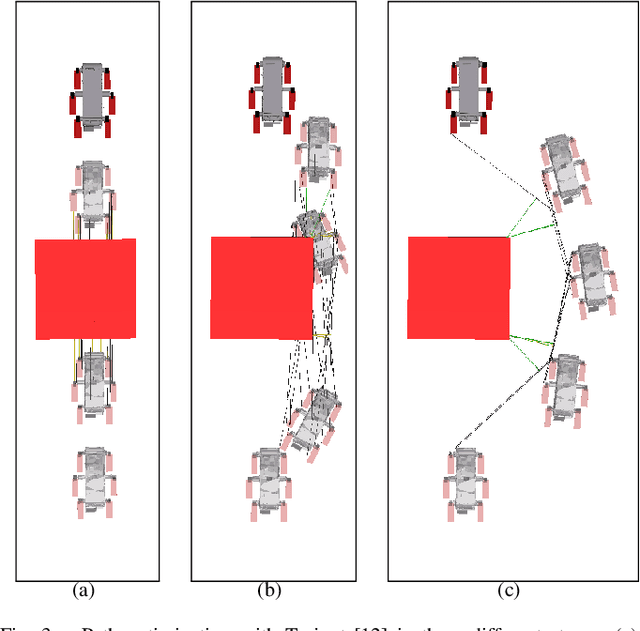

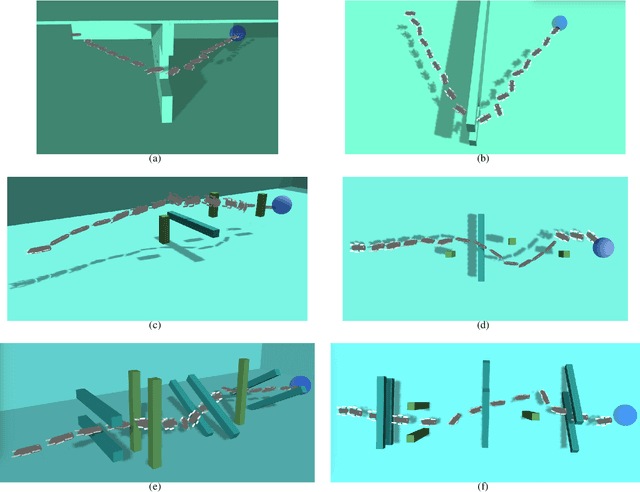

Abstract: Underwater navigation is traditionally done by keeping a safe distance from obstacles, resulting in "fly-overs" of the area of interest. Moving an Autonomous Underwater Vehicle (AUV) through a cluttered space, such as a shipwreck or a decorated cave, is an extremely challenging problem and has not been addressed in the past. This paper proposes a novel navigation framework utilizing an enhanced version of Trajopt for fast 3D path optimization with near-optimal guarantees for AUVs. A sampling-based correction procedure ensures that the planning is not limited by local minima, enabling navigation through narrow spaces. The method is shown, both in simulation and in in-pool experiments, to be fast enough to enable real-time autonomous navigation for an Aqua2 AUV with strong safety guarantees.

An Underwater SLAM System using Sonar, Visual, Inertial, and Depth Sensor

Mar 15, 2019

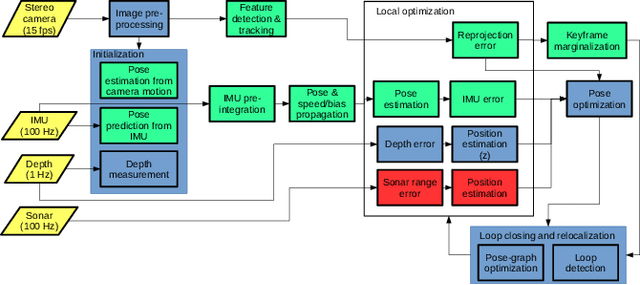

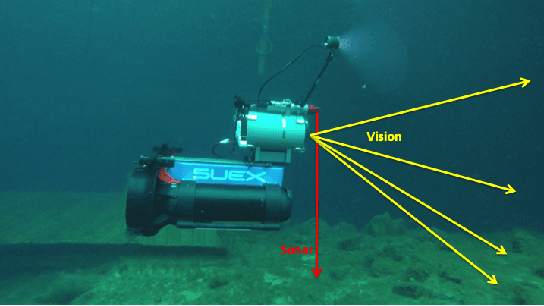

Abstract: This paper presents a novel tightly-coupled keyframe-based Simultaneous Localization and Mapping (SLAM) system with loop-closing and relocalization capabilities targeted for the underwater domain. Our previous work, SVIn, augmented the state-of-the-art visual-inertial state estimation package OKVIS to accommodate acoustic data from sonar in a non-linear optimization-based framework. This paper addresses drift and loss of localization -- one of the main problems affecting other packages in the underwater domain -- by providing the following main contributions: a robust initialization method to refine scale using depth measurements, a fast preprocessing step to enhance the image quality, and a real-time loop-closing and relocalization method using bag of words (BoW). An additional contribution is the integration of depth measurements from a pressure sensor into the tightly-coupled optimization formulation. Experimental results on datasets collected with a custom-made underwater sensor suite and an autonomous underwater vehicle from challenging underwater environments with poor visibility demonstrate performance never achieved before in terms of accuracy and robustness.
