Hunter Damron

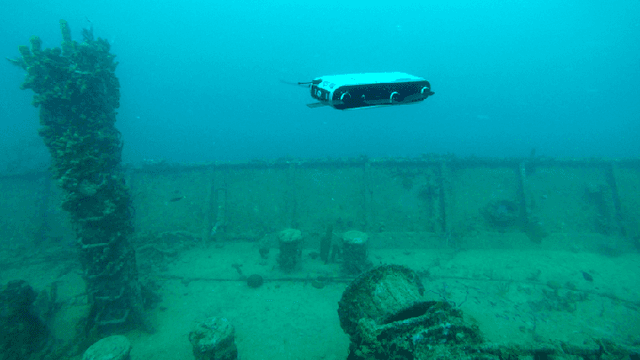

SM/VIO: Robust Underwater State Estimation Switching Between Model-based and Visual Inertial Odometry

Apr 04, 2023

Abstract: This paper addresses the robustness problem of visual-inertial state estimation for underwater operations. Underwater robots operating in a challenging environment are required to know their pose at all times. All vision-based localization schemes are prone to failure due to poor visibility conditions, color loss, and lack of features. The proposed approach utilizes a model of the robot's kinematics together with proprioceptive sensors to maintain the pose estimate during visual-inertial odometry (VIO) failures. Furthermore, the trajectories from successful VIO and the ones from the model-driven odometry are integrated in a coherent set that maintains a consistent pose at all times. Health-monitoring tracks the VIO process ensuring timely switches between the two estimators. Finally, loop closure is implemented on the overall trajectory. The resulting framework is a robust estimator switching between model-based and visual-inertial odometry (SM/VIO). Experimental results from numerous deployments of the Aqua2 vehicle demonstrate the robustness of our approach over coral reefs and a shipwreck.
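The switching idea in this abstract can be illustrated with a minimal sketch. The abstract does not say how VIO health is measured or how the two trajectories are merged, so everything below is an assumption: a planar pose, a hypothetical `SwitchingEstimator` class, and a health test based on a tracked-feature count with a made-up threshold. Chaining relative motion increments from whichever source is active is one simple way to keep the pose continuous across switches.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float

    def compose(self, delta: "Pose2D") -> "Pose2D":
        """Apply a body-frame motion increment to this pose."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose2D(
            self.x + c * delta.x - s * delta.y,
            self.y + s * delta.x + c * delta.y,
            self.yaw + delta.yaw,
        )


class SwitchingEstimator:
    """Hypothetical switcher: VIO increments while healthy, model increments otherwise."""

    def __init__(self, min_features: int = 20):
        self.min_features = min_features  # assumed health threshold, not from the paper
        self.pose = Pose2D(0.0, 0.0, 0.0)
        self.source_log = []  # which estimator supplied each step

    def vio_healthy(self, tracked_features: int) -> bool:
        # Assumed health check: enough visual features are being tracked.
        return tracked_features >= self.min_features

    def step(self, vio_delta: Optional[Pose2D], model_delta: Pose2D,
             tracked_features: int) -> Pose2D:
        # Pick the increment source, then chain it onto the single shared pose
        # so the trajectory stays continuous when the source changes.
        if vio_delta is not None and self.vio_healthy(tracked_features):
            delta, source = vio_delta, "vio"
        else:
            delta, source = model_delta, "model"
        self.pose = self.pose.compose(delta)
        self.source_log.append(source)
        return self.pose
```

A short usage example: feeding a healthy VIO step, a VIO dropout, a low-feature step, and a recovered step yields the source sequence `vio, model, model, vio` in `source_log`, with one uninterrupted pose chain.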

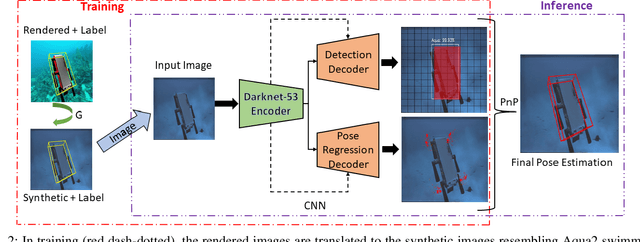

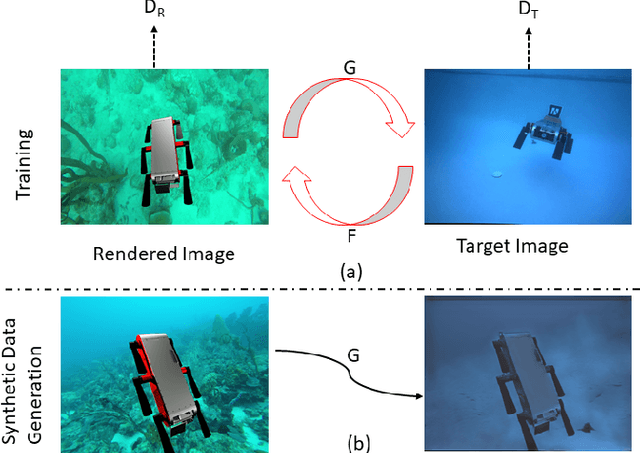

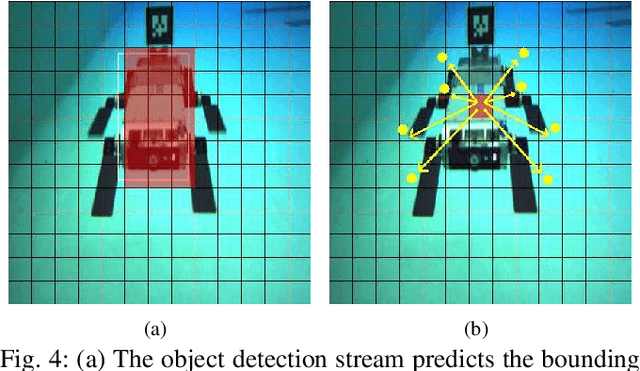

DeepURL: Deep Pose Estimation Framework for Underwater Relative Localization

Mar 13, 2020

Abstract: In this paper, we propose a real-time deep-learning approach for determining the 6D relative pose of Autonomous Underwater Vehicles (AUVs) from a single image. The ability of a team of autonomous robots to localize themselves in a communication-constrained underwater environment is essential for many applications such as underwater exploration, mapping, multi-robot convoying, and other multi-robot tasks. Due to the profound difficulty of collecting ground truth images with accurate 6D poses underwater, this work utilizes rendered images from the Unreal Game Engine simulation for training. An image translation network is employed to bridge the gap between the rendered and the real images, producing synthetic images for training. The proposed method predicts the 6D pose of an AUV from a single image as 2D image keypoints representing the 8 corners of the 3D model of the AUV, and then the 6D pose in the camera coordinates is determined using RANSAC-based PnP. Experimental results in underwater environments (swimming pool and ocean) with different cameras demonstrate the robustness of the proposed technique, where the trained system decreased translation error by 75.5% and orientation error by 64.6% over the state-of-the-art methods.
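To make the keypoint representation concrete, the sketch below shows only the forward model that PnP inverts: projecting the 8 corners of a 3D bounding box into the image under a pinhole camera. It does not reproduce the paper's network or its RANSAC-based PnP step. The helper names, box dimensions, and camera intrinsics are illustrative assumptions, not values from the paper.

```python
from itertools import product


def box_corners(length, width, height):
    """Return the 8 corners of an axis-aligned box centred on the object origin."""
    half = (length / 2.0, width / 2.0, height / 2.0)
    return [tuple(s * h for s, h in zip(signs, half))
            for signs in product((-1.0, 1.0), repeat=3)]


def transform(point, rotation, translation):
    """Apply a 3x3 rotation (nested row lists) and a translation to a 3D point."""
    return tuple(
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )


def project(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def corner_keypoints(dims, rotation, translation, intrinsics):
    """Project all 8 box corners for a given object pose in the camera frame."""
    return [project(transform(p, rotation, translation), *intrinsics)
            for p in box_corners(*dims)]


# Illustrative values only: a 0.6 x 0.4 x 0.2 m box, 2 m in front of the camera.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
keypoints = corner_keypoints((0.6, 0.4, 0.2), IDENTITY, (0.0, 0.0, 2.0),
                             (500.0, 500.0, 320.0, 240.0))
```

Given 8 predicted 2D keypoints and the known 3D corners, a PnP solver searches for the rotation and translation that make this projection match the predictions; RANSAC adds robustness to keypoints that were predicted badly.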

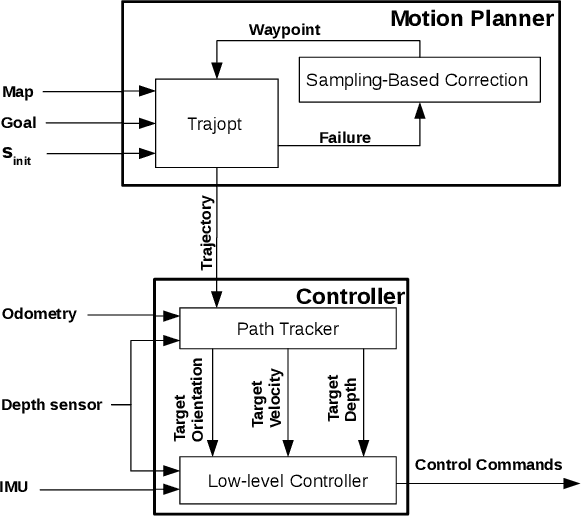

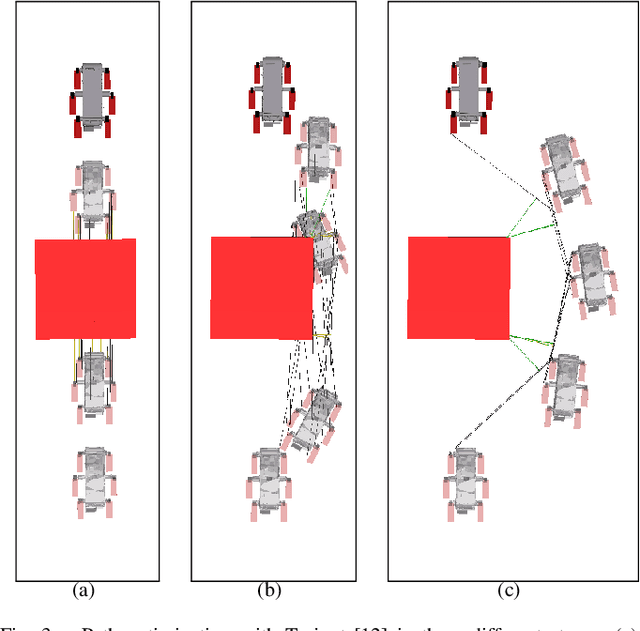

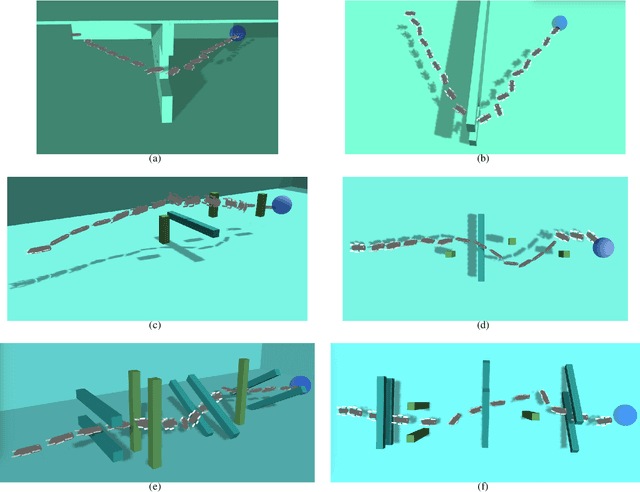

Navigation in the Presence of Obstacles for an Agile Autonomous Underwater Vehicle

Mar 28, 2019

Abstract: Underwater navigation is traditionally done by keeping a safe distance from obstacles, resulting in "fly-overs" of the area of interest. Moving an Autonomous Underwater Vehicle (AUV) through a cluttered space, such as a shipwreck or a decorated cave, is an extremely challenging problem and has not been addressed in the past. This paper proposes a novel navigation framework utilizing an enhanced version of Trajopt for fast 3D path-optimization with near-optimal guarantees for AUVs. A sampling-based correction procedure ensures that the planning is not limited by local minima, enabling navigation through narrow spaces. The method is shown, both in simulation and in in-pool experiments, to be fast enough to enable real-time autonomous navigation for an Aqua2 AUV with strong safety guarantees.

Underwater Surveying via Bearing only Cooperative Localization

Sep 10, 2018

Abstract: Bearing-only cooperative localization has been used successfully on aerial and ground vehicles. In this paper we present an extension of the approach to the underwater domain. The focus is on adapting the technique to handle the challenging visibility conditions underwater. Furthermore, data from inertial, magnetic, and depth sensors are utilized to improve the robustness of the estimation. In addition to robotic applications, the presented technique can be used for cave mapping and for marine archeology surveying, both performed by human divers. Experimental results from different environments, including a freshwater, low-visibility lake in South Carolina; a cavern in Florida; and coral reefs in Barbados during the day and during the night, validate the robustness and the accuracy of the proposed approach.
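As a geometric illustration of why bearings alone can constrain position, the sketch below intersects two 2D bearing rays taken from known observer positions. This is not the estimator used in the paper, which the abstract does not specify; the function name and the planar, noise-free setup are assumptions made for the example.

```python
import math


def intersect_bearings(p1, bearing1, p2, bearing2):
    """Intersect two 2D rays given their origins and world-frame bearings (radians)."""
    d1 = (math.cos(bearing1), math.sin(bearing1))
    d2 = (math.cos(bearing2), math.sin(bearing2))
    denom = d1[0] * d2[1] - d1[1] * d2[0]  # 2D cross product of the directions
    if abs(denom) < 1e-9:
        raise ValueError("bearings are parallel; position is unobservable")
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (dx * d2[1] - dy * d2[0]) / denom  # distance along the first ray
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])


# Two observers 4 m apart, sighting the same target at 45 and 135 degrees.
target = intersect_bearings((0.0, 0.0), math.radians(45.0),
                            (4.0, 0.0), math.radians(135.0))
```

The parallel-bearing check marks the degenerate geometry in which two bearings carry no range information. With real, noisy measurements, additional sensing such as the inertial, magnetic, and depth data mentioned in the abstract can help constrain the estimate.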
