Michail Kalaitzakis

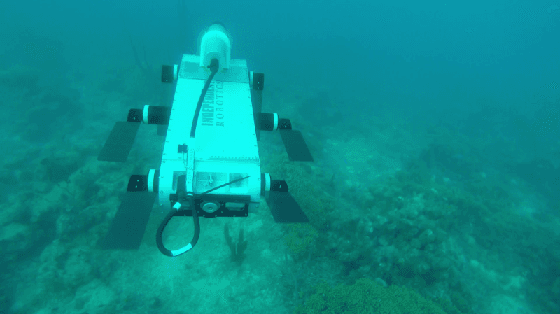

AquaVis: A Perception-Aware Autonomous Navigation Framework for Underwater Vehicles

Oct 04, 2021

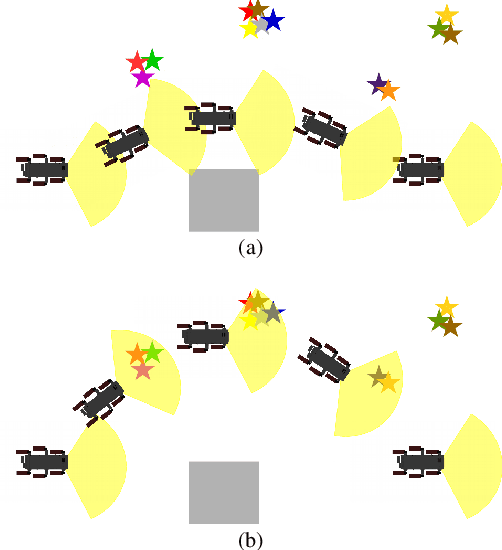

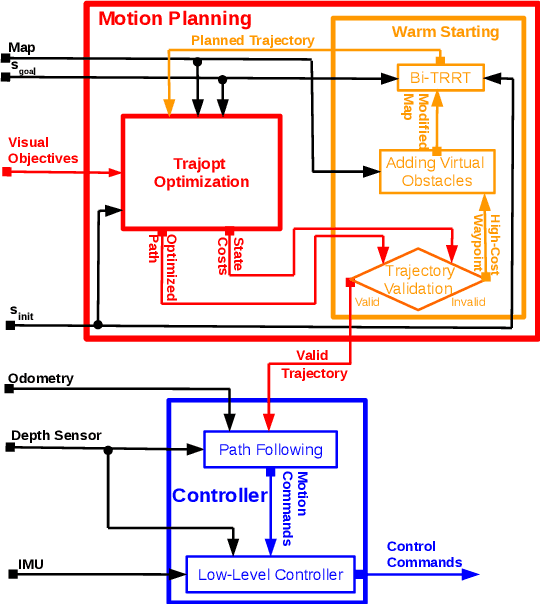

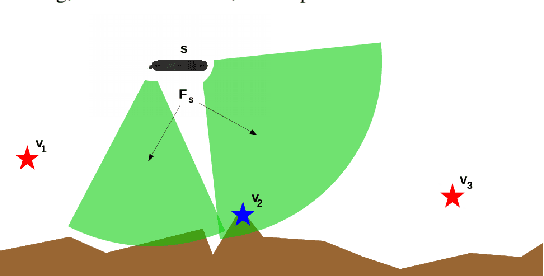

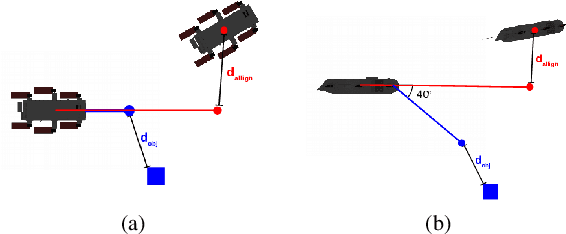

Abstract: Visual monitoring operations underwater require both observing the objects of interest in close proximity and tracking the few feature-rich areas necessary for state estimation. This paper introduces the first navigation framework, called AquaVis, that produces on-line visibility-aware motion plans that enable Autonomous Underwater Vehicles (AUVs) to track multiple visual objectives with an arbitrary camera configuration in real time. Using the proposed pipeline, AUVs can efficiently move in 3D, reach their goals while safely avoiding obstacles, and maximize the visibility of multiple objectives along the path within a specified proximity. The method is fast enough to run in real time and is suitable for single- or multiple-camera configurations. Experimental results show a significant improvement in tracking multiple automatically extracted points of interest, with low computational overhead and fast re-planning times.

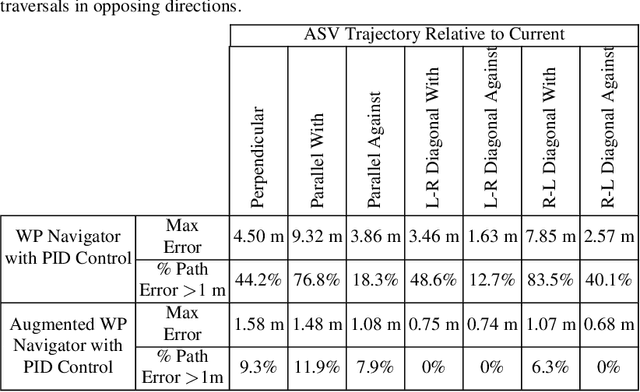

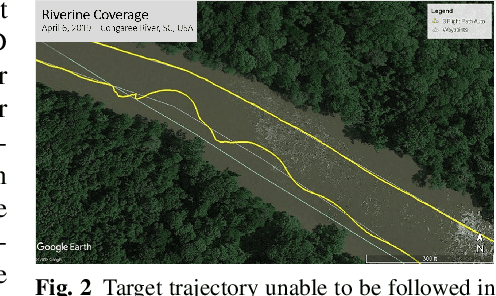

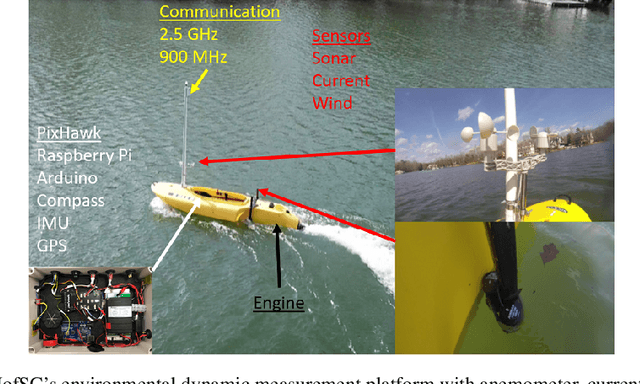

Dynamic Autonomous Surface Vehicle Controls Under Changing Environmental Forces

Aug 07, 2019

Abstract: The ability to navigate, search, and monitor dynamic marine environments such as ports, deltas, tributaries, and rivers presents several challenges to both human-operated and autonomously operated surface vehicles. Human data collection and monitoring is overly taxing and inconsistent when faced with large coverage areas, disturbed environments, and potentially uninhabitable situations. In contrast, the same missions become achievable with Autonomous Surface Vehicles (ASVs) configured and capable of accurately maneuvering in such environments. The two dynamic factors that present formidable challenges to completing precise maneuvers in coastal and moving waters are currents and winds. In this work, we present novel and inexpensive methods for sensing these external forces, together with methods for accurately controlling an ASV in the presence of such external forces. The resulting platform is capable of deploying bathymetric and water quality monitoring sensors. Experimental results in local lakes and rivers demonstrate the feasibility of the proposed approach.

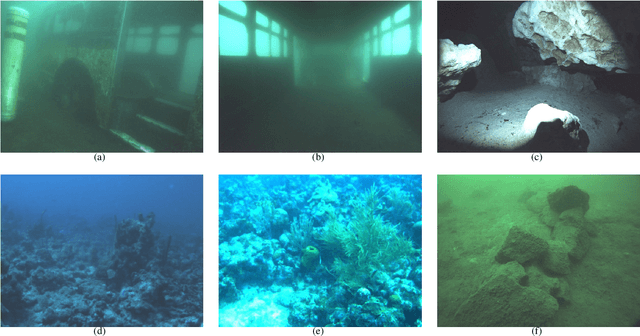

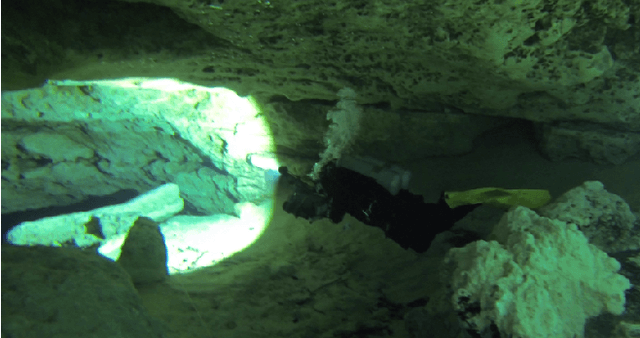

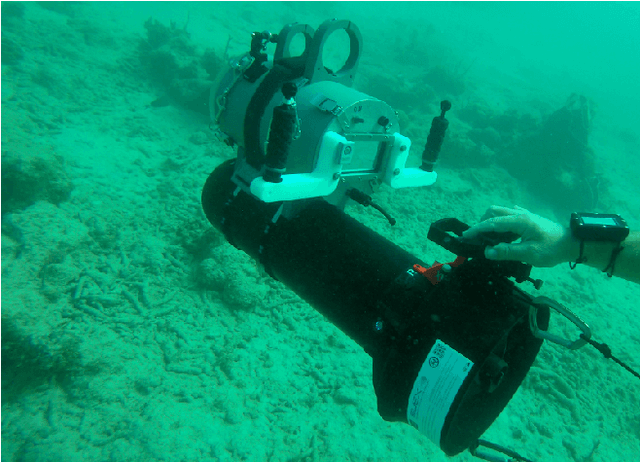

Experimental Comparison of Open Source Visual-Inertial-Based State Estimation Algorithms in the Underwater Domain

Apr 03, 2019

Abstract: A plethora of state estimation techniques have appeared in the last decade using visual data, and more recently with added inertial data. Datasets typically used for evaluation include indoor and urban environments, where supporting videos have shown impressive performance. However, such techniques have not been fully evaluated in challenging conditions, such as the marine domain. In this paper, we compare ten recent open-source packages to provide insights on their performance and guidelines on addressing current challenges. Specifically, we selected direct methods and tightly-coupled optimization techniques that fuse camera and Inertial Measurement Unit (IMU) data together. Experiments are conducted by testing all packages on datasets collected over the years with underwater robots in our laboratory. All the datasets are made available online.
