Zijian Zhu

Collaborative Static-Dynamic Teaching: A Semi-Supervised Framework for Stripe-Like Space Target Detection

Aug 09, 2024

Abstract: Stripe-like space target detection (SSTD) is crucial for space situational awareness. Traditional unsupervised methods often fail when the signal-to-noise ratio is low or the stripe-like targets vary, leading to weak generalization. Although fully supervised learning methods improve model generalization, they require extensive pixel-level labels for training. In the SSTD task, manually creating these labels is often inaccurate and labor-intensive. Semi-supervised learning (SSL) methods reduce the need for these labels and enhance model generalizability, but their performance is limited by pseudo-label quality. To address this, we introduce an innovative Collaborative Static-Dynamic Teacher (CSDT) SSL framework, which includes static and dynamic teacher models as well as a student model. This framework employs a customized adaptive pseudo-labeling (APL) strategy that transitions from initial static teaching to adaptive collaborative teaching to guide the student model's training. The exponential moving average (EMA) mechanism further enhances this process by feeding new stripe-like knowledge back to the dynamic teacher model through the student model, creating a positive feedback loop that continuously improves the quality of pseudo-labels. Moreover, we present MSSA-Net, a novel SSTD network featuring a multi-scale dual-path convolution (MDPC) block and a feature map weighted attention (FMWA) block, designed to extract diverse stripe-like features within the CSDT SSL training framework. Extensive experiments verify the state-of-the-art performance of our framework on the AstroStripeSet and various ground-based and space-based real-world datasets.
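The EMA feedback from student to dynamic teacher described in this abstract can be illustrated with the generic EMA weight update used in many teacher-student setups. This is only a minimal sketch: the abstract does not give the update rule or the decay value, so the function name `ema_update`, the dictionary-of-floats parameter layout, and `decay=0.9` are all assumptions made for illustration.

```python
def ema_update(teacher, student, decay=0.9):
    """Generic EMA step: teacher <- decay * teacher + (1 - decay) * student.

    `teacher` and `student` are hypothetical name -> float parameter maps;
    a real model would hold tensors, but the arithmetic is the same.
    """
    for name, student_value in student.items():
        teacher[name] = decay * teacher[name] + (1.0 - decay) * student_value
    return teacher


# Toy parameters (assumed values, not taken from the paper).
teacher_params = {"w": 1.0, "b": 0.0}
student_params = {"w": 0.0, "b": 1.0}

# After one step the teacher moves a small fraction toward the student.
ema_update(teacher_params, student_params, decay=0.9)
print(teacher_params)
```

A decay close to 1 makes the teacher change slowly, which is the usual reason EMA teachers produce steadier pseudo-labels than the student itself.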

SSTD: Stripe-Like Space Target Detection using Single-Point Supervision

Jul 25, 2024

Abstract: Stripe-like space target detection (SSTD) plays a key role in enhancing space situational awareness and assessing spacecraft behaviour. This domain faces three challenges: the lack of publicly available datasets, interference from stray light and stars, and the variability of stripe-like targets, which complicates pixel-level annotation. In response, we introduce 'AstroStripeSet', a pioneering dataset designed for SSTD, aiming to bridge the gap in academic resources and advance research in SSTD. Furthermore, we propose a novel pseudo-label evolution teacher-student framework with single-point supervision. This framework starts by generating initial pseudo-labels using the zero-shot capabilities of the Segment Anything Model (SAM) in a single-point setting, and then refines these labels iteratively. In our framework, the fine-tuned StripeSAM serves as the teacher and the newly developed StripeNet as the student, consistently improving segmentation performance by improving the quality of pseudo-labels. We also introduce 'GeoDice', a new loss function customized for the linear characteristics of stripe-like targets. Extensive experiments show that the performance of our approach matches fully supervised methods on all evaluation metrics, establishing a new state-of-the-art (SOTA) benchmark. Our dataset and code will be made publicly available.

Watch out Venomous Snake Species: A Solution to SnakeCLEF2023

Jul 19, 2023

Abstract: The SnakeCLEF2023 competition aims to advance the development of algorithms for snake species identification through the analysis of images and accompanying metadata. This paper presents a method that leverages both images and metadata. Modern CNN models and strong data augmentation are used to learn better image representations. To mitigate the long-tailed class distribution, seesaw loss is used in our method. We also design a lightweight model that calculates prior probabilities from metadata features extracted with CLIP in the post-processing stage. In addition, we attach more importance to venomous species by assigning venomous species labels to some examples that the model is uncertain about. Our method achieves a score of 91.31% on the final metric, which combines F1 with other metrics, on the private leaderboard, ranking 1st among the participants. The code is available at https://github.com/xiaoxsparraw/CLEF2023.
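One common way to use a metadata prior at post-processing time is to multiply the image classifier's class probabilities by the prior and renormalize. The abstract does not say how the prior is combined with the image predictions, so the sketch below is an assumption, not the authors' method; the function name `reweight_with_prior` and all numbers are hypothetical.

```python
def reweight_with_prior(image_probs, prior_probs, eps=1e-12):
    """Multiply per-class image probabilities by a metadata prior, then renormalize.

    Assumed combination rule (Bayes-style product); both inputs are
    equal-length lists of non-negative floats.
    """
    scores = [p * q for p, q in zip(image_probs, prior_probs)]
    total = sum(scores) + eps  # eps guards against an all-zero product
    return [s / total for s in scores]


# Toy example with three classes (made-up values).
image_probs = [0.5, 0.3, 0.2]   # softmax output of an image model
prior_probs = [0.1, 0.6, 0.3]   # prior predicted from metadata

posterior = reweight_with_prior(image_probs, prior_probs)
best_class = max(range(len(posterior)), key=posterior.__getitem__)
print(posterior, best_class)
```

In this toy case the image model alone prefers class 0, while the reweighted scores prefer class 1, showing how a metadata prior can change the final prediction.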

Understanding the Robustness of 3D Object Detection with Bird's-Eye-View Representations in Autonomous Driving

Mar 30, 2023

Abstract: 3D object detection is an essential perception task in autonomous driving for understanding the environment. Bird's-Eye-View (BEV) representations have significantly improved the performance of 3D detectors with camera inputs on popular benchmarks. However, a systematic understanding of the robustness of these vision-dependent BEV models, which is closely related to the safety of autonomous driving systems, is still lacking. In this paper, we evaluate the natural and adversarial robustness of various representative models under extensive settings, to fully understand how explicit BEV features influence their behavior compared with models without BEV. In addition to the classic settings, we propose a 3D consistent patch attack that applies adversarial patches in 3D space to guarantee spatiotemporal consistency, which is more realistic for autonomous driving scenarios. With substantial experiments, we draw several findings: 1) BEV models tend to be more stable than previous methods under different natural conditions and common corruptions, owing to their expressive spatial representations; 2) BEV models are more vulnerable to adversarial noise, mainly because of the redundant BEV features; 3) Camera-LiDAR fusion models have superior performance under different settings with multi-modal inputs, but the BEV fusion model is still vulnerable to adversarial noise on both point clouds and images. These findings highlight safety issues in the application of BEV detectors and could facilitate the development of more robust models.

Benchmarking Robustness of 3D Object Detection to Common Corruptions in Autonomous Driving

Mar 20, 2023

Abstract: 3D object detection is an important task in autonomous driving for perceiving the surroundings. Despite their excellent performance, existing 3D detectors lack robustness to real-world corruptions caused by adverse weather, sensor noise, etc., raising concerns about the safety and reliability of autonomous driving systems. To comprehensively and rigorously benchmark the corruption robustness of 3D detectors, in this paper we design 27 types of common corruptions for both LiDAR and camera inputs, considering real-world driving scenarios. By synthesizing these corruptions on public datasets, we establish three corruption robustness benchmarks -- KITTI-C, nuScenes-C, and Waymo-C. Then, we conduct large-scale experiments on 24 diverse 3D object detection models to evaluate their corruption robustness. Based on the evaluation results, we draw several important findings, including: 1) motion-level corruptions are the most threatening ones and lead to a significant performance drop for all models; 2) LiDAR-camera fusion models demonstrate better robustness; 3) camera-only models are extremely vulnerable to image corruptions, showing the indispensability of LiDAR point clouds. We release the benchmarks and code at https://github.com/kkkcx/3D_Corruptions_AD. We hope that our benchmarks and findings can provide insights for future research on developing robust 3D object detection models.

To Make Yourself Invisible with Adversarial Semantic Contours

Mar 01, 2023

Abstract: Modern object detectors are vulnerable to adversarial examples, which may bring risks to real-world applications. The sparse attack is an important task which, compared with the popular adversarial perturbation on the whole image, needs to select the potential pixels, a selection that is generally regularized by an $\ell_0$-norm constraint, and simultaneously optimize the corresponding texture. The non-differentiability of the $\ell_0$ norm brings challenges, and many works on attacking object detection have adopted manually designed patterns to address them. These patterns are meaningless and independent of objects, and therefore lead to relatively poor attack performance. In this paper, we propose Adversarial Semantic Contour (ASC), an MAP estimate of a Bayesian formulation of sparse attack with a deceived prior of object contour. The object contour prior effectively reduces the search space of pixel selection and improves the attack by introducing more semantic bias. Extensive experiments demonstrate that ASC can corrupt the predictions of 9 modern detectors with different architectures (e.g., one-stage, two-stage and Transformer) by modifying fewer than 5% of the pixels of the object area in COCO in the white-box scenario and around 10% of those in the black-box scenario. We further extend the attack to datasets for autonomous driving systems to verify its effectiveness. We conclude with cautions about contour being a common weakness of object detectors with various architectures and about the care needed in applying them in safety-sensitive scenarios.

* 11 pages, 7 figures, published in Computer Vision and Image Understanding in 2023

You Cannot Easily Catch Me: A Low-Detectable Adversarial Patch for Object Detectors

Sep 30, 2021

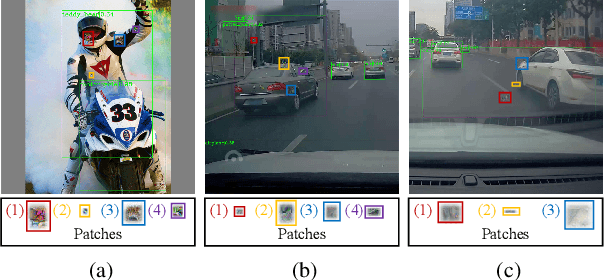

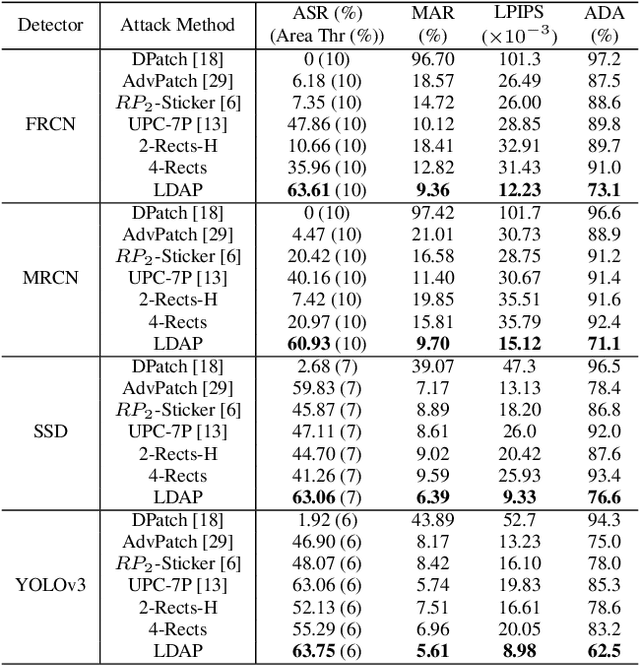

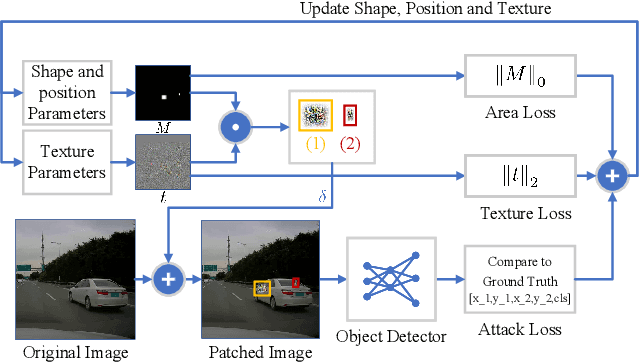

Abstract: Blind spots or outright deceit can bedevil and deceive machine learning models. Unidentified objects such as digital "stickers," also known as adversarial patches, can fool facial recognition systems, surveillance systems and self-driving cars. Fortunately, most existing adversarial patches can be outwitted, disabled and rejected by a simple classification network called an adversarial patch detector, which distinguishes adversarial patches from original images. An object detector classifies and predicts the types of objects within an image, such as by distinguishing a motorcyclist from the motorcycle, while also localizing each object's placement within the image by "drawing" so-called bounding boxes around each object, once again separating the motorcyclist from the motorcycle. To train detectors even better, however, we need to keep subjecting them to confusing or deceitful adversarial patches as we probe for the models' blind spots. For such probes, we came up with a novel approach, a Low-Detectable Adversarial Patch (LDAP), which attacks an object detector with small and texture-consistent adversarial patches, making these adversaries less likely to be recognized. Concretely, we use several geometric primitives to model the shapes and positions of the patches. To enhance our attack performance, we also assign different weights to the bounding boxes in the loss function. Our experiments on the common detection dataset COCO as well as the driving-video dataset D2-City show that LDAP is an effective attack method and can resist the adversarial patch detector.

Adversarial Semantic Contour for Object Detection

Sep 30, 2021

Abstract: Modern object detectors are vulnerable to adversarial examples, which brings potential risks to numerous applications, e.g., self-driving cars. Among attacks regularized by the $\ell_p$ norm, the $\ell_0$-attack aims to modify as few pixels as possible. Nevertheless, the problem is nontrivial since it generally requires optimizing the shape and the texture simultaneously, which is an NP-hard problem. To address this issue, we propose a novel method, Adversarial Semantic Contour (ASC), guided by the object contour as a prior. With this prior, we reduce the search space to accelerate the $\ell_0$ optimization, and also introduce more semantic information, which should affect the detectors more. Based on the contour, we alternately optimize the selection of modified pixels via sampling and their colors via gradient descent. Extensive experiments demonstrate that our proposed ASC outperforms the most common manually designed patterns (e.g., square patches and grids) on the disappearing task. By modifying no more than 5% and 3.5% of the object area respectively, our proposed ASC can successfully mislead mainstream object detectors including SSD512, Yolov4, Mask RCNN, Faster RCNN, etc.
