Zhenyue Qin

Bob

A Federated and Parameter-Efficient Framework for Large Language Model Training in Medicine

Jan 29, 2026

Abstract:Large language models (LLMs) have demonstrated strong performance on medical benchmarks, including question answering and diagnosis. To enable their use in clinical settings, LLMs are typically further adapted through continued pretraining or post-training using clinical data. However, most medical LLMs are trained on data from a single institution, which limits their generalizability and safety in heterogeneous systems. Federated learning (FL) is a promising solution for enabling collaborative model development across healthcare institutions. Yet applying FL to LLMs in medicine remains fundamentally limited. First, conventional FL requires transmitting the full model during each communication round, which becomes impractical for multi-billion-parameter LLMs given the limited computational resources. Second, many FL algorithms implicitly assume data homogeneity, whereas real-world clinical data are highly heterogeneous across patients, diseases, and institutional practices. We introduce a model-agnostic and parameter-efficient federated learning framework for adapting LLMs to medical applications. Fed-MedLoRA transmits only low-rank adapter parameters, reducing communication and computation overhead, while Fed-MedLoRA+ further incorporates adaptive, data-aware aggregation to improve convergence under cross-site heterogeneity. We apply the framework to clinical information extraction (IE), which transforms patient narratives into structured medical entities and relations. Accuracy was assessed across five patient cohorts through comparisons with BERT models and with LLaMA-3, DeepSeek-R1, and GPT-4o models. Evaluation settings included (1) in-domain training and testing, (2) external validation on independent cohorts, and (3) a low-resource new-site adaptation scenario using real-world clinical notes from the Yale New Haven Health System.
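To make the communication argument concrete, here is a minimal sketch of how transmitting only low-rank adapters shrinks the per-round payload, followed by a plain sample-size-weighted average of adapter vectors. The abstract does not specify the aggregation rule, so the FedAvg-style weighting, the function names `lora_param_count` and `weighted_average`, and the 4096 x 4096 layer size with rank 8 are all illustrative assumptions, not the authors' method. The adaptive, data-aware aggregation of Fed-MedLoRA+ is not modeled here.

```python
def lora_param_count(d_out, d_in, rank):
    """Parameters in one adapter pair: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def weighted_average(site_updates, site_sizes):
    """Sample-size-weighted average of flat adapter vectors (FedAvg-style; an assumption)."""
    total = sum(site_sizes)
    length = len(site_updates[0])
    return [
        sum(update[i] * size for update, size in zip(site_updates, site_sizes)) / total
        for i in range(length)
    ]


# Hypothetical layer: a 4096 x 4096 weight matrix with a rank-8 adapter.
full_params = 4096 * 4096
adapter_params = lora_param_count(4096, 4096, rank=8)
print(full_params // adapter_params)  # ratio of full-matrix to adapter parameters

# Two hypothetical sites holding 1 and 3 samples respectively.
print(weighted_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

One caveat with this simple scheme: averaging the two adapter factors separately is generally not the same as averaging their products, which is one reason more careful aggregation rules may be preferred in practice.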

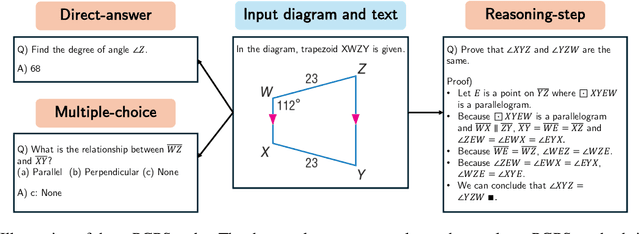

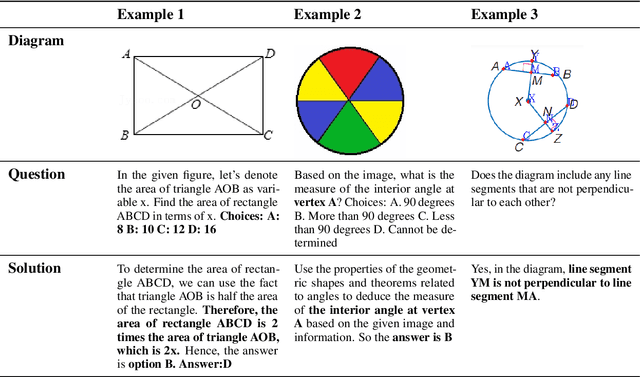

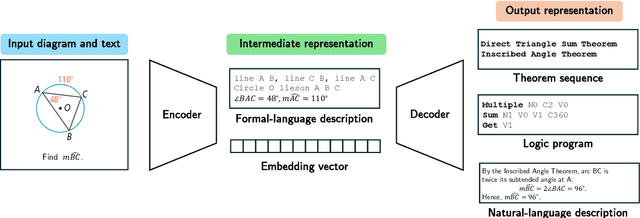

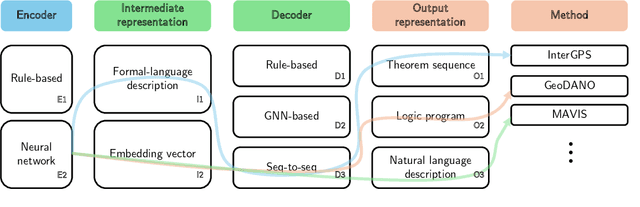

Plane Geometry Problem Solving with Multi-modal Reasoning: A Survey

May 20, 2025

Abstract:Plane geometry problem solving (PGPS) has recently gained significant attention as a benchmark to assess the multi-modal reasoning capabilities of large vision-language models. Despite the growing interest in PGPS, the research community still lacks a comprehensive overview that systematically synthesizes recent work in PGPS. To fill this gap, we present a survey of existing PGPS studies. We first categorize PGPS methods into an encoder-decoder framework and summarize the corresponding output formats used by their encoders and decoders. Subsequently, we classify and analyze these encoders and decoders according to their architectural designs. Finally, we outline major challenges and promising directions for future research. In particular, we discuss the hallucination issues arising during the encoding phase within encoder-decoder architectures, as well as the problem of data leakage in current PGPS benchmarks.

GeoDANO: Geometric VLM with Domain Agnostic Vision Encoder

Feb 17, 2025

Abstract:We introduce GeoDANO, a geometric vision-language model (VLM) with a domain-agnostic vision encoder, for solving plane geometry problems. Although VLMs have been employed for solving geometry problems, their ability to recognize geometric features remains insufficiently analyzed. To address this gap, we propose a benchmark that evaluates the recognition of visual geometric features, including primitives such as dots and lines, and relations such as orthogonality. Our preliminary study shows that vision encoders often used in general-purpose VLMs, e.g., OpenCLIP, fail to detect these features and struggle to generalize across domains. To overcome this limitation, we develop GeoCLIP, a CLIP-based model trained on synthetic geometric diagram-caption pairs. Benchmark results show that GeoCLIP outperforms existing vision encoders in recognizing geometric features. We then propose our VLM, GeoDANO, which augments GeoCLIP with a domain adaptation strategy for unseen diagram styles. GeoDANO outperforms specialized methods for plane geometry problems and GPT-4o on MathVerse.

HandCraft: Anatomically Correct Restoration of Malformed Hands in Diffusion Generated Images

Nov 07, 2024

Abstract:Generative text-to-image models, such as Stable Diffusion, have demonstrated a remarkable ability to generate diverse, high-quality images. However, they are surprisingly inept when it comes to rendering human hands, which are often anatomically incorrect or reside in the "uncanny valley". In this paper, we propose a method, HandCraft, for restoring such malformed hands. This is achieved by automatically constructing masks and depth images for hands as conditioning signals using a parametric model, allowing a diffusion-based image editor to fix the hand's anatomy and adjust its pose while seamlessly integrating the changes into the original image, preserving pose, color, and style. Our plug-and-play hand restoration solution is compatible with existing pretrained diffusion models, and the restoration process facilitates adoption by eschewing any fine-tuning or training requirements for the diffusion models. We also contribute MalHand datasets that contain generated images with a wide variety of malformed hands in several styles for hand detector training and hand restoration benchmarking, and demonstrate through qualitative and quantitative evaluation that HandCraft not only restores anatomical correctness but also maintains the integrity of the overall image.

Visual Prompting in LLMs for Enhancing Emotion Recognition

Oct 03, 2024

Abstract:Vision Large Language Models (VLLMs) are transforming the intersection of computer vision and natural language processing. Nonetheless, the potential of using visual prompts for emotion recognition in these models remains largely unexplored and untapped. Traditional methods in VLLMs struggle with spatial localization and often discard valuable global context. To address this problem, we propose a Set-of-Vision prompting (SoV) approach that enhances zero-shot emotion recognition by using spatial information, such as bounding boxes and facial landmarks, to mark targets precisely. SoV improves accuracy in face count and emotion categorization while preserving the enriched image context. Through a battery of experimentation and analysis of recent commercial or open-source VLLMs, we evaluate the SoV model's ability to comprehend facial expressions in natural environments. Our findings demonstrate the effectiveness of integrating spatial visual prompts into VLLMs for improving emotion recognition performance.

LMOD: A Large Multimodal Ophthalmology Dataset and Benchmark for Large Vision-Language Models

Oct 02, 2024

Abstract:Ophthalmology relies heavily on detailed image analysis for diagnosis and treatment planning. While large vision-language models (LVLMs) have shown promise in understanding complex visual information, their performance on ophthalmology images remains underexplored. We introduce LMOD, a dataset and benchmark for evaluating LVLMs on ophthalmology images, covering anatomical understanding, diagnostic analysis, and demographic extraction. LMOD includes 21,993 images spanning optical coherence tomography, scanning laser ophthalmoscopy, eye photos, surgical scenes, and color fundus photographs. We benchmark 13 state-of-the-art LVLMs and find that they are far from perfect for comprehending ophthalmology images. Models struggle with diagnostic analysis and demographic extraction, and reveal weaknesses in spatial reasoning, diagnostic analysis, handling out-of-domain queries, and safeguards for handling biomarkers of ophthalmology images.

Authentic Emotion Mapping: Benchmarking Facial Expressions in Real News

Apr 21, 2024

Abstract:In this paper, we present a novel benchmark for Emotion Recognition using facial landmarks extracted from realistic news videos. Traditional methods relying on RGB images are resource-intensive, whereas our approach with Facial Landmark Emotion Recognition (FLER) offers a simplified yet effective alternative. By leveraging Graph Neural Networks (GNNs) to analyze the geometric and spatial relationships of facial landmarks, our method enhances the understanding and accuracy of emotion recognition. We discuss the advancements and challenges in deep learning techniques for emotion recognition, particularly focusing on GNNs and Transformers. Our experimental results demonstrate the viability and potential of our dataset as a benchmark, setting a new direction for future research in emotion recognition technologies. The code and models are available at: https://github.com/wangzhifengharrison/benchmark_real_news

Detecting and Restoring Non-Standard Hands in Stable Diffusion Generated Images

Dec 07, 2023

Abstract:We introduce a pipeline to address anatomical inaccuracies in Stable Diffusion generated hand images. The initial step involves constructing a specialized dataset, focusing on hand anomalies, to train our models effectively. A finetuned detection model is pivotal for precise identification of these anomalies, ensuring targeted correction. Body pose estimation aids in understanding hand orientation and positioning, crucial for accurate anomaly correction. The integration of ControlNet and InstructPix2Pix facilitates sophisticated inpainting and pixel-level transformation, respectively. This dual approach allows for high-fidelity image adjustments. This comprehensive approach ensures the generation of images with anatomically accurate hands, closely resembling real-world appearances. Our experimental results demonstrate the pipeline's efficacy in enhancing hand image realism in Stable Diffusion outputs. We provide an online demo at https://fixhand.yiqun.io

Strengthening Skeletal Action Recognizers via Leveraging Temporal Patterns

Jun 04, 2022

Abstract:Skeleton sequences are compact and lightweight. Numerous skeleton-based action recognizers have been proposed to classify human behaviors. In this work, we aim to incorporate components that are compatible with existing models and further improve their accuracy. To this end, we design two temporal accessories: discrete cosine encoding (DCE) and chronological loss (CRL). DCE enables models to analyze motion patterns in the frequency domain while alleviating the influence of signal noise. CRL guides networks to explicitly capture the sequence's chronological order. These two components consistently give many recently proposed action recognizers accuracy boosts, achieving new state-of-the-art (SOTA) accuracy on two large benchmark datasets (NTU60 and NTU120).
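The frequency-domain idea behind DCE can be illustrated with a plain discrete cosine transform (DCT-II) of a single joint coordinate over time. The abstract does not give the exact DCE formulation, so this sketch is an assumption: it uses the textbook unnormalized DCT-II, and the helper `low_frequency_features`, which keeps only the first few coefficients as a crude way to suppress high-frequency noise, is hypothetical.

```python
import math


def dct2(signal):
    """Unnormalized DCT-II: X_k = sum_t x_t * cos(pi / N * (t + 0.5) * k)."""
    n = len(signal)
    return [
        sum(x * math.cos(math.pi / n * (t + 0.5) * k) for t, x in enumerate(signal))
        for k in range(n)
    ]


def low_frequency_features(signal, keep):
    """Keep the first `keep` DCT coefficients (hypothetical noise-reduction step)."""
    return dct2(signal)[:keep]


# A joint that does not move has all its energy in the zero-frequency term.
static_joint = [2.0, 2.0, 2.0, 2.0]
print(dct2(static_joint))

# A hypothetical trajectory reduced to its two lowest-frequency components.
print(low_frequency_features([0.0, 0.5, 1.0, 0.5, 0.0], keep=2))
```

Slowly varying motion concentrates in the leading coefficients, while frame-to-frame jitter lands in the trailing ones, which is why truncation acts as a simple smoother.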

ANUBIS: Skeleton Action Recognition Dataset, Review, and Benchmark

May 08, 2022

Abstract:Skeleton-based action recognition, as a subarea of action recognition, is swiftly accumulating attention and popularity. The task is to recognize actions performed by human articulation points. Compared with other data modalities, 3D human skeleton representations have extensive unique desirable characteristics, including succinctness, robustness, racial-impartiality, and many more. We aim to provide a roadmap of the landscape of skeleton-based action recognition for new and existing researchers. To this end, we present a review in the form of a taxonomy of existing works on skeleton-based action recognition. We partition them into four major categories: (1) datasets; (2) extracting spatial features; (3) capturing temporal patterns; (4) improving signal quality. For each method, we provide concise yet sufficiently informative descriptions. To promote fairer and more comprehensive evaluation of existing approaches to skeleton-based action recognition, we collect ANUBIS, a large-scale human skeleton dataset. Compared with previously collected datasets, ANUBIS is advantageous in the following four aspects: (1) employing more recently released sensors; (2) containing a novel back view; (3) encouraging high enthusiasm of subjects; (4) including actions of the COVID pandemic era. Using ANUBIS, we comparably benchmark the performance of current skeleton-based action recognizers. At the end of this paper, we give an outlook on the future development of skeleton-based action recognition by listing several new technical problems. We believe they are valuable to solve in order to commercialize skeleton-based action recognition in the near future. The ANUBIS dataset is available at: http://hcc-workshop.anu.edu.au/webs/anu101/home.
