Seunghyuk Cho

In-Place Feedback: A New Paradigm for Guiding LLMs in Multi-Turn Reasoning

Oct 01, 2025

Abstract:Large language models (LLMs) are increasingly studied in the context of multi-turn reasoning, where models iteratively refine their outputs based on user-provided feedback. Such settings are crucial for tasks that require complex reasoning, yet existing feedback paradigms often rely on issuing new messages. LLMs struggle to integrate these reliably, leading to inconsistent improvements. In this work, we introduce in-place feedback, a novel interaction paradigm in which users directly edit an LLM's previous response, and the model conditions on this modified response to generate its revision. Empirical evaluations on diverse reasoning-intensive benchmarks reveal that in-place feedback achieves better performance than conventional multi-turn feedback while using $79.1\%$ fewer tokens. Complementary analyses on controlled environments further demonstrate that in-place feedback resolves a core limitation of multi-turn feedback: models often fail to apply feedback precisely to erroneous parts of the response, leaving errors uncorrected and sometimes introducing new mistakes into previously correct content. These findings suggest that in-place feedback offers a more natural and effective mechanism for guiding LLMs in reasoning-intensive tasks.
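The contrast between the two feedback styles described in this abstract can be sketched as follows. This is a minimal illustration only: the chat-message layout, the function names `multi_turn_messages` and `in_place_messages`, and the choice to apply the edit as a single span replacement are assumptions made here, not the paper's actual prompting format.

```python
from typing import Dict, List

Message = Dict[str, str]


def multi_turn_messages(question: str, response: str, feedback: str) -> List[Message]:
    """Conventional feedback: the critique is appended as a new user message."""
    return [
        {"role": "user", "content": question},
        {"role": "assistant", "content": response},
        {"role": "user", "content": feedback},
    ]


def in_place_messages(
    question: str, response: str, wrong_span: str, corrected_span: str
) -> List[Message]:
    """In-place feedback (hypothetical layout): the user edits the previous
    response directly, and the model would condition on the edited text."""
    if wrong_span not in response:
        raise ValueError("wrong_span does not occur in the response")
    # Replace only the first occurrence so the rest of the response is untouched.
    edited = response.replace(wrong_span, corrected_span, 1)
    return [
        {"role": "user", "content": question},
        {"role": "assistant", "content": edited},
    ]


if __name__ == "__main__":
    msgs = in_place_messages(
        "What is 12 * 13?",
        "12 * 13 = 120 + 26 = 146.",
        wrong_span="120 + 26",
        corrected_span="120 + 36",
    )
    for m in msgs:
        print(m["role"], "->", m["content"])
```

In this sketch the in-place variant carries no separate feedback message, which is one plausible reason such a scheme could use fewer tokens than appending a new turn.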

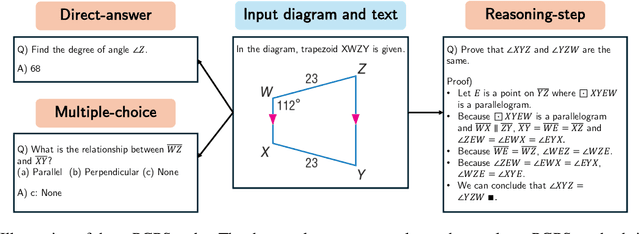

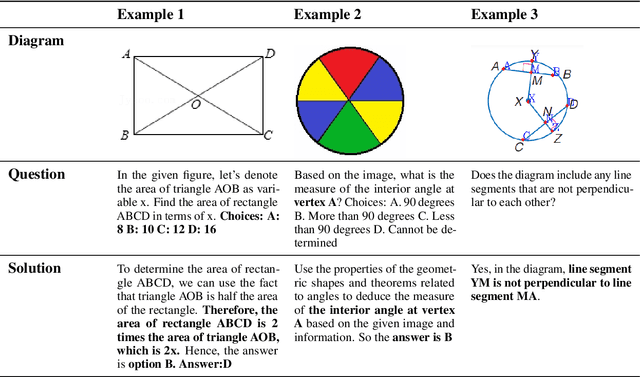

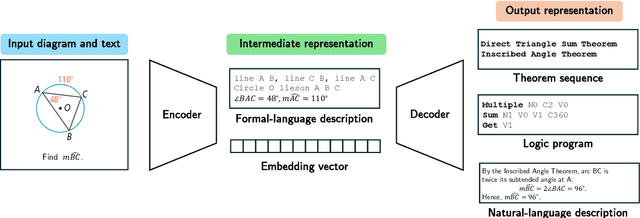

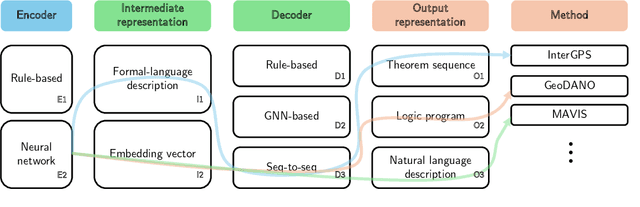

Plane Geometry Problem Solving with Multi-modal Reasoning: A Survey

May 20, 2025

Abstract:Plane geometry problem solving (PGPS) has recently gained significant attention as a benchmark to assess the multi-modal reasoning capabilities of large vision-language models. Despite the growing interest in PGPS, the research community still lacks a comprehensive overview that systematically synthesizes recent work in PGPS. To fill this gap, we present a survey of existing PGPS studies. We first categorize PGPS methods into an encoder-decoder framework and summarize the corresponding output formats used by their encoders and decoders. Subsequently, we classify and analyze these encoders and decoders according to their architectural designs. Finally, we outline major challenges and promising directions for future research. In particular, we discuss the hallucination issues arising during the encoding phase within encoder-decoder architectures, as well as the problem of data leakage in current PGPS benchmarks.

CoPL: Collaborative Preference Learning for Personalizing LLMs

Mar 03, 2025

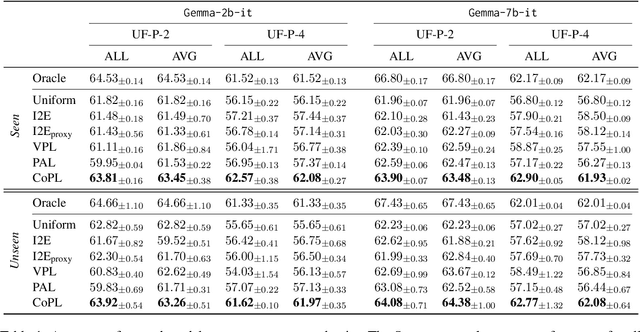

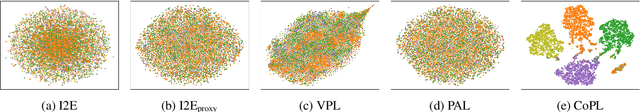

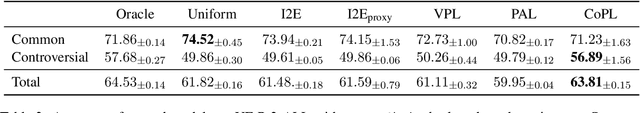

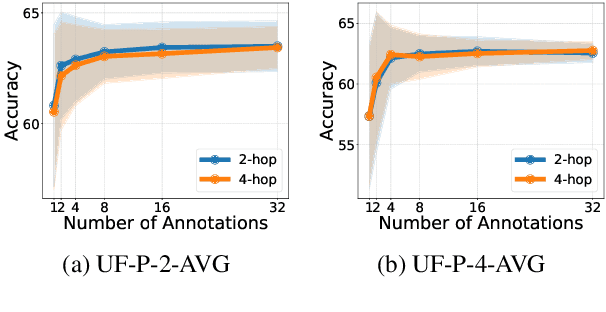

Abstract:Personalizing large language models (LLMs) is important for aligning outputs with diverse user preferences, yet existing methods struggle with flexibility and generalization. We propose CoPL (Collaborative Preference Learning), a graph-based collaborative filtering framework that models user-response relationships to enhance preference estimation, particularly in sparse annotation settings. By integrating a mixture of LoRA experts, CoPL efficiently fine-tunes LLMs while dynamically balancing shared and user-specific preferences. Additionally, an optimization-free adaptation strategy enables generalization to unseen users without fine-tuning. Experiments on UltraFeedback-P demonstrate that CoPL outperforms existing personalized reward models, effectively capturing both common and controversial preferences, making it a scalable solution for personalized LLM alignment.

GeoDANO: Geometric VLM with Domain Agnostic Vision Encoder

Feb 17, 2025

Abstract:We introduce GeoDANO, a geometric vision-language model (VLM) with a domain-agnostic vision encoder, for solving plane geometry problems. Although VLMs have been employed for solving geometry problems, their ability to recognize geometric features remains insufficiently analyzed. To address this gap, we propose a benchmark that evaluates the recognition of visual geometric features, including primitives such as dots and lines, and relations such as orthogonality. Our preliminary study shows that vision encoders often used in general-purpose VLMs, e.g., OpenCLIP, fail to detect these features and struggle to generalize across domains. To overcome this limitation, we develop GeoCLIP, a CLIP-based model trained on synthetic geometric diagram-caption pairs. Benchmark results show that GeoCLIP outperforms existing vision encoders in recognizing geometric features. We then propose our VLM, GeoDANO, which augments GeoCLIP with a domain adaptation strategy for unseen diagram styles. GeoDANO outperforms specialized methods for plane geometry problems and GPT-4o on MathVerse.

Curve Your Attention: Mixed-Curvature Transformers for Graph Representation Learning

Sep 08, 2023

Abstract:Real-world graphs naturally exhibit hierarchical or cyclical structures that are unfit for the typical Euclidean space. While there exist graph neural networks that leverage hyperbolic or spherical spaces to learn representations that embed such structures more accurately, these methods are confined to the message-passing paradigm, making the models vulnerable to side effects such as oversmoothing and oversquashing. More recent works have proposed global attention-based graph Transformers that can easily model long-range interactions, but their extensions to non-Euclidean geometry remain unexplored. To bridge this gap, we propose the Fully Product-Stereographic Transformer, a generalization of Transformers that operates entirely on the product of constant curvature spaces. When combined with tokenized graph Transformers, our model can learn the curvature appropriate for the input graph in an end-to-end fashion, without the need for additional tuning over different curvature initializations. We also provide a kernelized approach to non-Euclidean attention, which enables our model to run with time and memory cost linear in the number of nodes and edges while respecting the underlying geometry. Experiments on graph reconstruction and node classification demonstrate the benefits of generalizing Transformers to the non-Euclidean domain.

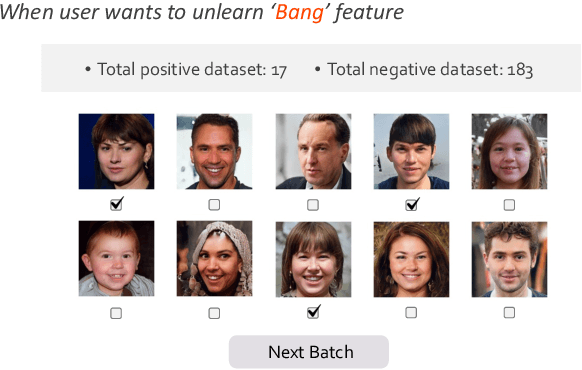

Feature Unlearning for Generative Models via Implicit Feedback

Mar 10, 2023

Abstract:We tackle the problem of feature unlearning from a pretrained image generative model. Unlike a common unlearning task where the unlearning target is a subset of the training set, we aim to unlearn a specific feature, such as hairstyle from facial images, from the pretrained generative model. As the target feature is only present in a local region of an image, unlearning the entire image from the pretrained model may result in losing other details in the remaining region of the image. To specify which features to unlearn, we develop an implicit feedback mechanism where a user can select images containing the target feature. From the implicit feedback, we identify a latent representation corresponding to the target feature and then use the representation to unlearn the feature from the generative model. Our framework generalizes to two well-known families of generative models: GANs and VAEs. Through experiments on the MNIST and CelebA datasets, we show that target features are successfully removed while keeping the fidelity of the original models.

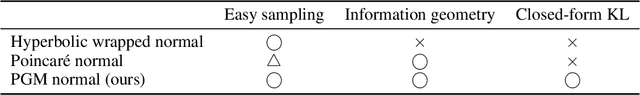

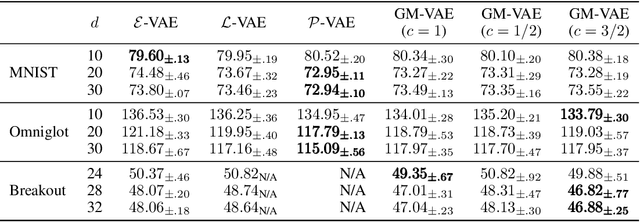

GM-VAE: Representation Learning with VAE on Gaussian Manifold

Sep 30, 2022

Abstract:We propose a Gaussian manifold variational auto-encoder (GM-VAE) whose latent space consists of a set of diagonal Gaussian distributions. It is known that the set of diagonal Gaussian distributions with the Fisher information metric forms a product hyperbolic space, which we call a Gaussian manifold. To learn the VAE endowed with the Gaussian manifold, we first propose a pseudo Gaussian manifold normal distribution based on the Kullback-Leibler divergence, a local approximation of the squared Fisher-Rao distance, to define a density over the latent space. With the newly proposed distribution, we introduce geometric transformations at the end of the encoder and the start of the decoder of the VAE, respectively, to help the transition between the Euclidean and Gaussian manifolds. Through empirical experiments, we show competitive generalization performance of GM-VAE against other variants of hyperbolic and Euclidean VAEs. Our model also achieves strong numerical stability, addressing a common limitation reported for previous hyperbolic VAEs.
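The statement that the Kullback-Leibler divergence locally approximates the squared Fisher-Rao distance can be checked numerically for a univariate Gaussian. The sketch below is not taken from the paper: it uses the standard closed-form KL divergence between two Gaussians and the standard Fisher information metric in $(\mu, \sigma)$ coordinates, $ds^2 = (d\mu^2 + 2\,d\sigma^2)/\sigma^2$, together with the usual second-order expansion $\mathrm{KL} \approx \tfrac{1}{2} ds^2$. The function names are hypothetical, and the paper may use a different constant convention.

```python
import math


def kl_gaussian(mu1: float, sigma1: float, mu2: float, sigma2: float) -> float:
    """Closed-form KL( N(mu1, sigma1^2) || N(mu2, sigma2^2) )."""
    return (
        math.log(sigma2 / sigma1)
        + (sigma1 ** 2 + (mu1 - mu2) ** 2) / (2.0 * sigma2 ** 2)
        - 0.5
    )


def half_fisher_quadratic(sigma: float, d_mu: float, d_sigma: float) -> float:
    """Half of the squared Fisher line element at (mu, sigma):
    0.5 * (d_mu^2 + 2 * d_sigma^2) / sigma^2."""
    return 0.5 * (d_mu ** 2 + 2.0 * d_sigma ** 2) / sigma ** 2


if __name__ == "__main__":
    mu, sigma = 0.0, 1.0
    for step in (1e-1, 1e-2, 1e-3):
        kl = kl_gaussian(mu, sigma, mu + step, sigma + step)
        approx = half_fisher_quadratic(sigma, step, step)
        # The ratio should approach 1 as the perturbation shrinks.
        print(f"step={step:g}  KL={kl:.3e}  half ds^2={approx:.3e}  ratio={kl / approx:.4f}")
```

The agreement holds only for nearby distributions; for distant pairs the KL divergence and the squared geodesic distance differ, which is why the abstract calls it a local approximation.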

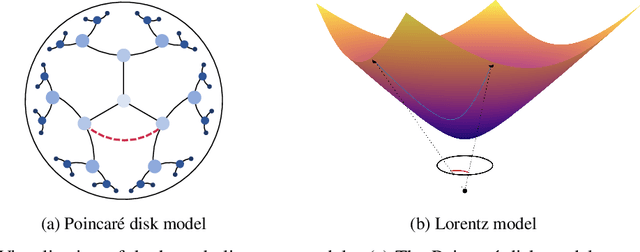

A Rotated Hyperbolic Wrapped Normal Distribution for Hierarchical Representation Learning

May 25, 2022

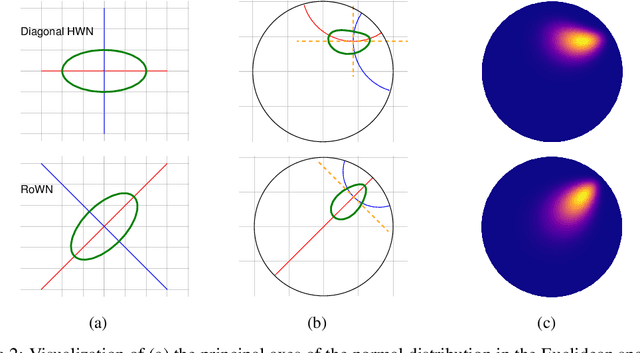

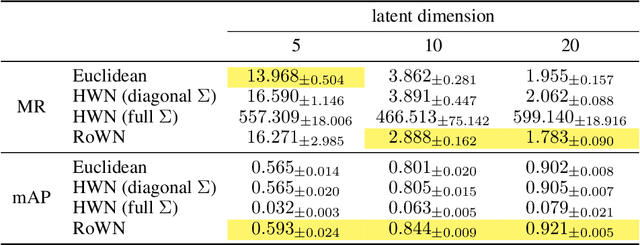

Abstract:We present a rotated hyperbolic wrapped normal distribution (RoWN), a simple yet effective alteration of a hyperbolic wrapped normal distribution (HWN). The HWN expands the domain of probabilistic modeling from Euclidean to hyperbolic space, where a tree can be embedded with arbitrarily low distortion in theory. In this work, we analyze the geometric properties of the diagonal HWN, a standard choice of distribution in probabilistic modeling. The analysis shows that the distribution is inappropriate to represent the data points at the same hierarchy level through their angular distance with the same norm in the Poincaré disk model. We then empirically verify the presence of limitations of HWN, and show how RoWN, the newly proposed distribution, can alleviate the limitations on various hierarchical datasets, including noisy synthetic binary tree, WordNet, and Atari 2600 Breakout.

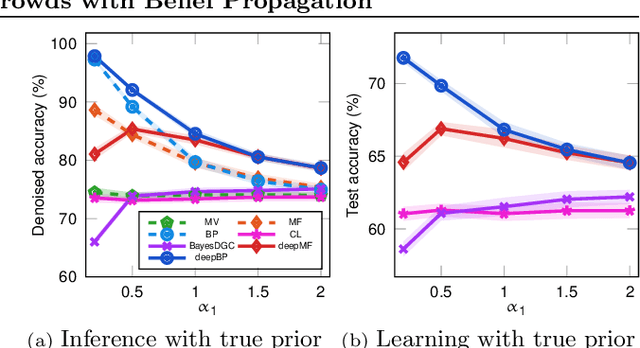

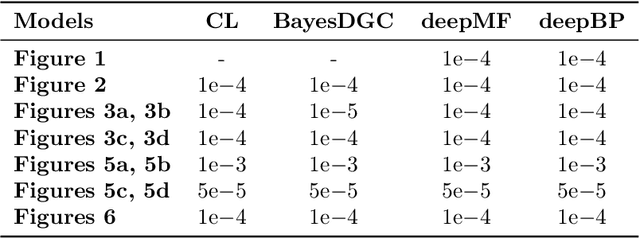

Robust Deep Learning from Crowds with Belief Propagation

Nov 01, 2021

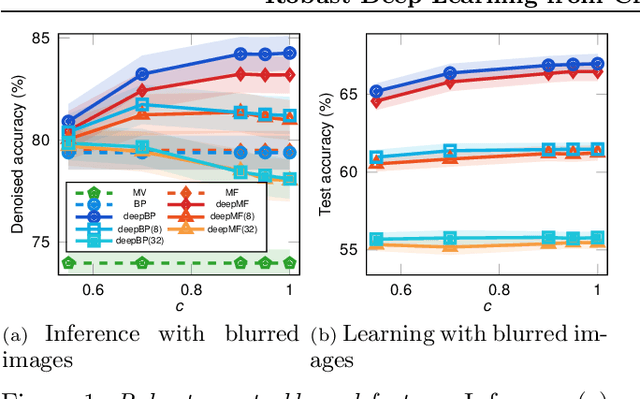

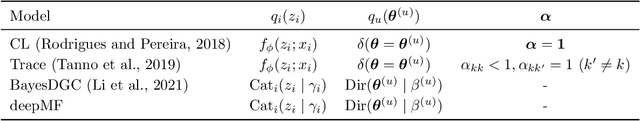

Abstract:Crowdsourcing systems enable us to collect noisy labels from crowd workers. A graphical model representing local dependencies between workers and tasks provides a principled way of reasoning over the true labels from the noisy answers. However, in many cases one needs a predictive model that works on unseen data and is learned directly from crowdsourced datasets instead of the true labels. To infer true labels and learn a predictive model simultaneously, we propose a new data-generating process, where a neural network generates the true labels from task features. We devise an EM framework alternating variational inference and deep learning to infer the true labels and to update the neural network, respectively. Experimental results with synthetic and real datasets show that a belief-propagation-based EM algorithm is robust to i) corruption in task features, ii) multi-modal or mismatched worker priors, and iii) a few spammers submitting noise to many tasks.

Semi-supervised Image Classification with Grad-CAM Consistency

Aug 31, 2021

Abstract:Consistency training, which exploits both supervised and unsupervised learning with different augmentations on images, is an effective way to utilize unlabeled data in semi-supervised learning (SSL). Here, we present another version of the method with a Grad-CAM consistency loss, so that it can be used to train models with better generalization and adjustability. We show that our method improves the accuracy of the baseline ResNet model by at most 1.44 %, and by 0.31 $\pm$ 0.59 %p on average, on the CIFAR-10 dataset. We conduct an ablation study comparing against using only pseudo-labels for consistency training. We also argue that our method can adapt to different environments when targeted at different units in the model. The code is available at https://github.com/gimme1dollar/gradcam-consistency-semi-sup.
