Zhe Jin

Auto-US: An Ultrasound Video Diagnosis Agent Using Video Classification Framework and LLMs

Nov 11, 2025

Abstract: AI-assisted ultrasound video diagnosis presents new opportunities to enhance the efficiency and accuracy of medical imaging analysis. However, existing research remains limited in terms of dataset diversity, diagnostic performance, and clinical applicability. In this study, we propose Auto-US, an intelligent diagnosis agent that integrates ultrasound video data with clinical diagnostic text. To support this, we constructed the CUV Dataset of 495 ultrasound videos spanning five categories and three organs, aggregated from multiple open-access sources. We developed CTU-Net, which achieves state-of-the-art performance in ultrasound video classification, reaching an accuracy of 86.73%. Furthermore, by incorporating large language models, Auto-US is capable of generating clinically meaningful diagnostic suggestions. The final diagnostic scores for each case exceeded 3 out of 5 and were validated by professional clinicians. These results demonstrate the effectiveness and clinical potential of Auto-US in real-world ultrasound applications. Code and data are available at: https://github.com/Bean-Young/Auto-US.

UltraGS: Gaussian Splatting for Ultrasound Novel View Synthesis

Nov 11, 2025

Abstract: Ultrasound imaging is a cornerstone of non-invasive clinical diagnostics, yet its limited field of view complicates novel view synthesis. We propose UltraGS, a Gaussian Splatting framework optimized for ultrasound imaging. First, we introduce a depth-aware Gaussian splatting strategy, where each Gaussian is assigned a learnable field of view, enabling accurate depth prediction and precise structural representation. Second, we design SH-DARS, a lightweight rendering function combining low-order spherical harmonics with ultrasound-specific wave physics, including depth attenuation, reflection, and scattering, to model tissue intensity accurately. Third, we contribute the Clinical Ultrasound Examination Dataset, a benchmark capturing diverse anatomical scans under real-world clinical protocols. Extensive experiments on three datasets demonstrate UltraGS's superiority, achieving state-of-the-art results in PSNR (up to 29.55), SSIM (up to 0.89), and MSE (as low as 0.002) while enabling real-time synthesis at 64.69 fps. The code and dataset are open-sourced at: https://github.com/Bean-Young/UltraGS.
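
For readers unfamiliar with the metrics quoted in this abstract, the sketch below shows how MSE and PSNR are conventionally computed for a rendered image against a reference. It is a generic illustration, not code from the UltraGS repository; the function names and the assumption that pixel intensities lie in [0, 1] (so `max_value=1.0`) are ours.

```python
import numpy as np


def mse(reference, rendered):
    """Mean squared error between two images of the same shape."""
    reference = np.asarray(reference, dtype=np.float64)
    rendered = np.asarray(rendered, dtype=np.float64)
    return float(np.mean((reference - rendered) ** 2))


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB.

    max_value is the largest possible pixel intensity; 1.0 is an
    assumption for images normalized to [0, 1].
    """
    err = mse(reference, rendered)
    if err == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / err))
```

Because PSNR is a logarithmic function of MSE, the two numbers move together: lowering the MSE by a factor of ten raises the PSNR by 10 dB for a fixed `max_value`.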

Wormhole Dynamics in Deep Neural Networks

Aug 20, 2025

Abstract: This work investigates the generalization behavior of deep neural networks (DNNs), focusing on the phenomenon of "fooling examples," where DNNs confidently classify inputs that appear random or unstructured to humans. To explore this phenomenon, we introduce an analytical framework based on maximum likelihood estimation, without adhering to conventional numerical approaches that rely on gradient-based optimization and explicit labels. Our analysis reveals that DNNs operating in an overparameterized regime exhibit a collapse in the output feature space. While this collapse improves network generalization, adding more layers eventually leads to a state of degeneracy, where the model learns trivial solutions by mapping distinct inputs to the same output, resulting in zero loss. Further investigation demonstrates that this degeneracy can be bypassed using our newly derived "wormhole" solution. The wormhole solution, when applied to arbitrary fooling examples, reconciles meaningful labels with random ones and provides a novel perspective on shortcut learning. These findings offer deeper insights into DNN generalization and highlight directions for future research on learning dynamics in unsupervised settings to bridge the gap between theory and practice.

Explicit and Implicit Representations in AI-based 3D Reconstruction for Radiology: A systematic literature review

Apr 15, 2025

Abstract: The demand for high-quality medical imaging in clinical practice and assisted diagnosis has made 3D reconstruction in radiological imaging a key research focus. Artificial intelligence (AI) has emerged as a promising approach to enhancing reconstruction accuracy while reducing acquisition and processing time, thereby minimizing patient radiation exposure and discomfort and ultimately benefiting clinical diagnosis. This review explores state-of-the-art AI-based 3D reconstruction algorithms in radiological imaging, categorizing them into explicit and implicit approaches based on their underlying principles. Explicit methods include point-based, volume-based, and Gaussian representations, while implicit methods encompass implicit prior embedding and neural radiance fields. Additionally, we examine commonly used evaluation metrics and benchmark datasets. Finally, we discuss the current state of development, key challenges, and future research directions in this evolving field. Our project is available at: https://github.com/Bean-Young/AI4Med.

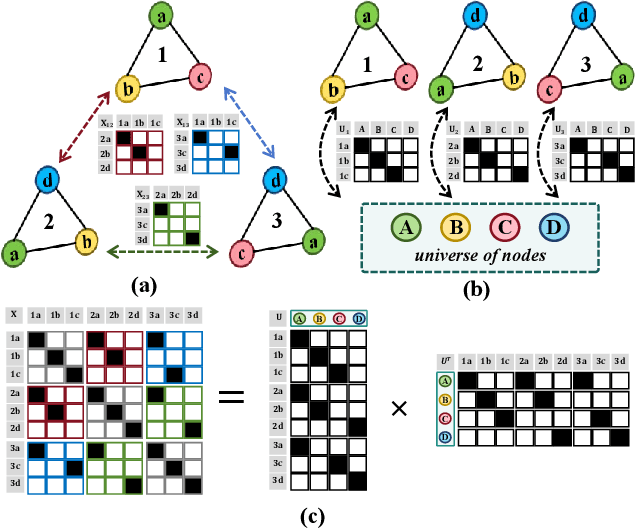

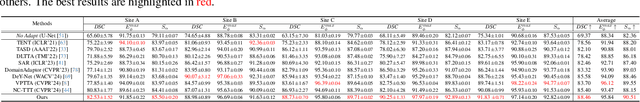

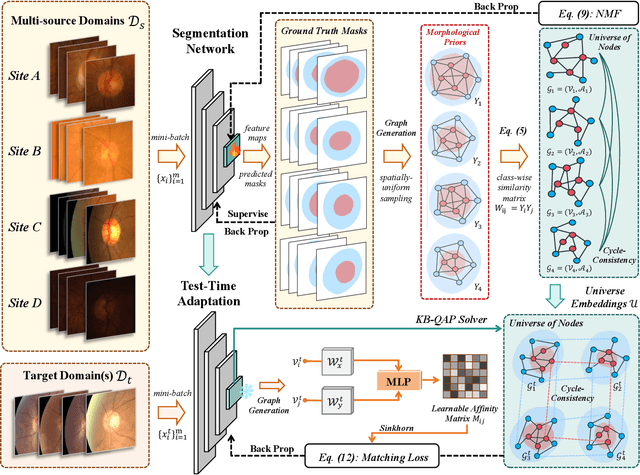

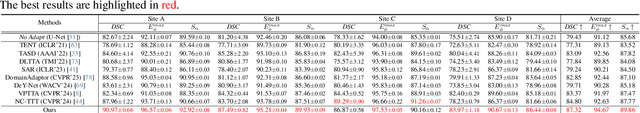

Test-Time Domain Generalization via Universe Learning: A Multi-Graph Matching Approach for Medical Image Segmentation

Mar 17, 2025

Abstract: Although domain generalization (DG) has significantly addressed the performance degradation of pre-trained models caused by domain shifts, it often falls short in real-world deployment. Test-time adaptation (TTA), which adjusts a learned model using unlabeled test data, presents a promising solution. However, most existing TTA methods struggle to deliver strong performance in medical image segmentation, primarily because they overlook the crucial prior knowledge inherent to medical images. To address this challenge, we incorporate morphological information and propose a framework based on multi-graph matching. Specifically, we introduce learnable universe embeddings that integrate morphological priors during multi-source training, along with novel unsupervised test-time paradigms for domain adaptation. This approach guarantees cycle-consistency in multi-matching while enabling the model to more effectively capture the invariant priors of unseen data, significantly mitigating the effects of domain shifts. Extensive experiments demonstrate that our method outperforms other state-of-the-art approaches on two medical image segmentation benchmarks for both multi-source and single-source domain generalization tasks. The source code is available at https://github.com/Yore0/TTDG-MGM.
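
The claim that matching through a shared universe guarantees cycle-consistency can be illustrated with a small sketch. This is the textbook hard-assignment construction from the multi-graph matching literature, not the paper's learnable embeddings or its released code. It assumes every graph has the same number of nodes as the universe, so each assignment is a full permutation matrix; all names (`random_universe_assignment`, `pairwise_matching`) are hypothetical.

```python
import numpy as np


def random_universe_assignment(num_nodes, rng):
    """Permutation matrix mapping a graph's nodes to universe points.

    Assumption: the graph has exactly as many nodes as the universe,
    so every node is matched to a distinct universe point.
    """
    perm = rng.permutation(num_nodes)
    return np.eye(num_nodes, dtype=int)[perm]


def pairwise_matching(u_i, u_j):
    """Match graph i to graph j by routing through the universe."""
    return u_i @ u_j.T


rng = np.random.default_rng(0)
num_nodes = 5
u_a, u_b, u_c = (random_universe_assignment(num_nodes, rng) for _ in range(3))

x_ab = pairwise_matching(u_a, u_b)
x_bc = pairwise_matching(u_b, u_c)
x_ac = pairwise_matching(u_a, u_c)

# Composing A->B with B->C gives the direct A->C matching, because
# u_b.T @ u_b is the identity for a permutation matrix.
cycle_consistent = np.array_equal(x_ab @ x_bc, x_ac)
```

Since every pairwise matching is derived from the per-graph assignments rather than estimated independently, consistency holds by construction and does not need to be enforced as a separate constraint.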

Compose Your Aesthetics: Empowering Text-to-Image Models with the Principles of Art

Mar 15, 2025

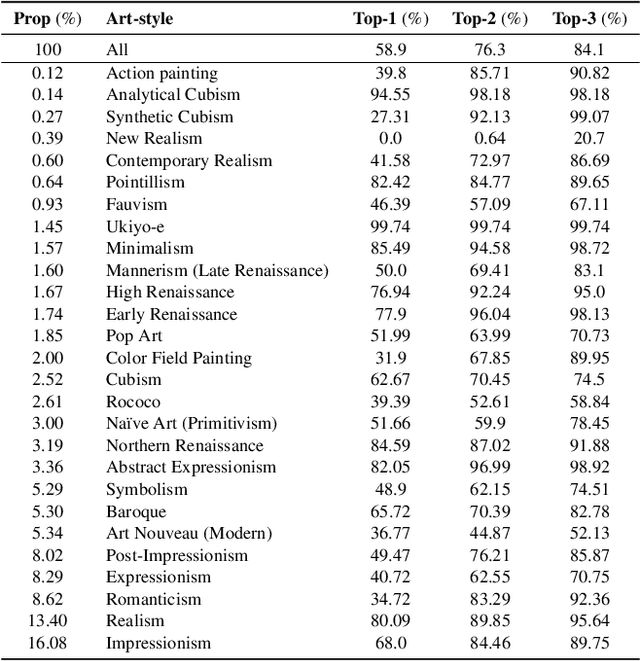

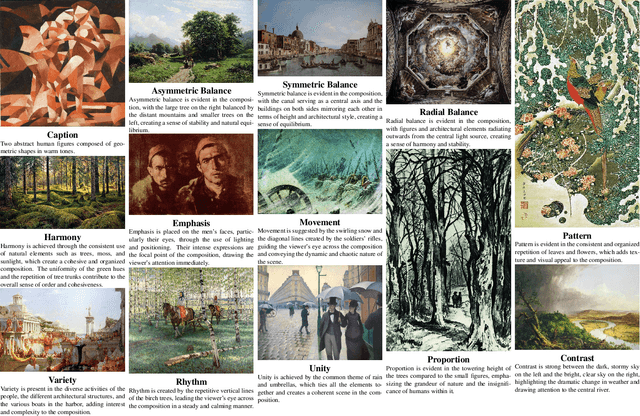

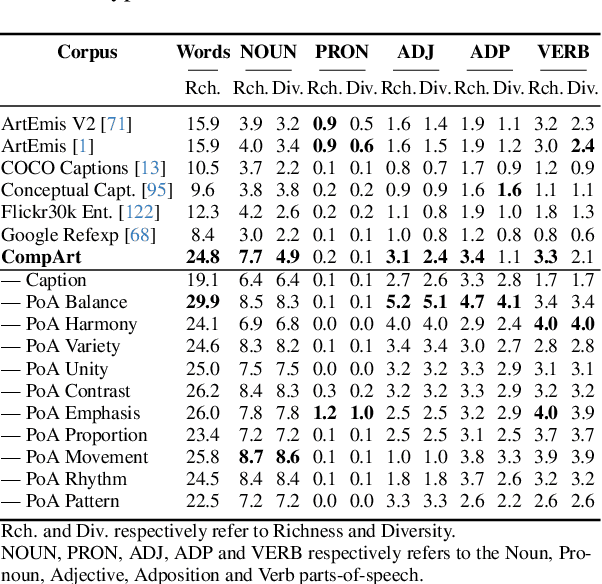

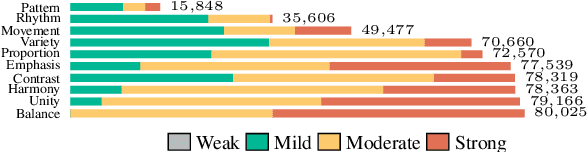

Abstract: Text-to-Image (T2I) diffusion models (DMs) have garnered widespread adoption due to their capability to generate high-fidelity outputs and their accessibility to anyone able to put imagination into words. However, DMs are often predisposed to generate unappealing outputs, much like the random images on the internet they were trained on. Existing approaches to address this are founded on the implicit premise that visual aesthetics is universal, which is limiting. Aesthetics in the T2I context should be about personalization, and we propose the novel task of aesthetics alignment, which seeks to align user-specified aesthetics with the T2I generation output. Inspired by how artworks provide an invaluable perspective to approach aesthetics, we codify visual aesthetics using the compositional framework artists employ, known as the Principles of Art (PoA). To facilitate this study, we introduce CompArt, a large-scale compositional art dataset building on top of WikiArt with PoA analysis annotated by a capable Multimodal LLM. Leveraging the expressive power of LLMs and training a lightweight and transferable adapter, we demonstrate that T2I DMs can effectively offer 10 compositional controls through user-specified PoA conditions. Additionally, we design an appropriate evaluation framework to assess the efficacy of our approach.

A Multi-task Adversarial Attack Against Face Authentication

Aug 15, 2024

Abstract: Deep-learning-based identity management systems, such as face authentication systems, are vulnerable to adversarial attacks. However, existing attacks are typically designed for single-task purposes, which means they are tailored to exploit vulnerabilities unique to the individual target rather than being adaptable for multiple users or systems. This limitation makes them unsuitable for certain attack scenarios, such as morphing, universal, transferable, and counter attacks. In this paper, we propose a multi-task adversarial attack algorithm called MTADV that is adaptable for multiple users or systems. By interpreting these scenarios as multi-task attacks, MTADV is applicable to both single- and multi-task attacks, and feasible in the white- and gray-box settings. Furthermore, MTADV is effective against various face datasets, including LFW, CelebA, and CelebA-HQ, and can work with different deep learning models, such as FaceNet, InsightFace, and CurricularFace. Importantly, MTADV retains its feasibility as a single-task attack targeting a single user/system. To the best of our knowledge, MTADV is the first adversarial attack method that can target all of the aforementioned scenarios in one algorithm.

IFViT: Interpretable Fixed-Length Representation for Fingerprint Matching via Vision Transformer

Apr 12, 2024

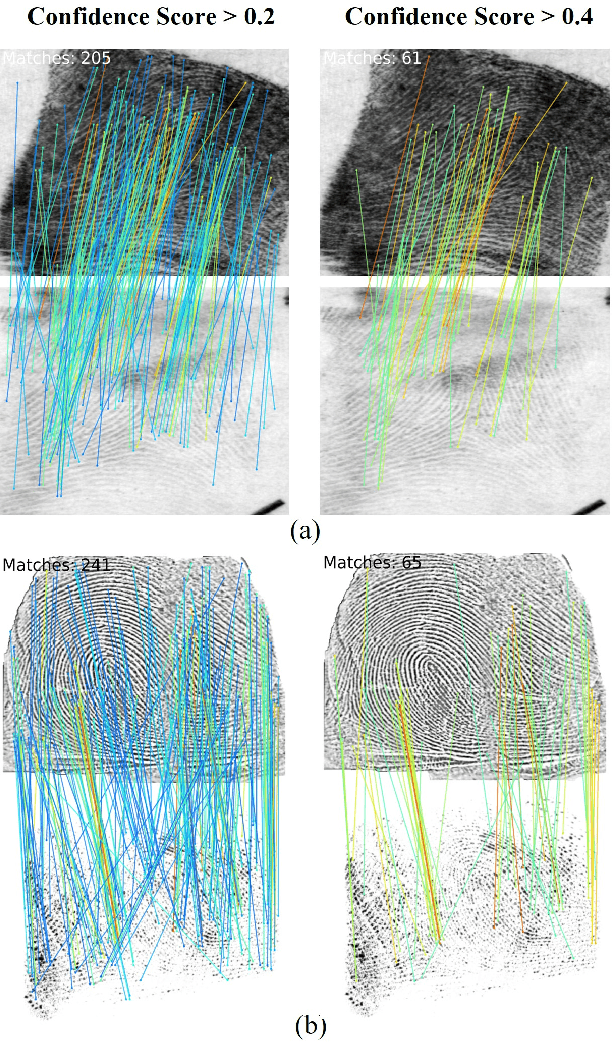

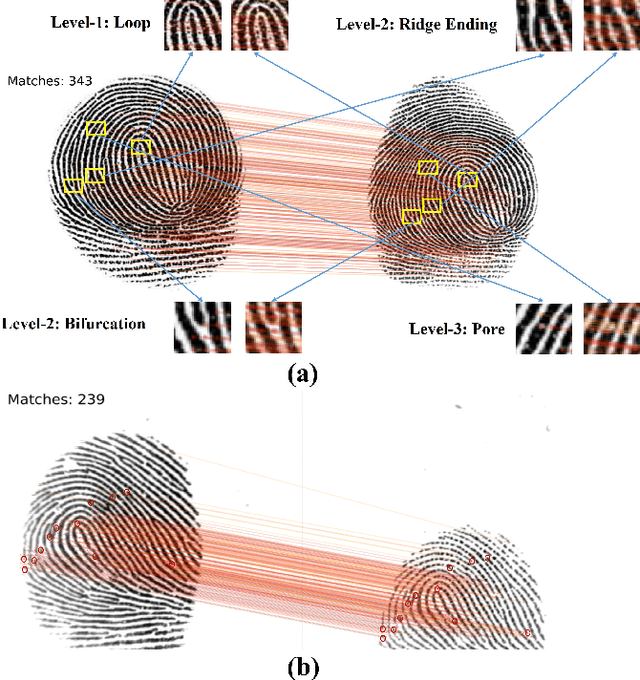

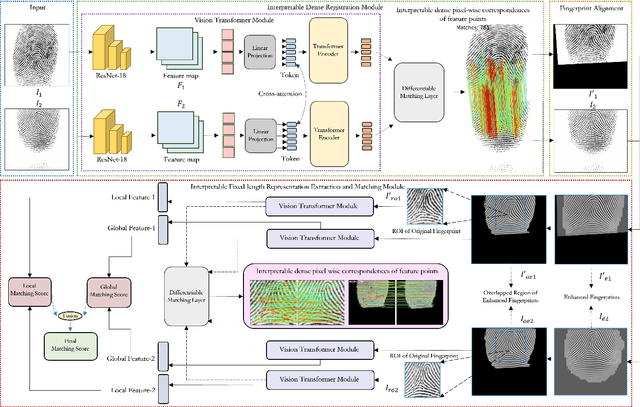

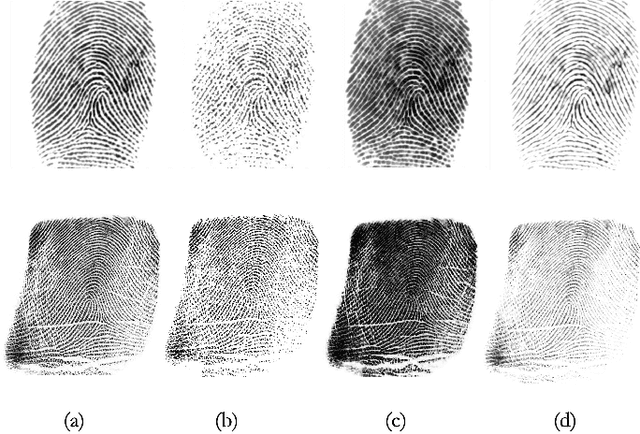

Abstract: Determining dense feature points on fingerprints used in constructing deep fixed-length representations for accurate matching, particularly at the pixel level, is of significant interest. To explore the interpretability of fingerprint matching, we propose a multi-stage interpretable fingerprint matching network, namely Interpretable Fixed-length Representation for Fingerprint Matching via Vision Transformer (IFViT), which consists of two primary modules. The first module, an interpretable dense registration module, establishes a Vision Transformer (ViT)-based Siamese Network to capture long-range dependencies and the global context in fingerprint pairs. It provides interpretable dense pixel-wise correspondences of feature points for fingerprint alignment and enhances the interpretability in the subsequent matching stage. The second module takes into account both local and global representations of the aligned fingerprint pair to achieve an interpretable fixed-length representation extraction and matching. It employs the ViTs trained in the first module with an additional fully connected layer and retrains them to simultaneously produce the discriminative fixed-length representation and interpretable dense pixel-wise correspondences of feature points. Extensive experimental results on diverse publicly available fingerprint databases demonstrate that the proposed framework not only exhibits superior performance on dense registration and matching but also significantly promotes the interpretability in deep fixed-length representation-based fingerprint matching.

On Computational Entanglement and Its Interpretation in Adversarial Machine Learning

Oct 04, 2023

Abstract: Adversarial examples in machine learning have emerged as a focal point of research due to their remarkable ability to deceive models with seemingly inconspicuous input perturbations, potentially resulting in severe consequences. In this study, we embark on a comprehensive exploration of adversarial machine learning models, shedding light on their intrinsic complexity and interpretability. Our investigation reveals intriguing links between machine learning model complexity and Einstein's theory of special relativity, through the concept of entanglement. More specifically, we define entanglement computationally and demonstrate that distant feature samples can exhibit strong correlations, akin to entanglement in the quantum realm. This revelation challenges conventional perspectives in describing the phenomenon of adversarial transferability observed in contemporary machine learning models. By drawing parallels with the relativistic effects of time dilation and length contraction during computation, we gain deeper insights into adversarial machine learning, paving the way for more robust and interpretable models in this rapidly evolving field.

UMS-VINS: United Monocular-Stereo Features for Visual-Inertial Tightly Coupled Odometry

Mar 15, 2023

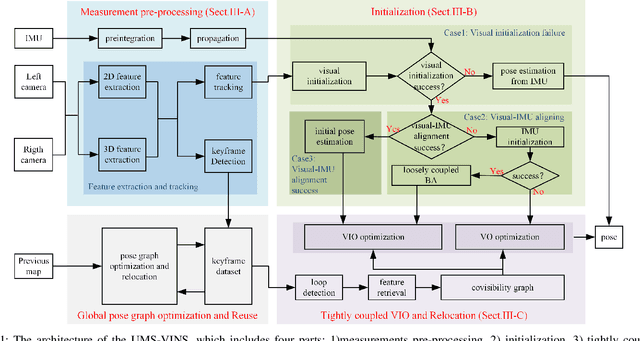

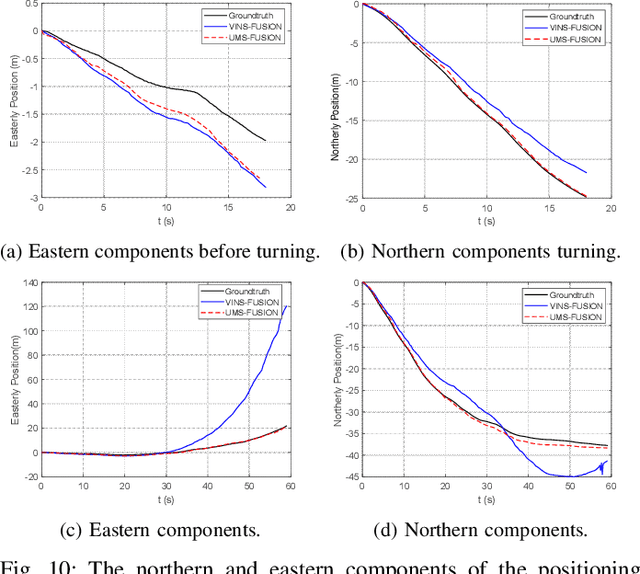

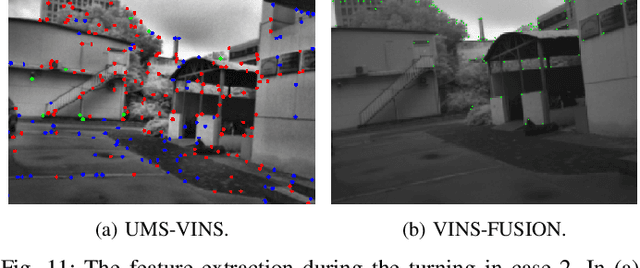

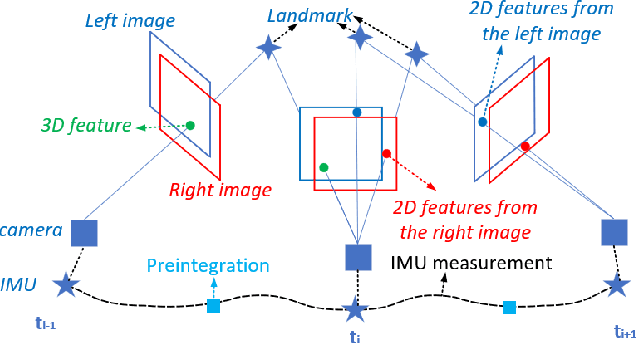

Abstract: This paper introduces united monocular-stereo features into a visual-inertial tightly coupled odometry (UMS-VINS) for robust pose estimation. UMS-VINS requires two cameras and a low-cost inertial measurement unit (IMU). UMS-VINS is an evolution of VINS-FUSION and modifies it in three respects. 1) UMS-VINS extracts and tracks features from the sub-pixel plane to achieve better positions of the features. 2) UMS-VINS introduces additional 2-dimensional features from the left and/or right cameras. 3) If the visual initialization fails, the IMU propagation is directly used for pose estimation, and if the visual-IMU alignment fails, UMS-VINS estimates the pose via the visual odometry. Results on both public datasets and new real-world experiments indicate that the proposed UMS-VINS outperforms VINS-FUSION in localization accuracy, localization robustness, and environmental adaptability.
